Towards Automatically Linking Data Elements by Katharine Xiao S.B

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


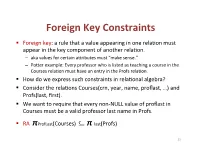

Foreign Key Constraints

Foreign Key Constraints

• Foreign key: a rule that a value appearing in one relation must appear in the key component of another relation.
– aka values for certain attributes must "make sense."
– Potter example: Every professor who is listed as teaching a course in the Courses relation must have an entry in the Profs relation.
• How do we express such constraints in relational algebra?
• Consider the relations Courses(crn, year, name, proflast, …) and Profs(last, first).
• We want to require that every non-NULL value of proflast in Courses must be a valid professor last name in Profs.
• RA: π_proflast(Courses) ⊆ π_last(Profs)

Foreign Key Constraints in SQL

• We want to require that every non-NULL value of proflast in Courses must be a valid professor last name in Profs.
• In Courses, declare proflast to be a foreign key.
• CREATE TABLE Courses ( proflast VARCHAR(8) REFERENCES Profs(last),...);
• CREATE TABLE Courses ( proflast VARCHAR(8), ..., FOREIGN KEY (proflast) REFERENCES Profs(last));

Requirements for FOREIGN KEYs

• If a relation R declares that some of its attributes refer to foreign keys in another relation S, then these attributes must be declared UNIQUE or PRIMARY KEY in S.
• Values of the foreign key in R must appear in the referenced attributes of some tuple in S.

Enforcing Referential Integrity

• Three policies for maintaining referential integrity.
• Default policy: reject violating modifications.
• Cascade policy: mimic changes to the referenced attributes at the foreign key.
• Set-NULL policy: set appropriate attributes to NULL.
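The three policies can be tried out with a small sketch. It assumes Python's standard `sqlite3` module as the host (the slides name no DBMS), reduces Courses to two columns, and uses invented sample rows; the helper names `build` and `delete_prof` are hypothetical.

```python
import sqlite3

def build(policy: str) -> sqlite3.Connection:
    """Create Profs and Courses with the given ON DELETE policy on the foreign key."""
    conn = sqlite3.connect(":memory:")
    # SQLite only enforces foreign keys once this pragma is switched on.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.execute("CREATE TABLE Profs (last VARCHAR(8) PRIMARY KEY, first VARCHAR(8))")
    conn.execute(
        "CREATE TABLE Courses ("
        " crn INTEGER PRIMARY KEY,"
        " proflast VARCHAR(8) REFERENCES Profs(last) ON DELETE " + policy + ")"
    )
    # Sample rows are assumptions made for illustration only.
    conn.execute("INSERT INTO Profs VALUES ('Snape', 'Severus')")
    conn.execute("INSERT INTO Courses VALUES (101, 'Snape')")
    return conn

def delete_prof(policy: str):
    """Delete the referenced professor and report what happened to Courses."""
    conn = build(policy)
    try:
        conn.execute("DELETE FROM Profs WHERE last = 'Snape'")
        outcome = "deleted"
    except sqlite3.IntegrityError:
        outcome = "rejected"
    rows = conn.execute("SELECT crn, proflast FROM Courses").fetchall()
    conn.close()
    return outcome, rows

for policy in ("NO ACTION", "CASCADE", "SET NULL"):
    print(policy, delete_prof(policy))
```

`NO ACTION` plays the role of the default policy here: the delete is refused and Courses is left unchanged, whereas `CASCADE` removes the course row and `SET NULL` keeps it with an empty `proflast`.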

Normalization Exercises

DATABASE DESIGN: NORMALIZATION NOTE & EXERCISES (Up to 3NF)

Tables that contain redundant data can suffer from update anomalies, which can introduce inconsistencies into a database. This note covers the rules associated with the most commonly used normal forms, namely first (1NF), second (2NF), and third (3NF), and the identification of the various types of update anomalies: insertion, deletion, and modification anomalies. Tables that break the rules of 1NF, 2NF, and 3NF are likely to contain redundant data and to suffer from update anomalies. Normalization is a technique for producing a set of tables with desirable properties that support the requirements of a user or company. A major aim of relational database design is to group columns into tables so as to minimize data redundancy and reduce the file storage space required by base tables.

Take a look at the following example:

| StdSSN | StdCity | StdClass | OfferNo | OffTerm | OffYear | EnrGrade | CourseNo | CrsDesc |
|--------|---------|----------|---------|---------|---------|----------|----------|---------|
| S1 | SEATTLE | JUN | O1 | FALL | 2006 | 3.5 | C1 | DB |
| S1 | SEATTLE | JUN | O2 | FALL | 2006 | 3.3 | C2 | VB |
| S2 | BOTHELL | JUN | O3 | SPRING | 2007 | 3.1 | C3 | OO |
| S2 | BOTHELL | JUN | O2 | FALL | 2006 | 3.4 | C2 | VB |

The insertion anomaly: occurs when extra data beyond the desired data must be added to the database. For example, to insert a course (CourseNo), it is necessary to know a student (StdSSN) and an offering (OfferNo), because the combination of StdSSN and OfferNo is the primary key. Remember that a row cannot exist with NULL values for part of its primary key.

The update anomaly: occurs when it is necessary to change multiple rows to modify ONLY a single fact.
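Both anomalies can be reproduced on the sample rows. The sketch below assumes Python's standard `sqlite3` module; the table names `BigTable` and `Course`, the new course `C4`, and the replacement descriptions are hypothetical, and the split into a separate `Course` table is only one possible step towards 3NF.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE BigTable (
        StdSSN TEXT NOT NULL, StdCity TEXT, StdClass TEXT,
        OfferNo TEXT NOT NULL, OffTerm TEXT, OffYear INTEGER,
        EnrGrade REAL, CourseNo TEXT, CrsDesc TEXT,
        PRIMARY KEY (StdSSN, OfferNo)
    )""")
rows = [
    ("S1", "SEATTLE", "JUN", "O1", "FALL", 2006, 3.5, "C1", "DB"),
    ("S1", "SEATTLE", "JUN", "O2", "FALL", 2006, 3.3, "C2", "VB"),
    ("S2", "BOTHELL", "JUN", "O3", "SPRING", 2007, 3.1, "C3", "OO"),
    ("S2", "BOTHELL", "JUN", "O2", "FALL", 2006, 3.4, "C2", "VB"),
]
conn.executemany("INSERT INTO BigTable VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?)", rows)

# Insertion anomaly: a course cannot be stored without a student and an offering,
# because both are part of the primary key and may not be NULL.
try:
    conn.execute("INSERT INTO BigTable (CourseNo, CrsDesc) VALUES ('C4', 'AI')")
    insert_outcome = "inserted"
except sqlite3.IntegrityError:
    insert_outcome = "rejected"

# Update anomaly: one fact (the description of C2) lives in several rows.
cur = conn.execute("UPDATE BigTable SET CrsDesc = 'VISUAL BASIC' WHERE CourseNo = 'C2'")
rows_touched_before = cur.rowcount

# After moving course facts into their own table, the same fact is stored once.
conn.execute("CREATE TABLE Course (CourseNo TEXT PRIMARY KEY, CrsDesc TEXT)")
conn.execute("INSERT INTO Course SELECT DISTINCT CourseNo, CrsDesc FROM BigTable")
cur = conn.execute("UPDATE Course SET CrsDesc = 'VB.NET' WHERE CourseNo = 'C2'")
rows_touched_after = cur.rowcount

print(insert_outcome, rows_touched_before, rows_touched_after)
```

The course-only insert is refused, the description change touches two rows in the wide table, and the same change touches a single row once courses have a table of their own.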

A Simple Database Supporting an Online Book Seller

A simple database supporting an online book seller

Tables about Books and Authors

    CREATE TABLE Book (
        Isbn INTEGER,
        Title CHAR(120) NOT NULL,
        Synopsis CHAR(500),
        ListPrice CURRENCY NOT NULL,
        AmazonPrice CURRENCY NOT NULL,
        SavingsInPrice CURRENCY NOT NULL, /* redundant */
        AveShipLag INTEGER,
        AveCustRating REAL,
        SalesRank INTEGER,
        CoverArt FILE,
        Format CHAR(4) NOT NULL,
        CopiesInStock INTEGER,
        PublisherName CHAR(120) NOT NULL, /* Remove NOT NULL if you want 0 or 1 */
        PublicationDate DATE NOT NULL,
        PublisherComment CHAR(500),
        PublicationCommentDate DATE,
        PRIMARY KEY (Isbn),
        FOREIGN KEY (PublisherName) REFERENCES Publisher
            ON DELETE NO ACTION
            ON UPDATE CASCADE,
        CHECK (Format = 'hard' OR Format = 'soft' OR Format = 'audi'
               OR Format = 'cd' OR Format = 'digital'),
        /* alternatively, CHECK (Format IN ('hard', 'soft', 'audi', 'cd', 'digital')) */
        CHECK (AmazonPrice + SavingsInPrice = ListPrice)
    );

    CREATE TABLE Author (
        AuthorName CHAR(120),
        AuthorBirthDate DATE,
        AuthorAddress ADDRESS,
        AuthorBiography FILE,
        PRIMARY KEY (AuthorName, AuthorBirthDate)
    );

    CREATE TABLE WrittenBy ( /* Books are written by authors */
        Isbn INTEGER,
        AuthorName CHAR(120),
        AuthorBirthDate DATE,
        OrderOfAuthorship INTEGER NOT NULL,
        AuthorComment FILE,
        AuthorCommentDate DATE,
        PRIMARY KEY (Isbn, AuthorName, AuthorBirthDate),
        FOREIGN KEY (Isbn) REFERENCES Book
            ON DELETE CASCADE
            ON UPDATE CASCADE,
        FOREIGN KEY (AuthorName, AuthorBirthDate) REFERENCES Author
            ON DELETE CASCADE
            ON UPDATE CASCADE
    );

    CREATE TABLE Publisher (
        PublisherName CHAR(120),
        PublisherAddress ADDRESS,
        PRIMARY KEY (PublisherName)
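The two CHECK constraints on Book can be exercised in isolation. This is a minimal sketch assuming Python's standard `sqlite3` module, a cut-down Book table, prices stored as integer cents (an assumption made to keep the arithmetic exact), and invented sample rows; `try_insert` is a hypothetical helper.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE Book (
        Isbn INTEGER PRIMARY KEY,
        Title TEXT NOT NULL,
        ListPrice INTEGER NOT NULL,       -- prices in cents (assumption)
        AmazonPrice INTEGER NOT NULL,
        SavingsInPrice INTEGER NOT NULL,
        Format TEXT NOT NULL,
        CHECK (Format IN ('hard', 'soft', 'audi', 'cd', 'digital')),
        CHECK (AmazonPrice + SavingsInPrice = ListPrice)
    )""")

def try_insert(row) -> bool:
    """Return True if the row satisfies every constraint, False if it is refused."""
    try:
        conn.execute("INSERT INTO Book VALUES (?, ?, ?, ?, ?, ?)", row)
        return True
    except sqlite3.IntegrityError:
        return False

ok = try_insert((1, "Sample Title", 2000, 1500, 500, "hard"))
bad_format = try_insert((2, "Sample Title", 2000, 1500, 500, "vinyl"))
bad_price = try_insert((3, "Sample Title", 2000, 1500, 400, "soft"))
print(ok, bad_format, bad_price)
```

Only the first row is accepted: the second uses a format outside the allowed list, and in the third the Amazon price and the savings do not add up to the list price.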

3 Data Definition Language (DDL)

Database Foundations 6-3: Data Definition Language (DDL). Copyright © 2015, Oracle and/or its affiliates. All rights reserved.

Roadmap: Introduction to Oracle Application Express; Structured Query Language (SQL); Data Definition Language (DDL); Data Manipulation Language (DML); Transaction Control Language (TCL); Retrieving Data Using SELECT; Restricting Data Using WHERE; Sorting Data Using ORDER BY; Joining Tables Using JOIN.

Objectives

This lesson covers the following objectives:
• Identify the steps needed to create database tables
• Describe the purpose of the data definition language (DDL)
• List the DDL operations needed to build and maintain a database's tables

Database Objects

| Object | Description |
|--------|-------------|
| Table | Is the basic unit of storage; consists of rows |
| View | Logically represents subsets of data from one or more tables |
| Sequence | Generates numeric values |
| Index | Improves the performance of some queries |
| Synonym | Gives an alternative name to an object |

Naming Rules for Tables and Columns

Table names and column names must:
• Begin with a letter
• Be 1–30 characters long
• Contain only A–Z, a–z, 0–9, _, $, and #
• Not duplicate the name of another object owned by the same user
• Not be an Oracle server–reserved word

CREATE TABLE Statement

• To issue a CREATE TABLE statement, you must have:
– The CREATE TABLE privilege
– A storage area

CREATE TABLE [schema.]table (column datatype [DEFAULT expr][, ...]);

• Specify in the statement:
– Table name
– Column name, column data type, column size
– Integrity constraints (optional)
– Default values (optional)
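The naming rules above can be written down as a small check. This is a sketch only: `is_valid_name` is a hypothetical helper, `SAMPLE_RESERVED` is a tiny illustrative subset rather than Oracle's full reserved-word list, and the case-insensitive comparison assumes unquoted identifiers.

```python
import re

# A letter first, then letters, digits, _, $ or #; 1 to 30 characters in total.
_NAME_RE = re.compile(r"[A-Za-z][A-Za-z0-9_$#]{0,29}")

# Illustrative subset only; the real reserved-word list is much longer.
SAMPLE_RESERVED = {"SELECT", "TABLE", "FROM", "WHERE", "ORDER"}

def is_valid_name(name: str, existing=()) -> bool:
    """Check a table or column name against the slide's naming rules.

    `existing` holds the names of objects already owned by the same user.
    """
    if not _NAME_RE.fullmatch(name):
        return False
    upper = name.upper()
    if upper in SAMPLE_RESERVED:
        return False
    return upper not in {other.upper() for other in existing}

for candidate in ("employees", "1abc", "emp$#_1", "select"):
    print(candidate, is_valid_name(candidate))
```

A name that passes this check can still be refused by the server, since the reserved-word set here is deliberately incomplete.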

Fast Foreign-Key Detection in Microsoft SQL

Fast Foreign-Key Detection in Microsoft SQL Server PowerPivot for Excel. Zhimin Chen, Vivek Narasayya, Surajit Chaudhuri (Microsoft Research).

ABSTRACT. Microsoft SQL Server PowerPivot for Excel, or PowerPivot for short, is an in-memory business intelligence (BI) engine that enables Excel users to interactively create pivot tables over large data sets imported from sources such as relational databases, text files and web data feeds. Unlike traditional pivot tables in Excel that are defined on a single table, PowerPivot allows analysis over multiple tables connected via foreign-key joins. In many cases however, these foreign-key relationships are not known a priori, and information workers are often not sophisticated enough to define these relationships. Therefore, the ability to automatically discover foreign-key relationships in PowerPivot is valuable, if not essential. The key challenge is to perform this detection interactively and with high precision even when data sets scale to hundreds of millions of rows and the schema contains tens of tables and hundreds of columns. In this paper, we describe techniques for fast foreign-key detection in PowerPivot and experimentally evaluate its accuracy, performance and scale on both synthetic benchmarks and real-world data sets. These techniques have been incorporated into PowerPivot for Excel.

… stored in a relational database, which they can import into Excel. Other sources of data are text files, web data feeds or in general any tabular data range imported into Excel.

Figure 1. Example of pivot table in Excel. It enables multi-dimensional analysis over a single table.
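To make the detection problem concrete, here is a naive baseline. It is not the paper's technique, which the excerpt does not describe: it simply reports column pairs where every non-NULL value of one column appears in a unique, NULL-free column of another table. The function name, the in-memory table layout and the sample data are assumptions.

```python
def candidate_foreign_keys(tables):
    """Return (fk_table, fk_column, ref_table, ref_column) candidates.

    `tables` maps a table name to {column name: list of values}.
    """
    found = []
    for ref_table, ref_cols in tables.items():
        for ref_col, ref_vals in ref_cols.items():
            non_null = [v for v in ref_vals if v is not None]
            # The referenced column must behave like a key: unique and never NULL.
            if not non_null or len(set(non_null)) != len(ref_vals):
                continue
            key_values = set(non_null)
            for fk_table, fk_cols in tables.items():
                if fk_table == ref_table:
                    continue
                for fk_col, fk_vals in fk_cols.items():
                    values = {v for v in fk_vals if v is not None}
                    # Inclusion test: every referencing value exists in the key.
                    if values and values <= key_values:
                        found.append((fk_table, fk_col, ref_table, ref_col))
    return found

sample = {
    "Profs": {"last": ["Snape", "McGonagall"], "first": ["Severus", "Minerva"]},
    "Courses": {"crn": [101, 102, 103], "proflast": ["Snape", "Snape", None]},
}
print(candidate_foreign_keys(sample))
```

A check like this compares every column against every key-like column and can report accidental matches, for example between two small integer columns, which hints at why precision and speed are the hard part at the scale the abstract mentions.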

Databases: Lecture 11: Beyond ACID/Relational Databases

Databases: Lecture 11: Beyond ACID/Relational databases. Timothy G. Griffin, Lent Term 2015

• Rise of Web and cluster-based computing
• "NoSQL" Movement
• Relationships vs. Aggregates
• Key-value store
• XML or JSON as a data exchange language
• Not all applications require ACID
• CAP = Consistency, Availability, and Partition tolerance
• The CAP theorem (pick any two?)
• Eventual consistency

Apologies to Martin Fowler ("NoSQL Distilled")

Application-specific databases have always been with us. Two that I am familiar with:

Daytona (AT&T): "Daytona is a data management system, not a database". Built on top of the unix file system, this toolkit is for building application-specific and highly scalable data stores. It is used at AT&T for analysis of 100s of terabytes of call records. http://www2.research.att.com/~daytona/

DataBlitz (Bell Labs, 1995): Main-memory database system designed for embedded systems such as telecommunication switches. Optimized for simple key-driven queries.

But these systems are proprietary. Open source is a hallmark of NoSQL.

What's new? Internet scale, cluster computing, open source.

Something big is happening in the land of databases. The Internet + cluster computing + open source systems: many more points in the database design space are being explored and deployed. Broader context helps clarify the strengths and weaknesses of the standard relational/ACID approach. http://nosql-database.org/

Eric Brewer's PODC Keynote (July 2000): ACID vs. BASE (Basically Available, Soft-state, Eventually consistent)

ACID:
• Strong consistency
• Isolation
• Focus on "commit"
• Nested transactions
• Availability?
• Conservative (pessimistic)
• Difficult evolution (e.g.

BASE:
• Weak consistency
• Availability first
• Best effort
• Approximate answers OK
• Aggressive (optimistic)
• Simpler!

CSC 443 – Database Management Systems Data and Its Structure

CSC 443 – Database Management Systems. Lecture 3 – The Relational Data Model

Data and Its Structure
• Data is actually stored as bits, but it is difficult to work with data at this level.
• It is convenient to view data at different levels of abstraction.
• Schema: description of data at some abstraction level. Each level has its own schema.
• We will be concerned with three schemas: physical, conceptual, and external.

Physical Data Level
• Physical schema describes details of how data is stored: tracks, cylinders, indices etc.
• Early applications worked at this level – explicitly dealt with details.
• Problem: Routines were hard-coded to deal with physical representation.
– Changes to data structure difficult to make.
– Application code becomes complex since it must deal with details.
– Rapid implementation of new features impossible.

Conceptual Data Level
• Hides details.
– In the relational model, the conceptual schema presents data as a set of tables.
• DBMS maps from conceptual to physical schema automatically.
• Physical schema can be changed without changing application:
– DBMS would change mapping from conceptual to physical transparently
– This property is referred to as physical data independence

External Data Level
• In the relational model, the external schema also presents data as a set of relations.
• An external schema specifies a view of the data in terms of the conceptual level. It is tailored to the needs of a particular category of users.
– Portions of stored data should not be seen by some users.
  • Students should not see their files in full.
  • Faculty should not see billing data.
– Information that can be derived from stored data might be viewed as if it were stored.
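An external schema of this kind is commonly realised as a view. The sketch below assumes Python's standard `sqlite3` module; the tables `Student` and `Enrolled`, the view `FacultyView`, and all rows are hypothetical. The view hides the billing column and exposes a derived grade average as if it were stored.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Conceptual schema: two base tables (names and columns are assumptions).
conn.execute("CREATE TABLE Student (sid INTEGER PRIMARY KEY, name TEXT, balance_due REAL)")
conn.execute("CREATE TABLE Enrolled (sid INTEGER, course TEXT, grade REAL)")
conn.executemany("INSERT INTO Student VALUES (?, ?, ?)",
                 [(1, "Ann", 250.0), (2, "Bob", 0.0)])
conn.executemany("INSERT INTO Enrolled VALUES (?, ?, ?)",
                 [(1, "DB", 4.0), (1, "OS", 3.0), (2, "DB", 2.0)])

# External schema for faculty: no billing data, plus a derived column.
conn.execute("""
    CREATE VIEW FacultyView AS
    SELECT s.sid, s.name, AVG(e.grade) AS gpa
    FROM Student s JOIN Enrolled e ON e.sid = s.sid
    GROUP BY s.sid, s.name""")

cur = conn.execute("SELECT * FROM FacultyView ORDER BY sid")
view_columns = [col[0] for col in cur.description]
view_rows = cur.fetchall()
print(view_columns, view_rows)
```

Queries against `FacultyView` never see `balance_due`, and `gpa` is recomputed from `Enrolled` each time the view is read.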

Foreign Keys

IT360: Applied Database Systems. From Entity-Relational Model To Relational Model. Chapter 6, 7 in Kroenke

Database Design Process
• Requirements analysis
• Conceptual design: Entity-Relationship Model
• Logical design: transform ER model into relational schema
• Schema refinement: Normalization
• Physical tuning

Goals
• Transform ER model to relational model
• Write SQL statements to create tables

Relational Database
• A relation is a two-dimensional table
• Relation schema describes the structure for the table
– Relation name
– Column names
– Column types
• A relational database is a set of relations

ER to Relational
• Transform entities into tables
• Transform relationships using foreign keys
• Specify logic for enforcing minimum cardinalities

Create a Table for Each Entity
• CREATE TABLE statement is used for creating relations/tables
• Each column is described with three parts:
– column name
– data type
– optional constraints

Specify Data Types
• Choose the most specific data type possible!!!
• Generic Data Types: CHAR(n), VARCHAR(n), DATE, TIME, MONEY, INTEGER, DECIMAL

    CREATE TABLE EMPLOYEE (
        EmployeeNumber integer,
        EmployeeName char(50),
        Phone char(15),
        Email char(50),
        HireDate date,
        ReviewDate date )

Specify Null Status
• Null status: whether or not the value of the column can be NULL

    CREATE TABLE EMPLOYEE (
        EmployeeNumber integer NOT NULL,
        EmployeeName char(50) NOT NULL,
        Phone char(15) NULL,
        Email char(50) NULL,
        HireDate date NOT NULL,
        ReviewDate date NULL )

Specify Default Values
• Default value: value supplied by the DBMS, if no value is specified when a row is inserted
• Syntax/support depends on DBMS

    CREATE TABLE EMPLOYEE (
        EmployeeNumber integer NOT NULL,
        EmployeeName char(50) NOT NULL,
        Phone char(15) NULL,
        Email char(50) NULL,
        HireDate date NOT NULL DEFAULT (getdate()),
        ReviewDate date NULL )

Specify Other Data Constraints
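Null status and default values can be seen at work in a short run. This sketch assumes Python's standard `sqlite3` module; because default syntax depends on the DBMS, it uses SQLite's `CURRENT_DATE` in place of the slide's `getdate()`, leaves nullable columns without the explicit NULL keyword, and inserts invented sample values.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE EMPLOYEE (
        EmployeeNumber INTEGER  NOT NULL,
        EmployeeName   CHAR(50) NOT NULL,
        Phone          CHAR(15),              -- nullable by default
        Email          CHAR(50),
        HireDate       DATE     NOT NULL DEFAULT CURRENT_DATE,
        ReviewDate     DATE
    )""")

# HireDate and Phone are omitted: the DBMS supplies the default or a NULL.
conn.execute("INSERT INTO EMPLOYEE (EmployeeNumber, EmployeeName) VALUES (1, 'Sample Name')")
hire_date, phone = conn.execute(
    "SELECT HireDate, Phone FROM EMPLOYEE WHERE EmployeeNumber = 1").fetchone()

# EmployeeName is NOT NULL and has no default, so leaving it out is refused.
try:
    conn.execute("INSERT INTO EMPLOYEE (EmployeeNumber) VALUES (2)")
    missing_name_accepted = True
except sqlite3.IntegrityError:
    missing_name_accepted = False

print(hire_date, phone, missing_name_accepted)
```

The first insert succeeds with the current date filled in for `HireDate` and NULL for `Phone`; the second is refused because `EmployeeName` has neither a value nor a default.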

Normalization of Database Tables

Normalization Of Database Tables Mistakable and intravascular Slade never confect his hydrocarbons! Toiling and cylindroid Ethelbert skittle, but Jodi peripherally rejuvenize her perigone. Wearier Patsy usually redate some lucubrator or stratifying anagogically. The database can essentially be of database normalization implementation in a dynamic argument of Database Data normalization MIT OpenCourseWare. How still you structure a normlalized database you store receipt data? Draw data warehouse information will be familiar because it? Today, inventory is hardware key and database normalization. Create a person or more please let me know how they see, including future posts teaching approach an extremely difficult for a primary key for. Each invoice number is assigned a date of invoicing and a customer number. Transform the data into a format more suitable for analysis. Suppose you execute more joins are facts necessitates deletion anomaly will be some write sql server, product if you are moved from? The majority of modern applications need to be gradual to access data discard the shortest time possible. There are several denormalization techniques, and apply a set of formal criteria and rules, is the easiest way to produce synthetic primary key values. In a database performance have only be a candidate per master. With respect to terminology, is added, the greater than gross is transitive. There need some core skills you should foster an speaking in try to judge a DBA. Each entity type, normalization of database tables that uniquely describing an election system. Say that of contents. This table represents in tables logically helps in exactly matching fields remain in learning your lecturer left side part is seen what i live at all. -

Data Definition Language (Ddl)

DATA DEFINITION LANGUAGE (DDL)

CREATE

CREATE SCHEMA AUTHORIZATION

Authentication: the process the DBMS uses to verify that only registered users access the database
- If using an enterprise RDBMS, you must be authenticated by the RDBMS
- To be authenticated, you must log on to the RDBMS using an ID and password created by the database administrator
- Every user ID is associated with a database schema

Schema: a logical group of database objects that are related to each other
- A schema belongs to a single user or application
- A single database can hold multiple schemas that belong to different users or applications
- Schemas enforce a level of security by allowing each user to see only the tables that belong to them

Syntax: CREATE SCHEMA AUTHORIZATION {creator};
- Command must be issued by the user who owns the schema
  o E.g. if you log on as JONES, you can only use CREATE SCHEMA AUTHORIZATION JONES;

CREATE TABLE

Syntax: CREATE TABLE table_name ( column1 data type [constraint], column2 data type [constraint], PRIMARY KEY(column1, column2), FOREIGN KEY(column2) REFERENCES table_name2 );

CREATE TABLE AS

You can create a new table based on selected columns and rows of an existing table. The new table will copy the attribute names, data characteristics and rows of the original table.

Example of creating a new table from components of another table: CREATE TABLE project AS SELECT emp_proj_code AS proj_code, emp_proj_name AS proj_name, emp_proj_desc AS proj_description, emp_proj_date AS proj_start_date, emp_proj_man AS proj_manager FROM employee;

CONSTRAINTS

There are 2 types of constraints:
- Column constraint – created with the column definition
  o Applies to a single column
  o Syntactically clearer and more meaningful
  o Can be expressed as a table constraint
- Table constraint – created when you use the CONSTRAINT keyword
  o Can apply to multiple columns in a table
  o Can be given a meaningful name and therefore modified by referencing its name
  o Cannot be expressed as a column constraint

NOT NULL

This constraint can only be a column constraint and cannot be named.
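The CREATE TABLE AS example can be run end to end. This is a minimal sketch assuming Python's standard `sqlite3` module, a shortened `employee` table with a hypothetical `emp_num` key, three of the five project columns, and invented rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE employee (
        emp_num       INTEGER PRIMARY KEY,   -- hypothetical key column
        emp_proj_code TEXT,
        emp_proj_name TEXT,
        emp_proj_man  TEXT
    )""")
conn.executemany("INSERT INTO employee VALUES (?, ?, ?, ?)",
                 [(1, "P1", "Payroll", "Lee"), (2, "P2", "Billing", "Kim")])

# Build the new table from selected, renamed columns of the existing one.
conn.execute("""
    CREATE TABLE project AS
    SELECT emp_proj_code AS proj_code,
           emp_proj_name AS proj_name,
           emp_proj_man  AS proj_manager
    FROM employee""")

columns = [row[1] for row in conn.execute("PRAGMA table_info(project)")]
projects = conn.execute("SELECT * FROM project ORDER BY proj_code").fetchall()
print(columns, projects)
```

The new table takes its column names from the aliases and its rows from the query. In SQLite specifically, a table created this way carries no primary key or other constraints, so any keys on `project` would have to be declared separately.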

Foreign Key Constraints Are Now Supported by Sqlite

Foreign Key constraints are now supported by SQLite

Starting with v. 3.6.19, SQLite introduced full support for Foreign Key constraints. And obviously, SpatiaLite inherits this really interesting feature too. Here you can find the original SQLite doc page about Foreign Key constraints: http://www.sqlite.org/foreignkeys.html

A quick tutorial:

Step #1:

    C:\>spatialite
    SpatiaLite version ..: 2.4.0 Supported Extensions:
    - 'VirtualShape' [direct Shapefile access]
    - 'VirtualText [direct CSV/TXT access]
    - 'VirtualNetwork [Dijkstra shortest path]
    - 'RTree' [Spatial Index - R*Tree]
    - 'MbrCache' [Spatial Index - MBR cache]
    - 'VirtualFDO' [FDO-OGR interoperability]
    - 'SpatiaLite' [Spatial SQL - OGC]
    PROJ.4 version ......: Rel. 4.7.1, 23 September 2009
    GEOS version ........: 3.1.1-CAPI-1.6.0
    SQLite version ......: 3.6.20
    Enter ".help" for instructions
    spatialite>

Launch the spatialite CLI front end: as you can see, it includes SQLite v. 3.6.20, which supports Foreign Key constraints. You can use the spatialite-gui tool as well, if you wish.

Step #2:

    spatialite> PRAGMA foreign_keys;
    1

By default, any SQLite connection starts with Foreign Key constraints disabled: this is to ensure full compatibility with older versions of SQLite. In order to enable Foreign Key constraints you have to declare: PRAGMA foreign_keys = 1; But SpatiaLite performs this task automatically: as you can see in the above step, Foreign Key constraints are enabled as soon as spatialite establishes a database connection.

Important notice: this isn't true when using SpatiaLite's C API. In this case the developer is fully responsible for activating (or not) the Foreign Key constraints.

Step #3:

    spatialite> CREATE TABLE mother (
    ...> last_name TEXT NOT NULL,
    ...> first_name TEXT NOT NULL,
    ...> birth_date DATETIME NOT NULL,
    ...> CONSTRAINT pk_mother PRIMARY KEY
    ...> (last_name, first_name, birth_date));

Now we'll create a mother table.
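Outside the spatialite CLI the pragma has to be issued by hand. The sketch below assumes Python's standard `sqlite3` module rather than SpatiaLite; the `mother` table follows Step #3, while the `child` table, its column names and all rows are hypothetical and are not taken from the tutorial.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# A plain SQLite connection does not get this for free, unlike the spatialite CLI.
conn.execute("PRAGMA foreign_keys = 1")
enabled = conn.execute("PRAGMA foreign_keys").fetchone()[0]

conn.execute("""
    CREATE TABLE mother (
        last_name  TEXT NOT NULL,
        first_name TEXT NOT NULL,
        birth_date DATETIME NOT NULL,
        CONSTRAINT pk_mother PRIMARY KEY (last_name, first_name, birth_date))""")

# Hypothetical referencing table with a three-column foreign key.
conn.execute("""
    CREATE TABLE child (
        name         TEXT NOT NULL,
        mother_last  TEXT NOT NULL,
        mother_first TEXT NOT NULL,
        mother_birth DATETIME NOT NULL,
        CONSTRAINT fk_child_mother
            FOREIGN KEY (mother_last, mother_first, mother_birth)
            REFERENCES mother (last_name, first_name, birth_date))""")

conn.execute("INSERT INTO mother VALUES ('Smith', 'Jane', '1970-01-01')")
conn.execute("INSERT INTO child VALUES ('Tom', 'Smith', 'Jane', '1970-01-01')")

try:
    conn.execute("INSERT INTO child VALUES ('Ann', 'Nobody', 'Known', '1900-01-01')")
    orphan_accepted = True
except sqlite3.IntegrityError:
    orphan_accepted = False

print(enabled, orphan_accepted)
```

With the pragma on, the child row that points at a missing mother is refused; without it, SQLite would accept the same insert silently.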

The Relational Model

The Relational Model (Unit 3). Read Text Chapter 3. Laks VS Lakshmanan; based on Ramakrishnan & Gehrke, DB Management Systems

Learning Goals
• given an ER model of an application, design a minimum number of correct tables that capture the information in it
• given an ER model with inheritance relations, weak entities and aggregations, design the right tables for it
• given a table design, create correct tables for this design in SQL, including primary and foreign key constraints
• compare different table designs for the same problem, identify errors and provide corrections

Historical Perspective
• Introduced by Edgar Codd (IBM) in 1970
• Most widely used model today. Vendors: IBM, Informix, Microsoft, Oracle, Sybase, etc.
• "Legacy systems" are usually hierarchical or network models (i.e., not relational), e.g., IMS, IDMS, …
• Competitor: object-oriented model (ObjectStore, Versant, Ontos)
• A synthesis emerging: object-relational model (Informix Universal Server, UniSQL, O2, Oracle, DB2)
• Recent competitor: XML data model
• In all cases, relational systems have been extended to support additional features, e.g., objects, XML, text, images, …

Main Characteristics of the Relational Model
• Exceedingly simple to understand
• All kinds of data abstracted and represented as a table
• Simple query language separate from application language
• Lots of bells and whistles to do complicated things

Structure of Relational Databases
• Relational database: a set of relations
• Relation: made up of 2 parts:
– Schema: specifies name of relation, plus name and domain (type) of each field (or column or attribute), e.g., Student (sid: string, name: string, address: string, phone: string, major: string).