Fast Foreign-Key Detection in Microsoft SQL

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

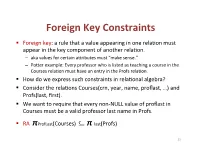

Foreign Key Constraints

Foreign Key Constraints
• Foreign key: a rule that a value appearing in one relation must appear in the key component of another relation.
  – aka values for certain attributes must "make sense."
  – Potter example: Every professor who is listed as teaching a course in the Courses relation must have an entry in the Profs relation.
• How do we express such constraints in relational algebra?
• Consider the relations Courses(crn, year, name, proflast, …) and Profs(last, first).
• We want to require that every non-NULL value of proflast in Courses must be a valid professor last name in Profs.
• RA: π_proflast(Courses) ⊆ π_last(Profs)

Foreign Key Constraints in SQL
• We want to require that every non-NULL value of proflast in Courses must be a valid professor last name in Profs.
• In Courses, declare proflast to be a foreign key.
• `CREATE TABLE Courses (proflast VARCHAR(8) REFERENCES Profs(last), ...);`
• `CREATE TABLE Courses (proflast VARCHAR(8), ..., FOREIGN KEY (proflast) REFERENCES Profs(last));`

Requirements for FOREIGN KEYs
• If a relation R declares that some of its attributes refer to foreign keys in another relation S, then these attributes must be declared UNIQUE or PRIMARY KEY in S.
• Values of the foreign key in R must appear in the referenced attributes of some tuple in S.

Enforcing Referential Integrity
• Three policies for maintaining referential integrity.
• Default policy: reject violating modifications.
• Cascade policy: mimic changes to the referenced attributes at the foreign key.
• Set-NULL policy: set appropriate attributes to NULL.
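The three referential-integrity policies described above can be tried out in a few lines. The following is only a sketch: it uses SQLite through Python's standard `sqlite3` module as a stand-in engine (an assumption, the slides do not name one), the professor names are made up, and the choice of `ON UPDATE CASCADE` with `ON DELETE SET NULL` is just one illustrative combination.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")

conn.execute("CREATE TABLE Profs (last VARCHAR(8) PRIMARY KEY, first VARCHAR(8))")
conn.execute("""
    CREATE TABLE Courses (
        crn      INTEGER PRIMARY KEY,
        proflast VARCHAR(8) REFERENCES Profs(last)
                 ON UPDATE CASCADE    -- cascade policy for key changes
                 ON DELETE SET NULL   -- set-NULL policy for deletions
    )
""")

# Hypothetical sample data.
conn.execute("INSERT INTO Profs VALUES ('Snape', 'Severus')")
conn.execute("INSERT INTO Courses VALUES (101, 'Snape')")
conn.execute("INSERT INTO Courses VALUES (102, NULL)")  # NULL is not checked against Profs

# Default policy: an insert that references a missing professor is rejected.
try:
    conn.execute("INSERT INTO Courses VALUES (103, 'Nobody')")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

# Cascade: renaming the referenced key is mirrored in Courses.
conn.execute("UPDATE Profs SET last = 'Prince' WHERE last = 'Snape'")
after_update = conn.execute(
    "SELECT proflast FROM Courses WHERE crn = 101").fetchone()[0]

# Set-NULL: deleting the professor leaves the course with a NULL proflast.
conn.execute("DELETE FROM Profs WHERE last = 'Prince'")
after_delete = conn.execute(
    "SELECT proflast FROM Courses WHERE crn = 101").fetchone()[0]
```

Other engines accept the same `REFERENCES ... ON UPDATE ... ON DELETE ...` clauses, although which actions are supported and how enforcement is enabled can differ.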

(BI) Using MS Excel Powerpivot

2018 ASCUE Proceedings

Developing an Introductory Class in Business Intelligence (BI) Using MS Excel Powerpivot

Dr. Sam Hijazi, Trevor Curtis
Texas Lutheran University, 1000 West Court Street, Seguin, Texas 78130
[email protected]

Abstract

Asking questions about your data is a constant activity in all business organizations. To facilitate decision making and improve business performance, a business intelligence application must be an integral part of everyday management practices. Microsoft Excel added PowerPivot and PowerPivot officially to facilitate this process with minimum cost, knowing that many business people are already familiar with MS Excel. This paper will design an introductory class to business intelligence (BI) using Excel PowerPivot.

If an educator decides to adopt this paper for teaching an introductory BI class, students should have previous familiarity with Excel's functions and formulas. This paper will focus on four significant phases all students need to complete in a three-credit class. First, students must understand the process of normalizing a small database and how to bring these tables into Excel or develop them directly within Excel PowerPivot. This paper will walk the reader through the steps of creating the normalized tables, linking them, and bringing the tables and their relationships into Excel. Second, an introduction to Data Analysis Expressions (DAX) will be discussed.

Introduction

It is not difficult to notice the increase in the amount of data we have generated in recent memory. To realize that more than 90% of the world's data has been amassed in the past two years alone (Vidas M.) is to realize the need to manage such volume.

Calculated Field in Pivot Table Data Model

Calculated Field In Pivot Table Data Model

In regular Pivot Tables, you can group numeric, date or text fields. Power Query, Data model, DAX, Filters, Slicers, Conditional formats and beautiful charts. E.g., if you are counting customers that have purchased and have years on rows. Why is this last part important? Depending on the source of data, relationships may or may not be created when the model is initially set up. This data is provided by Microsoft for informational purposes only as an aid to illustrate a concept. Date field, and use it to show a count of orders. Displays all of the values in each column or series as a percentage of the total for the column or series. Note: use the Insert Item button to quickly insert items when you type a formula.

CHAPTER 3 - Relational Database Modeling

DATABASE SYSTEMS
Introduction to Databases and Data Warehouses, Edition 2.0
CHAPTER 3 - Relational Database Modeling
Jukić, Vrbsky, Nestorov, Sharma – Database Systems
Copyright (c) 2020 Nenad Jukic and Prospect Press

MAPPING ER DIAGRAMS INTO RELATIONAL SCHEMAS
▪ Once a conceptual ER diagram is constructed, a logical ER diagram is created, and then it is subsequently mapped into a relational schema (collection of relations)
(Figure: Conceptual Model, Logical Model, Schema)

INTRODUCTION
▪ Relational database model - logical database model that represents a database as a collection of related tables
▪ Relational schema - visual depiction of the relational database model – also called a logical model
▪ Most contemporary commercial DBMS software packages are relational DBMS (RDBMS) software packages

INTRODUCTION
(Figure: Terminology)

INTRODUCTION
▪ Relation - table in a relational database
  • A table containing rows and columns
  • The main construct in the relational database model
  • Every relation is a table, not every table is a relation
  • In order for a table to be a relation the following conditions must hold:
    o Within one table, each column must have a unique name.
    o Within one table, each row must be unique.
    o All values in each column must be from the same (predefined) domain.
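The conditions listed above (unique column names, unique rows, one domain per column) can be checked mechanically. The sketch below is illustrative only: the function name `is_relation` is hypothetical, rows are modeled as tuples, and "same domain" is approximated by "same Python type", which is a simplification of a predefined domain.

```python
def is_relation(columns, rows):
    """Return True if the table meets the three relation conditions listed above."""
    # Condition 1: each column must have a unique name.
    if len(set(columns)) != len(columns):
        return False
    # Sanity check: every row must supply one value per column.
    if any(len(row) != len(columns) for row in rows):
        return False
    # Condition 2: each row must be unique.
    if len(set(rows)) != len(rows):
        return False
    # Condition 3 (approximation): all values in a column share one type.
    for i in range(len(columns)):
        if len({type(row[i]) for row in rows}) > 1:
            return False
    return True


# Hypothetical example tables.
employees = [(1, "Ana"), (2, "Bo")]
good = is_relation(["EmpID", "EmpName"], employees)
duplicate_row = is_relation(["EmpID", "EmpName"], employees + [(1, "Ana")])
duplicate_column = is_relation(["EmpID", "EmpID"], employees)
mixed_domain = is_relation(["EmpID", "EmpName"], [(1, "Ana"), ("two", "Bo")])
```

Here only `good` is `True`; each of the other three tables breaks exactly one condition, which is why every relation is a table but not every table is a relation.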

Relational Query Languages

Relational Query Languages
Universidad de Concepción, 2014
(Slides adapted from Loreto Bravo, who adapted from Werner Nutt, who adapted them from Thomas Eiter and Leonid Libkin)
Bases de Datos II

Databases
A database is
• a collection of structured data
• along with a set of access and control mechanisms
We deal with them every day:
• back end of Web sites
• telephone billing
• bank account information
• e-commerce
• airline reservation systems, store inventories, library catalogs, ...

Data Models: Ingredients
• Formalisms to represent information (schemas and their instances), e.g.,
  – relations containing tuples of values
  – trees with labeled nodes, where leaves contain values
  – collections of triples (subject, predicate, object)
• Languages to query represented information, e.g.,
  – relational algebra, first-order logic, Datalog, Datalog¬
  – tree patterns
  – graph pattern expressions
  – SQL, XPath, SPARQL
• Languages to describe changes of data (updates)

Questions About Data Models and Queries
Given a schema S (of a fixed data model)
• is a given structure (FOL interpretation, tree, triple collection) an instance of the schema S?
• does S have an instance at all?
Given queries Q, Q′ (over the same schema)
• what are the answers of Q over a fixed instance I?
• given a potential answer a, is a an answer to Q over I?
• is there an instance I where Q has an answer?
• do Q and Q′ return the same answers over all instances?

Questions About Query Languages
Given query languages L, L′
• how difficult is it for queries in L
  – to evaluate such queries?
  – to check satisfiability?
  – to check equivalence?
• for every query Q in L, is there a query Q′ in L′ that is equivalent to Q?

Research Questions About Databases
• Incompleteness, uncertainty
  – How can we represent incomplete and uncertain information?
  – How can we query it?

The Relational Data Model and Relational Database Constraints

Chapter 3: The Relational Data Model and Relational Database Constraints

This chapter opens Part 2 of the book, which covers relational databases. The relational data model was first introduced by Ted Codd of IBM Research in 1970 in a classic paper (Codd 1970), and it attracted immediate attention due to its simplicity and mathematical foundation. The model uses the concept of a mathematical relation, which looks somewhat like a table of values, as its basic building block, and has its theoretical basis in set theory and first-order predicate logic. In this chapter we discuss the basic characteristics of the model and its constraints.

The first commercial implementations of the relational model became available in the early 1980s, such as the SQL/DS system on the MVS operating system by IBM and the Oracle DBMS. Since then, the model has been implemented in a large number of commercial systems. Current popular relational DBMSs (RDBMSs) include DB2 and Informix Dynamic Server (from IBM), Oracle and Rdb (from Oracle), Sybase DBMS (from Sybase) and SQL Server and Access (from Microsoft). In addition, several open source systems, such as MySQL and PostgreSQL, are available.

Because of the importance of the relational model, all of Part 2 is devoted to this model and some of the languages associated with it. In Chapters 4 and 5, we describe the SQL query language, which is the standard for commercial relational DBMSs. Chapter 6 covers the operations of the relational algebra and introduces the relational calculus; these are two formal languages associated with the relational model.

Normalization Exercises

DATABASE DESIGN: NORMALIZATION NOTE & EXERCISES (Up to 3NF)

Tables that contain redundant data can suffer from update anomalies, which can introduce inconsistencies into a database. This note covers the rules associated with the most commonly used normal forms, namely first (1NF), second (2NF), and third (3NF), and the identification of the various types of update anomalies: insertion, deletion, and modification anomalies. Tables that break the rules of 1NF, 2NF, and 3NF are likely to contain redundant data and to suffer from these anomalies. Normalization is a technique for producing a set of tables with desirable properties that support the requirements of a user or company. A major aim of relational database design is to group columns into tables so as to minimize data redundancy and reduce the file storage space required by base tables. Take a look at the following example:

| StdSSN | StdCity | StdClass | OfferNo | OffTerm | OffYear | EnrGrade | CourseNo | CrsDesc |
|--------|---------|----------|---------|---------|---------|----------|----------|---------|
| S1     | SEATTLE | JUN      | O1      | FALL    | 2006    | 3.5      | C1       | DB      |
| S1     | SEATTLE | JUN      | O2      | FALL    | 2006    | 3.3      | C2       | VB      |
| S2     | BOTHELL | JUN      | O3      | SPRING  | 2007    | 3.1      | C3       | OO      |
| S2     | BOTHELL | JUN      | O2      | FALL    | 2006    | 3.4      | C2       | VB      |

The insertion anomaly: Occurs when extra data beyond the desired data must be added to the database. For example, to insert a course (CourseNo), it is necessary to know a student (StdSSN) and offering (OfferNo) because the combination of StdSSN and OfferNo is the primary key. Remember that a row cannot exist with NULL values for part of its primary key.

The update anomaly: Occurs when it is necessary to change multiple rows to modify ONLY a single fact.
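The update anomaly can be made concrete by counting how many rows must change to record one fact, such as student S1 moving to another city. The sketch below uses the example rows from the note; the helper `rows_to_change` and the split into a separate student table are illustrative assumptions, not the note's own solution.

```python
# Columns: StdSSN, StdCity, StdClass, OfferNo, OffTerm, OffYear, EnrGrade, CourseNo, CrsDesc
big_table = [
    ("S1", "SEATTLE", "JUN", "O1", "FALL", 2006, 3.5, "C1", "DB"),
    ("S1", "SEATTLE", "JUN", "O2", "FALL", 2006, 3.3, "C2", "VB"),
    ("S2", "BOTHELL", "JUN", "O3", "SPRING", 2007, 3.1, "C3", "OO"),
    ("S2", "BOTHELL", "JUN", "O2", "FALL", 2006, 3.4, "C2", "VB"),
]


def rows_to_change(table, key_index, key_value):
    """Count the rows that must be edited to change one fact about key_value."""
    return sum(1 for row in table if row[key_index] == key_value)


# Assumed decomposition: student facts (StdSSN, StdCity, StdClass) stored once per student.
students = sorted({row[:3] for row in big_table})

unnormalized_updates = rows_to_change(big_table, 0, "S1")  # S1's city is stored twice
normalized_updates = rows_to_change(students, 0, "S1")     # S1's city is stored once
```

In the single wide table the city of S1 appears in two rows, so both must be changed together; in the separate student table the same fact lives in one row.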

Sharing Files with Microsoft Office Users

Sharing Files with Microsoft Office Users

Title: Sharing Files with Microsoft Office Users
Version: 1.0
First edition: November 2004

Contents
• Overview
  – Copyright and trademark information
  – Feedback
  – Acknowledgments
  – Modifications and updates
• File formats
• Bulk conversion
• Opening files
  – Opening text format files
  – Opening spreadsheets
  – Opening presentations

SQL vs NoSQL: A Performance Comparison

SQL vs NoSQL: A Performance Comparison

Ruihan Wang, University of Rochester, [email protected]
Zongyan Yang, University of Rochester, [email protected]

Abstract

We always hear statements like 'SQL is outdated', 'This is the world of NoSQL', 'SQL is still used a lot by most companies.' Which one is accurate? Has NoSQL completely replaced SQL? Or is NoSQL just hype? SQL (Structured Query Language) is a standard query language for relational database management systems. The most popular RDBMSs (Relational Database Management Systems), like Oracle, MySQL, and SQL Server, use SQL as their standard database query language.[3] NoSQL means Not Only SQL, which is a collection of non-relational data storage systems. The important characteristic of NoSQL is that it relaxes one or more of the ACID properties for better performance in desired fields. Some of the NoSQL databases most companies use are Cassandra, CouchDB, Hadoop HBase, and MongoDB. In this paper, we'll outline the general differences between SQL and NoSQL, discuss if Relational Database

2. ACID Properties and CAP Theorem

2.1. ACID Properties

We need to refer to the ACID properties[12]:

Atomicity
A transaction is an atomic unit of processing; it should either be performed in its entirety or not performed at all.

Consistency preservation
A transaction should be consistency preserving, meaning that if it is completely executed from beginning to end without interference from other transactions, it should take the database from one consistent state to another.

Isolation
A transaction should appear as though it is being executed in isolation from other transactions, even though many transactions are executing concurrently.
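Atomicity as defined above can be observed directly in a small experiment. The sketch below assumes SQLite via Python's standard `sqlite3` module (the paper's own test systems are not shown in this excerpt), and the `accounts` table, the `transfer` function and the balances are hypothetical. The transfer credits the receiver first so that a failing debit has something to undo.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    " name TEXT PRIMARY KEY,"
    " balance INTEGER CHECK (balance >= 0))"  # overdrafts violate the constraint
)
conn.execute("INSERT INTO accounts VALUES ('alice', 100), ('bob', 50)")
conn.commit()


def transfer(conn, src, dst, amount):
    """Move amount from src to dst as one transaction; return True on success."""
    try:
        # 'with conn' commits if the block succeeds and rolls back if it raises.
        with conn:
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?",
                (amount, dst))
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?",
                (amount, src))
        return True
    except sqlite3.IntegrityError:
        return False


def balances(conn):
    return dict(conn.execute("SELECT name, balance FROM accounts"))


failed = transfer(conn, "alice", "bob", 500)   # debit would make alice negative
after_failed = balances(conn)                  # the earlier credit to bob was undone
succeeded = transfer(conn, "alice", "bob", 30)
after_success = balances(conn)
```

After the failed transfer neither balance has moved, even though the credit statement had already run: the transaction is performed in its entirety or not at all.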

A Simple Database Supporting an Online Book Seller Tables About Books and Authors CREATE TABLE Book ( Isbn INTEGER, Title

A simple database supporting an online book seller

Tables about Books and Authors

    CREATE TABLE Book (
      Isbn INTEGER,
      Title CHAR(120) NOT NULL,
      Synopsis CHAR(500),
      ListPrice CURRENCY NOT NULL,
      AmazonPrice CURRENCY NOT NULL,
      SavingsInPrice CURRENCY NOT NULL, /* redundant */
      AveShipLag INTEGER,
      AveCustRating REAL,
      SalesRank INTEGER,
      CoverArt FILE,
      Format CHAR(4) NOT NULL,
      CopiesInStock INTEGER,
      PublisherName CHAR(120) NOT NULL, /* Remove NOT NULL if you want 0 or 1 */
      PublicationDate DATE NOT NULL,
      PublisherComment CHAR(500),
      PublicationCommentDate DATE,
      PRIMARY KEY (Isbn),
      FOREIGN KEY (PublisherName) REFERENCES Publisher
        ON DELETE NO ACTION
        ON UPDATE CASCADE,
      CHECK (Format = 'hard' OR Format = 'soft' OR Format = 'audi'
             OR Format = 'cd' OR Format = 'digital'),
      /* alternatively, CHECK (Format IN ('hard', 'soft', 'audi', 'cd', 'digital')) */
      CHECK (AmazonPrice + SavingsInPrice = ListPrice)
    )

    CREATE TABLE Author (
      AuthorName CHAR(120),
      AuthorBirthDate DATE,
      AuthorAddress ADDRESS,
      AuthorBiography FILE,
      PRIMARY KEY (AuthorName, AuthorBirthDate)
    )

    CREATE TABLE WrittenBy ( /* Books are written by authors */
      Isbn INTEGER,
      AuthorName CHAR(120),
      AuthorBirthDate DATE,
      OrderOfAuthorship INTEGER NOT NULL,
      AuthorComment FILE,
      AuthorCommentDate DATE,
      PRIMARY KEY (Isbn, AuthorName, AuthorBirthDate),
      FOREIGN KEY (Isbn) REFERENCES Book
        ON DELETE CASCADE
        ON UPDATE CASCADE,
      FOREIGN KEY (AuthorName, AuthorBirthDate) REFERENCES Author
        ON DELETE CASCADE
        ON UPDATE CASCADE
    )

    CREATE TABLE Publisher (
      PublisherName CHAR(120),
      PublisherAddress ADDRESS,
      PRIMARY KEY (PublisherName)

Relational Algebra and SQL Relational Query Languages

Relational Algebra and SQL
Chapter 5

Relational Query Languages
• Languages for describing queries on a relational database
• Structured Query Language (SQL)
  – Predominant application-level query language
  – Declarative
• Relational Algebra
  – Intermediate language used within DBMS
  – Procedural

What is an Algebra?
• A language based on operators and a domain of values
• Operators map values taken from the domain into other domain values
• Hence, an expression involving operators and arguments produces a value in the domain
• When the domain is a set of all relations (and the operators are as described later), we get the relational algebra
• We refer to the expression as a query and the value produced as the query result

Relational Algebra
• Domain: set of relations
• Basic operators: select, project, union, set difference, Cartesian product
• Derived operators: set intersection, division, join
• Procedural: Relational expression specifies query by describing an algorithm (the sequence in which operators are applied) for determining the result of an expression

The Role of Relational Algebra in a DBMS
(Figure)

Select Operator
• Produce table containing subset of rows of argument table satisfying condition: σ_condition(relation)
• Example: σ_Hobby='stamps'(Person)

Person:

| Id   | Name | Address   | Hobby  |
|------|------|-----------|--------|
| 1123 | John | 123 Main  | stamps |
| 1123 | John | 123 Main  | coins  |
| 5556 | Mary | 7 Lake Dr | hiking |
| 9876 | Bart | 5 Pine St | stamps |

Result:

| Id   | Name | Address   | Hobby  |
|------|------|-----------|--------|
| 1123 | John | 123 Main  | stamps |
| 9876 | Bart | 5 Pine St | stamps |

Selection Condition
• Operators: <, ≤, ≥, >, =, ≠
• Simple selection
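The select operator from the slides above can be imitated in a few lines. This is a minimal sketch, not how a DBMS implements it: the relation is modeled as a list of dictionaries, the condition as a Python predicate, and the function name `select` is chosen here for illustration.

```python
def select(relation, condition):
    """Return the rows of relation that satisfy condition (the sigma operator)."""
    return [row for row in relation if condition(row)]


# The Person relation from the slide example.
person = [
    {"Id": 1123, "Name": "John", "Address": "123 Main", "Hobby": "stamps"},
    {"Id": 1123, "Name": "John", "Address": "123 Main", "Hobby": "coins"},
    {"Id": 5556, "Name": "Mary", "Address": "7 Lake Dr", "Hobby": "hiking"},
    {"Id": 9876, "Name": "Bart", "Address": "5 Pine St", "Hobby": "stamps"},
]

# Equivalent of selecting the rows of Person where Hobby = 'stamps'.
stamp_collectors = select(person, lambda row: row["Hobby"] == "stamps")
```

The result keeps every column and only filters rows, so `stamp_collectors` holds the rows for John and Bart. The comparison operators listed under the selection condition map onto ordinary Python comparisons inside the predicate.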

PlantUML Language Reference Guide

Drawing UML with PlantUML
Language Reference Guide (Version 5737)
PlantUML : Language Reference Guide, December 11, 2010 (Version 5737)

PlantUML is an Open Source project that lets you quickly write:
• Sequence diagram,
• Usecase diagram,
• Class diagram,
• Activity diagram,
• Component diagram,
• State diagram,
• Object diagram.

Diagrams are defined using a simple and intuitive language.

1 Sequence Diagram

1.1 Basic examples

Every UML description must start with @startuml and must finish with @enduml. The sequence "->" is used to draw a message between two participants. Participants do not have to be explicitly declared. To have a dotted arrow, use "-->". It is also possible to use "<-" and "<--". That does not change the drawing, but may improve readability.

Example:

    @startuml
    Alice -> Bob: Authentication Request
    Bob --> Alice: Authentication Response
    Alice -> Bob: Another authentication Request
    Alice <-- Bob: another authentication Response
    @enduml

To use an asynchronous message, you can use "->>" or "<<-".

    @startuml
    Alice -> Bob: synchronous call
    Alice ->> Bob: asynchronous call
    @enduml

1.2 Declaring participant

It is possible to change participant order using the participant keyword. It is also possible to use the actor keyword to use a stickman instead of a box for the participant. You can rename a participant using the as keyword. You can also change the background color of an actor or participant, using HTML code or a color name. Everything that starts with a single quote ' is a comment.

    @startuml
    actor Bob #red
    ' The only difference between actor and participant is the drawing
    participant Alice
    participant "I have a really\nlong name" as L #99FF99
    Alice->Bob: Authentication Request
    Bob->Alice: Authentication Response
    Bob->L: Log transaction
    @enduml

1.3 Use non-letters in participants

You can use quotes to define participants.