Normalization of Database Tables


Recommended publications


Foreign(Key(Constraints(

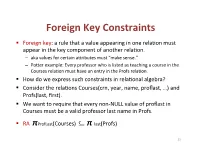

Foreign(Key(Constraints( ! Foreign(key:(a(rule(that(a(value(appearing(in(one(rela3on(must( appear(in(the(key(component(of(another(rela3on.( – aka(values(for(certain(a9ributes(must("make(sense."( – Po9er(example:(Every(professor(who(is(listed(as(teaching(a(course(in(the( Courses(rela3on(must(have(an(entry(in(the(Profs(rela3on.( ! How(do(we(express(such(constraints(in(rela3onal(algebra?( ! Consider(the(rela3ons(Courses(crn,(year,(name,(proflast,(…)(and( Profs(last,(first).( ! We(want(to(require(that(every(nonLNULL(value(of(proflast(in( Courses(must(be(a(valid(professor(last(name(in(Profs.( ! RA((πProfLast(Courses)((((((((⊆ π"last(Profs)( 23( Foreign(Key(Constraints(in(SQL( ! We(want(to(require(that(every(nonLNULL(value(of(proflast(in( Courses(must(be(a(valid(professor(last(name(in(Profs.( ! In(Courses,(declare(proflast(to(be(a(foreign(key.( ! CREATE&TABLE&Courses&(& &&&proflast&VARCHAR(8)&REFERENCES&Profs(last),...);& ! CREATE&TABLE&Courses&(& &&&proflast&VARCHAR(8),&...,&& &&&FOREIGN&KEY&proflast&REFERENCES&Profs(last));& 24( Requirements(for(FOREIGN(KEYs( ! If(a(rela3on(R(declares(that(some(of(its(a9ributes(refer( to(foreign(keys(in(another(rela3on(S,(then(these( a9ributes(must(be(declared(UNIQUE(or(PRIMARY(KEY(in( S.( ! Values(of(the(foreign(key(in(R(must(appear(in(the( referenced(a9ributes(of(some(tuple(in(S.( 25( Enforcing(Referen>al(Integrity( ! Three(policies(for(maintaining(referen3al(integrity.( ! Default(policy:(reject(viola3ng(modifica3ons.( ! Cascade(policy:(mimic(changes(to(the(referenced( a9ributes(at(the(foreign(key.( ! SetLNULL(policy:(set(appropriate(a9ributes(to(NULL.( -
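The three enforcement policies can be tried out with Python's built-in `sqlite3` module. This is a sketch under assumptions: SQLite stands in for a generic SQL DBMS (it enforces foreign keys only after `PRAGMA foreign_keys = ON`), `Courses` is trimmed to three columns, and the `Advisees` table and all sample rows are hypothetical. The final query is the SQL counterpart of the relational-algebra containment, restricted to non-NULL values.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite checks FKs only when this is on
conn.executescript("""
CREATE TABLE Profs (
    last  VARCHAR(8) PRIMARY KEY,   -- referenced column must be PRIMARY KEY or UNIQUE
    first VARCHAR(20)
);
CREATE TABLE Courses (
    crn      INTEGER PRIMARY KEY,
    name     VARCHAR(40),
    proflast VARCHAR(8) REFERENCES Profs(last)
        ON UPDATE CASCADE           -- cascade policy
        ON DELETE SET NULL          -- set-NULL policy
);
CREATE TABLE Advisees (             -- hypothetical table using the default policy
    student  VARCHAR(20),
    proflast VARCHAR(8) REFERENCES Profs(last)
);
""")
conn.executemany("INSERT INTO Profs VALUES (?, NULL)",
                 [("Potter",), ("Jones",), ("Lee",)])
conn.executemany("INSERT INTO Courses VALUES (?, ?, ?)",
                 [(101, "Databases", "Potter"), (102, "Compilers", "Jones")])
conn.execute("INSERT INTO Advisees VALUES ('Ann', 'Lee')")


def rejected(sql: str) -> bool:
    """Return True if SQLite refuses the statement with an integrity error."""
    try:
        conn.execute(sql)
        return False
    except sqlite3.IntegrityError:
        return True


# A proflast with no matching Profs.last is refused.
bad_insert = rejected("INSERT INTO Courses VALUES (103, 'Networks', 'Nobody')")
# Default policy: deleting a professor still referenced by Advisees is refused.
bad_delete = rejected("DELETE FROM Profs WHERE last = 'Lee'")
# Cascade policy: renaming Potter rewrites the matching Courses.proflast.
conn.execute("UPDATE Profs SET last = 'Potts' WHERE last = 'Potter'")
# Set-NULL policy: deleting Jones sets Courses.proflast to NULL.
conn.execute("DELETE FROM Profs WHERE last = 'Jones'")

rows = conn.execute("SELECT crn, proflast FROM Courses ORDER BY crn").fetchall()
print(bad_insert, bad_delete, rows)  # True True [(101, 'Potts'), (102, None)]

# SQL form of pi_proflast(Courses) ⊆ pi_last(Profs): an empty result means it holds.
orphans = conn.execute("""
    SELECT proflast FROM Courses WHERE proflast IS NOT NULL
    EXCEPT
    SELECT last FROM Profs
""").fetchall()
print(orphans)  # []
```

Note that a referencing insert with no match is refused whatever policy is declared; the policies only decide what happens when the referenced row in Profs is updated or deleted.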

PowerDesigner 16.6 Data Modeling

SAP® PowerDesigner® Document Version: 16.6 – 2016-02-22

Data Modeling

Content

- 1 Building Data Models
  - 1.1 Getting Started with Data Modeling
    - Conceptual Data Models
    - Logical Data Models
    - Physical Data Models
    - Creating a Data Model
    - Customizing your Modeling Environment
  - 1.2 Conceptual and Logical Diagrams
    - Supported CDM/LDM Notations
    - Conceptual Diagrams
    - Logical Diagrams
    - Data Items (CDM)
    - Entities (CDM/LDM)
    - Attributes (CDM/LDM)
    - Identifiers (CDM/LDM)
    - Relationships (CDM/LDM)
    - Associations and Association Links (CDM)
    - Inheritances (CDM/LDM)
  - 1.3 Physical Diagrams

Denormalization Strategies for Data Retrieval from Data Warehouses

Decision Support Systems 42 (2006) 267–282, www.elsevier.com/locate/dsw

Denormalization strategies for data retrieval from data warehouses

Seung Kyoon Shin (a, *), G. Lawrence Sanders (b, 1)
(a) College of Business Administration, University of Rhode Island, 7 Lippitt Road, Kingston, RI 02881-0802, United States
(b) Department of Management Science and Systems, School of Management, State University of New York at Buffalo, Buffalo, NY 14260-4000, United States

Available online 20 January 2005

Abstract

In this study, the effects of denormalization on relational database system performance are discussed in the context of using denormalization strategies as a database design methodology for data warehouses. Four prevalent denormalization strategies have been identified and examined under various scenarios to illustrate the conditions where they are most effective. The relational algebra, query trees, and join cost function are used to examine the effect on the performance of relational systems. The guidelines and analysis provided are sufficiently general to be applicable to a variety of databases, in particular to data warehouse implementations for decision support systems.

© 2004 Elsevier B.V. All rights reserved.

Keywords: Database design; Denormalization; Decision support systems; Data warehouse; Data mining

1. Introduction

With the increased availability of data collected from the Internet and other sources and the implementation of enterprise-wide data warehouses, the amount of data that companies possess is growing at a phenomenal rate. […] houses as issues related to database design for high performance are receiving more attention. Database design is still an art that relies heavily on human intuition and experience. Consequently, its practice is becoming more difficult as the applications that databases support become more sophisticated [32]. …

A Comprehensive Analysis of Sybase PowerDesigner 16.0

White paper: A Comprehensive Analysis of Sybase® PowerDesigner® 16.0 InformationArchitect vs. ER/Studio XE2, Version 2.0, www.sybase.com

Table of Contents

- Introduction
- Product Overviews
- ER/Studio XE2
- Sybase PowerDesigner 16.0
- Data Modeling Activities
- Overview
- Types of Data Model
- Design Layers
- Managing the SAM-LDM Relationship
- Forward and Reverse Engineering
- Round-trip Engineering
- Integrating Data Models with Requirements and Processes
- Generating Object-oriented Models
- Dependency Analysis
- Model Comparisons and Merges
- Update Flows
- Required Features for a Data Modeling Tool
- Core Modeling
- Collaboration
- Interfaces & Integration
- Usability
- Managing Models as a Project
- Dependency Matrices
- Conclusions
- Acknowledgements
- Bibliography
- About the Author

Introduction

Data modeling is more than just database design, because data doesn't just exist in databases. Data does not exist in isolation; it is created, managed and consumed by business processes, and those business processes are implemented using a variety of applications and technologies. To truly understand and manage our data, and the impact of changes to that data, we need to manage more than just models of data in databases. We need support for different types of data models, and for managing the relationships between data and the rest of the organization. When you need to manage a data center across the enterprise, integrating with a wider set of business and technology activities is critical to success. For this reason, this review will use the InformationArchitect version of Sybase PowerDesigner rather than the DataArchitect™ version.

Database Design Solutions

Wrox Programmer to Programmer™
Beginning Database Design Solutions
Rod Stephens

Databases play a critical role in the business operations of most organizations; they're the central repository for critical information on products, customers, suppliers, sales, and a host of other essential information. It's no wonder that the majority of all business computing involves database applications. With so much at stake, you'd expect most IT professionals to have a firm understanding of good database design. But in fact most learn through a painful process of trial and error, with predictably poor results.

This book provides readers with proven methods and tools for designing efficient, reliable, and secure databases. Author Rod Stephens explains how a database should be organized to ensure data integrity without sacrificing performance. He shares procedures for designing robust, flexible, and secure databases that provide a solid foundation for all of your database applications. The methods and techniques in this book can be applied to any database environment, including Oracle®, Microsoft Access®, SQL Server®, and MySQL®. You'll learn the basics of good database design and ultimately discover how to design a real-world database.

What you will learn from this book
● How to identify database requirements that meet users' needs
● Ways to build data models using a variety of modeling techniques, including entity-relational models, user-interface models, and …

Normalization Exercises

DATABASE DESIGN: NORMALIZATION NOTE & EXERCISES (Up to 3NF)

Tables that contain redundant data can suffer from update anomalies, which can introduce inconsistencies into a database. This note covers the rules associated with the most commonly used normal forms, namely first (1NF), second (2NF), and third (3NF). Tables that break the rules of 1NF, 2NF, or 3NF are likely to contain redundant data and to suffer from the three types of update anomalies: insertion, deletion, and modification anomalies.

Normalization is a technique for producing a set of tables with desirable properties that support the requirements of a user or company. A major aim of relational database design is to group columns into tables so as to minimize data redundancy and reduce the file storage space required by base tables.

Take a look at the following example:

| StdSSN | StdCity | StdClass | OfferNo | OffTerm | OffYear | EnrGrade | CourseNo | CrsDesc |
|--------|---------|----------|---------|---------|---------|----------|----------|---------|
| S1 | SEATTLE | JUN | O1 | FALL | 2006 | 3.5 | C1 | DB |
| S1 | SEATTLE | JUN | O2 | FALL | 2006 | 3.3 | C2 | VB |
| S2 | BOTHELL | JUN | O3 | SPRING | 2007 | 3.1 | C3 | OO |
| S2 | BOTHELL | JUN | O2 | FALL | 2006 | 3.4 | C2 | VB |

The insertion anomaly: occurs when extra data beyond the desired data must be added to the database. For example, to insert a course (CourseNo), it is necessary to know a student (StdSSN) and an offering (OfferNo), because the combination of StdSSN and OfferNo is the primary key. Remember that a row cannot have NULL values in any part of its primary key.

The update anomaly: occurs when it is necessary to change multiple rows to modify only a single fact. For example, changing the description of course C2 requires updating both rows for offering O2.
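The sketch below puts the example table into Python and decomposes it by projection. The functional dependencies behind the split (StdSSN → StdCity, StdClass; OfferNo → OffTerm, OffYear, CourseNo; CourseNo → CrsDesc; StdSSN, OfferNo → EnrGrade) are an assumption read off the sample rows and the stated primary key; four rows cannot prove a dependency. It shows the update-anomaly fact "C2 is VB" shrinking from two stored copies to one, and checks that joining the projections gives back exactly the original rows.

```python
# Unnormalized table from the exercise above, one tuple per row.
COLUMNS = ("StdSSN", "StdCity", "StdClass", "OfferNo", "OffTerm",
           "OffYear", "EnrGrade", "CourseNo", "CrsDesc")
ROWS = [
    ("S1", "SEATTLE", "JUN", "O1", "FALL", 2006, 3.5, "C1", "DB"),
    ("S1", "SEATTLE", "JUN", "O2", "FALL", 2006, 3.3, "C2", "VB"),
    ("S2", "BOTHELL", "JUN", "O3", "SPRING", 2007, 3.1, "C3", "OO"),
    ("S2", "BOTHELL", "JUN", "O2", "FALL", 2006, 3.4, "C2", "VB"),
]
TABLE = [dict(zip(COLUMNS, row)) for row in ROWS]


def project(rows, cols):
    """Relational projection: keep only `cols` and drop duplicate tuples."""
    return {tuple(r[c] for c in cols) for r in rows}


# Assumed decomposition: one table per determinant of the assumed FDs.
student = project(TABLE, ("StdSSN", "StdCity", "StdClass"))
offering = project(TABLE, ("OfferNo", "OffTerm", "OffYear", "CourseNo"))
course = project(TABLE, ("CourseNo", "CrsDesc"))
enrollment = project(TABLE, ("StdSSN", "OfferNo", "EnrGrade"))


def rebuild():
    """Natural join of the four projections, in the original column order."""
    rows = set()
    for ssn, off_no, grade in enrollment:
        for s_ssn, city, cls in student:
            for o_no, term, year, crs in offering:
                for c_no, desc in course:
                    if s_ssn == ssn and o_no == off_no and c_no == crs:
                        rows.add((ssn, city, cls, off_no, term, year, grade, crs, desc))
    return rows


# "C2 is VB" is stored twice in the flat table but once after decomposition.
copies_before = sum(r["CourseNo"] == "C2" for r in TABLE)
copies_after = sum(c_no == "C2" for c_no, _ in course)
print(copies_before, copies_after)  # 2 1
print(rebuild() == set(ROWS))       # True: no rows lost or invented for this data
```

In the decomposed form a new course can also be added to `course` on its own, without inventing a student or an offering, which removes the insertion anomaly described above.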

Normalization of Databases

Normalization of databases

Database normalization is a technique for organizing the data in a database. Normalization is a systematic approach to decomposing tables in order to eliminate data redundancy and undesirable characteristics such as insertion, update and deletion anomalies. It is a multi-step process that puts data into tabular form by removing duplicated data from the relational tables.

Normalization is used mainly for two purposes:
- Eliminating redundant data.
- Ensuring data dependencies make sense, i.e., data is stored logically.

Problems without normalization

Without normalization, it becomes difficult to handle and update the database without facing data loss. Insertion, update and deletion anomalies are very frequent if databases are not normalized. Example:

| S_Id | S_Name | S_Address | Subjects_opted |
|------|--------|-----------|----------------|
| 401 | Adam | Colombo-4 | Bio |
| 402 | Alex | Kandy | Maths |
| 403 | Steuart | Ja-Ela | Maths |
| 404 | Adam | Colombo-4 | Chemistry |

Update anomaly – To update the address of a student who occurs two or more times in the table, we have to update the S_Address column in all of those rows; otherwise the data becomes inconsistent.

Insertion anomaly – Suppose we have a student's ID (S_Id), name and address, but the student has not opted for any subjects yet. Then we have to insert NULL for Subjects_opted, which leads to an insertion anomaly.

Deletion anomaly – If student 401 has opted for only one subject and temporarily drops it, deleting that row deletes the entire student record.

Normalisation Rules

1st Normal Form – No row may contain a repeating group of information: each column must hold a single value, and each row must be uniquely identifiable by its key.
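A minimal Python sketch of the deletion and insertion anomalies described above, using plain lists, dicts and sets instead of a DBMS. The split into a `students` mapping and an `opted` set is an assumed decomposition for illustration, and student 405 is a hypothetical new row.

```python
# The flat table from the example: (S_Id, S_Name, S_Address, Subjects_opted).
flat = [
    (401, "Adam", "Colombo-4", "Bio"),
    (402, "Alex", "Kandy", "Maths"),
    (403, "Steuart", "Ja-Ela", "Maths"),
    (404, "Adam", "Colombo-4", "Chemistry"),
]

# Deletion anomaly: dropping 401's only subject deletes the whole row,
# so the name and address of student 401 disappear as well.
flat_after = [r for r in flat if (r[0], r[3]) != (401, "Bio")]
print(any(r[0] == 401 for r in flat_after))  # False

# Decomposed design: student facts and subject choices in separate tables.
students = {s_id: (name, addr) for s_id, name, addr, _ in flat}
opted = {(s_id, subj) for s_id, _, _, subj in flat}

opted.discard((401, "Bio"))  # the same drop now touches only `opted`
print(401 in students)       # True

# Insertion: a student with no subjects yet needs no NULL placeholder.
students[405] = ("NewStudent", "Kandy")  # hypothetical row
print(sum(1 for s_id, _ in opted if s_id == 405))  # 0
```

Each student's address is now stored once per S_Id, so an address change also touches a single entry.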

A Simple Database Supporting an Online Book Seller

Tables about Books and Authors

    /* CURRENCY, ADDRESS and FILE are domain types assumed to be defined elsewhere (e.g. with CREATE DOMAIN). */
    CREATE TABLE Publisher (
      PublisherName    CHAR(120),
      PublisherAddress ADDRESS,
      PRIMARY KEY (PublisherName)
    );

    CREATE TABLE Book (
      Isbn                   INTEGER,
      Title                  CHAR(120) NOT NULL,
      Synopsis               CHAR(500),
      ListPrice              CURRENCY NOT NULL,
      AmazonPrice            CURRENCY NOT NULL,
      SavingsInPrice         CURRENCY NOT NULL,  /* redundant */
      AveShipLag             INTEGER,
      AveCustRating          REAL,
      SalesRank              INTEGER,
      CoverArt               FILE,
      Format                 CHAR(7) NOT NULL,   /* wide enough for 'digital' */
      CopiesInStock          INTEGER,
      PublisherName          CHAR(120) NOT NULL, /* remove NOT NULL if you want 0 or 1 */
      PublicationDate        DATE NOT NULL,
      PublisherComment       CHAR(500),
      PublicationCommentDate DATE,
      PRIMARY KEY (Isbn),
      FOREIGN KEY (PublisherName) REFERENCES Publisher
        ON DELETE NO ACTION
        ON UPDATE CASCADE,
      CHECK (Format = 'hard' OR Format = 'soft' OR Format = 'audi'
             OR Format = 'cd' OR Format = 'digital'),
      /* alternatively, CHECK (Format IN ('hard', 'soft', 'audi', 'cd', 'digital')) */
      CHECK (AmazonPrice + SavingsInPrice = ListPrice)
    );

    CREATE TABLE Author (
      AuthorName      CHAR(120),
      AuthorBirthDate DATE,
      AuthorAddress   ADDRESS,
      AuthorBiography FILE,
      PRIMARY KEY (AuthorName, AuthorBirthDate)
    );

    CREATE TABLE WrittenBy (  /* books are written by authors */
      Isbn              INTEGER,
      AuthorName        CHAR(120),
      AuthorBirthDate   DATE,
      OrderOfAuthorship INTEGER NOT NULL,
      AuthorComment     FILE,
      AuthorCommentDate DATE,
      PRIMARY KEY (Isbn, AuthorName, AuthorBirthDate),
      FOREIGN KEY (Isbn) REFERENCES Book
        ON DELETE CASCADE ON UPDATE CASCADE,
      FOREIGN KEY (AuthorName, AuthorBirthDate) REFERENCES Author
        ON DELETE CASCADE ON UPDATE CASCADE
    );
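To check that these constraints behave as the comments intend, here is a sketch that runs a cut-down version of the schema in SQLite through Python's `sqlite3` module. Assumptions: prices are stored as INTEGER cents in place of the CURRENCY domain (which also avoids floating-point equality in the price CHECK), most columns are omitted, and the sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
# Cut-down schema: prices in INTEGER cents, only the columns the checks need.
conn.executescript("""
CREATE TABLE Publisher (PublisherName VARCHAR(120) PRIMARY KEY);
CREATE TABLE Book (
    Isbn           INTEGER PRIMARY KEY,
    ListPrice      INTEGER NOT NULL,
    AmazonPrice    INTEGER NOT NULL,
    SavingsInPrice INTEGER NOT NULL,
    Format         VARCHAR(7) NOT NULL
        CHECK (Format IN ('hard', 'soft', 'audi', 'cd', 'digital')),
    PublisherName  VARCHAR(120) NOT NULL REFERENCES Publisher
        ON DELETE NO ACTION ON UPDATE CASCADE,
    CHECK (AmazonPrice + SavingsInPrice = ListPrice)
);
CREATE TABLE WrittenBy (
    Isbn       INTEGER REFERENCES Book ON DELETE CASCADE ON UPDATE CASCADE,
    AuthorName VARCHAR(120),
    PRIMARY KEY (Isbn, AuthorName)
);
""")

# Hypothetical sample rows.
conn.execute("INSERT INTO Publisher VALUES ('Acme Press')")
conn.execute("INSERT INTO Book VALUES (1, 2000, 1500, 500, 'soft', 'Acme Press')")
conn.execute("INSERT INTO WrittenBy VALUES (1, 'A. Author')")


def fails(sql: str) -> bool:
    """True if SQLite rejects the statement with an integrity error."""
    try:
        conn.execute(sql)
        return False
    except sqlite3.IntegrityError:
        return True


bad_price = fails("INSERT INTO Book VALUES (2, 2000, 1500, 400, 'soft', 'Acme Press')")
bad_format = fails("INSERT INTO Book VALUES (3, 2000, 1500, 500, 'vinyl', 'Acme Press')")
bad_delete = fails("DELETE FROM Publisher WHERE PublisherName = 'Acme Press'")

# ON UPDATE CASCADE: renaming the publisher rewrites Book.PublisherName.
conn.execute("UPDATE Publisher SET PublisherName = 'Acme Books' "
             "WHERE PublisherName = 'Acme Press'")
new_name = conn.execute("SELECT PublisherName FROM Book WHERE Isbn = 1").fetchone()[0]
# ON DELETE CASCADE: deleting the book removes its WrittenBy rows.
conn.execute("DELETE FROM Book WHERE Isbn = 1")
left = conn.execute("SELECT COUNT(*) FROM WrittenBy").fetchone()[0]

print(bad_price, bad_format, bad_delete, new_name, left)  # True True True Acme Books 0
```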

Database Normalization

Database Management Systems: Database Normalization

Malay Bhattacharyya
Assistant Professor, Machine Intelligence Unit and Centre for Artificial Intelligence and Machine Learning, Indian Statistical Institute, Kolkata
February, 2020

Outline

1. Data Redundancy
2. Normalization and Denormalization
3. Normal Forms
   - First Normal Form
   - Second Normal Form
   - Third Normal Form
   - Boyce-Codd Normal Form
   - Elementary Key Normal Form
   - Fourth Normal Form
   - Fifth Normal Form
   - Domain Key Normal Form
   - Sixth Normal Form

Redundancy in databases

Redundancy in a database denotes the repetition of stored data. Redundancy might cause various anomalies and problems pertaining to storage requirements:

- Insertion anomalies: It may be impossible to store certain information without storing some other, unrelated information.
- Deletion anomalies: It may be impossible to delete certain information without losing some other, unrelated information.
- Update anomalies: If one copy of such repeated data is updated, all copies need to be updated to prevent inconsistency.
- Increasing storage requirements: The storage requirements may increase over time.

These issues can be addressed by decomposing the database – normalization forces this!!!
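Most of the normal forms in the outline, from BCNF onward, are defined in terms of functional dependencies (FDs) and keys, and the standard tool for reasoning about them is the attribute closure X⁺. The following Python sketch computes X⁺ and flags BCNF violations; the relation and its FDs are hypothetical, chosen to reproduce the kind of redundancy described above.

```python
from typing import FrozenSet, Iterable, List, Tuple

# A functional dependency X -> Y, stored as the pair (X, Y).
FD = Tuple[FrozenSet[str], FrozenSet[str]]


def fd(lhs: Iterable[str], rhs: Iterable[str]) -> FD:
    return frozenset(lhs), frozenset(rhs)


def closure(attrs: Iterable[str], fds: List[FD]) -> FrozenSet[str]:
    """Attribute closure X+: keep applying FDs whose left side is already covered."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return frozenset(result)


def bcnf_violations(schema: Iterable[str], fds: List[FD]) -> List[FD]:
    """Non-trivial FDs X -> Y whose left side X is not a superkey of the schema."""
    all_attrs = frozenset(schema)
    return [(lhs, rhs) for lhs, rhs in fds
            if not rhs <= lhs and not all_attrs <= closure(lhs, fds)]


# Hypothetical enrollment relation: each student's city repeats per offering.
schema = {"StdId", "StdCity", "OfferNo", "Grade"}
fds = [fd({"StdId"}, {"StdCity"}), fd({"StdId", "OfferNo"}, {"Grade"})]

print(sorted(closure({"StdId", "OfferNo"}, fds)))  # ['Grade', 'OfferNo', 'StdCity', 'StdId']
print(bcnf_violations(schema, fds))
```

Here StdId → StdCity is reported as the only violation: StdId alone is not a superkey, so a student's city is stored once per offering, which is exactly the repetition behind the update anomaly. Decomposing into (StdId, StdCity) and (StdId, OfferNo, Grade) removes it.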

Data Definition Language (DDL)

Database Foundations 6-3: Data Definition Language (DDL)
Copyright © 2015, Oracle and/or its affiliates. All rights reserved.

Roadmap (figure): Introduction to Oracle Application Express; Structured Query Language (SQL); Data Definition Language (DDL), you are here; Data Manipulation Language (DML); Transaction Control Language (TCL); Retrieving Data Using SELECT; Restricting Data Using WHERE; Sorting Data Using ORDER BY; Joining Tables Using JOIN.

Objectives

This lesson covers the following objectives:
• Identify the steps needed to create database tables
• Describe the purpose of the data definition language (DDL)
• List the DDL operations needed to build and maintain a database's tables

Database Objects

| Object | Description |
|--------|-------------|
| Table | Is the basic unit of storage; consists of rows |
| View | Logically represents subsets of data from one or more tables |
| Sequence | Generates numeric values |
| Index | Improves the performance of some queries |
| Synonym | Gives an alternative name to an object |

Naming Rules for Tables and Columns

Table names and column names must:
• Begin with a letter
• Be 1–30 characters long
• Contain only A–Z, a–z, 0–9, _, $, and #
• Not duplicate the name of another object owned by the same user
• Not be an Oracle server–reserved word

CREATE TABLE Statement

• To issue a CREATE TABLE statement, you must have:
  – The CREATE TABLE privilege
  – A storage area

    CREATE TABLE [schema.]table
      (column datatype [DEFAULT expr][, ...]);

• Specify in the statement:
  – Table name
  – Column name, column data type, column size
  – Integrity constraints (optional)
  – Default values (optional)
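The naming rules above are mechanical enough to check in code. This Python sketch validates unquoted names against them; the reserved-word set is a deliberately small sample rather than Oracle's full list, and the duplicate check assumes the caller supplies the names of objects the user already owns.

```python
import re

# Rules from the slide: start with a letter, 1-30 characters,
# only A-Z, a-z, 0-9, _, $ and #.
NAME_PATTERN = re.compile(r"[A-Za-z][A-Za-z0-9_$#]{0,29}")

# A small illustrative subset of Oracle reserved words, not the full list.
RESERVED = {"SELECT", "FROM", "WHERE", "TABLE", "VIEW", "INDEX", "ORDER", "GROUP"}


def is_valid_name(name: str, owned_names: set[str]) -> bool:
    """Check a proposed unquoted table or column name against the rules above.

    owned_names: names of objects the same user already owns, compared
    case-insensitively because unquoted names are stored in uppercase.
    """
    if NAME_PATTERN.fullmatch(name) is None:
        return False
    upper = name.upper()
    return upper not in RESERVED and upper not in {n.upper() for n in owned_names}


existing = {"EMPLOYEES"}
for candidate in ["departments", "2nd_table", "order", "Employees", "dept$#_1", "x" * 31]:
    print(candidate, is_valid_name(candidate, existing))
```

Here `departments` and `dept$#_1` pass, while `2nd_table` (starts with a digit), `order` (reserved), `Employees` (duplicate of an owned object) and the 31-character name are rejected.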

Fast Foreign-Key Detection in Microsoft SQL Server PowerPivot for Excel

Fast Foreign-Key Detection in Microsoft SQL Server PowerPivot for Excel

Zhimin Chen, Vivek Narasayya, Surajit Chaudhuri
Microsoft Research

ABSTRACT

Microsoft SQL Server PowerPivot for Excel, or PowerPivot for short, is an in-memory business intelligence (BI) engine that enables Excel users to interactively create pivot tables over large data sets imported from sources such as relational databases, text files and web data feeds. Unlike traditional pivot tables in Excel that are defined on a single table, PowerPivot allows analysis over multiple tables connected via foreign-key joins. In many cases, however, these foreign-key relationships are not known a priori, and information workers are often not sophisticated enough to define these relationships. Therefore, the ability to automatically discover foreign-key relationships in PowerPivot is valuable, if not essential. The key challenge is to perform this detection interactively and with high precision even when data sets scale to hundreds of millions of rows and the schema contains tens of tables and hundreds of columns. In this paper, we describe techniques for fast foreign-key detection in PowerPivot and experimentally evaluate their accuracy, performance and scale on both synthetic benchmarks and real-world data sets. These techniques have been incorporated into PowerPivot for Excel.

[…] stored in a relational database, which they can import into Excel. Other sources of data are text files, web data feeds or, in general, any tabular data range imported into Excel.

Figure 1. Example of pivot table in Excel. It enables multi-dimensional analysis over a single table.
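For intuition about the problem, here is a naive baseline for foreign-key discovery in Python: treat every column with unique, non-NULL values as a candidate key, and report every column of another table whose non-NULL values are all contained in it (an inclusion dependency). This is not the paper's method; it is the brute-force check that fast, high-precision techniques improve on. The table and column names are hypothetical.

```python
from itertools import permutations


def fk_candidates(tables):
    """Naive foreign-key discovery by exact inclusion-dependency checks.

    tables: {table_name: {column_name: list_of_values}}
    Returns (child_table, child_column, parent_table, parent_column) tuples where
    the parent column holds unique non-NULL values and every non-NULL child value
    appears among them.
    """
    columns = [(t, c, vals) for t, cols in tables.items() for c, vals in cols.items()]
    found = []
    for (ct, cc, cvals), (pt, pc, pvals) in permutations(columns, 2):
        if ct == pt:
            continue  # only look for relationships between different tables
        parent = set(pvals)
        if len(parent) != len(pvals) or None in parent:
            continue  # the referenced column must behave like a key
        child = {v for v in cvals if v is not None}
        if child and child <= parent:
            found.append((ct, cc, pt, pc))
    return found


tables = {
    "Sales": {"ProductId": [1, 2, 2, 3], "Qty": [5, 1, 2, 7]},
    "Products": {"ProductId": [1, 2, 3, 4], "Price": [10, 20, 30, 40]},
}
print(fk_candidates(tables))  # [('Sales', 'ProductId', 'Products', 'ProductId')]
```

On real data this baseline tends to report spurious candidates (small integer columns are contained in many ID columns) and compares every pair of columns, which is why precision and scale are the challenges the abstract emphasizes.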

Databases: Lecture 11: Beyond ACID/Relational Databases (Timothy G. Griffin)

Databases: Lecture 11: Beyond ACID/Relational databases
Timothy G. Griffin
Lent Term 2015

• Rise of Web and cluster-based computing
• "NoSQL" Movement
• Relationships vs. Aggregates
• Key-value store
• XML or JSON as a data exchange language
• Not all applications require ACID
• CAP = Consistency, Availability, and Partition tolerance
• The CAP theorem (pick any two?)
• Eventual consistency

Apologies to Martin Fowler ("NoSQL Distilled")

Application-specific databases have always been with us. Two that I am familiar with:

Daytona (AT&T): "Daytona is a data management system, not a database". Built on top of the Unix file system, this toolkit is for building application-specific and highly scalable data stores. It is used at AT&T for analysis of 100s of terabytes of call records. http://www2.research.att.com/~daytona/

DataBlitz (Bell Labs, 1995): Main-memory database system designed for embedded systems such as telecommunication switches. Optimized for simple key-driven queries.

But these systems are proprietary. Open source is a hallmark of NoSQL.

What's new? Internet scale, cluster computing, open source.

Something big is happening in the land of databases. With the Internet, cluster computing and open source systems, many more points in the database design space are being explored and deployed. Broader context helps clarify the strengths and weaknesses of the standard relational/ACID approach. http://nosql-database.org/

Eric Brewer's PODC Keynote (July 2000): ACID vs. BASE (Basically Available, Soft-state, Eventually consistent)

ACID:
• Strong consistency
• Isolation
• Focus on "commit"
• Nested transactions
• Availability?
• Conservative (pessimistic)
• Difficult evolution (e.g. …

BASE:
• Weak consistency
• Availability first
• Best effort
• Approximate answers OK
• Aggressive (optimistic)
• Simpler!
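To make eventual consistency concrete, here is a toy replicated key-value store in Python built from last-writer-wins (LWW) registers, one common convergence rule that the lecture does not itself prescribe. Assumptions: timestamps are supplied by the caller rather than taken from clocks or version vectors, and replicas synchronize by copying each other's full state.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    """One node of a toy key-value store built from last-writer-wins registers."""
    name: str
    data: dict = field(default_factory=dict)  # key -> (timestamp, node name, value)

    def put(self, key, value, ts):
        self._apply(key, (ts, self.name, value))

    def get(self, key):
        entry = self.data.get(key)
        return None if entry is None else entry[2]

    def _apply(self, key, entry):
        # Keep the write with the larger (timestamp, node name); the name breaks ties.
        if key not in self.data or entry[:2] > self.data[key][:2]:
            self.data[key] = entry

    def merge_from(self, other):
        """Anti-entropy step: fold in every entry held by another replica."""
        for key, entry in other.data.items():
            self._apply(key, entry)


a, b = Replica("a"), Replica("b")
a.put("cart", ["book"], ts=1)
b.put("cart", ["book", "pen"], ts=2)  # concurrent write on the other side of a partition
before = (a.get("cart"), b.get("cart"))
a.merge_from(b)
b.merge_from(a)
after = (a.get("cart"), b.get("cart"))
print(before)  # (['book'], ['book', 'pen'])
print(after)   # (['book', 'pen'], ['book', 'pen'])
```

While the replicas are partitioned they answer with different values (availability over consistency); once they exchange state they converge. The price of LWW is that one of two concurrent writes is silently discarded.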