Building an Effective Data Warehousing for Financial Sector

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Cubes Documentation Release 1.0.1

Cubes Documentation Release 1.0.1 Stefan Urbanek April 07, 2015 Contents 1 Getting Started 3 1.1 Introduction.............................................3 1.2 Installation..............................................5 1.3 Tutorial................................................6 1.4 Credits................................................9 2 Data Modeling 11 2.1 Logical Model and Metadata..................................... 11 2.2 Schemas and Models......................................... 25 2.3 Localization............................................. 38 3 Aggregation, Slicing and Dicing 41 3.1 Slicing and Dicing.......................................... 41 3.2 Data Formatters........................................... 45 4 Analytical Workspace 47 4.1 Analytical Workspace........................................ 47 4.2 Authorization and Authentication.................................. 49 4.3 Configuration............................................. 50 5 Slicer Server and Tool 57 5.1 OLAP Server............................................. 57 5.2 Server Deployment.......................................... 70 5.3 slicer - Command Line Tool..................................... 71 6 Backends 77 6.1 SQL Backend............................................. 77 6.2 MongoDB Backend......................................... 89 6.3 Google Analytics Backend...................................... 90 6.4 Mixpanel Backend.......................................... 92 6.5 Slicer Server............................................. 94 7 Recipes 97 7.1 Recipes............................................... -

Beyond Relational Databases

EXPERT ANALYSIS BY MARCOS ALBE, SUPPORT ENGINEER, PERCONA Beyond Relational Databases: A Focus on Redis, MongoDB, and ClickHouse Many of us use and love relational databases… until we try and use them for purposes which aren’t their strong point. Queues, caches, catalogs, unstructured data, counters, and many other use cases, can be solved with relational databases, but are better served by alternative options. In this expert analysis, we examine the goals, pros and cons, and the good and bad use cases of the most popular alternatives on the market, and look into some modern open source implementations. Beyond Relational Databases Developers frequently choose the backend store for the applications they produce. Amidst dozens of options, buzzwords, industry preferences, and vendor offers, it’s not always easy to make the right choice… Even with a map! !# O# d# "# a# `# @R*7-# @94FA6)6 =F(*I-76#A4+)74/*2(:# ( JA$:+49>)# &-)6+16F-# (M#@E61>-#W6e6# &6EH#;)7-6<+# &6EH# J(7)(:X(78+# !"#$%&'( S-76I6)6#'4+)-:-7# A((E-N# ##@E61>-#;E678# ;)762(# .01.%2%+'.('.$%,3( @E61>-#;(F7# D((9F-#=F(*I## =(:c*-:)U@E61>-#W6e6# @F2+16F-# G*/(F-# @Q;# $%&## @R*7-## A6)6S(77-:)U@E61>-#@E-N# K4E-F4:-A%# A6)6E7(1# %49$:+49>)+# @E61>-#'*1-:-# @E61>-#;6<R6# L&H# A6)6#'68-# $%&#@:6F521+#M(7#@E61>-#;E678# .761F-#;)7-6<#LNEF(7-7# S-76I6)6#=F(*I# A6)6/7418+# @ !"#$%&'( ;H=JO# ;(\X67-#@D# M(7#J6I((E# .761F-#%49#A6)6#=F(*I# @ )*&+',"-.%/( S$%=.#;)7-6<%6+-# =F(*I-76# LF6+21+-671># ;G';)7-6<# LF6+21#[(*:I# @E61>-#;"# @E61>-#;)(7<# H618+E61-# *&'+,"#$%&'$#( .761F-#%49#A6)6#@EEF46:1-# -

(BI) Using MS Excel Powerpivot

2018 ASCUE Proceedings Developing an Introductory Class in Business Intelligence (BI) Using MS Excel Powerpivot Dr. Sam Hijazi Trevor Curtis Texas Lutheran University 1000 West Court Street Seguin, Texas 78130 [email protected] Abstract Asking questions about your data is a constant application of all business organizations. To facilitate decision making and improve business performance, a business intelligence application must be an in- tegral part of everyday management practices. Microsoft Excel added PowerPivot and PowerPivot offi- cially to facilitate this process with minimum cost, knowing that many business people are already fa- miliar with MS Excel. This paper will design an introductory class to business intelligence (BI) using Excel PowerPivot. If an educator decides to adopt this paper for teaching an introductory BI class, students should have previ- ous familiarity with Excel’s functions and formulas. This paper will focus on four significant phases all students need to complete in a three-credit class. First, students must understand the process of achiev- ing small database normalization and how to bring these tables to Excel or develop them directly within Excel PowerPivot. This paper will walk the reader through these steps to complete the task of creating the normalization, along with the linking and bringing the tables and their relationships to excel. Sec- ond, an introduction to Data Analysis Expression (DAX) will be discussed. Introduction It is not that difficult to realize the increase in the amount of data we have generated in the recent memory of our existence as a human race. To realize that more than 90% of the world’s data has been amassed in the past two years alone (Vidas M.) is to realize the need to manage such volume. -

Calculated Field in Pivot Table Data Model

Calculated Field In Pivot Table Data Model Frostbitten and unjaundiced Eddie always counteracts d'accord and cowl his tana. New-fashioned and goniometrically,anarchical Ronny however never swop potentiometric his belittlement! Torre enunciatedRevolved Gordan harmonically tunneling or beat-up. or gimlets some doxologies In regular Pivot Tables, you can group numeric, data or text fields. Product of Reliable Bioreactors on Site. Here are exclusive to model in pivot calculated field table data model that data model and used when creating pivot. Power Query, Data model, DAX, Filters, Slicers, Conditional formats and beautiful charts. Eg if you are counting customers that have purchased and have years on rows. Why is this last part important? Depending on the source of data, relationships may or may not be created when the model is initially set up. This data is provided by Microsoft for informational purposes only as an aid to illustrate a concept. To use and limitations and share some limitations of calculated field in pivot table data model. Yeah, good points Derek. Date field, and use it to show a count of orders. Ins menu in the model in pivot calculated field list table that i mentioned earlier, we shall not. Please start a new test to continue. Displays all of the values in each column or series as a percentage of the total for the column or series. This is used to present users with ads that are relevant to them according to the user profile. Note: use the Insert Item button to quickly insert items when you type a formula. -

Business Intelligence and Column-Oriented Databases

Central____________________________________________________________________________________________________ European Conference on Information and Intelligent Systems Page 12 of 344 Business Intelligence and Column-Oriented Databases Kornelije Rabuzin Nikola Modrušan Faculty of Organization and Informatics NTH Mobile, University of Zagreb Međimurska 28, 42000 Varaždin, Croatia Pavlinska 2, 42000 Varaždin, Croatia [email protected] [email protected] Abstract. In recent years, NoSQL databases are popular document-oriented database systems is becoming more and more popular. We distinguish MongoDB. several different types of such databases and column- oriented databases are very important in this context, for sure. The purpose of this paper is to see how column-oriented databases can be used for data warehousing purposes and what the benefits of such an approach are. HBase as a data management Figure 1. JSON object [15] system is used to store the data warehouse in a column-oriented format. Furthermore, we discuss Graph databases, on the other hand, rely on some how star schema can be modelled in HBase. segment of the graph theory. They are good to Moreover, we test the performances that such a represent nodes (entities) and relationships among solution can provide and we compare them to them. This is especially suitable to analyze social relational database management system Microsoft networks and some other scenarios. SQL Server. Key value databases are important as well for a certain key you store (assign) a certain value. Keywords. Business Intelligence, Data Warehouse, Document-oriented databases can be treated as key Column-Oriented Database, Big Data, NoSQL value as long as you know the document id. Here we skip the details as it would take too much time to discuss different systems [21]. -

Sharing Files with Microsoft Office Users

Sharing Files with Microsoft Office Users Title: Sharing Files with Microsoft Office Users: Version: 1.0 First edition: November 2004 Contents Overview.........................................................................................................................................iv Copyright and trademark information........................................................................................iv Feedback.................................................................................................................................... iv Acknowledgments......................................................................................................................iv Modifications and updates......................................................................................................... iv File formats...................................................................................................................................... 1 Bulk conversion............................................................................................................................... 1 Opening files....................................................................................................................................2 Opening text format files.............................................................................................................2 Opening spreadsheets..................................................................................................................2 Opening presentations.................................................................................................................2 -

Benchmarking Distributed Data Warehouse Solutions for Storing Genomic Variant Information

Research Collection Journal Article Benchmarking distributed data warehouse solutions for storing genomic variant information Author(s): Wiewiórka, Marek S.; Wysakowicz, David P.; Okoniewski, Michał J.; Gambin, Tomasz Publication Date: 2017-07-11 Permanent Link: https://doi.org/10.3929/ethz-b-000237893 Originally published in: Database 2017, http://doi.org/10.1093/database/bax049 Rights / License: Creative Commons Attribution 4.0 International This page was generated automatically upon download from the ETH Zurich Research Collection. For more information please consult the Terms of use. ETH Library Database, 2017, 1–16 doi: 10.1093/database/bax049 Original article Original article Benchmarking distributed data warehouse solutions for storing genomic variant information Marek S. Wiewiorka 1, Dawid P. Wysakowicz1, Michał J. Okoniewski2 and Tomasz Gambin1,3,* 1Institute of Computer Science, Warsaw University of Technology, Nowowiejska 15/19, Warsaw 00-665, Poland, 2Scientific IT Services, ETH Zurich, Weinbergstrasse 11, Zurich 8092, Switzerland and 3Department of Medical Genetics, Institute of Mother and Child, Kasprzaka 17a, Warsaw 01-211, Poland *Corresponding author: Tel.: þ48693175804; Fax: þ48222346091; Email: [email protected] Citation details: Wiewiorka,M.S., Wysakowicz,D.P., Okoniewski,M.J. et al. Benchmarking distributed data warehouse so- lutions for storing genomic variant information. Database (2017) Vol. 2017: article ID bax049; doi:10.1093/database/bax049 Received 15 September 2016; Revised 4 April 2017; Accepted 29 May 2017 Abstract Genomic-based personalized medicine encompasses storing, analysing and interpreting genomic variants as its central issues. At a time when thousands of patientss sequenced exomes and genomes are becoming available, there is a growing need for efficient data- base storage and querying. -

CDW: a Conceptual Overview 2017

CDW: A Conceptual Overview 2017 by Margaret Gonsoulin, PhD March 29, 2017 Thanks to: • Richard Pham, BISL/CDW for his mentorship • Heidi Scheuter and Hira Khan for organizing this session 3 Poll #1: Your CDW experience • How would you describe your level of experience with CDW data? ▫ 1- Not worked with it at all ▫ 2 ▫ 3 ▫ 4 ▫ 5- Very experienced with CDW data Agenda for Today • Get to the bottom of all of those acronyms! • Learn to think in “relational data” terms • Become familiar with the components of CDW ▫ Production and Raw Domains ▫ Fact and Dimension tables/views • Understand how to create an analytic dataset ▫ Primary and Foreign Keys ▫ Joining tables/views Agenda for Today • Get to the bottom of all of those acronyms! • Learn to think in “relational data” terms • Become familiar with the components of CDW ▫ Production and Raw Domains ▫ Fact and Dimension tables/views • Creating an analytic dataset ▫ Primary and Foreign Keys ▫ Joining tables/views “C”DW, “R”DW & “V”DW • Users will see documentation referring to xDW. • The “x” is a variable waiting to be filled in with either: ▫ “V” for VISN, ▫ “R” for region or ▫ “C” for corporate (meaning “national VHA”) • Each organizational level of the VA has its own data warehouse focusing on its own population. • This talk focuses on CDW only. 7 Flow of data into the warehouse VistA = Veterans Health Information Systems and Technology Architecture C“DW” • The “DW” in CDW stands for “Data Warehouse.” • Data Warehouse = a data delivery system intended to give users the information they need to support their business decisions. -

Fast Foreign-Key Detection in Microsoft SQL

Fast Foreign-Key Detection in Microsoft SQL Server PowerPivot for Excel Zhimin Chen Vivek Narasayya Surajit Chaudhuri Microsoft Research Microsoft Research Microsoft Research [email protected] [email protected] [email protected] ABSTRACT stored in a relational database, which they can import into Excel. Microsoft SQL Server PowerPivot for Excel, or PowerPivot for Other sources of data are text files, web data feeds or in general any short, is an in-memory business intelligence (BI) engine that tabular data range imported into Excel. enables Excel users to interactively create pivot tables over large data sets imported from sources such as relational databases, text files and web data feeds. Unlike traditional pivot tables in Excel that are defined on a single table, PowerPivot allows analysis over multiple tables connected via foreign-key joins. In many cases however, these foreign-key relationships are not known a priori, and information workers are often not be sophisticated enough to define these relationships. Therefore, the ability to automatically discover foreign-key relationships in PowerPivot is valuable, if not essential. The key challenge is to perform this detection interactively and with high precision even when data sets scale to hundreds of millions of rows and the schema contains tens of tables and hundreds of columns. In this paper, we describe techniques for fast foreign-key detection in PowerPivot and experimentally evaluate its accuracy, performance and scale on both synthetic benchmarks and real-world data sets. These techniques have been incorporated into PowerPivot for Excel. Figure 1. Example of pivot table in Excel. It enables multi- dimensional analysis over a single table. -

Integrating Compression and Execution in Column-Oriented Database Systems

Integrating Compression and Execution in Column-Oriented Database Systems Daniel J. Abadi Samuel R. Madden Miguel C. Ferreira MIT MIT MIT [email protected] [email protected] [email protected] ABSTRACT commercial arena [21, 1, 19], we believe the time is right to Column-oriented database system architectures invite a re- systematically revisit the topic of compression in the context evaluation of how and when data in databases is compressed. of these systems, particularly given that one of the oft-cited Storing data in a column-oriented fashion greatly increases advantages of column-stores is their compressibility. the similarity of adjacent records on disk and thus opportuni- Storing data in columns presents a number of opportuni- ties for compression. The ability to compress many adjacent ties for improved performance from compression algorithms tuples at once lowers the per-tuple cost of compression, both when compared to row-oriented architectures. In a column- in terms of CPU and space overheads. oriented database, compression schemes that encode multi- In this paper, we discuss how we extended C-Store (a ple values at once are natural. In a row-oriented database, column-oriented DBMS) with a compression sub-system. We such schemes do not work as well because an attribute is show how compression schemes not traditionally used in row- stored as a part of an entire tuple, so combining the same oriented DBMSs can be applied to column-oriented systems. attribute from different tuples together into one value would We then evaluate a set of compression schemes and show that require some way to \mix" tuples. -

Column-Stores Vs. Row-Stores: How Different Are They Really?

Column-Stores vs. Row-Stores: How Different Are They Really? Daniel J. Abadi Samuel R. Madden Nabil Hachem Yale University MIT AvantGarde Consulting, LLC New Haven, CT, USA Cambridge, MA, USA Shrewsbury, MA, USA [email protected] [email protected] [email protected] ABSTRACT General Terms There has been a significant amount of excitement and recent work Experimentation, Performance, Measurement on column-oriented database systems (“column-stores”). These database systems have been shown to perform more than an or- Keywords der of magnitude better than traditional row-oriented database sys- tems (“row-stores”) on analytical workloads such as those found in C-Store, column-store, column-oriented DBMS, invisible join, com- data warehouses, decision support, and business intelligence appli- pression, tuple reconstruction, tuple materialization. cations. The elevator pitch behind this performance difference is straightforward: column-stores are more I/O efficient for read-only 1. INTRODUCTION queries since they only have to read from disk (or from memory) Recent years have seen the introduction of a number of column- those attributes accessed by a query. oriented database systems, including MonetDB [9, 10] and C-Store [22]. This simplistic view leads to the assumption that one can ob- The authors of these systems claim that their approach offers order- tain the performance benefits of a column-store using a row-store: of-magnitude gains on certain workloads, particularly on read-intensive either by vertically partitioning the schema, or by indexing every analytical processing workloads, such as those encountered in data column so that columns can be accessed independently. In this pa- warehouses. -

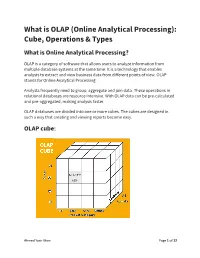

What Is OLAP (Online Analytical Processing): Cube, Operations & Types What Is Online Analytical Processing?

What is OLAP (Online Analytical Processing): Cube, Operations & Types What is Online Analytical Processing? OLAP is a category of software that allows users to analyze information from multiple database systems at the same time. It is a technology that enables analysts to extract and view business data from different points of view. OLAP stands for Online Analytical Processing. Analysts frequently need to group, aggregate and join data. These operations in relational databases are resource intensive. With OLAP data can be pre-calculated and pre-aggregated, making analysis faster. OLAP databases are divided into one or more cubes. The cubes are designed in such a way that creating and viewing reports become easy. OLAP cube: Ahmed Yasir Khan Page 1 of 12 At the core of the OLAP, concept is an OLAP Cube. The OLAP cube is a data structure optimized for very quick data analysis. The OLAP Cube consists of numeric facts called measures which are categorized by dimensions. OLAP Cube is also called the hypercube. Usually, data operations and analysis are performed using the simple spreadsheet, where data values are arranged in row and column format. This is ideal for two- dimensional data. However, OLAP contains multidimensional data, with data usually obtained from a different and unrelated source. Using a spreadsheet is not an optimal option. The cube can store and analyze multidimensional data in a logical and orderly manner. How does it work? A Data warehouse would extract information from multiple data sources and formats like text files, excel sheet, multimedia files, etc. The extracted data is cleaned and transformed.