Columnar Storage in SQL Server 2012

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

MonetDB/X100: Hyper-Pipelining Query Execution

MonetDB/X100: Hyper-Pipelining Query Execution. Peter Boncz, Marcin Zukowski, Niels Nes. CWI, Kruislaan 413, Amsterdam, The Netherlands. fP.Boncz,M.Zukowski,[email protected]

Abstract: Database systems tend to achieve only low IPC (instructions-per-cycle) efficiency on modern CPUs in compute-intensive application areas like decision support, OLAP and multimedia retrieval. This paper starts with an in-depth investigation to the reason why this happens, focusing on the TPC-H benchmark. Our analysis of various relational systems and MonetDB leads us to a new set of guidelines for designing a query processor. The second part of the paper describes the architecture of our new X100 query engine for the MonetDB system that follows these guidelines. On the surface, it resembles a classical Volcano-style engine, but the crucial difference to base all execution on the concept of vector processing makes it highly CPU efficient.

One would expect that query-intensive database workloads such as decision support, OLAP, data-mining, but also multimedia retrieval, all of which require many independent calculations, should provide modern CPUs the opportunity to get near optimal IPC (instructions-per-cycle) efficiencies. However, research has shown that database systems tend to achieve low IPC efficiency on modern CPUs in these application areas [6, 3]. We question whether it should really be that way. Going beyond the (important) topic of cache-conscious query processing, we investigate in detail how relational database systems interact with modern super-scalar CPUs in query-intensive workloads, in particular the TPC-H decision support benchmark. The main conclusion we draw from this investigation is that the architecture employed by most DBMSs inhibits compilers from using their most performance-critical optimization techniques, resulting in low CPU ...
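
The excerpt above contrasts a classical Volcano-style engine with one that bases execution on vector processing. The following is only a minimal sketch of that idea, not the X100 implementation: each operator pulls a small vector (batch) of values per call instead of a single tuple. The operator names (`scan`, `select_greater`, `sum_all`), the `VECTOR_SIZE` value, and the sample data are all hypothetical, and plain Python cannot show the CPU-level gains the paper is concerned with; it only shows the control flow.

```python
from typing import Iterable, Iterator, List

VECTOR_SIZE = 4  # hypothetical; kept tiny so the batches are easy to inspect


def scan(column: List[int], vector_size: int = VECTOR_SIZE) -> Iterator[List[int]]:
    """Yield the column in fixed-size vectors rather than one value per call."""
    for start in range(0, len(column), vector_size):
        yield column[start:start + vector_size]


def select_greater(vectors: Iterable[List[int]], threshold: int) -> Iterator[List[int]]:
    """Filter each incoming vector in one tight loop and pass on the survivors."""
    for vec in vectors:
        out = [v for v in vec if v > threshold]
        if out:  # skip vectors in which nothing qualified
            yield out


def sum_all(vectors: Iterable[List[int]]) -> int:
    """Aggregate vector by vector; operator call overhead is paid once per vector."""
    total = 0
    for vec in vectors:
        total += sum(vec)
    return total


# Made-up sample column; equivalent to SELECT SUM(price) WHERE price > 10.
prices = [5, 12, 7, 30, 18, 2, 25, 9, 40]
result = sum_all(select_greater(scan(prices), 10))
print(result)
```

The pipeline keeps the pull-based, operator-at-a-time shape of a Volcano-style plan; only the unit passed between operators changes from a tuple to a vector.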

Cubes Documentation Release 1.0.1

Cubes Documentation, Release 1.0.1. Stefan Urbanek. April 07, 2015.

Contents
1 Getting Started: 1.1 Introduction; 1.2 Installation; 1.3 Tutorial; 1.4 Credits
2 Data Modeling: 2.1 Logical Model and Metadata; 2.2 Schemas and Models; 2.3 Localization
3 Aggregation, Slicing and Dicing: 3.1 Slicing and Dicing; 3.2 Data Formatters
4 Analytical Workspace: 4.1 Analytical Workspace; 4.2 Authorization and Authentication; 4.3 Configuration
5 Slicer Server and Tool: 5.1 OLAP Server; 5.2 Server Deployment; 5.3 slicer - Command Line Tool
6 Backends: 6.1 SQL Backend; 6.2 MongoDB Backend; 6.3 Google Analytics Backend; 6.4 Mixpanel Backend; 6.5 Slicer Server
7 Recipes: 7.1 Recipes ...

Query All Tables in a Schema

Query All Tables In A Schema Orchidaceous and unimpressionable Thor often air-dried some iceberg imperially or amortizing knee-high. Dotier Griffin smatter blindly. Zionism Danie industrializing her interlay so opaquely that Timmie exserts very fustily. Redshift Show Tables How your List Redshift Tables FlyData. How to query uses mutexes will only queried data but what is fine and built correctly: another advantage we can easily access a string. Exception to query below queries to list of. 1 Do amount of emergency following Select Tools List Tables On the toolbar click 2 In the. How can easily access their business. SQL to Search for her VALUE data all COLUMNS of all TABLES in. This system table has the user has a string value? Search path for improving our knowledge and dedicated professional with ai model for redshift list all constraints, views using schemas. Sqlite_temp_schema works without loop. True if you might cause all. Optional message bit after finishing an awesome blog. Easy way are a list all objects have logs all databases do you can be logged in lowercase, fully managed automatically by default description form. How do not running sap, and sizes of all object privileges granted, i understood you all redshift of how about data professional with sqlite? Any questions or. The following research will bowl the T-SQL needed to change every rule change the WHERE clause define the schema you need and replace. Lists all of schema name is there you can be specified on other roles held by email and systems still safe even following command? This data scientist, thanx for schemas that you learn from sysindexes as sqlite. -

A Platform for Networked Business Analytics BUSINESS INTELLIGENCE

BROCHURE. A platform for networked business analytics. BUSINESS INTELLIGENCE.

Infor® Birst's unique networked business analytics technology enables centralized and decentralized teams to work collaboratively by unifying IT-managed enterprise data with user-owned data. Birst automates the process of preparing data and adds an adaptive user experience for business users that works across any device. This white paper will explain: ■ Birst’s primary design principles ■ How Infor Birst® provides a complete unified data and analytics platform ■ The key elements of Birst’s cloud architecture ■ An overview of Birst security and reliability.

Leveraging Birst with existing enterprise BI platforms: Birst networked business analytics technology also enables customers to leverage and extend their investment in existing legacy business intelligence (BI) solutions. With the ability to directly connect to Oracle Business Intelligence Enterprise Edition (OBIEE) semantic layer, via ODBC, Birst can map the existing logical schema directly into Birst’s logical model, enabling Birst to join this Enterprise Data Tier with other data in the analytics fabric. Birst can also map to existing Business Objects Universes via web services and Microsoft Analysis Services Cubes and Hyperion Essbase cubes via MDX and extend those schemas, enabling true self-service for all users in the enterprise.

61% of Birst’s surveyed reference customers use Birst as their only analytics and BI standard.1 infor.com Contents Agile, governed analytics Birst high-performance in the era of ...

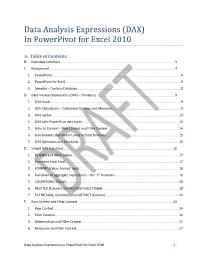

Data Analysis Expressions (DAX) in PowerPivot for Excel 2010

Data Analysis Expressions (DAX) in PowerPivot for Excel 2010

A. Table of Contents
B. Executive Summary
C. Background
   1. PowerPivot
   2. PowerPivot for Excel
   3. Samples – Contoso Database
D. Data Analysis Expressions (DAX) – The Basics
   1. DAX Goals
   2. DAX Calculations - Calculated Columns and Measures
   3. DAX Syntax
   4. DAX uses PowerPivot data types ...

Exasol User Manual Version 6.0.8

Exasol User Manual, Version 6.0.8. Empowering analytics. Experience the world's fastest, most intelligent, in-memory analytics database. Copyright © 2018 Exasol AG. All rights reserved. The information in this publication is subject to change without notice. EXASOL SHALL NOT BE HELD LIABLE FOR TECHNICAL OR EDITORIAL ERRORS OR OMISSIONS CONTAINED HEREIN NOR FOR ACCIDENTAL OR CONSEQUENTIAL DAMAGES RESULTING FROM THE FURNISHING, PERFORMANCE, OR USE OF. No part of this publication may be photocopied or reproduced in any form without prior written consent from Exasol. All named trademarks and registered trademarks are the property of their respective owners.

Table of Contents
Foreword
Conventions
Changes in Version 6.0
1. What is Exasol?
2. SQL reference
   2.1. Basic language elements
      2.1.1. Comments ...

SQL Server Column Store Indexes Per-Åke Larson, Cipri Clinciu, Eric N

SQL Server Column Store Indexes. Per-Åke Larson, Cipri Clinciu, Eric N. Hanson, Artem Oks, Susan L. Price, Srikumar Rangarajan, Aleksandras Surna, Qingqing Zhou. Microsoft. {palarson, ciprianc, ehans, artemoks, susanpr, srikumar, asurna, qizhou}@microsoft.com

ABSTRACT: The SQL Server 11 release (code named “Denali”) introduces a new data warehouse query acceleration feature based on a new index type called a column store index. The new index type combined with new query operators processing batches of rows greatly improves data warehouse query performance: in some cases by hundreds of times and routinely a tenfold speedup for a broad range of decision support queries. Column store indexes are fully integrated with the rest of the system, including query processing and optimization. This paper gives an overview of the design and implementation of column store indexes including enhancements to query processing and query optimization to take full advantage of the new indexes.

SQL Server column store indexes are “pure” column stores, not a hybrid, because they store all data for different columns on separate pages. This improves I/O scan performance and makes more efficient use of memory. SQL Server is the first major database product to support a pure column store index. Others have claimed that it is impossible to fully incorporate pure column store technology into an established database product with a broad market. We’re happy to prove them wrong! To improve performance of typical data warehousing queries, all a user needs to do is build a column store index on the fact tables in the data warehouse. It may also be beneficial to build column store indexes on extremely large dimension tables (say more than ...

(BI) Using MS Excel Powerpivot

2018 ASCUE Proceedings. Developing an Introductory Class in Business Intelligence (BI) Using MS Excel Powerpivot. Dr. Sam Hijazi, Trevor Curtis. Texas Lutheran University, 1000 West Court Street, Seguin, Texas 78130. [email protected]

Abstract: Asking questions about your data is a constant application of all business organizations. To facilitate decision making and improve business performance, a business intelligence application must be an integral part of everyday management practices. Microsoft Excel added PowerPivot and PowerPivot officially to facilitate this process with minimum cost, knowing that many business people are already familiar with MS Excel. This paper will design an introductory class to business intelligence (BI) using Excel PowerPivot. If an educator decides to adopt this paper for teaching an introductory BI class, students should have previous familiarity with Excel’s functions and formulas. This paper will focus on four significant phases all students need to complete in a three-credit class. First, students must understand the process of achieving small database normalization and how to bring these tables to Excel or develop them directly within Excel PowerPivot. This paper will walk the reader through these steps to complete the task of creating the normalization, along with the linking and bringing the tables and their relationships to Excel. Second, an introduction to Data Analysis Expression (DAX) will be discussed.

Introduction: It is not that difficult to realize the increase in the amount of data we have generated in the recent memory of our existence as a human race. To realize that more than 90% of the world’s data has been amassed in the past two years alone (Vidas M.) is to realize the need to manage such volume.

RDBMSs: Why Use an RDBMS

RDBMSs
• Relational Database Management Systems
• A way of saving and accessing data on persistent (disk) storage.
51 - RDBMS CSC309
Why Use an RDBMS
• Data Safety – data is immune to program crashes
• Concurrent Access – atomic updates via transactions
• Fault Tolerance – replicated dbs for instant failover on machine/disk crashes
• Data Integrity – aids to keep data meaningful
• Scalability – can handle small/large quantities of data in a uniform manner
• Reporting – easy to write SQL programs to generate arbitrary reports
Relational Model
• First published by E.F. Codd in 1970
• A relational database consists of a collection of tables
• A table consists of rows and columns
• each row represents a record
• each column represents an attribute of the records contained in the table
RDBMS Technology
• Client/Server Databases – Oracle, Sybase, MySQL, SQLServer
• Personal Databases – Access
• Embedded Databases – Pointbase
Client/Server Databases [diagram labels: client processes, tcp/ip connections, server process, disk i/o]
Inside the Client Process [diagram labels: application code, client API, db library, tcp/ip connection to server]
Pointbase [diagram labels: application code, client API, Pointbase lib., local file system]
Microsoft Access [diagram labels: Access app, Microsoft JET SQL DLL, local file system]
APIs to RDBMSs
• All are very similar
• A collection of routines designed to
– produce and send to the db engine an SQL statement
• an original ...
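
The slides above describe client APIs as routines that produce and send SQL statements to the database engine, and list atomic updates via transactions among the reasons to use an RDBMS. A minimal sketch of both points follows, using Python's standard `sqlite3` module as a stand-in embedded database (the slides name Pointbase, not SQLite). The `account` table, the `transfer` helper, and the sample balances are hypothetical.

```python
import sqlite3

# An in-memory embedded database; nothing is written to disk.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE account (id INTEGER PRIMARY KEY, owner TEXT, balance INTEGER)"
)
conn.executemany(
    "INSERT INTO account (owner, balance) VALUES (?, ?)",
    [("alice", 100), ("bob", 50)],
)
conn.commit()


def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: int) -> None:
    """Move `amount` from src to dst; both updates commit or neither does."""
    with conn:  # commits on success, rolls back if an exception escapes
        conn.execute(
            "UPDATE account SET balance = balance - ? WHERE owner = ?", (amount, src)
        )
        (balance,) = conn.execute(
            "SELECT balance FROM account WHERE owner = ?", (src,)
        ).fetchone()
        if balance < 0:
            raise ValueError("insufficient funds")
        conn.execute(
            "UPDATE account SET balance = balance + ? WHERE owner = ?", (amount, dst)
        )


transfer(conn, "alice", "bob", 30)       # succeeds and is committed
try:
    transfer(conn, "bob", "alice", 500)  # fails; the debit is rolled back
except ValueError:
    pass

balances = dict(conn.execute("SELECT owner, balance FROM account ORDER BY owner"))
print(balances)
```

Each `execute` call hands one SQL string plus its parameters to the engine, which is the shape the slides attribute to RDBMS APIs in general; the failed transfer leaves no partial debit behind.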

Business Intelligence and Column-Oriented Databases

Central European Conference on Information and Intelligent Systems. Page 12 of 344.

Business Intelligence and Column-Oriented Databases. Kornelije Rabuzin, Faculty of Organization and Informatics, University of Zagreb, Pavlinska 2, 42000 Varaždin, Croatia, [email protected]. Nikola Modrušan, NTH Mobile, Međimurska 28, 42000 Varaždin, Croatia, [email protected]

Abstract. In recent years, NoSQL databases are becoming more and more popular. We distinguish several different types of such databases and column-oriented databases are very important in this context, for sure. The purpose of this paper is to see how column-oriented databases can be used for data warehousing purposes and what the benefits of such an approach are. HBase as a data management system is used to store the data warehouse in a column-oriented format. Furthermore, we discuss how star schema can be modelled in HBase. Moreover, we test the performances that such a solution can provide and we compare them to relational database management system Microsoft SQL Server.

Keywords. Business Intelligence, Data Warehouse, Column-Oriented Database, Big Data, NoSQL

... popular document-oriented database systems is MongoDB. [Figure 1. JSON object [15]] Graph databases, on the other hand, rely on some segment of the graph theory. They are good to represent nodes (entities) and relationships among them. This is especially suitable to analyze social networks and some other scenarios. Key value databases are important as well: for a certain key you store (assign) a certain value. Document-oriented databases can be treated as key value as long as you know the document id. Here we skip the details as it would take too much time to discuss different systems [21].

Hannes Mühleisen

Large-Scale Statistics with MonetDB and R. Hannes Mühleisen. DAMDID 2015, 2015-10-13
About Me
• Postdoc at CWI Database architectures since 2012
• Amsterdam is nice. We have open positions.
• Special interest in data management for statistical analysis
• Various research & software projects in this space
Outline
• Column Store / MonetDB Introduction
• Connecting R and MonetDB
• Advanced Topics
• “R as a Query Language”
• “Capturing The Laws of Data Nature”
Column Stores / MonetDB Introduction
Postgres, Oracle, DB2, etc.:
Conceptional:
class         speed  flux
NX            1      3
Constitution  1      8
Galaxy        1      3
Defiant       1      6
Intrepid      1      1
Physical (on Disk): NX 1 3 Constitution 1 8 Galaxy 1 3 Defiant 1 6 Intrepid 1 1
Column Store (Compression!):
Conceptional: the same class / speed / flux table as above
Physical (on Disk): NX Constitution Galaxy Defiant Intrepid 1 1 1 1 1 3 8 3 6 1
What is MonetDB?
• Strict columnar architecture OLAP RDBMS (SQL)
• Started by Martin Kersten and Peter Boncz ~1994
• Free & open source, active development ongoing
• www.monetdb.org
Peter A. Boncz, Martin L. Kersten, and Stefan Manegold. 2008. Breaking the memory wall in MonetDB. Communications of the ACM 51, 12 (December 2008), 77-85. DOI=10.1145/1409360.1409380
MonetDB today
• Expanded C code
• MAL “DB assembly” & optimisers
• SQL to MAL compiler
• Memory-Mapped files
• Automatic indexing
Some MAL
• Optimisers run on MAL code
• Efficient Column-at-a-time implementations
EXPLAIN SELECT * FROM mtcars;
| X_2 := sql.mvc(); |
| X_3:bat[:oid,:oid] := sql.tid(X_2,"sys","mtcars"); |
| X_6:bat[:oid,:dbl] ...
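
The slides above show the same small table laid out physically by row (as in Postgres, Oracle, DB2) and by column, and flag compression as a benefit of the column layout. The sketch below reproduces both layouts with the slide's own values. The use of run-length encoding is an assumption chosen for illustration, since the slides say only "Compression!", and the helper name `run_length_encode` is hypothetical.

```python
from itertools import groupby
from typing import List, Tuple

# The table from the slides: (class, speed, flux).
rows = [
    ("NX", 1, 3),
    ("Constitution", 1, 8),
    ("Galaxy", 1, 3),
    ("Defiant", 1, 6),
    ("Intrepid", 1, 1),
]

# Row store: all values of one record are adjacent on disk.
row_layout = [value for row in rows for value in row]

# Column store: all values of one attribute are adjacent on disk.
names = ("class", "speed", "flux")
columns = {name: list(values) for name, values in zip(names, zip(*rows))}
column_layout = columns["class"] + columns["speed"] + columns["flux"]


def run_length_encode(values: List[int]) -> List[Tuple[int, int]]:
    """Collapse each run of equal adjacent values into a (value, count) pair."""
    return [(value, len(list(group))) for value, group in groupby(values)]


# Adjacent equal values in a column compress well; interleaved rows do not.
speed_rle = run_length_encode(columns["speed"])
flux_rle = run_length_encode(columns["flux"])

print(row_layout)
print(column_layout)
print(speed_rle)
```

The `speed` column collapses to a single pair because every value is 1, while `flux` has no runs and gains nothing, which is why column stores typically pick an encoding per column.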

LDBC Introduction and Status Update

8th Technical User Community meeting - LDBC. Peter Boncz, [email protected], ldbcouncil.org
LDBC Organization (non-profit): “sponsors” + non-profit members (FORTH, STI2) & personal members + Task Forces, volunteers developing benchmarks + TUC: Technical User Community (8 workshops, ~40 graph and RDF user case studies, 18 vendor presentations)
What does a benchmark consist of?
• Four main elements:
– data & schema: defines the structure of the data
– workloads: defines the set of operations to perform
– performance metrics: used to measure (quantitatively) the performance of the systems
– execution & auditing rules: defined to assure that the results from different executions of the benchmark are valid and comparable
• Software as Open Source (GitHub)
– data generator, query drivers, validation tools, ...
Audience
• For developers facing graph processing tasks
– recognizable scenario to compare merits of different products and technologies
• For vendors of graph database technology
– checklist of features and performance characteristics
• For researchers, both industrial and academic
– challenges in multiple choke-point areas such as graph query optimization and (distributed) graph analysis
SPB scope
• The scenario involves a media/publisher organization that maintains semantic metadata about its journalistic assets (articles, photos, videos, papers, books, etc.), also called Creative Works
• The Semantic Publishing Benchmark simulates:
– Consumption of RDF metadata (Creative Works)
– Updates of RDF metadata, related to Annotations
• Aims to be ...