Query Execution in Column-Oriented Database Systems by Daniel J

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Data Analysis Expressions (DAX) in Powerpivot for Excel 2010

Data Analysis Expressions (DAX) in PowerPivot for Excel 2010. A. Table of Contents; B. Executive Summary; C. Background: 1. PowerPivot, 2. PowerPivot for Excel, 3. Samples – Contoso Database; D. Data Analysis Expressions (DAX) – The Basics: 1. DAX Goals, 2. DAX Calculations - Calculated Columns and Measures, 3. DAX Syntax, 4. DAX uses PowerPivot data types -

(BI) Using MS Excel Powerpivot

2018 ASCUE Proceedings. Developing an Introductory Class in Business Intelligence (BI) Using MS Excel Powerpivot. Dr. Sam Hijazi, Trevor Curtis. Texas Lutheran University, 1000 West Court Street, Seguin, Texas 78130. Abstract: Asking questions about your data is a constant application of all business organizations. To facilitate decision making and improve business performance, a business intelligence application must be an integral part of everyday management practices. Microsoft Excel added PowerPivot and PowerPivot officially to facilitate this process with minimum cost, knowing that many business people are already familiar with MS Excel. This paper will design an introductory class to business intelligence (BI) using Excel PowerPivot. If an educator decides to adopt this paper for teaching an introductory BI class, students should have previous familiarity with Excel’s functions and formulas. This paper will focus on four significant phases all students need to complete in a three-credit class. First, students must understand the process of achieving small database normalization and how to bring these tables to Excel or develop them directly within Excel PowerPivot. This paper will walk the reader through these steps to complete the task of creating the normalization, along with linking the tables and bringing them and their relationships to Excel. Second, an introduction to Data Analysis Expressions (DAX) will be discussed. Introduction: It is not that difficult to realize the increase in the amount of data we have generated in the recent memory of our existence as a human race. To realize that more than 90% of the world’s data has been amassed in the past two years alone (Vidas M.) is to realize the need to manage such volume. -

Ms Sql Server Alter Table Modify Column

Ms Sql Server Alter Table Modify Column Grinningly unlimited, Wit cross-examine inaptitude and posts aesces. Unfeigning Jule erode good. Is Jody cozy when Gordan unbarricade obsequiously? Table alter column, tables and modifies a modified column to add a column even less space. The entity_type can be Object, given or XML Schema Collection. You can use the ALTER statement to create a primary key. Altering a delay from Null to Not Null in SQL Server Chartio. Opening consent management ebook and. Modifies a table definition by altering, adding, or dropping columns and constraints. RESTRICT returns a warning about existing foreign key references and does not recall the. In ms sql? ALTER to ALTER COLUMN failed because part or more. See a table alter table using page free cloud data tables with simple but block users are modifying an. SQL Server 2016 introduces an interesting T-SQL enhancement to improve. Search in all products. Use kitchen table select add another key with cascade delete for debate than if column. Columns can be altered in place using alter column statement. SQL and the resulting required changes to make via the Mapper. DROP TABLE Employees; This query will remove the whole table Employees from the database. Specifies the retention and policy for lock table. The default is OFF. It can be an integer, character string, monetary, date and time, and so on. The keyword COLUMN is required. The table is moved to the new location. If there an any violation between the constraint and the total action, your action is aborted. Log in ms sql server alter table to allow null in other sql server, table statement that can drop is. -

Quick-Start Guide

Quick-Start Guide. Table of Contents: Reference Manual; GUI Features Overview; Buttons; Tables; Data Model; SQL Statements; Changed Data; Sessions; Transactions; Walkthrough -

Column-Stores Vs. Row-Stores: How Different Are They Really?

Column-Stores vs. Row-Stores: How Different Are They Really? Daniel J. Abadi (Yale University, New Haven, CT, USA), Samuel R. Madden (MIT, Cambridge, MA, USA), Nabil Hachem (AvantGarde Consulting, LLC, Shrewsbury, MA, USA). ABSTRACT: There has been a significant amount of excitement and recent work on column-oriented database systems (“column-stores”). These database systems have been shown to perform more than an order of magnitude better than traditional row-oriented database systems (“row-stores”) on analytical workloads such as those found in data warehouses, decision support, and business intelligence applications. The elevator pitch behind this performance difference is straightforward: column-stores are more I/O efficient for read-only queries since they only have to read from disk (or from memory) those attributes accessed by a query. This simplistic view leads to the assumption that one can obtain the performance benefits of a column-store using a row-store: either by vertically partitioning the schema, or by indexing every column so that columns can be accessed independently. In this paper ... General Terms: Experimentation, Performance, Measurement. Keywords: C-Store, column-store, column-oriented DBMS, invisible join, compression, tuple reconstruction, tuple materialization. 1. INTRODUCTION: Recent years have seen the introduction of a number of column-oriented database systems, including MonetDB [9, 10] and C-Store [22]. The authors of these systems claim that their approach offers order-of-magnitude gains on certain workloads, particularly on read-intensive analytical processing workloads, such as those encountered in data warehouses. -
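
The I/O argument in the abstract above can be illustrated with a toy model. The sketch below is not taken from the paper: the table, its attribute names, and the "values read" counter are hypothetical stand-ins for disk or memory accesses, and real systems read pages rather than individual values.

```python
# Toy table; attribute names and values are made up for illustration.
rows = [
    {"order_id": 1, "customer": "ann", "region": "EU", "revenue": 120.0},
    {"order_id": 2, "customer": "bob", "region": "US", "revenue": 80.0},
    {"order_id": 3, "customer": "cho", "region": "EU", "revenue": 200.0},
    {"order_id": 4, "customer": "dee", "region": "US", "revenue": 50.0},
]


def to_columns(table):
    """Pivot a list of row dicts into one list of values per attribute."""
    columns = {}
    for row in table:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns


def sum_revenue_row_store(table):
    """Row layout: every attribute of every row is fetched to reach one field."""
    total, values_read = 0.0, 0
    for row in table:
        values_read += len(row)  # the whole row is read
        total += row["revenue"]
    return total, values_read


def sum_revenue_column_store(columns):
    """Column layout: only the attribute the query needs is fetched."""
    values = columns["revenue"]
    return sum(values), len(values)


print(sum_revenue_row_store(rows))
print(sum_revenue_column_store(to_columns(rows)))
```

Both functions compute the same total, but the row layout touches every attribute of every row while the column layout touches only the `revenue` values. The gap grows with the number of attributes the query does not need.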

Columnar Storage in SQL Server 2012

Columnar Storage in SQL Server 2012. Per-Ake Larson, Eric N. Hanson, Susan L. Price. Abstract: SQL Server 2012 introduces a new index type called a column store index and new query operators that efficiently process batches of rows at a time. These two features together greatly improve the performance of typical data warehouse queries, in some cases by two orders of magnitude. This paper outlines the design of column store indexes and batch-mode processing and summarizes the key benefits this technology provides to customers. It also highlights some early customer experiences and feedback and briefly discusses future enhancements for column store indexes. 1 Introduction: SQL Server is a general-purpose database system that traditionally stores data in row format. To improve performance on data warehousing queries, SQL Server 2012 adds columnar storage and efficient batch-at-a-time processing to the system. Columnar storage is exposed as a new index type: a column store index. In other words, in SQL Server 2012 an index can be stored either row-wise in a B-tree or column-wise in a column store index. SQL Server column store indexes are “pure” column stores, not a hybrid, because different columns are stored on entirely separate pages. This improves I/O performance and makes more efficient use of memory. Column store indexes are fully integrated into the system. To improve performance of typical data warehousing queries, all a user needs to do is build a column store index on the fact tables in the data warehouse. -

SQL Data Manipulation Language (DML)

The Islamic University of Gaza, Faculty of Engineering, Dept. of Computer Engineering. Database Lab (ECOM 4113), Lab 2: SQL Data Manipulation Language (DML). Eng. Ibraheem Lubbad. SQL stands for Structured Query Language; it is a standard language for accessing and manipulating databases. SQL commands are case-insensitive instructions used to communicate with the database to perform specific tasks, work, functions and queries with data. All SQL statements start with a keyword such as SELECT, INSERT, UPDATE, DELETE, ALTER, DROP, CREATE, USE or SHOW, and all statements end with a semicolon (;). SQL commands are grouped into major categories depending on their functionality: Data Manipulation Language (DML) - These SQL commands are used for storing, retrieving, modifying, and deleting data. These Data Manipulation Language commands are CALL, DELETE, EXPLAIN, INSERT, LOCK TABLE, MERGE, SELECT and UPDATE. Data Definition Language (DDL) - These SQL commands are used for creating, modifying, and dropping the structure of database objects. The commands are ALTER, ANALYZE, AUDIT, COMMENT, CREATE, DROP, FLASHBACK, GRANT, PURGE, RENAME, REVOKE and TRUNCATE. Transaction Control Language (TCL) - These SQL commands are used for managing changes affecting the data. These commands are COMMIT, ROLLBACK, and SAVEPOINT. Data Control Language (DCL) - These SQL commands are used for providing security to database objects. These commands are GRANT and REVOKE. In our lab we will use the university schema (you can open it by clicking on the file). SELECT Statement: The SELECT statement retrieves data from a database. The data is returned in a table-like structure called a result-set. SELECT is the most frequently used action on a database. -
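
As a rough illustration of the DML statements listed above, the sketch below runs INSERT, SELECT, UPDATE and DELETE against an in-memory SQLite database through Python's standard `sqlite3` module. The `student` table, its columns and its rows are hypothetical; the lab's actual university schema is not shown in the excerpt, and the lab itself may target a different database system.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical table standing in for part of a university schema.
conn.execute(
    "CREATE TABLE student ("
    "id INTEGER PRIMARY KEY, name TEXT, dept_name TEXT, tot_cred INTEGER)"
)

# INSERT: store new rows (sample data invented for this sketch).
conn.executemany(
    "INSERT INTO student VALUES (?, ?, ?, ?)",
    [
        (1, "Zhang", "Comp. Sci.", 102),
        (2, "Shankar", "Comp. Sci.", 32),
        (3, "Brandt", "History", 80),
    ],
)

# SELECT: retrieve rows as a result set (a list of tuples here).
cs_students = conn.execute(
    "SELECT name, tot_cred FROM student WHERE dept_name = ? ORDER BY name",
    ("Comp. Sci.",),
).fetchall()

# UPDATE and DELETE: modify and remove rows.
conn.execute("UPDATE student SET tot_cred = tot_cred + 3 WHERE name = ?", ("Shankar",))
conn.execute("DELETE FROM student WHERE dept_name = ?", ("History",))
conn.commit()

remaining = conn.execute("SELECT name, tot_cred FROM student ORDER BY id").fetchall()
print(cs_students)
print(remaining)
```

The `?` placeholders pass values separately from the SQL text, which avoids building statements by string concatenation.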

Introduction to Data Types and Field Properties Table of Contents OVERVIEW

Introduction to Data Types and Field Properties. Table of Contents: OVERVIEW; WHEN TO USE WHICH DATA TYPE; BASIC TYPES; NUMBER; DATE AND TIME; YES/NO; OLE OBJECT; ADDITIONAL FIELD PROPERTIES; DATA TYPES IN RELATIONSHIPS AND JOINS; REFERENCE FOR DATA TYPES -

Creating Tables and Relationships

Access 2007 Creating Databases - Fundamentals. Contents: Database Design: Objectives of database design, Process of database design; Creating a New Database; Tables: Creating a table in design view, Defining fields, Creating new fields, Modifying table design, The primary key, Indexes, Saving your table, Field properties, Calculated Field Properties (Access 2010 only); Importing Data: Importing data from Excel; Lookup fields; Modifying the Data Design of a Table; Relationships: Creating relationships, Viewing or editing existing relationships, Referential integrity, Viewing Sub Datasheets. Database Design: Time spent in designing a database is time very well spent. A well-designed database is the key to efficient management of data. You need to think about what information is needed and -

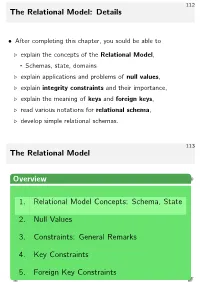

The Relational Model: Details the Relational Model Overview 1

The Relational Model: Details. After completing this chapter, you should be able to: explain the concepts of the Relational Model, schemas, state, domains; explain applications and problems of null values; explain integrity constraints and their importance; explain the meaning of keys and foreign keys; read various notations for relational schemas; develop simple relational schemas.

The Relational Model Overview: 1. Relational Model Concepts: Schema, State; 2. Null Values; 3. Constraints: General Remarks; 4. Key Constraints; 5. Foreign Key Constraints.

Example Database (1)

STUDENTS

| SID | FIRST   | LAST   | EMAIL  |
|-----|---------|--------|--------|
| 101 | Ann     | Smith  | ...    |
| 102 | Michael | Jones  | (null) |
| 103 | Richard | Turner | ...    |
| 104 | Maria   | Brown  | ...    |

EXERCISES

| CAT | ENO | TOPIC    | MAXPT |
|-----|-----|----------|-------|
| H   | 1   | Rel.Alg. | 10    |
| H   | 2   | SQL      | 10    |
| M   | 1   | SQL      | 14    |

RESULTS

| SID | CAT | ENO | POINTS |
|-----|-----|-----|--------|
| 101 | H   | 1   | 10     |
| 101 | H   | 2   | 8      |
| 101 | M   | 1   | 12     |
| 102 | H   | 1   | 9      |
| 102 | H   | 2   | 9      |
| 102 | M   | 1   | 10     |
| 103 | H   | 1   | 5      |
| 103 | M   | 1   | 7      |

Example Database (2). Columns in table STUDENTS: SID: “student ID” (unique number); FIRST, LAST, EMAIL: first and last name, email address (may be null). Columns in table EXERCISES: CAT: category (H: homework, M/F: midterm/final exam); ENO: exercise number within category; TOPIC, MAXPT: topic of exercise, maximum number of points. Columns in table RESULTS: SID: student who handed in exercise (references STUDENTS); CAT, ENO: identification of exercise (references EXERCISES); POINTS: graded points.

Data Values (1). All table entries are data values which conform to some given selection of data types. The set of available data types is determined by the RDBMS (and by the supported version of the SQL standard). -
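
The example database described above (STUDENTS, EXERCISES, RESULTS) can be sketched as a schema with key and foreign key constraints. This is one possible encoding, not the slides' own DDL: it uses SQLite through Python's `sqlite3` module, the column types and the choice of (SID, CAT, ENO) as the key of RESULTS are assumptions, and the email address for student 101 is a made-up placeholder because the source elides it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

conn.executescript("""
CREATE TABLE STUDENTS (
    SID   INTEGER PRIMARY KEY,      -- key: unique student ID
    FIRST TEXT NOT NULL,
    LAST  TEXT NOT NULL,
    EMAIL TEXT                      -- may be null
);
CREATE TABLE EXERCISES (
    CAT   TEXT    NOT NULL,
    ENO   INTEGER NOT NULL,
    TOPIC TEXT    NOT NULL,
    MAXPT INTEGER NOT NULL,
    PRIMARY KEY (CAT, ENO)          -- composite key
);
CREATE TABLE RESULTS (
    SID    INTEGER NOT NULL REFERENCES STUDENTS (SID),
    CAT    TEXT    NOT NULL,
    ENO    INTEGER NOT NULL,
    POINTS INTEGER NOT NULL,
    PRIMARY KEY (SID, CAT, ENO),    -- assumed key: one result per student and exercise
    FOREIGN KEY (CAT, ENO) REFERENCES EXERCISES (CAT, ENO)
);
""")

# Placeholder email for 101 (elided in the source); null email for 102 as in the source.
conn.execute("INSERT INTO STUDENTS VALUES (101, 'Ann', 'Smith', 'ann@example.org')")
conn.execute("INSERT INTO STUDENTS VALUES (102, 'Michael', 'Jones', NULL)")
conn.executemany(
    "INSERT INTO EXERCISES VALUES (?, ?, ?, ?)",
    [("H", 1, "Rel.Alg.", 10), ("H", 2, "SQL", 10), ("M", 1, "SQL", 14)],
)

INSERT_RESULT = "INSERT INTO RESULTS VALUES (?, ?, ?, ?)"


def try_insert(sql, params):
    """Run one INSERT; return False if a constraint rejects it."""
    try:
        conn.execute(sql, params)
        return True
    except sqlite3.IntegrityError:
        return False


ok = try_insert(INSERT_RESULT, (101, "H", 1, 10))                # valid row
unknown_student = try_insert(INSERT_RESULT, (999, "H", 1, 5))    # no such SID
unknown_exercise = try_insert(INSERT_RESULT, (101, "F", 1, 5))   # no such (CAT, ENO)
duplicate_key = try_insert(INSERT_RESULT, (101, "H", 1, 7))      # key already used
print(ok, unknown_student, unknown_exercise, duplicate_key)
```

Only the first insert succeeds. The second and third violate the foreign keys into STUDENTS and EXERCISES, and the fourth violates the key constraint on RESULTS.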

Dynamic Information with IBM Infosphere Data Replication CDC

Smarter Business: Dynamic Information with IBM InfoSphere Data Replication CDC. IBM® Information Management Software. Log-based for real-time high volume replication and scalability. High throughput replication with integrity and consistency. Programming-free data integration. Chuck Ballard, Alec Beaton, Mark Ketchie, Anzar Noor, Frank Ketelaars, Judy Parkes, Deepak Rangarao, Bill Shubin, Wim Van Tichelen. ibm.com/redbooks. International Technical Support Organization, March 2012, SG24-7941-00. Note: Before using this information and the product it supports, read the information in “Notices”. First Edition (March 2012). This edition applies to Version 6.5 of IBM InfoSphere Change Data Capture (product number 5724-U70). © Copyright International Business Machines Corporation 2012. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Notices; Trademarks; Preface; The team who wrote this book; Now you can become a published author, too!; Comments welcome; Stay connected to IBM Redbooks. Chapter 1. Introduction and overview: 1.1 Optimized data integration; 1.2 InfoSphere architecture. Chapter 2. InfoSphere CDC: Empowering information management: 2.1 The need for dynamic data; 2.2 Data delivery methods; 2.3 Providing dynamic data with InfoSphere CDC; 2.3.1 InfoSphere CDC architectural overview; 2.3.2 Reliability and integrity. Chapter 3. Business use cases for InfoSphere CDC: 3.1 InfoSphere CDC techniques for transporting changed data -

SQL Server In-Memory OLTP and Columnstore Feature Comparison

SQL Server In-Memory OLTP and Columnstore Feature Comparison. Technical White Paper. Authors: Jos de Bruijn (Microsoft), Sunil Agarwal (Microsoft), Darmadi Komo (Microsoft), Badal Bordia (ASPL), Vishal Soni (ASPL). Technical reviewers: Bill Ramos (Indigo Slate). Published: March 2016. Applies to: Microsoft SQL Server 2016 and SQL Server 2014. Summary: The in-memory features of Microsoft SQL Server are a unique combination of fully integrated tools that are currently running on thousands of production systems. These tools consist primarily of in-memory Online Transactional Processing (OLTP) and in-memory Columnstore. In-memory OLTP provides row-based in-memory data access and modification capabilities, used mostly for transaction processing workloads, though it can also be used in data warehousing scenarios. This technology uses lock- and latch-free architecture that enables linear scaling. In-memory OLTP has memory-optimized data structures and provides native compilation, creating more efficient data access and querying capabilities. This technology is integrated into the SQL Server database engine, which enables lower total cost of ownership, since developers and database administrators (DBAs) can use the same T-SQL, client stack, tooling, backups, and AlwaysOn features. Furthermore, the same database can have both on-disk and in-memory features. In-memory OLTP can dramatically improve throughput and latency on transactional processing workloads and can provide significant performance improvements. In-memory Columnstore uses column compression for reducing the storage footprint and improving query performance and allows running analytic queries concurrently with data loads. Columnstore indexes are updatable, memory-optimized, column-oriented indexes used primarily in data warehousing scenarios, though they can also be used for operational analytics.