ED441402.Pdf

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


The Charismatic Leadership and Cultural Legacy of Stan Lee

REINVENTING THE AMERICAN SUPERHERO: THE CHARISMATIC LEADERSHIP AND CULTURAL LEGACY OF STAN LEE Hazel Homer-Wambeam Junior Individual Documentary Process Paper: 499 Words “A different house of worship / A different color skin / A piece of land that’s coveted / And the drums of war begin.” -Stan Lee, 1970 THESIS As the comic book industry was collapsing during the 1950s and 60s, Stan Lee utilized his charismatic leadership style to reinvent and revive the superhero phenomenon. By leading the industry into the “Marvel Age,” Lee has left a multilayered legacy. Examples of this include raising awareness of social issues, shaping contemporary pop-culture, teaching literacy, giving people hope and self-confidence in the face of adversity, and leaving behind a multibillion dollar industry that employs thousands of people. TOPIC I was inspired to learn about Stan Lee after watching my first Marvel movie last spring. I was never interested in superheroes before this project, but now I have become an expert on the history of Marvel and have a newfound love for the genre. Stan Lee’s entire personal collection is archived at the University of Wyoming American Heritage Center in my hometown. It contains 196 boxes of interviews, correspondence, original manuscripts, photos and comics from the 1920s to today. This was an amazing opportunity to obtain primary resources. RESEARCH My most important primary resource was the phone interview I conducted with Stan Lee himself, now 92 years old. It was a rare opportunity that few people have had, and quite an honor! I use clips of Lee’s answers in my documentary. -

Here Comes Television

September 1997 Vol. 2 No. 6 Here Comes Television Fall TV Preview France’s Expanding Channels SIGGRAPH Review Korea’s Boom Dinner with MTV’s Abby Terkuhle and CTW’s Arlene Sherman Table of Contents September 1997 Vol. 2, No. 6 4 Editor’s Notebook Aah, television, our old friend. What madness the power of a child with a remote control instills in us... 6 Letters: [email protected] TELEVISION 8 A Conversation With: Arlene Sherman and Abby Terkuhle Mo Willems hosts a conversation over dinner with CTW’s Arlene Sherman and MTV’s Abby Terkuhle. What does this unlikely duo have in common? More than you would think! 15 CTW and MTV: Shorts of Influence The impact that CTW and MTV has had on one another, the industry and beyond is the subject of Chris Robinson’s in-depth investigation. 21 Tooning in the Fall Season A new splash of fresh programming is soon to hit the airwaves. In this pivotal year of FCC rulings and vertical integration, let’s see what has been produced. 26 Saturday Morning Bonanza: The New Crop for the Kiddies The incurable, couch potato Martha Day decides what she’s going to watch on Saturday mornings in the U.S. 29 Mushrooms After the Rain: France’s Children’s Channels As a crop of new children’s channels springs up in France, Marie-Agnès Bruneau depicts the new players, in both the satellite and cable arenas, during these tumultuous times. A fierce competition is about to begin... 33 The Korean Animation Explosion Milt Vallas reports on Korea’s growth from humble beginnings to big business. -

Table of Contents

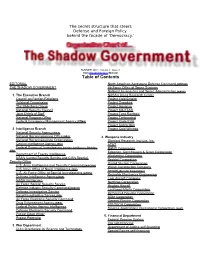

The secret structure that steers Defense and Foreign Policy behind the facade of 'Democracy.' SUMMER 2001 - Volume 1, Issue 3 from TrueDemocracy Website Table of Contents EDITORIAL North American Aerospace Defense Command (NORAD) THE SHADOW GOVERNMENT Air Force Office of Space Systems National Aeronautics and Space Administration (NASA) 1. The Executive Branch NASA's Ames Research Center Council on Foreign Relations Project Cold Empire Trilateral Commission Project Snowbird The Bilderberg Group Project Aquarius National Security Council Project MILSTAR Joint Chiefs of Staff Project Tacit Rainbow National Program Office Project Timberwind Federal Emergency Management Agency (FEMA) Project Code EVA Project Cobra Mist 2. Intelligence Branch Project Cold Witness National Security Agency (NSA) National Reconnaissance Office (NRO) 4. Weapons Industry National Reconnaissance Organization Stanford Research Institute, Inc. Central Intelligence Agency (CIA) AT&T Federal Bureau of Investigation , Counter Intelligence Division RAND Corporation (FBI) Edgerton, Germhausen & Greer Corporation Department of Energy Intelligence Wackenhut Corporation NSA's Central Security Service and CIA's Special Bechtel Corporation Security Office United Nuclear Corporation U.S. Army Intelligence and Security Command (INSCOM) Walsh Construction Company U.S. Navy Office of Naval Intelligence (ONI) Aerojet (Genstar Corporation) U.S. 
Air Force Office of Special Investigations (AFOSI) Reynolds Electronics Engineering Defense Intelligence Agency (DIA) Lear Aircraft Company NASA Intelligence Northrop Corporation Air Force Special Security Service Hughes Aircraft Defense Industry Security Command (DISCO) Lockheed-Martin Corporation Defense Investigative Service McDonnell-Douglas Corporation Naval Investigative Service (NIS) BDM Corporation Air Force Electronic Security Command General Electric Corporation Drug Enforcement Agency (DEA) PSI-TECH Corporation Federal Police Agency Intelligence Science Applications International Corporation (SAIC) Defense Electronic Security Command Project Deep Water 5. -

Central Intelligence Agency (CIA) Freedom of Information Act (FOIA) Case Log October 2000 - April 2002

Description of document: Central Intelligence Agency (CIA) Freedom of Information Act (FOIA) Case Log October 2000 - April 2002 Requested date: 2002 Release date: 2003 Posted date: 08-February-2021 Source of document: Information and Privacy Coordinator Central Intelligence Agency Washington, DC 20505 Fax: 703-613-3007 Filing a FOIA Records Request Online The governmentattic.org web site (“the site”) is a First Amendment free speech web site and is noncommercial and free to the public. The site and materials made available on the site, such as this file, are for reference only. The governmentattic.org web site and its principals have made every effort to make this information as complete and as accurate as possible, however, there may be mistakes and omissions, both typographical and in content. The governmentattic.org web site and its principals shall have neither liability nor responsibility to any person or entity with respect to any loss or damage caused, or alleged to have been caused, directly or indirectly, by the information provided on the governmentattic.org web site or in this file. The public records published on the site were obtained from government agencies using proper legal channels. Each document is identified as to the source. Any concerns about the contents of the site should be directed to the agency originating the document in question. GovernmentAttic.org is not responsible for the contents of documents published on the website. 1 Oct 2000 - 30 April 2002 Creation Date Requester Last Name Case Subject 36802.28679 STRANEY TECHNOLOGICAL GROWTH OF INDIA; HONG KONG; CHINA AND WTO 36802.2992 CRAWFORD EIGHT DIFFERENT REQUESTS FOR REPORTS REGARDING CIA EMPLOYEES OR AGENTS 36802.43927 MONTAN EDWARD GRADY PARTIN 36802.44378 TAVAKOLI-NOURI STEPHEN FLACK GUNTHER 36810.54721 BISHOP SCIENCE OF IDENTITY FOUNDATION 36810.55028 KHEMANEY TI LEAF PRODUCTIONS, LTD. -

Supplemental Memo on DOJ Grantmaking

HENRY A. WAXMAN, CALIFORNIA, TOM DAVIS, VIRGINIA, CHAIRMAN RANKING MINORITY MEMBER TOM LANTOS, CALIFORNIA ONE HUNDRED TENTH CONGRESS DAN BURTON, INDIANA EDOLPHUS TOWNS, NEW YORK CHRISTOPHER SHAYS, CONNECTICUT PAUL E. KANJORSKI, PENNSYLVANIA JOHN M. McHUGH, NEW YORK CAROLYN B. MALONEY, NEW YORK JOHN L. MICA, FLORIDA ELIJAH E. CUMMINGS, MARYLAND Congress of the United States MARK E. SOUDER, INDIANA DENNIS J. KUCINICH, OHIO TODD RUSSELL PLATTS, PENNSYLVANIA DANNY K. DAVIS, ILLINOIS CHRIS CANNON, UTAH JOHN F. TIERNEY, MASSACHUSETTS House of Representatives JOHN J. DUNCAN, JR., TENNESSEE WM. LACY CLAY, MISSOURI MICHAEL R. TURNER, OHIO DIANE E. WATSON, CALIFORNIA DARRELL E. ISSA, CALIFORNIA STEPHEN F. LYNCH, MASSACHUSETTS KENNY MARCHANT, TEXAS BRIAN HIGGINS, NEW YORK COMMITTEE ON OVERSIGHT AND GOVERNMENT REFORM LYNN A. WESTMORELAND, GEORGIA JOHN A. YARMUTH, KENTUCKY PATRICK T. McHENRY, NORTH CAROLINA BRUCE L. BRALEY, IOWA RAYBURN HOUSE OFFICE BUILDING VIRGINIA FOXX, NORTH CAROLINA ELEANOR HOLMES NORTON, 2157 BRIAN P. BILBRAY, CALIFORNIA DISTRICT OF COLUMBIA BILL SALI, IDAHO BETTY McCOLLUM, MINNESOTA WASHINGTON, DC 20515-6143 JIM JORDAN, OHIO JIM COOPER, TENNESSEE CHRIS VAN HOLLEN, MARYLAND MAJORITY (202) 225-5051 PAUL W. HODES, NEW HAMPSHIRE FACSIMILE (202) 225-4784 CHRISTOPHER S. MURPHY, CONNECTICUT MINORITY (202) 225-5074 JOHN P. SARBANES, MARYLAND PETER WELCH, VERMONT www.oversight.house.gov MEMORANDUM June 19, 2008 To: Members of the Committee on Oversight and Government Reform Fr: Committee on Oversight and Government Reform, Majority Staff Re: Supplemental Information for Full Committee Hearing on Department of Justice Grantmaking On Thursday, June 19, 2008, at 10:00 a.m. in room 2154 of the Rayburn House Office Building, the full Committee will hold a hearing on grantmaking practices at the Department of Justice. -

„JETIX“ Aktenzeichen: KEK 503 Beschluss in Der Rundfu

Application by Jetix Europe GmbH for a licence for the special-interest television channel “JETIX” File reference: KEK 503 Decision In the broadcasting matter of Jetix Europe GmbH, represented by its managing directors Jürgen Hinz, Stefan Kastenmüller, Dene Stratton and Paul D. Taylor, Infanteriestraße 19/6, 80797 München, – Applicant – concerning the licence for nationwide broadcasting of the special-interest television channel “JETIX”, the Kommission zur Ermittlung der Konzentration im Medienbereich (KEK), acting on the submission of the Bayerische Landeszentrale für neue Medien (BLM) of 02.06.2008, decided at its meeting on 19.08.2008, with the participation of its members Prof. Dr. Sjurts (Chair), Prof. Dr. Huber, Dr. Lübbert and Prof. Dr. Mailänder: No grounds relating to the safeguarding of diversity of opinion in television preclude the licence that Jetix Europe GmbH applied for, by letter of 21.05.2008 to the Bayerische Landeszentrale für neue Medien (BLM), to broadcast the nationally distributed special-interest television channel JETIX. 2 Grounds I Facts 1 Licence application By letter of 21.05.2008, the applicant applied to the BLM for an extension by a further eight years of the licence for the special-interest television channel JETIX, which expires on 30.09.2008. By letter of 02.06.2008, the BLM submitted the application to the KEK for review under media concentration law. 2 Programme structure and distribution 2.1 JETIX is a German-language, digital special-interest channel for children with a focus on entertainment. Action, comedy and adventure formats are broadcast daily from 06:00 to 19:45. The target group is children aged between 6 and 14. 2.2 JETIX is distributed as an encrypted, digital pay-TV service via the programme platform of Premiere Fernsehen GmbH & Co. -

Disney Channel’s That’s So Raven Is Classified in BARB as ‘Entertainment Situation Comedy US’

Children’s television output analysis 2003-2006 Publication date: 2nd October 2007 ©Ofcom Contents • Introduction • Executive summary • Children’s subgenre range • Children’s subgenre range by channel • Children’s subgenre range by daypart: PSB main channels • Appendix Introduction • This annex is published as a supplement to Section 2 ‘Broadcaster Output’ of Ofcom’s report The future of children’s television programming. • It provides detail on individual channel output by children’s sub-genre for the PSB main channels, the BBC’s dedicated children’s channels, CBBC and CBeebies, and the commercial children’s channels, as well as detail on genre output by day-part for the PSB main channels. (It does not include any children’s output on other commercial generalist non-terrestrial channels, such as GMTV, ABC1, Sky One.) • This output analysis examines the genre range within children’s programming and looks at how this range has changed since 2003. It is based on the BARB Children’s genre classification only and uses the BARB subgenres of Children’s Drama, Factual, Cartoons, Light entertainment/quizzes, Pre-school and Miscellaneous. • It is important to note that the BARB genre classifications have some drawbacks: – Not all programme output that is targeted at children is classified as Children’s within BARB. Some shows targeted at younger viewers, either within children’s slots on the PSB main channels or on the dedicated children’s channels, are not classified as Children’s. For example, Disney Channel’s That’s So Raven is classified in BARB as ‘Entertainment Situation Comedy US’. This output analysis is not based on the total output of each specific children’s channel, e.g. -

Gbruen Knujon Mitspamconf2

The Future of Anti-Spam: A Blueprint for New Internet Abuse Tools Garth Bruen, CEO Knujon.com LLC [email protected] Process and Document Map Abstract I. Introduction II. Failures and Limitations III. KnujOn Principles IV. Data Collection = Consumer Enfranchisement V. Sort, Categorize, Analyze VI. Redefining the Scope VII. Addressing the Support Structure VIII. Fixing Policy and Technology IX. Use the Law When Called For X. Brand Protection XI. The Daily Tally XII. Non-URL Successes XIII. Conclusion: What is Needed Next (Map of the Process and the Document) Abstract: It is this author’s contention that the efforts, technology, funding, and even critical thought in anti-spam development have been incorrectly focused on the email itself. In essence, the Internet security community has been “looking through the wrong end of the telescope.” Resources poured into larger and larger filtering engines and algorithms have failed to reduce the volume and flow of unsolicited email. In fact, spam volumes have generally increased since filtering was declared the de facto solution. The problem is not really about flaws in SMTP (simple mail transfer protocol), or email clients, or even email security in general. Nor is the problem solely attributed to botnets and malware. Garth Bruen and Dr. Robert Bruen have developed a solution that is based on mass abuse data collection, data analysis, policy enforcement, public reporting, and infrastructure enhancement. The ultimate key to solving the spam problem is about blocking transactions, stopping the flow of money to criminals who hire spammers to market their illicit products. Doing this through the existing, but enhanced, policy structure addresses the issue without exaggerated costs or reinventing the Internet. -

Congressional Record—Senate S9017

June 26, 1995 CONGRESSIONAL RECORD — SENATE S9017
the problems of decolonization. It has outlived its purpose. Rather than search for a new purpose for this Council, we should ask whether it should exist at all. Mr. President, the other major area for reform is in our thinking about what the United Nations is and what its role should be in American foreign policy. We cannot expect the United Nations to be clearer in purpose than is its most powerful member state. At its core, the United Nations is a collection of sovereign states and is beholden to them for guidance, funding,
After this history, the image of President Nelson Mandela—a man imprisoned for 27 years in his fight against apartheid—handing the World Cup trophy to the white captain of the rugby team is indeed a powerful symbol of the dramatic changes in South Africa. Throughout the country, whites and blacks alike celebrated the victory of the Springboks, the mascot of the national team. Mr. President, I join with the international community in congratulating the people of South Africa on winning the rugby World Cup.
puter networks, I believe Congress must act and do so in a constitutional manner to help parents who are under assault in this day and age. There is a flood of vile pornography, and we must act to stem this growing tide, because, in the words of Judge Robert Bork, it incites perverted minds. I refer to Judge Bork from the Spectator article that I have permission to insert in the RECORD. My bill, again, is S. 892, and provides just this sort of constitutional, narrowly focused assistance in protecting -

Understanding the Business of Online Pharmaceutical Affiliate Programs

PharmaLeaks: Understanding the Business of Online Pharmaceutical Affiliate Programs Damon McCoy, Andreas Pitsillidis∗, Grant Jordan∗, Nicholas Weaver∗†, Christian Kreibich∗†, Brian Krebs‡, Geoffrey M. Voelker∗, Stefan Savage∗, Kirill Levchenko∗ Department of Computer Science, George Mason University; ∗Department of Computer Science and Engineering, University of California, San Diego; †International Computer Science Institute, Berkeley, CA; ‡KrebsOnSecurity.com
Abstract Online sales of counterfeit or unauthorized products drive a robust underground advertising industry that includes email spam, “black hat” search engine optimization, forum abuse and so on. Virtually everyone has encountered enticements to purchase drugs, prescription-free, from an online “Canadian Pharmacy.” However, even though such sites are clearly economically motivated, the shape of the underlying business enterprise is not well understood precisely because it is “underground.” In this paper we exploit a rare opportunity to view three such organizations—the GlavMed, SpamIt and RX-Promotion pharmaceutical affiliate programs—from the inside.
driven by competition between criminal organizations), a broad corpus of ground truth data has become available. In particular, in this paper we analyze the content and implications of low-level databases and transactional metadata describing years of activity at the GlavMed, SpamIt and RX-Promotion pharmaceutical affiliate programs. By examining hundreds of thousands of orders, comprising a settled revenue totaling over US$170M, we are able to provide comprehensive documentation on three key aspects of underground advertising activity: Customers. We provide detailed analysis on the consumer demand for Internet-advertised counterfeit pharmaceuticals, covering customer demographics, product selection (including an examination of drug abuse as a -

Disney Fall 2020

DISNEY BOOK GROUP FALL 2020 WINTER 2021 HYPERION BOOKS FOR CHILDREN An Elephant & Piggie Biggie! Volume 3 Mo Willems Summary Catalog Copy: From award-winning, best-selling author and illustrator Mo Willems comes a bind-up of five Elephant & Piggie adventures to help foster early readers' problem-solving skills. Titles include: There is a Bird on Your Head!; Are You Ready to Play Outside?; Elephants Cannot Dance!; Should I Share My Ice Cream?; and I Will Take a Nap! Contributor Bio Author Bio: Hyperion Books for Children Mo Willems, a #1 New York Times best-selling author and illustrator, has been awarded three Caldecott 9781368057158 Honors for his picture books and two Theodor Seuss Geisel Medals and five Geisel Honors for his celebrated On Sale Date: 9/22/20 $16.99 USD/$22.99 CAD Elephant & Piggie series. Mo is the inaugural Education Artist-in-Residence at the John F. Kennedy Center for Hardcover Paper over boards the Performing Arts. He began his career as a writer and animator on Sesame Street, where he garnered six 320 Pages Emmy Awards. He lives in Massachusetts with his family. Learn more at pigeonpresents.com. Carton Qty: 20 Print Run: 250K Illustrator Bio: Ages 4 to 8, Grades P to 3 Juvenile Fiction / Animals JUV002080 Series: An Elephant and Piggie Book Unlimited Squirrels I Want to Sleep Under the Stars! Mo Willems Summary Catalog Copy: Mo Willems, creator of the revolutionary, award-winning, best-selling Elephant & Piggie books, has another breakout beginning-reader series. An ensemble cast of Squirrels, Acorns, and pop-in guests hosts a page-turning extravaganza. -

TEN EIE VICTORIES Protecting and Defending Children and Families in the Digital World

TEN EIE VICTORIES Protecting and Defending Children and Families in the Digital World #1 - The National Safe WiFi Campaign Turns Up the Heat on Starbucks EIE called on Corporate America to filter porn and child porn on its public WiFi. 50,000 petitions and 75 partner organizations encouraged McDonald’s and Starbucks to lead Corporate America in this effort. McDonald’s is now filtering WiFi in 14,000 stores nationwide and Subway in its company-owned stores. On November 26, EIE turned up the heat on Starbucks in a national media and petition campaign for not keeping its promise to filter pornography and child porn on its WiFi; Starbucks responded to EIE’s campaign within 24 hours and once again publicly pledged (via businessinsider.com) to offer safe WiFi beginning in 2019. The media campaign went viral with hundreds of media reports worldwide in the first week alone, including Forbes, CBS, NBC, Newsweek, The Washington Post and many others. Next Steps: Hold Starbucks accountable to follow through on its latest commitment. Secure more commitments from restaurants, hotels/resorts, universities, retailers, shopping malls, libraries, the travel industry (planes, trains, buses), and churches, both nationally and globally, to provide safe WiFi. #2 - The Children’s Internet Safety Presidential Pledge, State Attorney Generals and Governor’s Pledges Donald Trump signed EIE’s historic and bipartisan Children’s Internet Safety Presidential Pledge (and Hillary Clinton sent a letter of support) agreeing to enforce the existing federal obscenity, child pornography, sexual predation, and child trafficking laws and advance public policies to prevent the sexual exploitation of children online.