Exploring the Legal Rights & Obligations of Robots

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

History of Robotics: Timeline

This history of robotics is intertwined with the histories of technology, science and the basic principle of progress. Technology used in computing, electricity, even pneumatics and hydraulics can all be considered a part of the history of robotics. The timeline presented is therefore far from complete. Robotics currently represents one of mankind’s greatest accomplishments and is the single greatest attempt of mankind to produce an artificial, sentient being. It is only in recent years that manufacturers have made robotics increasingly available and attainable to the general public. The focus of this timeline is to provide the reader with a general overview of robotics (with a focus more on mobile robots) and to give an appreciation for the inventors and innovators in this field who have helped robotics to become what it is today. RobotShop Distribution Inc., 2008 www.robotshop.ca www.robotshop.us Greek Times: Some historians affirm that Talos, a giant creature written about in ancient Greek literature, was a creature (either a man or a bull) made of bronze, given by Zeus to Europa. [6] According to one version of the myths he was created in Sardinia by Hephaestus on Zeus' command, who gave him to the Cretan king Minos. In another version Talos came to Crete with Zeus to watch over his love Europa, and Minos received him as a gift from her. There are suppositions that his name Talos in the old Cretan language meant the "Sun" and that Zeus was known in Crete by the similar name of Zeus Tallaios.

How Not to Succeed in Law School

Essays: How Not to Succeed in Law School. James D. Gordon III† I. SHOULD YOU GO TO LAW SCHOOL? Would you like to help the less fortunate? Would you like to see liberty and justice for all? Do you want to vindicate the rights of the oppressed? If so, you should join the Peace Corps. The last thing you should do is attend law school. People basically hate lawyers, and with good reason. That's why you'll rarely escape from a dinner party without hearing at least one lawyer joke. Indeed, literature reveals that people have always hated lawyers. Samuel Coleridge wrote in The Devil's Thoughts: He saw a Lawyer killing a Viper / On a dunghill hard by his own stable; † Professor of Law, Brigham Young University Law School. B.A. 1977, Brigham Young University; J.D. 1980, Boalt Hall School of Law, University of California, Berkeley. Just for the record (in case I am ever nominated for a judicial appointment), I don't believe a word of this Essay. And if I do, I'm only being tentative. And if I'm not, I promise to let my colleagues dissuade me from my position shortly before the Senate confirmation hearings begin. After all, I watched the Bork hearings, too. I extend apologies and thanks to Dave Barry, Eliot A. Butler, Linda Bytof, Johnny Carson, Steven Chidester, Jesse H. Choper, Michael Cohen, Paul Duke, Vaughn J. Featherstone, J. Clifton Fleming, Jr., Frederick Mark Gedicks, Bruce C. Hafen, Carl S. Hawkins, Gregory Husisian, Brian C. Johnson, Edward L. Kimball, Jay Leno, Hans A.

Moral and Ethical Questions for Robotics Public Policy Daniel Howlader1

Moral and ethical questions for robotics public policy. Daniel Howlader1 1. George Mason University, School of Public Policy, 3351 Fairfax Dr., Arlington VA, 22201, USA. Email: [email protected]. Abstract: The roles of robotics, computers, and artificial intelligences in daily life are continuously expanding. Yet there is little discussion of ethical or moral ramifications of long-term development of robots and 1) their interactions with humans and 2) the status and regard contingent within these interactions, and even less discussion of such issues in public policy. A focus on artificial intelligence with respect to differing levels of robotic moral agency is used to examine the existing robotic policies in example countries, most of which are based loosely upon Isaac Asimov’s Three Laws. This essay posits insufficiency of current robotic policies, and offers some suggestions as to why the Three Laws are not appropriate or sufficient to inform or derive public policy. Key words: robotics, artificial intelligence, roboethics, public policy, moral agency

Introduction: Robots, robotics, computers, and artificial intelligence (AI) are becoming an evermore important and largely unavoidable part of everyday life for large segments of the developed world’s population. At present, these daily interactions are now largely mundane, have been accepted and even welcomed by many, and do not raise practical, moral or ethical questions.

Roles for robots: Robotics and robotic-type machinery are widely used in countless manufacturing industries all over the globe, and robotics in general have become a part of sea exploration, as well as in hospitals (1). Siciliano and Khatib present the current use of robotics, which include robots “in factories and schools,” (1) as well as “fighting fires, making goods

Dr. Asimov's Automatons

Dr. Asimov’s Automatons Take on a Life of their Own. Twenty years after his death, author Isaac Asimov’s robot fiction offers a blueprint to our robotic future...and the problems we could face. By Alan S. Brown

This past April, the University of Miami School of Law held We Robot, the first-ever legal and policy issues conference about robots. The name of the conference, which brought together lawyers, engineers, and technologists, played on the title of the most famous book ever written about robots, I, Robot, by Isaac Asimov. The point was underscored by Laurie Silvers, president of Hollywood Media Corp., which sponsored the event. In 1991, Silvers founded SyFy, a cable channel that specializes in science fiction. Within moments, she too had dropped Asimov’s name. Silvers turned to Asimov for advice before launching SyFy. It was a natural choice. Asimov was one of the

These became the Three Laws. Today, they are the starting point for any serious conversation about how humans and robots will behave around one another. As the mere fact of lawyers discussing robot law shows, the issue is no longer theoretical. If robots are not yet intelligent, they are increasingly autonomous in how they carry out tasks. At the most basic level, every day millions of robotic Roombas decide how to navigate tables, chairs, sofas, toys, and even pets as they vacuum homes. At a more sophisticated level, autonomous robots help select inventory in warehouses, move and position products in factories, and care for patients in hospitals. South Korea is testing robotic jailers.

Adventuring with Books: A Booklist for Pre-K-Grade 6. The NCTE Booklist

DOCUMENT RESUME ED 311 453 CS 212 097 AUTHOR Jett-Simpson, Mary, Ed. TITLE Adventuring with Books: A Booklist for Pre-K-Grade 6. Ninth Edition. The NCTE Booklist Series. INSTITUTION National Council of Teachers of English, Urbana, Ill. REPORT NO ISBN-0-8141-0078-3 PUB DATE 89 NOTE 570p.; Prepared by the Committee on the Elementary School Booklist of the National Council of Teachers of English. For earlier edition, see ED 264 588. AVAILABLE FROM National Council of Teachers of English, 1111 Kenyon Rd., Urbana, IL 61801 (Stock No. 00783-3020; $12.95 member, $16.50 nonmember). PUB TYPE Books (010) -- Reference Materials - Bibliographies (131) EDRS PRICE MF02/PC23 Plus Postage. DESCRIPTORS Annotated Bibliographies; Art; Athletics; Biographies; *Books; *Childrens Literature; Elementary Education; Fantasy; Fiction; Nonfiction; Poetry; Preschool Education; *Reading Materials; Recreational Reading; Sciences; Social Studies IDENTIFIERS Historical Fiction; *Trade Books ABSTRACT Intended to provide teachers with a list of recently published books recommended for children, this annotated booklist cites titles of children's trade books selected for their literary and artistic quality. The annotations in the booklist include a critical statement about each book as well as a brief description of the content, and--where appropriate--information about quality and composition of illustrations. Some 1,800 titles are included in this publication; they were selected from approximately 8,000 children's books published in the United States between 1985 and 1989 and are divided into the following categories: (1) books for babies and toddlers, (2) basic concept books, (3) wordless picture books, (4) language and reading, (5) poetry, (6) classics, (7) traditional literature, (8) fantasy, (9) science fiction, (10) contemporary realistic fiction, (11) historical fiction, (12) biography, (13) social studies, (14) science and mathematics, (15) fine arts, (16) crafts and hobbies, (17) sports and games, and (18) holidays.

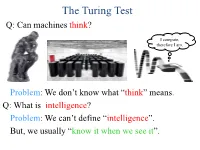

The Turing Test Q: Can Machines Think?

The Turing Test Q: Can machines think? I compute, therefore I am. Problem: We don’t know what “think” means. Q: What is intelligence? Problem: We can’t define “intelligence”. But, we usually “know it when we see it”. The Turing Test Q: Which of the following can think? The Turing Test Q: Can you distinguish a machine from a person? ≡ Can a machine impersonate a person? The Turing Test • The first deep investigation into whether machines can “behave intelligently” • Helped usher in field of AI • Decoupled “intelligence” from “human” • Based “intelligence” on I/O, not entity’s “look and feel” • Proposed a practical, formal test for intelligence • Definitions & test are operational & easily implementable • Turing test variants: “immortality”, “fly-on-wall”, “meta”, “reverse”, “subject matter expert”, “compression”, “minimum intelligent signal” Turing Test Milestones Turk (von Kempelen), 1770: • Played a strong game of chess • Toured the world for 84 years • Generated much interest in automata • Was a hoax (hidden human operator) Turing Test Milestones Eliza (Weizenbaum), 1966: • First “chatterbot” • Named after “Eliza Doolittle” of Shaw’s Pygmalion • Simulated Rogerian psychotherapist • Often convinced people it is human • Influenced computer games (e.g., Dungeon, Adventure) • Inspired Arthur C. Clarke’s HAL 9000 • Chatterbots appear today in chatrooms and dating services • Chatterbot competitions (Turing Test format): – Loebner Prize – Chatterbox Challenge, 2010 Turing Test Milestones Elbot, 2008: • Won 2008 Loebner Prize • Fooled 3 of 12 judges (Turing: >30%) • Typical excerpt: Judge: What's your favourite novel? Elbot: I often read telephone books, or dadaist poetry: Brä brä brä Judge: Typical computer! Elbot: You tend towards generalizations.

The Three Laws of Robotics Machine Ethics (Or Machine Morality) Is A

The Three Laws of Robotics. Machine ethics (or machine morality) is a part of the ethics of AI concerned with the moral behavior of artificially intelligent beings. Machine ethics contrasts with roboethics, which is concerned with the moral behavior of humans as they design, construct, use and treat such beings. Machine ethics should not be confused with computer ethics, which focuses on professional behavior towards computers and information. Isaac Asimov considered the issue in the 1950s in his book I, Robot. He proposed the Three Laws of Robotics to govern artificially intelligent systems. Much of his work was then spent testing the boundaries of his three laws to see where they would break down, or where they would create paradoxical or unanticipated behavior. His work suggests that no set of fixed laws can sufficiently anticipate all possible circumstances. Asimov’s laws are still mentioned as a template for guiding our development of robots, but given how much robotics has changed since they appeared, and will continue to change in the future, we need to ask how these rules could be updated for a 21st century version of artificial intelligence. Asimov’s suggested laws were devised to protect humans from interactions with robots. They are: (1) A robot may not injure a human being or, through inaction, allow a human being to come to harm. (2) A robot must obey the orders given it by human beings except where such orders would conflict with the First Law. (3) A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws. As mentioned, one of the obvious issues is that robots today appear to be far more varied than those in Asimov’s stories, including some that are far simpler.

A Study in Melville, Twain, Purdy, and Heller

THE ROMANCE-PARODY: A STUDY IN MELVILLE, TWAIN, PURDY, AND HELLER. A Thesis Presented to the Faculty of the Department of English, Kansas State Teachers College, Emporia, In Partial Fulfillment of the Requirements for the Degree Master of Arts, by Constance Denniston, May 1965. Approved for the Major Department. Approved for the Graduate Council. PREFACE To criticize one genre according to the standards of another genre serves to confuse more than to clarify. It will be shown that the four prose fictions examined in this work belong to the genre of romance-parody, but are often criticized because they do not conform to the genre of the novel or the genre of the romance. Many contemporary critics, especially Richard Chase and Northrop Frye, refuse to relegate all long prose fiction to one category, the novel. They make a distinction between the novel and the romance, much as Hawthorne had done earlier. According to this distinction, the novel presents the everyday experiences of ordinary people, whereas the romance presents extraordinary experiences of character types from myths and legends and has a tendency to be allegorical. The four prose fictions examined in this work have much in common with romance, in light of the above distinctions. However, it will be shown that these fictions use the structure, conventions, and subjects of the romance ironically and satirically. Melville's The Confidence Man is a parody of religion. Twain's Pudd'nhead Wilson parodies human justice.

Brains, Minds, and Computers in Literary and Science Fiction Neuronarratives

BRAINS, MINDS, AND COMPUTERS IN LITERARY AND SCIENCE FICTION NEURONARRATIVES. A dissertation submitted to Kent State University in partial fulfillment of the requirements for the degree of Doctor of Philosophy, by Jason W. Ellis, August 2012. Dissertation written by Jason W. Ellis: B.S., Georgia Institute of Technology, 2006; M.A., University of Liverpool, 2007; Ph.D., Kent State University, 2012. Approved by Donald M. Hassler, Chair, Doctoral Dissertation Committee; Tammy Clewell, Member, Doctoral Dissertation Committee; Kevin Floyd, Member, Doctoral Dissertation Committee; Eric M. Mintz, Member, Doctoral Dissertation Committee; Arvind Bansal, Member, Doctoral Dissertation Committee. Accepted by Robert W. Trogdon, Chair, Department of English; John R.D. Stalvey, Dean, College of Arts and Sciences. TABLE OF CONTENTS: Acknowledgements; Chapter 1: On Imagination, Science Fiction, and the Brain; Chapter 2: A Cognitive Approach to Science Fiction; Chapter 3: Isaac Asimov’s Robots as Cybernetic Models of the Human Brain; Chapter 4: Philip K. Dick’s Reality Generator: the Human Brain; Chapter 5: William Gibson’s Cyberspace Exists within the Human Brain; Chapter 6: Beyond Science Fiction: Metaphors as Future Prep; Works Cited

Three Laws of Robotics

From Wikipedia, the free encyclopedia. The Three Laws of Robotics (often shortened to The Three Laws or Three Laws) are a set of rules devised by the science fiction author Isaac Asimov. The rules were introduced in his 1942 short story "Runaround", although they had been foreshadowed in a few earlier stories. The Three Laws are: 1. A robot may not injure a human being or, through inaction, allow a human being to come to harm. 2. A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law. 3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws. These form an organizing principle and unifying theme for Asimov's robotic-based fiction, appearing in his Robot series, the stories linked to it, and his Lucky Starr series of young-adult fiction. The Laws are incorporated into almost all of the positronic robots appearing in his fiction, and cannot be bypassed, being intended as a safety feature. Many of Asimov's robot-focused stories involve robots behaving in unusual and counter-intuitive ways as an unintended consequence of how the robot applies the Three Laws to the situation in which it finds itself. Other authors working in Asimov's fictional universe have adopted them, and references, often parodic, appear throughout science fiction as well as in other genres. This cover of I, Robot illustrates the story "Runaround", the first to list all Three Laws of Robotics.

The First Law of Robotics (A Call to Arms)

To appear, AAAI-94. The First Law of Robotics (a call to arms). Daniel Weld, Oren Etzioni*. Department of Computer Science and Engineering, University of Washington, Seattle, WA 98195. {weld, etzioni}@cs.washington.edu

Abstract: Even before the advent of Artificial Intelligence, science fiction writer Isaac Asimov recognized that an agent must place the protection of humans from harm at a higher priority than obeying human orders. Inspired by Asimov, we pose the following fundamental questions: (1) How should one formalize the rich, but informal, notion of "harm"? (2) How can an agent avoid performing harmful actions, and do so in a computationally tractable manner? (3) How should an agent resolve conflict between its goals and the need to avoid harm? (4) When should an agent prevent a human from harming herself? While we address some of these questions in technical detail, the primary goal of this paper is to focus attention on Asimov's concern: society will reject autonomous agents unless we have some credible means of making them safe!

should not slavishly obey human commands; its foremost goal should be to avoid harming humans. Consider the following scenarios: • A construction robot is instructed to fill a pothole in the road. Although the robot repairs the cavity, it leaves the steam roller, chunks of tar, and an oil slick in the middle of a busy highway. • A softbot (software robot) is instructed to reduce disk utilization below 90%. It succeeds, but inspection reveals that the agent deleted irreplaceable LaTeX files without backing them up to tape. While less dramatic than Asimov's stories, the scenarios illustrate his point: not all ways of satisfying a human order are equally good; in fact, sometimes it is better not to satisfy the order at all.

Law Is a Buyer's Market

LAW IS A BUYER’S MARKET: Building a Client-First Law Firm. JORDAN FURLONG. Cover design by Mark Delbridge. Interior design by Patricia LaCroix. Copyright © 2017 Jordan Furlong. All rights reserved. No part of this book may be reproduced or transmitted in any form or by any means without express written consent of the author. Printed in the United States of America. ISBN: 978-0-9953488-0-6. For Mom and Dad. CONTENTS: Acknowledgments; Introduction; Prologue; Chapter 1: The End of the Seller’s Market in Law; Chapter 2: The Emergence of Lawyer Substitutes; Chapter 3: The Development of Law Firm Substitutes; Chapter 4: The Fall of the Traditional Law Firm; Chapter 5: The Rise of the Post-Lawyer Law Firm; Chapter 6: The Law Firm as a Commercial Enterprise; Chapter 7: Identifying Your Law Firm’s Professional Purpose; Chapter 8: Choosing Your Law Firm’s Markets and Clients; Chapter 9: Creating a Strategy to Fulfill Your Firm’s Purpose; Chapter 10: The Client Strategy; Chapter 11: The Competitive