Answer Span Correction in Machine Reading Comprehension

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Italian Wedding Soup

Italian Wedding Soup Yield: 8-10 servings Ingredients: Meatballs: ½ lb Ground Lean Beef ½ lb Ground Pork 1 Small Onion - grated 1 Large clove Fresh Garlic - minced 1 Large Egg 1 Slice White Bread - crust removed and torn into small pieces ½ Cup Parmigiano-Reggiano Cheese - grated ⅓ Cup Fresh Italian Parsley - small chopped 1 tsp Kosher Salt Fresh Ground Black Pepper to taste (Apx ¼ tsp) Soup: 1lb Acini di pepe pasta* 2 Quarts (8 Cups) Chicken Broth - homemade preferred but a low-sodium store bought is fine 1lb Escarole (can substitute curly endive or spinach) - rough chopped 3 Hard-boiled Eggs - diced 2 Large Stalks Celery - diced 1 Large Carrot - peeled and diced 1 Medium Onion - diced Kosher Salt and Fresh Ground Black Pepper to taste Preparation: 1) In a large bowl, mix together the grated onion, garlic, egg, bread, parsley, salt and pepper until thoroughly combined - Stir in the ground beef, ground pork, and parmigiano-reggiano cheese until well mixed (DO NOT 'squeeze' the meat) 2) Shape the resulting mixture into on inch diameter meatballs (apx 1 ½ tsp of mixture each) and place on a baking sheet until ready to use 3) Cook pasta according to package in a large pot of salted water until al dente - Drain and chill in refrigerator until ready to use 4) Bring chicken broth to a boil in a 4-6 quart soup pot over medium-high heat 5) Add celery, carrot, and onion to the broth and return to a simmer (adjust heat as necessary) - Allow to simmer for 10 minutes 6) Add the prepared meatballs and escarole to the broth and return to a simmer - Allow to simmer until meatballs are cooked through and escarole is tender (apx 8 minutes) 7) Add the diced hard-boiled egg taste and allow to simmer for an additional 1-2 minutes 8) Adjust seasoning with salt and pepper to taste 9) Place ½ cup of the cooked/chilled pasta to each serving bowl and ladle the soup directly over the pasta - Serve immediately garnished with a little grated parmigiano-reggiano cheese if desired * Acini di pepe is a small, round pasta that is commonly used in soups and cold salads. -

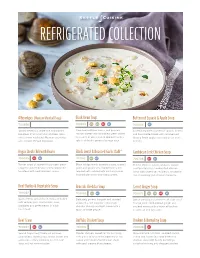

KC Refrigerated Product List 10.1.19.Indd

Created 3.11.09 One Color White REFRIGERATEDWhite: 0C 0M 0Y 0K COLLECTION Albondigas (Mexican Meatball Soup) Black Bean Soup Butternut Squash & Apple Soup 700856 700820 VN VG DF GF 700056 GF Savory meatballs, white rice and vibrant Slow-cooked black beans, red peppers, A blend of puréed butternut squash, onions tomatoes in a handcrafted chicken stock roasted sweet corn and diced green chilies and handcrafted stock with caramelized infused with traditional Mexican aromatics in a purée of vine-ripened tomatoes with a Granny Smith apples and a pinch of fresh and a touch of fresh lime juice. splash of fresh-squeezed orange juice. nutmeg. Angus Steak Chili with Beans Black Lentil & Roasted Garlic Dahl* Caribbean Jerk Chicken Soup 700095 DF GF 701762 VG GF 700708 DF GF Tender strips of seared Angus beef, green Black beluga lentils, sautéed onions, roasted Tender chicken, sweet potatoes, carrots peppers and red beans in slow-simmered garlic and ginger slow-simmered in a rich and tomatoes in a handcrafted chicken tomatoes with Southwestern spices. tomato broth, infused with warming spices, stock with white rice, red beans, traditional finished with butter and heavy cream. jerk seasoning and a hint of molasses. Beef Barley & Vegetable Soup Broccoli Cheddar Soup Carrot Ginger Soup 700023 700063 VG GF 700071 VN VG DF GF Seared strips of lean beef and pearl barley Delicately puréed broccoli and sautéed Sweet carrots puréed with fresh-squeezed with red peppers, mushrooms, peas, onions in a rich blend of extra sharp orange juice, hand-peeled ginger and tomatoes and green beans in a rich cheddar cheese and light cream with a sautéed onions with a touch of toasted beef stock. -

Touchofitaly.Com (302) 703-3090

PRIMI PIATTI SECONDI PIATTI Orecchiette con Salsiccia e Friarielli 18 Pollo al Taleggio 21 Ear shaped pasta with Italian sausage and broccoli rabe sautéed in Chicken cutlet breaded in our homemade bread crumbs, pan-seared in garlic, and EVOO a white wine Italian herb sauce then topped with melted taleggio cheese and prosciutto di parma, served over a bed of sautéed spinach Ravioli 18 Borgatti’s hand-crafted cheese ravioli topped with our homemade Salmone Oreganata 21 tomato basil sauce and a dollop of fresh ricotta Salmon roasted in our wood-fired oven then topped with warm caponata, served with escarole and cannellini beans Fettuccine Alfredo 18 Filetto di Manzo alla Griglia 28 Homemade fettuccini pasta tossed in a light parmigiano reggiano Fire-grilled hanger steak with Italian seasonings served with sautéed cream sauce topped with seared pancetta and shaved asiago cheese peppers, onions, and broccoli rabe Costoletta di Vitello 30 Garganelli alla Bolognese 19 Porterhouse veal chop roasted to a medium temperature in our wood- Homemade penne style pasta tossed in our classic meat sauce topped fired oven, seasoned with Italian herbs and EVOO, served with spinach with freshly grated parmigiano reggiano and basil and rosemary roasted potatoes Tagliatelle Fra’ diavolo 24 Pollo alla Contadina 23 Thin flat noodles with shrimp, clams, mussels, and calamari tossed Oven roasted chicken on the bone with sweet Italian sausage sautéed in a spicy hot marinara sauce with peppers, onions, potatoes, EVOO, and rosemary Gnocchi Calciano 14 Light as a feather -

Pasta ($10) Meat ($15) Fish

LUNCH ONLY / 12PM-4PM / TAKE OUT ONLY / DUPONT (202-483-3070) / 14TH (202-290-1178) PASTA ($10) Add: Meat Sauce $3.99, Shrimp (5 pcs) $4.99, Chicken $6.99, Gluten free Penne, Spaghetti $4.99 - Whole Wheat Penne, Spaghetti $2.99 Penne All'arrabbiata spicy tomato sauce, garlic Penne alla Margherita fresh tomato sauce, fresh basil, mozzarella Spaghetti con Polpette di Manzo spaghetti, fresh tomato sauce, beef meatballs Capellini con Pomodoro e Basilico angel hair pasta, fresh tomato sauce, garlic, basil Fettuccine Alfredo homemade fettuccine in a parmesan cream sauce Carbonara spaghetti, onions, pancetta, black pepper, egg Cavatelli homemade cavatelli, Italian sausage, tomato sauce, parmesan, broccoli rabe Ravioli Della Nonna homemade ravioli filled with pumpkin, amaretti, butter sage sauce Cacio e Pepe homemade tagliolini, parmigiano, pecorino, black pepper, olive oil MEAT ($15) Pollo alla Milanese Milanese style breaded chicken breast, cherry tomatoes, arugula Battuta di Pollo pounded, grilled chicken breast, mixed greens, cherry tomatoes FISH ($15) Salmone alla Griglia grilled fillet of Atlantic salmon, seasonal vegetables, olives, capers PIZZA ($10) Marinara* San Marzano tomato sauce, roasted garlic, oregano. Margherita Classica San Marzano tomato sauce, fresh mozzarella, basil. Napoletana* San Marzano tomato sauce, anchovies, capers, oregano. Sofia San Marzano tomato sauce, fresh mozzarella, gorgonzola, dolci. Salami San Marzano tomato sauce, fresh mozzarella, pepperoni, mushrooms. Vegetariana* San Marzano tomato sauce, roasted bell peppers, eggplant, zucchini. Indiavolata Fresh mozzarella, Italian sausage, broccoli rabe. Without san marzano tomato sauce Caciottaro Four cheeses: mozzarella, gorgonzola, taleggio, grana. Without san marzano tomato Verdona Ricotta cheese, spinach, olives, capers, mozzarella. Without san marzano tomato sauce . -

L'eccellenza Quotidiana

PDF Compressor Pro L’eccellenza quotidiana The Daily Excellence Regalati ogni giorno il massimo della qualità Have the maximum quality every day Granoro...your everyday First choice PDF Compressor Pro DIAGRAMMA DI FLUSSO PROCESS FLOW DIAGRAM ACCETTAZIONE MATERIE PRIME ACCEPTING RAW MATERIALS IMPASTO SEMOLA E ACQUA MIXING SEMOLINA AND WATER ESTRUSIONE EXTRUSION ESSICCAZIONE DRYING PROCESS PESATURA E CONFEZIONAMENTO WEIGHING AND PACKAGING METAL - CHECKING METAL - CHECKING INCARTONAMENTO PACKAGES IN CARTON BOX STOCCAGGIO PER IL CONSUMO FINALE STORAGE FOR END USING PDF Compressor Pro Famiglie di Prodotti Product Ranges FORMATI NORMALI • Conf. da 500 g e 1 kg • Pasta Lunga • Pasta Corta • Pastine GLI SPECIALI SEMOLA • Gli Speciali Semola • Pasta Lunga e LE SPECIALITÀ DI • Pasta Corta ATTILIO • Pastine • Gli Speciali Semola Nidi • Le Specialità di Attilio • Gli Speciali da Forno • Cannelloni Semola • Lasagne Semola • Lasagne Uovo PASTA ALL’UOVO e • Pasta all’Uovo • I Nidi all’Uovo SFOGLIA ANTICA • Le Pastine all’Uovo • Sfoglia Antica • Nidi all’Uovo con Sfoglia Ruvida LINEA BIOLOGICA • Pasta di semola da Agricoltura Biologica • Linea Rossa E Olio da • Passata di Pomodoro Bio Agricoltura Biologica • Pomodorini al naturale Bio • Polpa di Pomodoro Bio • Olio Extravergine di Oliva da Agricoltura Biologica CUORE MIO • Pasta Cuore Mio I FRESCHI • Gnocchi di Patate • Chicche di Patate LINEA ROSSO CUOCO • Passata di pomodoro • Pomodori Pelati • Pomodori Pelati San Marzano • Pomodorini al naturale • Polpa di Pomodoro CONDIMENTI • Condimenti • Crema di Peperoncino picc. Calabrese SUGHI PRONTI e • Patè di Olive Taggiasche LEGUMI • Pesto alla genovese • Sughi Pronti • Al Basilico • Alle Olive • Ai Funghi Porcini • All’Arrabbiata • Al Tonno • Alla Napoletana • Alla Bolognese • Legumi in vetro • Fagioli Cannellini • Fagioli Borlotti • Ceci • Piselli extra fi ni • Legumi in barattolo • Fagioli Cannellini • Fagioli Borlotti • Ceci • Piselli extra fi ni • Lenticchie OLIO EXTRAVERGINE • Olio extravergine di Oliva D.O.P. -

2017 Products Food Service

DATE: 10/02/2021 2017 PRODUCTS TIME: 04:42:32 FOOD SERVICE LONG CUTS CODE PRODUCT PACKAGE SIZE CASES / PALLET 65038 00140 Capellini 10'' 2 X 10lbs 90 65038 00135 Capellini 20" 1 X 20lbs 90 65038 00126 Fettuccine 10'' 2 X 10lbs 75 65038 00116 Fettuccine 10'' Grisspasta bag/label 4 X 5lbs 75 65038 00105 Fettuccine 20" 1 X 20lbs 90 65038 00887 Linguine 10" 20 X 500g 80 65038 00137 Linguine 10'' 2 X 10lbs 75 65038 00106 Linguine 20" 1 X 20lbs 90 65038 00734 Organic Whole Wheat Linguine Giardino carton 20 X 450gr 80 65038 00733 Organic Whole Wheat Spaghetti Giardino carton 20 X 450gr 80 65038 00727 Organic Whole Wheat Spaghetti 10'' 2 X 5lbs 192 65038 00120 Perciatelli 10" 1 X 20lbs 56 65038 00114 Spaghetti 10 '' Grisspasta bag/label 4 X 5lbs 75 65038 00123 Spaghetti 10" 2 X 10lbs 90 65038 00885 Spaghetti 10" 20 X 500g 80 65038 00102 Spaghetti 20" 1 X 20lbs 90 65038 01851 Spaghettini Grisspasta bag/label 9 X 1kg 80 65038 00104 Spaghettini 2A 20 '' 1 X 20lbs 90 65038 00124 Spaghettini 10" 2 X 10lbs 90 0265038 00115 Oct 2021 04:42:32 Spaghettini 10" Grisspasta bag/label 4 X 5lbs 75 Page 1 / 10 65038 00103 Spaghettini 20" 1 X 20lbs 90 65038 00653 Spinach Fettuccine 10'' 2 X 10lbs 75 65038 0652 Spinach Spaghetti 10'' 2 X 10lbs 90 65038 000523 Vegetable Spaghetti Grisspasta bag/label 20 X 400gr 60 65038 00308 Vegetable Spaghetti 10" 1 X 10lbs 192 65038 01308 Vegetable Spaghetti 10'' Grisspasta bag/label 2 X 5lbs 192 65038 00118 Vermicelli 10" 2 X 10lbs 90 65038 00107 Vermicelli 20" 1 x 20lbs 90 65038 00702 Whole Grain Fettuccine 10" 2 X 10lbs 75 -

The Geography of Italian Pasta

The Geography of Italian Pasta David Alexander University of Massachusetts, Amherst Pasta is as much an institution as a food in Italy, where it has made a significant contribution to national culture. Its historical geography is one of strong regional variations based on climate, social factors, and diffusion patterns. These are considered herein; a taxonomy of pasta types is presented and illustrated in a series of maps that show regional variations. The classification scheme divides pasta into eight classes based on morphology and, where appropriate, filling. These include the spaghetti and tubular families, pasta shells, ribbon forms, short pasta, very small or “micro- pasta” types, the ravioli family of filled pasta, and the dumpling family, which includes gnocchi. Three patterns of dif- fusion of pasta types are identified: by sea, usually from the Mezzogiorno and Sicily, locally through adjacent regions, and outwards from the main centers of adoption. Many dry pasta forms are native to the south and center of Italy, while filled pasta of the ravioli family predominates north of the Apennines. Changes in the geography of pasta are re- viewed and analyzed in terms of the modern duality of culture and commercialism. Key Words: pasta, Italy, cultural geography, regional geography. Meglio ch’a panza schiatta ca ’a roba resta. peasant’s meal of a rustic vegetable soup (pultes) Better that the belly burst than food be left on that contained thick strips of dried laganæ. But the table. Apicius, in De Re Coquinaria, gave careful in- —Neapolitan proverb structions on the preparation of moist laganæ and therein lies the distinction between fresh Introduction: A Brief Historical pasta, made with eggs and flour, which became Geography of Pasta a rich person’s dish, and dried pasta, without eggs, which was the food of the common man egend has it that when Marco Polo returned (Milioni 1998). -

The 30 Year Horizon

The 30 Year Horizon Manuel Bronstein W illiam Burge T imothy Daly James Davenport Michael Dewar Martin Dunstan Albrecht F ortenbacher P atrizia Gianni Johannes Grabmeier Jocelyn Guidry Richard Jenks Larry Lambe Michael Monagan Scott Morrison W illiam Sit Jonathan Steinbach Robert Sutor Barry T rager Stephen W att Jim W en Clifton W illiamson Volume 8.1: Axiom Gallery i Portions Copyright (c) 2005 Timothy Daly The Blue Bayou image Copyright (c) 2004 Jocelyn Guidry Portions Copyright (c) 2004 Martin Dunstan Portions Copyright (c) 2007 Alfredo Portes Portions Copyright (c) 2007 Arthur Ralfs Portions Copyright (c) 2005 Timothy Daly Portions Copyright (c) 1991-2002, The Numerical ALgorithms Group Ltd. All rights reserved. This book and the Axiom software is licensed as follows: Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met: - Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer. - Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution. - Neither the name of The Numerical ALgorithms Group Ltd. nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission. THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED -

International Culinary Arts & Sciences Institute

TECHNIQUES CLASSES These hands-on classes are ideal for both novice cooking students and those experienced students seeking to refresh, enhance, and update their abilities. The recipe packages feature both exciting, up-to-the minute ideas and tried-and-true classic dishes arranged in a sequence of lessons that allows for fast mastery of critical cooking skills. Students seeking increased kitchen confidence will acquire fundamental kitchen skills, execute important cooking techniques, learn about common and uncommon ingredients, and create complex multi-component specialty dishes. All courses are taught in our state of the art ICASI facility by professional chefs with years of experience. Prerequisites: Because of the continuity of skills, it is strongly recommended that Basic Techniques series will be taken in order. Attendance at the first class of a series is mandatory. Basic Techniques of Cooking 1 (4 Sessions) Hrvatin Wednesdays, September 15, 22, 29, October 6, 2021 - 6:00 pm ($345, 4x3hrs, 1.2CEU) Mondays, November 22, 29, December 6, 13, 2021 – 6:00 pm ($345, 4x3hrs, 1.2CEU) Week 1: Knife Skills: French Onion Soup; Warm Vegetable Ratatouille; Vegetable Spring Rolls with Sweet & Sour Sauce; Vegetable Tempura; Garden Vegetable Parmesan Frittata Week 2: Stocks and Soups: Vegetable Stock; Fish Stock; Chicken Stock; Beef Stock; Black Bean Soup; Chicken Noodle Soup; Beef Consommé; Cream of Mushroom Soup; Fish Chowder; Potato Leek Soup Week 3: Grains and Potatoes: Creamy Polenta; Spicy Braised Lentils; Risotto; Israeli Couscous; Pommes -

10 Types of Pasta and Information About Them

10 types of pasta and information about them by: Rafael r. emata 3g Name: Mafaldine Name: $u%atini Origin: Origin: -Mafaldine/ Reginette -“bu%o" meaning hole& meaning “ittle “bu%ato" meaning !ueens" pier%ed Bucatini, also "nown as perciatelli, is a thic" spaghetti-- It is a type of ribbon-shaped pasta . It is flat and wide, li"e pasta with a hole running through the center. #he name usually about 1 cm (½ inch) in width, with wavy edges comes from Italian$$bucobuco, meaning %hole%, while bucato means on both sides. It is prepared similarly to other ribbon- %pierced%.&1' based pasta such as linguine and fettuccine. It is ucatini is common throughout a*io, particularly +ome. It is a usually served with a more delicate sauce. tubed pasta made of hard durum wheatwheat flour and water. Its Mafaldine were named in honor of rincess Mafalda of of length is /0 cm (101 in) with a / mm (12 inch) diameter. #he average coo"ing time is nine minutes. In Italian cuisine, it is served with buttery sauces, pancetta or or guanciale, vegetables, cheese, eggs, and anchovies or or sardinessardines.. Name: (appardelle Name: (i''o%%heri Origin: Origin: -“pappare" means “to -“pi''o%%heri" means gobble up “little bit" -#taly -#taly appardelle pasta is an Italian flat pasta cut into a broad ribbon shape. In width, the pasta is between tagliatelle and lasagna. #his pasta is traditionally served with very rich, heavy sauces, #his pasta is commonly recogni*ed as a shorter and especially sauces which include game such as wild sometimes wider version of the tagliatelli pasta, wwhichhich boar, and it is particularly popular in the winter, when it is composed of strips of egg and flour noodles. -

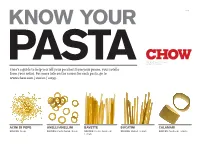

Chart-Of-Pasta-Shapes

KNOW YOUR 1 of 6 Photographs by Chris Rochelle PASTA (Images not actual size) Here’s a guide to help you tell your paccheri from your penne, your rotelle from your rotini. For more info on the sauces for each pasta, go to www.chow.com / stories / 11099. ACINI DI PEPE ANELLI/ANELLINI BAVETTE BUCatINI CALAMARI SAUCES: Soup SAUCES: Pasta Salad, Soup SAUCES: Pesto, Seafood, SAUCES: Baked, Tomato SAUCES: Seafood, Tomato Tomato Know Your Pasta 2 of 6 CAMpanELLE CAPELLINI CASARECCE CAVatELLI CAVaturI SAUCES: Butter/Oil, Cream/ (a.k.a. Angel Hair) SAUCES: Cream/Cheese, SAUCES: Cream/Cheese, SAUCES: Pasta Salad, Cheese, Meat, Pasta Salad, SAUCES: Butter/Oil, Cream/ Meat, Pesto, Seafood, Meat, Pasta Salad, Soup, Vegetable Vegetable Cheese, Pesto, Seafood, Tomato, Vegetable Vegetable Soup, Tomato, Vegetable CONCHIGLIE DItaLINI FarfaLLE FETTUCCINE FREGULA SAUCES: Cream/Cheese, SAUCES: Baked, Pasta Salad, SAUCES: Butter/Oil, Cream/ SAUCES: Butter/Oil, Cream/ SAUCES: Soup, Tomato Meat, Pasta Salad, Pesto, Soup Cheese, Meat, Pasta Salad, Cheese, Meat, Seafood, Tomato, Vegetable Pesto, Seafood, Soup, Tomato, Vegetable Tomato, Vegetable Know Your Pasta 3 of 6 FUSILLI FUSILLI COL BUCO FUSILLI NAPOLEtanI GEMELLI GIGLI SAUCES: Baked, Butter/Oil, SAUCES: Baked, Butter/Oil, SAUCES: Baked, Butter/Oil, SAUCES: Baked, Butter/Oil, SAUCES: Baked, Butter/Oil, Cream/Cheese, Meat, Pasta Cream/Cheese, Meat, Pasta Cream/Cheese, Meat, Pasta Cream/Cheese, Meat, Pasta Meat, Tomato Salad, Pesto, Soup, Tomato, Salad, Pesto, Soup, Tomato, Salad, Pesto, Soup, Tomato, Salad, -

Renaissance Cocktail Menu

Renaissance Cocktail Package Your package will include Exclusive use of our Ballroom or Clubroom for four hours Event Specialist Professional Wait staff China and Flatware Choice of linen color for tables and napkins Silver Chafers for food service Lantern and candle decorations for stations Unlimited soft drinks Alcohol ordering and delivery arrangements Free private parking lots Votive candles Table numbers Place cards Direction cards Stationary Appetizers (select two) Imported and Domestic Cheeses with Fresh Strawberries, Grapes and Crackers Fresh Vegetable Crudité with Assorted Dips Hummus, Olive Tapenade, Dalmatia Fig Preserves and Pita Triangles Fresh Tomato Bruschetta, Marinated Grilled Vegetables and Roasted Peppers Fresh Mozzarella, Plum Tomato and Basil Drizzled with Extra Virgin Olive Oil One Hour of Unlimited Butler Style Passed Hors d’oeuvres Select ten Hors d’ oeuvres from our full list of 90 options Dinner Stations Pasta Station Garnished with Parmesan Cheese, Red Pepper Flakes and Sliced Italian Bread Mixed Greens with a Balsamic Vinaigrette Or Traditional Caesar Salad with Croutons (select two) Penne Pasta with Vodka Sauce Cavatelli and Broccoli with Garlic and Oil Rigatoni Bolognaise Penne Pasta with Fresh Grilled Vegetables Fusilli Pasta with Broccoli and Roasted Peppers Tortellini with Vodka Sauce Chef Attended Carving Station Roasted New Potato or Smashed New Potato with Garlic and Cream Assorted Dinner Rolls Garnished with Appropriate Condiments (select two) Roasted Top Round of Beef with Au jus Roasted Peppered