Predicting Chroma from Luma with Frequency Domain Intra Prediction

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


Encoding H.264 Video for Streaming and Progressive Download

W4: KEY ENCODING SKILLS, TECHNOLOGIES TECHNIQUES
STREAMING MEDIA EAST - 2019
Jan Ozer www.streaminglearningcenter.com [email protected]/ 276-235-8542 @janozer

Agenda
• Introduction
• Lesson 1: Delivering to Computers, Mobile, OTT, and Smart TVs
• Lesson 2: Codec review
• Lesson 3: Delivering HEVC over HLS
• Lesson 4: Per-title encoding
• Lesson 5: How to build encoding ladder with objective quality metrics
• Lesson 6: Current status of CMAF
• Lesson 7: Delivering with dynamic and static packaging

Lesson 1: Delivering to Computers, Mobile, OTT, and Smart TVs
• Computers
• Mobile
• OTT
• Smart TVs

Choosing an ABR Format for Computers
• Can be DASH or HLS
• Factors
  • Off-the-shelf player vendor (JW Player, Bitmovin, THEOPlayer, etc.)
  • Encoding/transcoding vendor

Choosing an ABR Format for iOS
• Native support (playback in the browser)
  • HTTP Live Streaming
• Playback via an app
  • Any, including DASH, Smooth, HDS or RTMP Dynamic Streaming

iOS Media Support

| | Native | App |
|---|---|---|
| Codecs | H.264 (High, Level 4.2), HEVC (Main10, Level 5 high) | Any |
| ABR formats | HLS | Any |
| DRM | FairPlay | Any |
| Captions | CEA-608/708, WebVTT, IMSC1 | Any |
| HDR | HDR10, DolbyVision | ? |

http://bit.ly/hls_spec_2017

iOS Encoding Ladders (H.264, HEVC) http://bit.ly/hls_spec_2017

HEVC Hardware Support - iOS: 3 % bit.ly/mobile_HEVC http://bit.ly/glob_med_2019

Android: Codec and ABR Format Support
Codecs: VP8 (2.3+), H.264 (3+), VP9 (4.4+), HEVC (5+)
ABR: HLS (3+), DASH 4.4+ Via MSE
• Multiple codecs and ABR technologies
• Serious cautions about HLS
• DASH now close to 97%
• HEVC
  • Main Profile Level 3 – mobile

Fast Computational Structures for an Efficient Implementation of The

ARTICLE IN PRESS. Signal Processing. Contents lists available at ScienceDirect. Journal homepage: www.elsevier.com/locate/sigpro

Fast computational structures for an efficient implementation of the complete TDAC analysis/synthesis MDCT/MDST filter banks

Vladimir Britanak a,*, Huibert J. Lincklaen Arriëns b
a Institute of Informatics, Slovak Academy of Sciences, Dubravska cesta 9, 845 07 Bratislava, Slovak Republic
b Delft University of Technology, Department of Electrical Engineering, Mathematics and Computer Science, Mekelweg 4, 2628 CD Delft, The Netherlands

Article history: Received 27 May 2008. Received in revised form 8 January 2009. Accepted 10 January 2009.

Keywords: Modified discrete cosine transform, Modified discrete sine transform, Modulated

Abstract: A new fast computational structure identical both for the forward and backward modified discrete cosine/sine transform (MDCT/MDST) computation is described. It is the result of a systematic construction of a fast algorithm for an efficient implementation of the complete time domain aliasing cancelation (TDAC) analysis/synthesis MDCT/MDST filter banks. It is shown that the same computational structure can be used both for the encoder and the decoder, thus significantly reducing design time and resources. The corresponding generalized signal flow graph is regular and defines new sparse matrix factorizations of the discrete cosine transform of type IV (DCT-IV) and MDCT/MDST matrices. The identical fast MDCT computational structure provides an efficient

Google Chrome Browser Dropping H.264 Support 14 January 2011, by John Messina

Google Chrome Browser dropping H.264 support
14 January 2011, by John Messina

On January 11, Google announced that Chrome’s HTML5 video support will change to match codecs supported by the open source Chromium project. Chrome will support the WebM (VP8) and Theora video codecs, and support for the H.264 codec will be removed to allow resources to focus on open codec technologies.

(PhysOrg.com) -- Google will soon stop supporting the H.264 video codec in their Chrome browser and will support its own WebM and Ogg Theora

... with the codecs already supported by the open Chromium project. Specifically, we are supporting the WebM (VP8) and Theora video codecs, and will consider adding support for other high-quality open codecs in the future. Though H.264 plays an important role in video, as our goal is to enable open innovation, support for the codec will be removed and our resources directed towards completely open codec technologies."

Since Google is developing the WebM technology, they can develop a good video standard using open source faster and better than a current standard video player can.

The problem with H.264 is that it costs money and the patents for the technologies in H.264 are held by 27 companies, including Apple and Microsoft, and controlled by MPEG LA. This makes H.264 expensive for content owners and software makers.

Since Apple and Microsoft hold some of the patents for the H.264 technology and make money off the licensing fees, it's in their best interest not to change the technology in their browsers. There are, however, concerns that Apple and Microsoft's lack of support for WebM may impact the Chrome browser.

QoE Based Comparison of H.264/AVC and WebM/VP8 in an Error-Prone Wireless Network

QoE based comparison of H.264/AVC and WebM/VP8 in an error-prone wireless network

Omer Nawaz, Tahir Nawaz Minhas, Markus Fiedler
Department of Technology and Aesthetics (DITE), Blekinge Institute of Technology, Karlskrona, Sweden
fomer.nawaz, tahir.nawaz.minhas, markus.fi[email protected]

Abstract: Quality of Experience (QoE) management is a prime topic of research community nowadays as video streaming, online gaming and security applications are completely reliant on the network service quality. Moreover, there are no standard models to map Quality of Service (QoS) into QoE. HTTP media streaming is primarily used for such applications due to its coherence with the Internet and simplified management. The most common video codecs used for video streaming are H.264/AVC and Google’s VP8. In this paper, we have analyzed the performance of these two codecs from the perspective of QoE. The most common end-user medium for accessing video content is via home based wireless networks. We have emulated an error-prone wireless network with different scenarios involving packet

... the subsequent inter-frames are dependent will result in more quality loss as compared to lower priority frame. Hence, the traditional QoS metrics simply fails to analyze the network measurement’s impact on the end-user service satisfaction. The other approach to measure the user-satisfaction is by direct interaction via subjective assessment. But the downside is the time and cost associated with these qualitative subjective assessments and their inability to be applied in real-time networks. The objective measurement quality tools like Mean Squared Error (MSE), Peak signal-to-noise ratio (PSNR), Structural Similarity Index (SSIM), etc.

Digital Audio Processor VP-8

VP-8 DIGITAL AUDIO PROCESSOR TECHNICAL MANUAL
VORSIS ® 600 Industrial Drive, New Bern, North Carolina, USA 28562

VP-8 Digital Audio Processor Technical Manual - 1st Edition - Revised [VP8GuiSetup_2_3_x(and above).exe]
©2009 Vorsis*
Vorsis, 600 Industrial Drive, New Bern, North Carolina 28562
tel 252-638-7000 / fax 252-637-1285
* a division of Wheatstone Corporation
VP-8 / Apr 2009

Attention!

Federal Communications Commission (FCC) Compliance Notice: Radio Frequency Notice

NOTE: This equipment has been tested and found to comply with the limits for a Class A digital device, pursuant to Part 15 of the FCC rules. These limits are designed to provide reasonable protection against harmful interference when the equipment is operated in a commercial environment. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instruction manual, may cause harmful interference to radio communications. Operation of this equipment in a residential area is likely to cause harmful interference in which case the user will be required to correct the interference at his own expense.

This is a Class A product. In a domestic environment, this product may cause radio interference, in which case, the user may be required to take appropriate measures.

This equipment must be installed and wired properly in order to assure compliance with FCC regulations.

Caution! Any modifications not expressly approved in writing by Wheatstone could void the user's authority to operate this equipment.
VP-8 / May 2008

READ ME!

Making Audio Processing History

In 2005 Wheatstone returned to its roots in audio processing with the creation of Vorsis, a new division of the company.

LOW COMPLEXITY H.264 to VC-1 TRANSCODER by VIDHYA

LOW COMPLEXITY H.264 TO VC-1 TRANSCODER
by VIDHYA VIJAYAKUMAR

Presented to the Faculty of the Graduate School of The University of Texas at Arlington in Partial Fulfillment of the Requirements for the Degree of MASTER OF SCIENCE IN ELECTRICAL ENGINEERING
THE UNIVERSITY OF TEXAS AT ARLINGTON, AUGUST 2010

Copyright © by Vidhya Vijayakumar 2010. All Rights Reserved.

ACKNOWLEDGEMENTS

As true as it would be with any research effort, this endeavor would not have been possible without the guidance and support of a number of people whom I stand to thank at this juncture. First and foremost, I express my sincere gratitude to my advisor and mentor, Dr. K.R. Rao, who has been the backbone of this whole exercise. I am greatly indebted for all the things that I have learnt from him, academically and otherwise. I thank Dr. Ishfaq Ahmad for being my co-advisor and mentor and for his invaluable guidance and support. I was fortunate to work with Dr. Ahmad as his research assistant on the latest trends in video compression and it has been an invaluable experience. I thank my mentor, Mr. Vishy Swaminathan, and my team members at Adobe Systems for giving me an opportunity to work in the industry and guide me during my internship. I would like to thank the other members of my advisory committee Dr. W. Alan Davis and Dr. William E Dillon for reviewing the thesis document and offering insightful comments. I express my gratitude to Dr. Jonathan Bredow and the Electrical Engineering department for purchasing the software required for this thesis and giving me the chance to work on cutting edge technologies.

Perceptual Audio Coding Contents

12/6/2007

Perceptual Audio Coding
Henrique Malvar, Managing Director, Redmond Lab
UW Lecture – December 6, 2007

Contents
• Motivation
• “Source coding”: good for speech
• “Sink coding”: Auditory Masking
• Block & Lapped Transforms
• Audio compression
• Examples

Many applications need digital audio
• Communication
  – Digital TV, Telephony (VoIP) & teleconferencing
  – Voice mail, voice annotations on e-mail, voice recording
• Business
  – Internet call centers
  – Multimedia presentations
• Entertainment
  – 150 songs on standard CD
  – thousands of songs on portable music players
  – Internet / Satellite radio, HD Radio
  – Games, DVD Movies

Linear Predictive Coding (LPC)
[Diagram: LPC coefficients, gains, pitch period; periodic excitation and noise excitation are combined into e(n), which drives the Synthesis Filter to produce x(n), the synthesized speech]

$$x(n) = e(n) + \sum_{r=1}^{N} a_r \, x(n-r)$$

LPC basics – analysis/synthesis
[Diagram: original speech → Analysis algorithm → synthesis parameters and residual waveform → Synthesis Filter → synthesized speech]

$$e(n) = x(n) - \sum_{r=1}^{N} a_r \, x(n-r)$$

LPC variant - CELP
[Diagram: Encoder: original speech, LPC coefficients, gain, selection index. Decoder: excitation codebook → Synthesis Filter → synthesized speech]

LPC variant - multipulse
[Diagram: LPC coefficients, excitation, Synthesis
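The LPC analysis and synthesis relations in the slides above can be illustrated with a minimal Python sketch. It assumes samples before the start of the signal are zero, and the function names, the test signal, and the coefficient values are hypothetical choices made for illustration; the slides do not say how the coefficients a_r are estimated, so they are simply given here.

```python
def lpc_residual(x, a):
    """Analysis: e(n) = x(n) - sum_{r=1..N} a[r-1] * x(n-r).

    Samples before the start of the signal are treated as zero (an assumption).
    """
    e = []
    for n in range(len(x)):
        pred = sum(a[r - 1] * x[n - r] for r in range(1, len(a) + 1) if n - r >= 0)
        e.append(x[n] - pred)
    return e


def lpc_synthesize(e, a):
    """Synthesis: x(n) = e(n) + sum_{r=1..N} a[r-1] * x(n-r)."""
    x = []
    for n in range(len(e)):
        # The prediction uses previously reconstructed samples only.
        pred = sum(a[r - 1] * x[n - r] for r in range(1, len(a) + 1) if n - r >= 0)
        x.append(e[n] + pred)
    return x


# Hypothetical signal and predictor coefficients a_1, a_2 (N = 2).
signal = [1.0, 0.5, -0.25, 0.75, 0.0, -1.0]
coeffs = [0.5, -0.25]

residual = lpc_residual(signal, coeffs)
rebuilt = lpc_synthesize(residual, coeffs)
```

Feeding the unquantized residual back through the synthesis filter reproduces the input, which is why the two equations are inverses of each other. In a real coder the residual is replaced by a compact excitation (pulse train, noise, or a codebook entry), so the reconstruction is only approximate.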

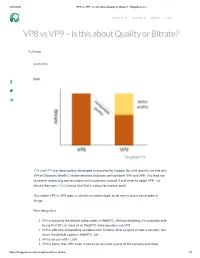

VP8 Vs VP9 – Is This About Quality Or Bitrate?

4/27/2020 VP8 vs VP9 - Is this about Quality or Bitrate? • BlogGeek.me
https://bloggeek.me/vp8-vs-vp9-quality-or-bitrate/

VP8 vs VP9 – Is this about Quality or Bitrate?
Technology 09/05/2016

Both.

VP8 and VP9 are video codecs developed and pushed by Google. Up until recently, we had only VP8 in Chrome’s WebRTC implementation and now, we have both VP8 and VP9. This led me to several interesting conversations with customers around if and when to adopt VP9 – or should they use H.264 instead (but that’s a story for another post). This whole VP8 vs VP9 topic is usually misunderstood, so let me try to put some order in things.

First things first:
1. VP8 is currently the default video codec in WebRTC. Without checking, it is probably safe to say that 90% or more of all WebRTC video sessions use VP8
2. VP9 is officially and publicly available from Chrome 49 or so (give or take a version). But it isn’t the default codec in WebRTC. Yet
3. VP8 is on par with H.264
4. VP9 is better than VP8 when it comes to resultant quality of the compressed video
5. VP8 takes up less resources (=CPU) to compress video

With that in mind, the following can be deduced: You can use the migration to VP9 for one of two things (or both):
1. Improve the quality of your video experience
2.

20.1 Data Sheet - Supported File Formats

20.1 Data Sheet - Supported File Formats

Target release: 20.1
Epic:
Document status: DRAFT
Document owner: Dieter Van Rijsselbergen
Designer: Not applicable
Architecture: Dieter Van Rijsselbergen
QA:

Assumptions
• Implementation of Avid proxy formats produced by Edge imposes a number of known Avid-specific conversions. Avid proxies are under consideration and will be included upon binding commitment.
• Implementation of ingest through rewrapping (instead of transcoding) of formats with Avid-supported video and audio codecs is under consideration and will be included upon binding commitment.
• Implementation of ingest through transcoding to Avid-supported video codecs other than DNxHD or DNxHR is under consideration and will be included upon binding commitment.

Limecraft Flow and Edge - Ingest File Formats

| # | File Container | Codecs and Variants | Edge ingest | Flow ingest | Status | Notes |
|---|---|---|---|---|---|---|
| 1 | MXF | MXF OP1a: Sony XDCAM (DV25, MPEG IMX codecs), XDCAM HD and XDCAM HD 422, also for Canon C300/C500 and XF series; Sony XAVC (incl. XAVC Intra and XAVC-L codecs); ARRI Alexa MXF (DNxHD codec); AS-11 MXF (MPEG IMX/D10, AVC-I codecs) | | | Deployed | No P2 spanned clips supported at the moment. Referencing original OP1a media from Flow AAFs is possible using AMA media linking in Avid. AAF workflows for P2 are not implemented end-to-end yet. |
| | | MXF OP-atom: P2 (DV codec) and P2 HD (DVCPro HD, AVC-I 50 and AVC-I 100 codecs) | | | Deployed. Only available in Edge. | |
| 1.1 | MXF | Sony RAW and X-OCN (XT, LT, ST) for Sony Venice, F65, PMW-F55, PMW-F5 and NEX | | | Deployed. Only available in Edge. | Due to the heavy data rates involved in processing these files, a properly provisioned system is required, featuring fast storage |

Using Daala Intra Frames for Still Picture Coding

Using Daala Intra Frames for Still Picture Coding

Nathan E. Egge, Jean-Marc Valin, Timothy B. Terriberry, Thomas Daede, Christopher Montgomery
Mozilla, Mountain View, CA, USA

Abstract: Recent advances in video codec technology have been successfully applied to the field of still picture coding. Daala is a new royalty-free video codec under active development that contains several novel coding technologies. We consider using Daala intra frames for still picture coding and show that it is competitive with other video-derived image formats. A finished version of Daala could be used to create an excellent, royalty-free image format.

I. INTRODUCTION

The Daala video codec is a joint research effort between Xiph.Org and Mozilla to develop a next-generation video codec that is both (1) competitive in performance with the state-of-the-art and (2) distributable on a royalty free basis. In working to produce a royalty free video codec, Daala introduces new coding techniques to replace those used in the traditional block-based video codec pipeline. These techniques, while not completely finished, already demonstrate that it is possible to deliver a video codec that meets these goals.

All video codecs have, in some form, the ability to code a still frame without prior information.

... techniques. Unsignaled horizontal and vertical prediction (see Section II-D), and low complexity prediction of chroma coefficients from their spatially coincident luma coefficients (see Section II-E) are two examples.

Fig. 1. State of blocks within the decode pipeline of a codec using lapped transforms. Immediate neighbors of the target block (bold lines) cannot be used for spatial prediction as they still require post-filtering (dotted lines).

Speeding up VP9 Intra Encoder with Hierarchical Deep Learning Based Partition Prediction Somdyuti Paul, Andrey Norkin, and Alan C

IEEE TRANSACTIONS ON IMAGE PROCESSING

Speeding up VP9 Intra Encoder with Hierarchical Deep Learning Based Partition Prediction

Somdyuti Paul, Andrey Norkin, and Alan C. Bovik

Abstract: In VP9 video codec, the sizes of blocks are decided during encoding by recursively partitioning 64×64 superblocks using rate-distortion optimization (RDO). This process is computationally intensive because of the combinatorial search space of possible partitions of a superblock. Here, we propose a deep learning based alternative framework to predict the intra-mode superblock partitions in the form of a four-level partition tree, using a hierarchical fully convolutional network (H-FCN). We created a large database of VP9 superblocks and the corresponding partitions to train an H-FCN model, which was subsequently integrated with the VP9 encoder to reduce the intra-mode encoding time. The experimental results establish that our approach speeds up intra-mode encoding by 69.7% on average, at the expense of a 1.71% increase in the Bjøntegaard-Delta bitrate (BD-rate). While VP9 provides several built-in speed levels which are designed to provide faster encoding at the expense of decreased rate-distortion performance, we find that our model is able to outperform the fastest recommended speed level of the reference VP9 encoder for the good quality intra encoding configuration, in terms of both speedup and BD-rate.

Index Terms: VP9, video encoding, block partitioning, intra prediction, convolutional neural networks, machine learning.

Fig. 1. Recursive partition of VP9 superblock at four levels showing the possible types of partition at each level.

I.

... supports partitioning a block into four square quadrants, VP9

Lapped Transforms in Perceptual Coding of Wideband Audio

Lapped Transforms in Perceptual Coding of Wideband Audio

Sien Ruan
Department of Electrical & Computer Engineering, McGill University, Montreal, Canada
December 2004

A thesis submitted to McGill University in partial fulfillment of the requirements for the degree of Master of Engineering.

© 2004 Sien Ruan

To my beloved parents

Abstract

Audio coding paradigms depend on time-frequency transformations to remove statistical redundancy in audio signals and reduce data bit rate, while maintaining high fidelity of the reconstructed signal. Sophisticated perceptual audio coding further exploits perceptual redundancy in audio signals by incorporating perceptual masking phenomena. This thesis focuses on the investigation of different coding transformations that can be used to compute perceptual distortion measures effectively; among them the lapped transform, which is the most widely used in today's audio coders. Moreover, an innovative lapped transform is developed that can vary overlap percentage at arbitrary degrees. The new lapped transform is applicable to transient audio by capturing the time-varying characteristics of the signal.

Sommaire

Audio coding paradigms depend on time-frequency transformations to remove statistical redundancy in audio signals and to reduce the data transmission rate, while maintaining high fidelity of the reconstructed signal. Sophisticated perceptual audio coding further exploits perceptual redundancy in audio signals by incorporating perceptual masking phenomena. This thesis focuses on research into the different coding transformations that can be used to compute perceptual distortion measures effectively, among them the lapped transform, which is the most widely used in today's audio coders.