Encoding, Fast and Slow: Low-Latency Video Processing Using Thousands of Tiny Threads Sadjad Fouladi, Riad S

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Encoding H.264 Video for Streaming and Progressive Download

W4: KEY ENCODING SKILLS, TECHNOLOGIES TECHNIQUES STREAMING MEDIA EAST - 2019 Jan Ozer www.streaminglearningcenter.com [email protected]/ 276-235-8542 @janozer Agenda • Introduction • Lesson 1: Delivering to Computers, Mobile, OTT, and Smart TVs • Lesson 2: Codec review • Lesson 3: Delivering HEVC over HLS • Lesson 4: Per-title encoding • Lesson 5: How to build encoding ladder with objective quality metrics • Lesson 6: Current status of CMAF • Lesson 7: Delivering with dynamic and static packaging Lesson 1: Delivering to Computers, Mobile, OTT, and Smart TVs • Computers • Mobile • OTT • Smart TVs Choosing an ABR Format for Computers • Can be DASH or HLS • Factors • Off-the-shelf player vendor (JW Player, Bitmovin, THEOPlayer, etc.) • Encoding/transcoding vendor Choosing an ABR Format for iOS • Native support (playback in the browser) • HTTP Live Streaming • Playback via an app • Any, including DASH, Smooth, HDS or RTMP Dynamic Streaming iOS Media Support Native App Codecs H.264 (High, Level 4.2), HEVC Any (Main10, Level 5 high) ABR formats HLS Any DRM FairPlay Any Captions CEA-608/708, WebVTT, IMSC1 Any HDR HDR10, DolbyVision ? http://bit.ly/hls_spec_2017 iOS Encoding Ladders H.264 HEVC http://bit.ly/hls_spec_2017 HEVC Hardware Support - iOS 3 % bit.ly/mobile_HEVC http://bit.ly/glob_med_2019 Android: Codec and ABR Format Support Codecs ABR VP8 (2.3+) • Multiple codecs and ABR H.264 (3+) HLS (3+) technologies • Serious cautions about HLS • DASH now close to 97% • HEVC VP9 (4.4+) DASH 4.4+ Via MSE • Main Profile Level 3 – mobile HEVC (5+) -

Google Chrome Browser Dropping H.264 Support 14 January 2011, by John Messina

Google Chrome Browser dropping H.264 support 14 January 2011, by John Messina. On January 11, Google announced that Chrome’s HTML5 video support will change to match codecs supported by the open source Chromium project. Chrome will support the WebM (VP8) and Theora video codecs, and support for the H.264 codec will be removed to allow resources to focus on open codec technologies. (PhysOrg.com) -- Google will soon stop supporting the H.264 video codec in their Chrome browser and will support its own WebM and Ogg Theora [...] with the codecs already supported by the open Chromium project. Specifically, we are supporting the WebM (VP8) and Theora video codecs, and will consider adding support for other high-quality open codecs in the future. Though H.264 plays an important role in video, as our goal is to enable open innovation, support for the codec will be removed and our resources directed towards completely open codec technologies." Since Google is developing the WebM technology, they can develop a good video standard using open source faster and better than a current standard video player can. The problem with H.264 is that it costs money and the patents for the technologies in H.264 are held by 27 companies, including Apple and Microsoft, and controlled by MPEG LA. This makes H.264 expensive for content owners and software makers. Since Apple and Microsoft hold some of the patents for the H.264 technology and make money off the licensing fees, it's in their best interest not to change the technology in their browsers. There are, however, concerns that Apple and Microsoft's lack of support for WebM may impact the Chrome browser. -

QoE Based Comparison of H.264/AVC and WebM/VP8 in an Error-Prone Wireless Network

QoE based comparison of H.264/AVC and WebM/VP8 in an error-prone wireless network Omer Nawaz, Tahir Nawaz Minhas, Markus Fiedler Department of Technology and Aesthetics (DITE) Blekinge Institute of Technology Karlskrona, Sweden fomer.nawaz, tahir.nawaz.minhas, markus.fi[email protected] Abstract: Quality of Experience (QoE) management is a prime topic of research community nowadays as video streaming, online gaming and security applications are completely reliant on the network service quality. Moreover, there are no standard models to map Quality of Service (QoS) into QoE. HTTP media streaming is primarily used for such applications due to its coherence with the Internet and simplified management. The most common video codecs used for video streaming are H.264/AVC and Google’s VP8. In this paper, we have analyzed the performance of these two codecs from the perspective of QoE. The most common end-user medium for accessing video content is via home based wireless networks. We have emulated an error-prone wireless network with different scenarios involving packet [...] the subsequent inter-frames are dependent will result in more quality loss as compared to lower priority frame. Hence, the traditional QoS metrics simply fails to analyze the network measurement’s impact on the end-user service satisfaction. The other approach to measure the user-satisfaction is by direct interaction via subjective assessment. But the downside is the time and cost associated with these qualitative subjective assessments and their inability to be applied in real-time networks. The objective measurement quality tools like Mean Squared Error (MSE), Peak signal-to-noise ratio (PSNR), Structural Similarity Index (SSIM), etc. -

Digital Audio Processor VP-8

VP-8 DIGITAL AUDIO PROCESSOR TECHNICAL MANUAL VORSIS ® 600 Industrial Drive, New Bern, North Carolina, USA 28562 VP-8 Digital Audio Processor Technical Manual - 1st Edition - Revised [VP8GuiSetup_2_3_x(and above).exe] ©2009 Vorsis* Vorsis 600 Industrial Drive New Bern, North Carolina 28562 tel 252-638-7000 / fax 252-637-1285 * a division of Wheatstone Corporation VP-8 / Apr 2009 Attention! Federal Communications Commission (FCC) Compliance Notice: Radio Frequency Notice NOTE: This equipment has been tested and found to comply with the limits for a Class A digital device, pursuant to Part 15 of the FCC rules. These limits are designed to provide reasonable protection against harmful interference when the equipment is operated in a commercial environment. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instruction manual, may cause harmful interference to radio communications. Operation of this equipment in a residential area is likely to cause harmful interference in which case the user will be required to correct the interference at his own expense. This is a Class A product. In a domestic environment, this product may cause radio interference, in which case, the user may be required to take appropriate measures. This equipment must be installed and wired properly in order to assure compliance with FCC regulations. Caution! Any modifications not expressly approved in writing by Wheatstone could void the user's authority to operate this equipment. VP-8 / May 2008 READ ME! Making Audio Processing History In 2005 Wheatstone returned to its roots in audio processing with the creation of Vorsis, a new division of the company. -

Improving Mobile IP Handover Latency on End-To-End TCP in UMTS/WCDMA Networks

Improving Mobile IP Handover Latency on End-to-End TCP in UMTS/WCDMA Networks Chee Kong LAU A thesis submitted in fulfilment of the requirements for the degree of Master of Engineering (Research) in Electrical Engineering School of Electrical Engineering and Telecommunications The University of New South Wales March, 2006 CERTIFICATE OF ORIGINALITY I hereby declare that this submission is my own work and to the best of my knowledge it contains no materials previously published or written by another person, nor material which to a substantial extent has been accepted for the award of any other degree or diploma at UNSW or any other educational institution, except where due acknowledgement is made in the thesis. Any contribution made to the research by others, with whom I have worked at UNSW or elsewhere, is explicitly acknowledged in the thesis. I also declare that the intellectual content of this thesis is the product of my own work, except to the extent that assistance from others in the project's design and conception or in style, presentation and linguistic expression is acknowledged. (Signed)_________________________________ i Dedication To: My girl-friend (Jeslyn) for her love and caring, my parents for their endurance, and to my friends and colleagues for their understanding; because while doing this thesis project I did not manage to accompany them. And Lord Buddha for His great wisdom and compassion, and showing me the actual path to liberation. For this, I take refuge in the Buddha, the Dharma, and the Sangha. ii Acknowledgement There are too many people for their dedications and contributions to make this thesis work a reality; without whom some of the contents of this thesis would not have been realised. -

OEM CobraNet Module Design Guide

CDK-8 OEM CobraNet Module Design Guide Date 8/29/2008 Revision 1.1 © Attero Tech, LLC 1315 Directors Row, Suite 107, Ft Wayne, IN 46808 Phone 260-496-9668 • Fax 260-496-9879 620-00001-01 CDK-8 Design Guide Contents 1 – Overview 1.1 – Notes on Modules 2 – Digital Audio Interface Connectivity 2.1 – Pin Descriptions 2.1.1 – Audio clocks 2.1.2 – Digital audio 2.1.3 – Serial bridge 2.1.4 – Control -

Public Address System Network Design Considerations

AtlasIED APPLICATION NOTE Public Address System Network Design Considerations Background AtlasIED provides network based Public Address Systems (PAS) that are deployed on a wide variety of networks at end user facilities worldwide. As such, a primary factor, directly impacting the reliability of the PAS, is a properly configured, reliable, well-performing network on which the PAS resides/functions. AtlasIED relies solely upon the end user’s network owner/manager for the design, provision, configuration and maintenance of the network, in a manner that enables proper PAS functionability/functionality. Should the network on which the PAS resides be improperly designed, configured, maintained, malfunctions or undergoes changes or modifications, impacts to the reliability, functionality or stability of the PAS can be expected, resulting in system anomalies that are outside the control of AtlasIED. In such instances, AtlasIED can be a resource to, and support the end user’s network owner/manager in diagnosing the problems and restoring the PAS to a fully functioning and reliable state. However, for network related issues, AtlasIED would look to the end user to recover the costs associated with such activities. While AtlasIED should not be expected to actually design a facility’s network, nor make formal recommendations on specific network equipment to use, this application note provides factors to consider – best practices – when designing a network for public address equipment, along with some wisdom and possible pitfalls that have been gleaned from past experiences in deploying large scale systems. This application note is divided into the following sections: • Local Network – The network that typically hosts one announcement controller and its peripherals. -

A Performance Analysis for UMTS Packet Switched

International Journal of Next-Generation Networks (IJNGN), Vol.2, No.1, March 2010 A PERFORMANCE ANALYSIS FOR UMTS PACKET SWITCHED NETWORK BASED ON MULTIVARIATE KPIS Ye Ouyang and M. Hosein Fallah, Ph.D., P.E. Howe School of Technology Management Stevens Institute of Technology, Hoboken, NJ, USA 07030 [email protected] [email protected] ABSTRACT Mobile data services are penetrating mobile markets rapidly. The mobile industry relies heavily on data service to replace the traditional voice services with the evolution of the wireless technology and market. A reliable packet service network is critical to the mobile operators to maintain their core competence in data service market. Furthermore, mobile operators need to develop effective operational models to manage the varying mix of voice, data and video traffic on a single network. Application of statistical models could prove to be an effective approach. This paper first introduces the architecture of Universal Mobile Telecommunications System (UMTS) packet switched (PS) network and then applies multivariate statistical analysis to Key Performance Indicators (KPI) monitored from network entities in UMTS PS network to guide the long term capacity planning for the network. The approach proposed in this paper could be helpful to mobile operators in operating and maintaining their 3G packet switched networks for the long run. KEYWORDS UMTS; Packet Switch; Multidimensional Scaling; Network Operations, Correspondence Analysis; GGSN; SGSN; Correlation Analysis. 1. INTRODUCTION Packet switched domain of 3G UMTS network serves all data related services for the mobile subscribers. Nowadays people have a certain expectation for their experience of mobile data services that the mobile wireless environment has not fully met since the speed at which they can access their packet switching services has been limited. -

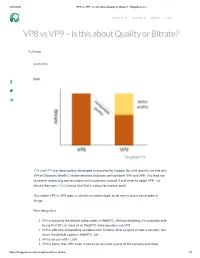

VP8 Vs VP9 – Is This About Quality Or Bitrate?

4/27/2020 VP8 vs VP9 - Is this about Quality or Bitrate? • BlogGeek.me VP8 vs VP9 – Is this about Quality or Bitrate? Technology 09/05/2016 Both. VP8 and VP9 are video codecs developed and pushed by Google. Up until recently, we had only VP8 in Chrome’s WebRTC implementation and now, we have both VP8 and VP9. This led me to several interesting conversations with customers around if and when to adopt VP9 – or should they use H.264 instead (but that’s a story for another post). This whole VP8 vs VP9 topic is usually misunderstood, so let me try to put some order in things. First things first: 1. VP8 is currently the default video codec in WebRTC. Without checking, it is probably safe to say that 90% or more of all WebRTC video sessions use VP8 2. VP9 is officially and publicly available from Chrome 49 or so (give or take a version). But it isn’t the default codec in WebRTC. Yet 3. VP8 is on par with H.264 4. VP9 is better than VP8 when it comes to resultant quality of the compressed video 5. VP8 takes up less resources (=CPU) to compress video With that in mind, the following can be deduced: You can use the migration to VP9 for one of two things (or both): 1. Improve the quality of your video experience 2. -

Action-Sound Latency: Are Our Tools Fast Enough?

Action-Sound Latency: Are Our Tools Fast Enough? Andrew P. McPherson, Robert H. Jack, Giulio Moro Centre for Digital Music, School of EECS, Queen Mary U. of London [email protected] [email protected] [email protected] ABSTRACT The importance of low and consistent latency in interactive music systems is well-established. So how do commonly-used tools for creating digital musical instruments and other tangible interfaces perform in terms of latency from user action to sound output? This paper examines several common configurations where a microcontroller (e.g. Arduino) or wireless device communicates with computer-based sound generator (e.g. Max/MSP, Pd). We find that, perhaps surprisingly, almost none of the tested configurations meet generally-accepted guidelines for latency and jitter. To address this limitation, the paper presents a new embedded platform, Bela, which is capable of complex audio and sen- [...] accepted guidelines for latency and jitter (variation in latency). Where previous papers have focused on latency in a single component (e.g. audio throughput latency [21, 20] and roundtrip network latency [17]), this paper measures the latency of complete platforms commonly employed to create digital musical instruments. Within these platforms we test the most reductive possible setup: producing an audio "click" in response to a change on a single digital or analog pin. This provides a minimum latency measurement for any tool created on that platform; naturally, the designer's application may introduce additional latency through filter group delay, block-based processing or other buffering. -

20.1 Data Sheet - Supported File Formats

20.1 Data Sheet - Supported File Formats Target release 20.1 Epic Document status DRAFT Document owner Dieter Van Rijsselbergen Designer Not applicable Architecture Dieter Van Rijsselbergen QA Assumptions Implementation of Avid proxy formats produced by Edge impose a number of known Avid-specific conversions. Avid proxies are under consideration and will be included upon binding commitment. Implementation of ingest through rewrapping (instead of transcoding) of formats with Avid-supported video and audio codecs are under consideration and will be included upon binding commitment. Implementation of ingest through transcoding to Avid-supported video codecs other than DNxHD or DNxHR are under consideration and will be included upon binding commitment. Limecraft Flow and Edge - Ingest File Formats # File Codecs and Variants Edge Flow Status Notes Container ingest ingest 1 MXF MXF OP1a Deployed No P2 spanned clips supported at the Sony XDCAM (DV25, MPEG moment. IMX codecs), XDCAM HD and Referencing original OP1a media from Flow XDCAM HD 422 AAFs is possible using AMA media linking in also for Canon Avid. C300/C500 and XF series AAF workflows for P2 are not implemented end-to-end yet. Sony XAVC (incl. XAVC Intra and XAVC-L codecs) ARRI Alexa MXF (DNxHD codec) AS-11 MXF (MPEG IMX/D10, AVC-I codecs) MXF OP-atom Deployed. P2 (DV codec) and P2 HD Only (DVCPro HD, AVC-I 50 and available AVC-I 100 codecs) in Edge. 1.1 MXF Sony RAW and X-OCN (XT, LT, Deployed. Due to the heavy data rates involved in ST) Only processing these files, a properly provisioned for Sony Venice, F65, available system is required, featuring fast storage PMW-F55, PMW-F5 and NEX in Edge. -

Speeding up VP9 Intra Encoder with Hierarchical Deep Learning Based Partition Prediction Somdyuti Paul, Andrey Norkin, and Alan C

IEEE TRANSACTIONS ON IMAGE PROCESSING 1 Speeding up VP9 Intra Encoder with Hierarchical Deep Learning Based Partition Prediction Somdyuti Paul, Andrey Norkin, and Alan C. Bovik Abstract: In VP9 video codec, the sizes of blocks are decided during encoding by recursively partitioning 64×64 superblocks using rate-distortion optimization (RDO). This process is computationally intensive because of the combinatorial search space of possible partitions of a superblock. Here, we propose a deep learning based alternative framework to predict the intra-mode superblock partitions in the form of a four-level partition tree, using a hierarchical fully convolutional network (H-FCN). We created a large database of VP9 superblocks and the corresponding partitions to train an H-FCN model, which was subsequently integrated with the VP9 encoder to reduce the intra-mode encoding time. The experimental results establish that our approach speeds up intra-mode encoding by 69.7% on average, at the expense of a 1.71% increase in the Bjøntegaard-Delta bitrate (BD-rate). While VP9 provides several built-in speed levels which are designed to provide faster encoding at the expense of decreased rate-distortion performance, we find that our model is able to outperform the fastest recommended speed level of the reference VP9 encoder for the good quality intra encoding configuration, in terms of both speedup and BD-rate. Fig. 1. Recursive partition of VP9 superblock at four levels showing the possible types of partition at each level. Index Terms: VP9, video encoding, block partitioning, intra prediction, convolutional neural networks, machine learning. supports partitioning a block into four square quadrants, VP9 I.