Web Searching. I: History and Basic Notions, Crawling; II: Link Analysis Techniques; III: Web Spam Page Identification

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Alan Emtage Coach Nick's Fav Tech Innovators

Coach Nick’s Fav Tech Innovators. Alan Emtage. Innovation - The Search Engine: In 1989, Alan Emtage conceived of and implemented Archie, the world’s first Internet search engine. In doing so, he pioneered many of the techniques used by public search engines today. Coach Nick Says: “The search engine was named after Archie, a famous comic book that I read as a kid! It changed the way people learn.” Ray Tomlinson. Innovation - E-mail: In 1971, Ray Tomlinson developed an e-mail program and introduced the @ sign in e-mail addresses. He is internationally known and credited as the inventor of email. Coach Nick Says: “When I worked in the WV State Department of Education, we started using e-mail in our high schools in 1984 as part of WVMEN, the first statewide micro-computing network in the Nation!” Doug Engelbart. Innovation - The Computer Mouse: Doug Engelbart invented the computer mouse in the early 1960s in his research lab at Stanford Research Institute (now SRI International). The first prototype was built in 1964, the patent application was filed in 1967, and the US patent was awarded in 1970. Coach Nick Says: “Steve Jobs acquired (some say stole) the mouse concept from the Xerox PARC Labs in Palo Alto in 1979 and changed the world.” Steve Wozniak & Steve Jobs. Innovation - The First Apple: The Apple I, also known as the Apple-1, was designed and hand-built by Steve Wozniak. Wozniak’s friend Steve Jobs had the idea of selling the computer.

Leaving Reality Behind Etoy Vs Etoys Com Other Battles to Control Cyberspace By: Adam Wishart Regula Bochsler ISBN: 0066210763 See Detail of This Book on Amazon.Com

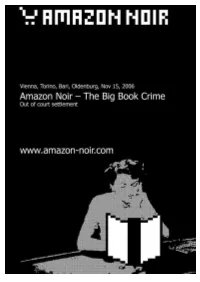

Leaving Reality Behind etoy vs eToys com other battles to control cyberspace By: Adam Wishart Regula Bochsler ISBN: 0066210763 See detail of this book on Amazon.com Book served by AMAZON NOIR (www.amazon-noir.com) project by: PAOLO CIRIO paolocirio.net UBERMORGEN.COM ubermorgen.com ALESSANDRO LUDOVICO neural.it Page 1 discovering a new toy "The new artist protests, he no longer paints." - Dadaist artist Tristan Tzara, Zurich, 1916 On the balmy evening of June 1, 1990, fleets of expensive cars pulled up outside the Zurich Opera House. Stepping out and passing through the pillared porticoes was a Who's Who of Swiss society (the head of state, national sports icons, former ministers and army generals), all of whom had come to celebrate the sixty-fifth birthday of Werner Spross, the owner of a huge horticultural business empire. As one of Zurich's wealthiest and best-connected men, it was perhaps fitting that 650 of his "close friends" had been invited to attend the event, a lavish banquet followed by a performance of Romeo and Juliet. Defiantly greeting the guests were 200 demonstrators standing in the square in front of the opera house. Mostly young, wearing scruffy clothes and sporting punky haircuts, they whistled and booed, angry that the opera house had been sold out, allowing itself for the first time to be taken over by a rich patron. They were also chanting slogans about the inequity of Swiss society and the wealth of Spross's guests. The glittering horde did its very best to ignore the disturbance. The protest had the added significance of being held on the tenth anniversary of the first spark of the city's most explosive youth revolt of recent years, The Movement.

Tim Berners-Lee

The Web: A Few Landmarks. Information compiled by Omer Pesquer - http://infonum.omer.mobi/ - @_omr Internet Mapping Project, Kevin Kelly, 1999, https://kk.org/ct2/the-internet-mapping-project Alan Levin's contribution to the Internet Mapping Project: https://www.flickr.com/photos/cogdog/24868066787/ Hypertext: "A file structure for the complex, the changing and the indeterminate", Ted Nelson, 1965 http://www.hyperfiction.org/texts/whatHypertextIs.pdf + https://fr.wikipedia.org/wiki/Hypertexte http://xanadu.com.au/ted/XUsurvey/xuDation.html THE INTERNET 2015 - THE OPTE PROJECT (July 2015) - Barrett Lyon http://www.opte.org/the-internet/ 1990... Universal access to a large universe of documents. In March 1989, Tim Berners-Lee submitted a proposal for a new information management system to his supervisor: http://info.cern.ch/Proposal.html On 6 August 1991, Tim Berners-Lee publicly announced the existence of the WorldWideWeb on "alt.hypertext": http://info.cern.ch The Web: "(Web) sites must be able to interact in a single, universal space." Tim Berners-Lee http://fr.wikiquote.org/wiki/Tim_Berners-Lee + http://www.w3.org/People/Berners-Lee/WorldWideWeb.html Les Horribles Cernettes - the first photo published on the World Wide Web, in 1992: http://en.wikipedia.org/wiki/Les_Horribles_Cernettes Historical World Wide Web logo by Robert Cailliau: http://fr.wikipedia.org/wiki/Fichier:WWW_logo_by_Robert_Cailliau.svg The website of the Ministry of Culture in November 1996; the logo is an animated GIF: https://twitter.com/_omr/status/711125480477487105 "An archaeology of the first museum websites in France": https://www.facebook.com/804024616337535/photos/?tab=album&album_id=1054783704594957 1990..

The People Who Invented the Internet Source: Wikipedia's History of the Internet

The People Who Invented the Internet Source: Wikipedia's History of the Internet PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 22 Sep 2012 02:49:54 UTC Contents Articles History of the Internet 1 Barry Appelman 26 Paul Baran 28 Vint Cerf 33 Danny Cohen (engineer) 41 David D. Clark 44 Steve Crocker 45 Donald Davies 47 Douglas Engelbart 49 Charles M. Herzfeld 56 Internet Engineering Task Force 58 Bob Kahn 61 Peter T. Kirstein 65 Leonard Kleinrock 66 John Klensin 70 J. C. R. Licklider 71 Jon Postel 77 Louis Pouzin 80 Lawrence Roberts (scientist) 81 John Romkey 84 Ivan Sutherland 85 Robert Taylor (computer scientist) 89 Ray Tomlinson 92 Oleg Vishnepolsky 94 Phil Zimmermann 96 References Article Sources and Contributors 99 Image Sources, Licenses and Contributors 102 Article Licenses License 103 History of the Internet The history of the Internet began with the development of electronic computers in the 1950s. This began with point-to-point communication between mainframe computers and terminals, expanded to point-to-point connections between computers and then early research into packet switching. Packet switched networks such as ARPANET, Mark I at NPL in the UK, CYCLADES, Merit Network, Tymnet, and Telenet, were developed in the late 1960s and early 1970s using a variety of protocols. The ARPANET in particular led to the development of protocols for internetworking, where multiple separate networks could be joined together into a network of networks. In 1982 the Internet Protocol Suite (TCP/IP) was standardized and the concept of a world-wide network of fully interconnected TCP/IP networks called the Internet was introduced.

BACHELOR'S THESIS Browsers and Their Influence on the Development of the

BACHELOR'S THESIS in the degree programme Informatik/Computer Science. Browser und ihr Einfluss auf die Entwicklung des Web (Browsers and Their Influence on the Development of the Web). Submitted by: Thomas Greiner. Student ID: 0910257037. Supervisor: Dipl.-Ing. Dr. Gerd Holweg. Gols, 15.01.2012. Statutory declaration: "I hereby declare in lieu of an oath that I have written this thesis independently. Ideas taken directly or indirectly from outside sources are identified as such. This thesis has not previously been submitted in the same or a similar form to any other examination authority, nor has it been published. I confirm that the submitted version matches the one in the upload tool." Gols, 15.01.2012. Place, date, signature. Abstract: The browser has become an indispensable part of today's computers. Since the emergence of the World Wide Web, and with it the first browser, there have been events that decisively changed the history of both. From the origin of the Web, through the first browser war between Microsoft and Netscape and the subsequent era of Internet Explorer 6, to the beginnings of the second browser war that still prevails today, shaping the Web into what we know today has always been a story of ups and downs. The question that arises is how much influence browsers have actually had on the development of the Web, or whether the Web may even have influenced the development of browsers. That is the central question behind this thesis. It is answered by combining current figures on the market shares of the respective browsers with statements by influential figures such as Steve Jobs, Eric Schmidt and Mitchell Baker, as well as the views of two Austrian entrepreneurs who report on their experiences with the Web.

DRI2020 Legal Sources and Information Retrieval

DRI2020 Legal Sources and Information Retrieval. Search engines: Credibility and visibility. Gisle Hannemyr, Ifi, autumn semester 2014. The origin of free-text search • A decision in Pennsylvania sometime in the late 1950s to replace the term “retarded child” in various statutes with the more politically correct “special child”. • Finding every occurrence manually was infeasible. • Parts of the statute collection had been keyed in on punched cards. John F. Horty had the database extended to cover the entire statute collection with commentary. • Source: John F. Horty, “Experience with the Application of Electronic Data Processing Systems in General Law”, Modern Uses of Logic in Law, December 1960. The Internet: The Resource discovery problem • The existence of digital resources on the Internet led to formulation of “The Resource Discovery Problem”. • First formulated by Alan Emtage and Peter Deutsch in Archie - an Electronic Directory Service for the Internet [1] (1992): «Before a user can effectively exploit any of the services offered by the Internet community or access any information provided by such services, that user must be aware of both the existence of the service and the host or hosts on which it is available.» [1] Archie was a search engine into ftp-space that pre-dated any web-oriented search engines. The Resource discovery problem • So the resource discovery problem encompasses not only establishing the existence and location of resources, but also: if the discovery process yields pointers to several alternative resources, some means to qualify them and to identify the resource or resources that provide the “best fit” for the problem at hand.

List of Internet Pioneers

List of Internet pioneers. Instead of a single "inventor", the Internet was developed by many people over many years. The following are some Internet pioneers who contributed to its early development. These include early theoretical foundations, specifying original protocols, and expansion beyond a research tool to wide deployment. Contents: The pioneers: Claude Shannon, Vannevar Bush, J. C. R. Licklider, Paul Baran, Donald Davies, Charles M. Herzfeld, Bob Taylor, Larry Roberts, Leonard Kleinrock, Bob Kahn, Douglas Engelbart, Elizabeth Feinler, Louis Pouzin, John Klensin, Vint Cerf, Yogen Dalal, Peter Kirstein, Steve Crocker, Jon Postel. The pioneers. Claude Shannon: Claude Shannon (1916–2001), called the "father of modern information theory", published "A Mathematical Theory of Communication" in 1948. His paper gave a formal way of studying communication channels. It established fundamental limits on the efficiency of communication over noisy channels, and presented the challenge of finding families of codes to achieve capacity.[1] Vannevar Bush: Vannevar Bush (1890–1974) helped to establish a partnership between U.S. military, university research, and independent think tanks. He was appointed Chairman of the National Defense Research Committee in 1940 by President Franklin D. Roosevelt, appointed Director of the Office of Scientific Research and Development in 1941, and from 1946 to 1947, he served as chairman of the Joint Research and Development Board. Out of this would come DARPA, which in turn would lead to the ARPANET Project.[2] His July 1945 Atlantic Monthly article "As We May Think" proposed Memex, a theoretical proto-hypertext computer system in which an individual compresses and stores all of their books, records, and communications, which is then mechanized so that it may be consulted with exceeding speed and flexibility.[3]

Use of Theses

THESES SIS/LIBRARY, R.G. MENZIES LIBRARY BUILDING NO:2, THE AUSTRALIAN NATIONAL UNIVERSITY, CANBERRA ACT 0200 AUSTRALIA. TELEPHONE: +61 2 6125 4631 FACSIMILE: +61 2 6125 4063 EMAIL: [email protected] USE OF THESES This copy is supplied for purposes of private study and research only. Passages from the thesis may not be copied or closely paraphrased without the written consent of the author. The Irony of the Information Age: US Power and the Internet in International Relations. Madeline Carr, May, 2011. A thesis submitted for the degree of Doctor of Philosophy of The Australian National University. Acknowledgements: What a journey! It feels amazing to be writing this page. This has been an exercise in patience, perseverance and personal growth and I'm extremely grateful for the lessons I have learned throughout the past few years. Writing a doctoral thesis is challenging on many levels but I also considered it to be an extraordinary privilege. It is a time of reading, thinking and writing which I realize I was very fortunate to be able to indulge in and I have tried to remain very aware of that privilege. I began my PhD candidature with four others - Lacy Davey, Jason Hall, Jae-Jeok Park and Tomohiko Satake. The five of us spent many, many hours together reading one another's work, challenging one another's ideas and supporting one another through the ups and downs of life as a PhD candidate. We established a bond which will last our lifetimes and I count them dearly amongst the wonderful outcomes of this experience.

The Commodification of Search

San Jose State University SJSU ScholarWorks Master's Theses Master's Theses and Graduate Research 2008 The commodification of search Hsiao-Yin Chen San Jose State University Follow this and additional works at: https://scholarworks.sjsu.edu/etd_theses Recommended Citation Chen, Hsiao-Yin, "The commodification of search" (2008). Master's Theses. 3593. DOI: https://doi.org/10.31979/etd.wnaq-h6sz https://scholarworks.sjsu.edu/etd_theses/3593 This Thesis is brought to you for free and open access by the Master's Theses and Graduate Research at SJSU ScholarWorks. It has been accepted for inclusion in Master's Theses by an authorized administrator of SJSU ScholarWorks. For more information, please contact [email protected]. THE COMMODIFICATION OF SEARCH A Thesis Presented to The School of Journalism and Mass Communications San Jose State University In Partial Fulfillment of the Requirement for the Degree Master of Science by Hsiao-Yin Chen December 2008 UMI Number: 1463396 INFORMATION TO USERS The quality of this reproduction is dependent upon the quality of the copy submitted. Broken or indistinct print, colored or poor quality illustrations and photographs, print bleed-through, substandard margins, and improper alignment can adversely affect reproduction. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if unauthorized copyright material had to be removed, a note will indicate the deletion. UMI Microform 1463396 Copyright 2009 by ProQuest LLC. All rights reserved. This microform edition is protected against unauthorized copying under Title 17, United States Code. ProQuest LLC 789 E.

Issue 23, 2019

UWI Cave Hill Campus. ISSUE 23, September 2019. Student-Athlete Extraordinaire; Redonda Restoration; Lessons in the Key of Life. A PUBLICATION OF THE UNIVERSITY OF THE WEST INDIES, CAVE HILL CAMPUS, BARBADOS. We welcome your comments and feedback which can be directed to [email protected] or CHILL c/o Office of Public Information, The UWI, Cave Hill Campus, Bridgetown BB11000 Barbados. Tel: (246) 417-4076/77. EDITOR: Chelston Lovell. CONSULTANT EDITOR: Ann St. Hill. PHOTO EDITORS: Rasheeta Dorant & Brian Elcock. CONTRIBUTORS: Professor Eudine Barriteau, PhD, GCM; Dwayne Devonish, PhD; Franchero Ellis; Caribbean Science Foundation; April A. Contents. DISCOURSE: 1 Education: A Renewable Resource. NEWS: 2 Highest Seal of Approval; 3 Transformative Education Key to Economic Growth; 4 Promoting Homegrown IT Solutions; 5 Caribbean Science Foundation Attends Clinton Foundation Conference; 6 Supporting Regional Civil Servant Development; 7 Expanding Vistas into Japan; 8 A Call to Come Home; 10 Student-Athlete Extraordinaire; 12 Youth Internet Forum; 13 Senior Staff on the Move. IN FOCUS: 14 Cave Hill Provides Medical Cannabis Training; 15 More Dorm Space on the Cards; 16 People Empowerment; 17 Writing Across the Curriculum; 19 Transport Gift Strengthens All-inclusive; 47 ‘Workload’ of Diabetes Greater than that of HIV. PUBLICATIONS: 48 Kamugisha Goes Beyond Coloniality; 49 Nuts and Bolts of Researching; 50 Stronger Together; 51 Urging a Bigger Role from Civil Society; 52 Watson Interrogates Barrow; 53 Management Under Scrutiny; 54 Pan-Africanism: A History; 54 Pioneering a Shipshape Enterprise. OUTREACH: 55 Regional Ministers Discuss Climate Change Threats; 57 Law for Development; 58 Blackbirds Conquer Ocean Challenge; 60 SALISES Conference Celebrates & Rethinks Caribbean Futures. AWARDS: 61 Dr. Madhuvanti Murphy’s Research Win; 62 Recognising Unsung Heroes; 63 Six Certified in Ethereum Blockchain.

Internet Accuracy Is Information on the Web Reliable?

CQ Researcher. Published by CQ Press, a division of SAGE Publications. www.cqresearcher.com Internet Accuracy: Is information on the Web reliable? The Internet has been a huge boon for information-seekers. In addition to sites maintained by newspapers and other traditional news sources, there are untraditional sources ranging from videos, personal Web pages and blogs to postings by interest groups of all kinds, from government agencies to hate groups. But experts caution that determining the credibility of online data can be tricky, and that critical-reading skills are not being taught in most schools. In the new online age, readers no longer have the luxury of depending on a reference librarian’s expertise in finding reliable sources. Anyone can post an article, book or opinion online with no second pair of eyes checking it for accuracy, as in traditional publishing and journalism. Now many readers are turning to user-created sources like Wikipedia, or powerful search engines like Google, which tally how many people previously have accessed online documents and sources, a process that is open to manipulation. Photo caption: Wikipedia banned Stephen Colbert, host of Comedy Central’s “The Colbert Report,” from editing articles on the popular online site after he made joke edits. INSIDE THIS REPORT: THE ISSUES 627; BACKGROUND 634; CHRONOLOGY 635; CURRENT SITUATION 640; AT ISSUE 641; OUTLOOK 643; BIBLIOGRAPHY 646; THE NEXT STEP 647. CQ Researcher • Aug. 1, 2008 • www.cqresearcher.com • Volume 18, Number 27 • Pages 625-648. RECIPIENT OF SOCIETY OF PROFESSIONAL JOURNALISTS AWARD FOR EXCELLENCE • AMERICAN BAR ASSOCIATION SILVER GAVEL AWARD. INTERNET ACCURACY CQ Researcher Aug.

World Wide Web - Wikipedia, the Free Encyclopedia

World Wide Web - Wikipedia, the free encyclopedia http://en.wikipedia.org/w/index.php?title=World_Wide_Web&printabl... World Wide Web. From Wikipedia, the free encyclopedia. The World Wide Web, abbreviated as WWW and commonly known as the Web, is a system of interlinked hypertext documents accessed via the Internet. With a web browser, one can view web pages that may contain text, images, videos, and other multimedia and navigate between them by using hyperlinks. Using concepts from earlier hypertext systems, British engineer and computer scientist Sir Tim Berners-Lee, now the Director of the World Wide Web Consortium, wrote a proposal in March 1989 for what would eventually become the World Wide Web.[1] He was later joined by Belgian computer scientist Robert Cailliau while both were working at CERN in Geneva, Switzerland. In 1990, they proposed using "HyperText [...] to link and access information of various kinds as a web of nodes in which the user can browse at will",[2] and released that web in December.[3] "The World-Wide Web (W3) was developed to be a pool of human knowledge, which would allow collaborators in remote sites to share their ideas and all aspects of a common project."[4] If two projects are independently created, rather than have a central figure make the changes, the two bodies of information could form into one cohesive piece of work. Contents: 1 History; 2 Function (2.1 What does W3 define?; 2.2 Linking; 2.3 Ajax updates; 2.4 WWW prefix); 3 Privacy; 4 Security; 5 Standards; 6 Accessibility; 7 Internationalization; 8 Statistics; 9 Speed issues; 10 Caching; 11 See also; 12 Notes; 13 References; 14 External links. History. Main article: History of the World Wide Web. In March 1989, Tim Berners-Lee wrote a proposal [5] that referenced ENQUIRE, a database and