Terrorism & Internet Governance

Recommended publications

-

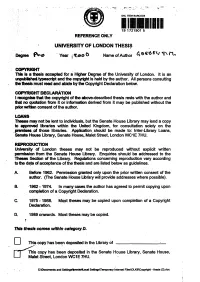

This Thesis Comes Within Category D

SHL ITEM BARCODE 19 1721901 5 REFERENCE ONLY UNIVERSITY OF LONDON THESIS Degree Year Name of Author COPYRIGHT This is a thesis accepted for a Higher Degree of the University of London. It is an unpublished typescript and the copyright is held by the author. All persons consulting the thesis must read and abide by the Copyright Declaration below. COPYRIGHT DECLARATION I recognise that the copyright of the above-described thesis rests with the author and that no quotation from it or information derived from it may be published without the prior written consent of the author. LOANS Theses may not be lent to individuals, but the Senate House Library may lend a copy to approved libraries within the United Kingdom, for consultation solely on the premises of those libraries. Application should be made to: Inter-Library Loans, Senate House Library, Senate House, Malet Street, London WC1E 7HU. REPRODUCTION University of London theses may not be reproduced without explicit written permission from the Senate House Library. Enquiries should be addressed to the Theses Section of the Library. Regulations concerning reproduction vary according to the date of acceptance of the thesis and are listed below as guidelines. A. Before 1962. Permission granted only upon the prior written consent of the author. (The Senate House Library will provide addresses where possible). B. 1962-1974. In many cases the author has agreed to permit copying upon completion of a Copyright Declaration. C. 1975-1988. Most theses may be copied upon completion of a Copyright Declaration. D. 1989 onwards. Most theses may be copied.

Argumentative Euphemisms, Political Correctness and Relevance

Argumentative Euphemisms, Political Correctness and Relevance. Thesis presented to the Faculty of Letters and Human Sciences, Institute of Language and Communication Sciences, Université de Neuchâtel, for the degree of Doctor of Letters, by Andriy Sytnyk. Thesis supervisor: Professor Louis de Saussure, Université de Neuchâtel. Examiners: Dr. Christopher Hart, Senior Lecturer, Lancaster University; Dr. Steve Oswald, Lecturer, Université de Fribourg; Dr. Manuel Padilla Cruz, Professor, Universidad de Sevilla. Thesis defended on 17 September 2014. Université de Neuchâtel, 2014. Key words: euphemisms, political correctness, taboo, connotations, Relevance Theory, neo-Gricean pragmatics. Abstract The account presented in the thesis combines insights from relevance-theoretic (Sperber and Wilson 1995) and neo-Gricean (Levinson 2000) pragmatics in arguing that a specific euphemistic effect is derived whenever it is mutually manifest to participants of a communicative exchange that a speaker is trying to be indirect by avoiding some dispreferred saliently unexpressed alternative lexical unit(s). This effect is derived when the indirectness is not conventionally associated with the particular linguistic form-trigger relative to some context of use and, therefore, stands out as marked in discourse. The central theoretical claim of the thesis is that the cognitive processing of utterances containing novel euphemistic/politically correct locutions involves meta-representations of saliently unexpressed dispreferred alternatives, as part of relevance-driven recognition of speaker intentions. It is argued that hearers are “invited” to infer the salient dispreferred alternatives in the process of deriving explicatures of utterances containing lexical units triggering euphemistic/politically correct interpretations.

Page Proof Instructions and Queries

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by The IT University of Copenhagen's Repository Page Proof Instructions and Queries Journal Title: Current Sociology 837536CSI Article Number: 837536 Thank you for choosing to publish with us. This is your final opportunity to ensure your article will be accurate at publication. Please review your proof carefully and respond to the queries using the circled tools in the image below, which are available by clicking “Comment” from the right-side menu in Adobe Reader DC.* Please use only the tools circled in the image, as edits via other tools/methods can be lost during file conversion. For comments, questions, or formatting requests, please use . Please do not use comment bubbles/sticky notes. *If you do not see these tools, please ensure you have opened this file with Adobe Reader DC, available for free at https://get.adobe.com/reader or by going to Help > Check for Updates within other versions of Reader. For more detailed instructions, please see https://us.sagepub.com/ReaderXProofs. No. Query: No queries. Please note, only ORCID iDs validated prior to acceptance will be authorized for publication; we are unable to add or amend ORCID iDs at this stage. Please confirm that all author information, including names, affiliations, sequence, and contact details, is correct. Please review the entire document for typographical errors, mathematical errors, and any other necessary corrections; check headings, tables, and figures. Please ensure that you have obtained and enclosed all necessary permissions for the reproduction of art works (e.g.

"Founding Fathers" in American History Dissertations

EVOLVING OUR HEROES: AN ANALYSIS OF FOUNDERS AND "FOUNDING FATHERS" IN AMERICAN HISTORY DISSERTATIONS John M. Stawicki A Thesis Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of MASTER OF ARTS December 2019 Committee: Andrew Schocket, Advisor Ruth Herndon Scott Martin © 2019 John Stawicki All Rights Reserved ABSTRACT Andrew Schocket, Advisor This thesis studies scholarly memory of the American founders and “Founding Fathers” via inclusion in American dissertations. Using eighty-one semi-randomly and diversely selected founders as case subjects to examine and trace how individual, group, and collective founder interest evolved over time, this thesis uniquely analyzes 20th and 21st Century Revolutionary American scholarship on the founders by dividing it into five distinct periods, with the most recent period coinciding with “founders chic.” Using data analysis and topic modeling, this thesis engages three primary historiographic questions: What founders are most prevalent in Revolutionary scholarship? Are social, cultural, and “from below” histories increasing? And if said histories are increasing, are the “New Founders,” individuals only recently considered vital to the era, posited by these histories outnumbering the Top Seven Founders (George Washington, Thomas Jefferson, John Adams, James Madison, Alexander Hamilton, Benjamin Franklin, and Thomas Paine) in founder scholarship? The thesis concludes that the Top Seven Founders have always dominated founder dissertation scholarship, that social, cultural, and “from below” histories are increasing, and that social categorical and “New Founder” histories are steadily increasing as Top Seven Founder studies are slowly decreasing, trends that may shift the Revolutionary America field away from the Top Seven Founders in future years, but are not yet significantly doing so.

Fake News Outbreak 2021: Can We Stop the Viral Spread?

SOK: Fake News Outbreak 2021: Can We Stop the Viral Spread? TANVEER KHAN, Tampere University ANTONIS MICHALAS, Tampere University ADNAN AKHUNZADA, Technical University of Denmark Social Networks’ omnipresence and ease of use have revolutionized the generation and distribution of information in today’s world. However, easy access to information does not equal an increased level of public knowledge. Unlike traditional media channels, social networks also facilitate faster and wider spread of disinformation and misinformation. Viral spread of false information has serious implications for the behaviours, attitudes and beliefs of the public, and ultimately can seriously endanger the democratic processes. Limiting false information’s negative impact through early detection and control of extensive spread presents the main challenge facing researchers today. In this survey paper, we extensively analyse a wide range of different solutions for the early detection of fake news in the existing literature. More precisely, we examine Machine Learning (ML) models for the identification and classification of fake news, online fake news detection competitions, statistical outputs as well as the advantages and disadvantages of some of the available data sets. Finally, we evaluate the online web browsing tools available for detecting and mitigating fake news and present some open research challenges. 1 INTRODUCTION The popularity of Online Social Networks (OSNs) has rapidly increased in recent years. Social media has shaped the digital world to such an extent that it is now an indispensable part of life for most of us [54]. Rapid and extensive adoption of online services is influencing and changing how we access information, how we organize to demand political change and how we find partners.

Anatomy of a Buzzword: the Emergence of ‘The Water-Energy-Food Nexus’ in UK Natural Resource Debates

Anatomy of a buzzword: the emergence of ‘the water-energy-food nexus’ in UK natural resource debates Article (Accepted Version) Cairns, Rose and Krzywoszynska, Anna (2016) Anatomy of a buzzword: the emergence of ‘the water-energy-food nexus’ in UK natural resource debates. Environmental Science and Policy, 64. pp. 164-170. ISSN 1462-9011 This version is available from Sussex Research Online: http://sro.sussex.ac.uk/id/eprint/63663/ This document is made available in accordance with publisher policies and may differ from the published version or from the version of record. If you wish to cite this item you are advised to consult the publisher’s version. Please see the URL above for details on accessing the published version. Copyright and reuse: Sussex Research Online is a digital repository of the research output of the University. Copyright and all moral rights to the version of the paper presented here belong to the individual author(s) and/or other copyright owners. To the extent reasonable and practicable, the material made available in SRO has been checked for eligibility before being made available. Copies of full text items generally can be reproduced, displayed or performed and given to third parties in any format or medium for personal research or study, educational, or not-for-profit purposes without prior permission or charge, provided that the authors, title and full bibliographic details are credited, a hyperlink and/or URL is given for the original metadata page and the content is not changed in any way. http://sro.sussex.ac.uk This is a pre-print, post-review version of the article.

Socialist Sacrilege: the Provocative Contributions of George Bernard

SOCIALIST SACRILEGE: THE PROVOCATIVE CONTRIBUTIONS OF GEORGE BERNARD SHAW AND GEORGE ORWELL TO SOCIALISM IN THE 20TH CENTURY A Thesis Presented to The Graduate Faculty of The University of Akron In Partial Fulfillment of the Requirements for the Degree Master of Arts Matthew Fleagle August, 2009 Thesis Approved: Advisor, Dr. Alan Ambrisco; Faculty Reader, Dr. Hillary Nunn; Faculty Reader, Mr. Robert Pope; Department Chair, Dr. Michael Schuldiner. Accepted: Dean of the College, Dr. Chand Midha; Dean of the Graduate School, Dr. George R. Newkome. TABLE OF CONTENTS CHAPTER I. THE TRICKLE-DOWN SOCIALISM OF SHAW (Works Cited) II. THE RADICAL AMONG REVOLUTIONARIES (Works Cited) III. MARXIST COLLECTIVISM AND THE LITERARY AESTHETIC (Works Cited) CHAPTER I THE TRICKLE-DOWN

Declining Journalism Freedom in Turkey

The University of Maine DigitalCommons@UMaine Honors College Spring 5-2018 Declining Journalism Freedom in Turkey Aliya Uteuova University of Maine, [email protected] Follow this and additional works at: https://digitalcommons.library.umaine.edu/honors Part of the Journalism Studies Commons, and the Political Science Commons Recommended Citation Uteuova, Aliya, "Declining Journalism Freedom in Turkey" (2018). Honors College. 464. https://digitalcommons.library.umaine.edu/honors/464 This Honors Thesis is brought to you for free and open access by DigitalCommons@UMaine. It has been accepted for inclusion in Honors College by an authorized administrator of DigitalCommons@UMaine. For more information, please contact [email protected]. DECLINING JOURNALISTIC FREEDOM IN TURKEY by Aliya Uteuova A Thesis Submitted in Partial Fulfillment of the Requirements for a Degree with Honors (Political Science and Journalism) The Honors College University of Maine May 2018 Advisory Committee: James W. Warhola, Professor of Political Science, Advisor Paul Holman, Adjunct Professor of Political Science Jordan LaBouff, Associate Professor of Psychology and Honors Holly Schreiber, Assistant Professor of Communication and Journalism Seth Singleton, Adjunct Professor and Libra Professor of International Relations © 2018 Aliya Uteuova All Rights Reserved ABSTRACT Currently, Turkey is the country with the most jailed journalists. According to the Journalists Union of Turkey, 145 journalists and media workers are in prison as of February 2018. In the decades that press freedom has been monitored in Turkey, the suppression of the press and the violations of free expression rights under the regime of Recep Tayyip Erdogan are unprecedented. Turkey once had the potential to emerge as the first modern democracy in a Muslim majority nation.

Banished Words Posters

LSSU Issues List Of Banished Words For 2003 January 1st, 2003 SAULT STE. MARIE, Mich. – 'Make no mistakes about it,' Lake Superior State University issued its 28th annual 'extreme' List of Words Banished from the Queen's English for Mis-Use, Over-Use and General Uselessness, which the world needs 'now, more than ever.' LSSU has been compiling the list since 1976, choosing from nominations sent from around the world. This year, words and phrases were pulled from a record 3,000 nominations. Most were sent through the school's website: http://www.lssu.edu/banished. Word-watchers pull nominations throughout the year from everyday speech, as well as from the news, fields of education, technology, advertising, politics, and more. A committee gathers the entries and chooses the best in December. The list is released on New Year's Day. The complete 2003 list follows: POLITICS AND THE MEDIA MATERIAL BREACH -- "Suggests an obstetrical complication that pulls a physician off the golf course," says a nominator from Washington, D.C. Sounds like contract lawyer-speak rather than the world-worn parlance of war planners and diplomats. At one time, UN resolutions were violated. Violators were held in contempt. How long until treaties are ripped up in the presence of attorneys? MUST-SEE TV -- "Must find remote. Must change channel," laments Nan Heflin from Colorado Springs, Colorado. Television once pitched entertainment. Apparently now it's taken on a greater imperative. Assumes herd mentality over program taste. UNTIMELY DEATH -- Balky attempt to make some deaths more tragic than others. "Has anyone yet died a timely death?" asks Donald Burgess of South Pasadena, California.

Remembering and Forgetting the War

Remembering and Forgetting the War: Elite Mythmaking, Mass Reaction, and Sino-Japanese Relations, 1950–2006 YINAN HE Ruling elites often make pernicious national myths for instrumental purposes, creating divergent historical memories of the same events in different countries. But they tend to exploit international history disputes only when they feel insecure domestically. Societal reactions to elite mythmaking, reflected in radicalized public opinion, can reinforce history disputes. During the 1950s–1970s, China avoided history disputes with Japan to focus on geostrategic interests. Only from the early 1980s did domestic political incentives motivate Beijing to attack Japanese historical memory and promote assertive nationalism through patriotic history propaganda, which radicalized Chinese popular views about Japan. Media highlighting of Japan’s historical revisionism exacerbated societal demands to settle war accounts with Japan, while factional politics within the Chinese Communist Party made it difficult for the top leaders to compromise on the bilateral “history issue.” INTRODUCTION On 13 August 2001 Japan’s new prime minister, Koizumi Junichiro, paid homage at the Shintoist Yasukuni Shrine in Tokyo, dedicated to the spirits of those who died fighting on behalf of the Emperor of Japan, and a long-time symbol of Japanese imperialist aggression in the eyes of China. While he claimed that his visit to the shrine was intended to “convey to all victims of the war my heartfelt repentance and condolences” and “pledge for peace,” it was immediately denounced by the Chinese government as an “erroneous act that has damaged the political foundation of Sino-Japanese relations as well as the feelings of the Chinese people and other Asian victims.” Nonetheless, Koizumi continued his annual visits to the shrine until shortly before stepping down in September 2006.

Manfred B. Steger: Political Ideologies and Social Imaginaries in the Global Age

MANFRED B. STEGER Political Ideologies and Social Imaginaries in the Global Age Abstract: This article argues that the proliferation of prefixes like ‘neo’ and ‘post’ that adorn conventional ‘isms’ has cast a long shadow on the contemporary relevance of traditional political ideologies. Suggesting that there is, indeed, something new about today’s political belief systems, the essay draws on the concept of ‘social imaginaries’ to make sense of the changing nature of the contemporary ideological landscape. The core thesis presented here is that today’s ideologies are increasingly translating the rising global imaginary into competing political programs and agendas. But these subjective dynamics of denationalization at the heart of globalization have not yet dispensed with the declining national imaginary. The twenty-first century promises to be an ideational interregnum in which both the global and national stimulate people’s deep-seated understandings of community. Suggesting a new classification scheme dividing contemporary political ideologies into ‘market globalism’, ‘justice globalism’, and ‘jihadist globalism’, the article ends with a brief assessment of the main ideological features of justice globalism. KEY WORDS: globalization, global imaginary, national imaginary, ideology, market globalism, justice globalism, global justice, jihadist globalism, American Empire, modernity, proliferation of prefixes. Introduction The defeat of Nazi Germany in 1945 and the collapse of the Soviet Empire in 1991 enticed scores of Western commentators to relegate ‘ideology’ to the dustbin of history. Proclaiming a radically new era in human history, they argued that ideology had ended with the final triumph of liberal capitalism. This dream of a universal set of political ideas ruling the world came crashing down with the Twin Towers of the World Trade Center on September 11, 2001.

Business Buzzwords (Buzzword: Modewort, Schlagwort)

Business buzzwords (buzzword: Modewort, Schlagwort)

Acculturation: A stilted compound word that means getting accustomed to a new environment. Westerners in Japan start the process by learning to eat with chopsticks. Managers in a new corporate culture start the process by trying to understand what the chairman wants.

Ad hocracy: The tendency to substitute ad hoc, or short-term, initiatives for serious, rational plans. Such behavior works in an emergency, but when institutionalized it becomes a problem.

Armchair economics: Forecasting into virgin territory; any bold economic prediction based on insufficient data. A good example is the economic waffling over the impact of Europe's post-1992 single market; no region has tried such an ambitious integration of economic forces before, thus the results are necessarily a matter of guesswork.

Basket case: A person, a company or any effort that has collapsed so hopelessly that it would have to be loaded into a basket to continue. Figuratively speaking, the Soviet economy is a basket case. So are many state-owned companies in Europe.

Binary thinker: Another gem inspired by computer technology, this describes the person who tends to think in absolute terms (yes and no, black and white, good and bad) with no nuances in between. The binary feature of the computer is its capacity to answer all commands with a series of on-off, 0-1 type choices that eventually formulate a reply. The binary feature of the thinker is the ability to avoid gray areas. Everything in life becomes a yes-or-no proposition.

This is what tough guys do when the pressure is on.