Disinformation and Propaganda – Impact on the Functioning of the Rule of Law in the EU and Its Member States

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

An Examination of the Impact of Astroturfing on Nationalism: A

social sciences Article An Examination of the Impact of Astroturfing on Nationalism: A Persuasion Knowledge Perspective Kenneth M. Henrie 1,* and Christian Gilde 2 1 Stiller School of Business, Champlain College, Burlington, VT 05401, USA 2 Department of Business and Technology, University of Montana Western, Dillon, MT 59725, USA * Correspondence: Tel.: +1-802-865-8446 Received: 7 December 2018; Accepted: 23 January 2019; Published: 28 January 2019 Abstract: One communication approach that lately has become more common is astroturfing, which has been more prominent since the proliferation of social media platforms. In this context, astroturfing is a fake grass-roots political campaign that aims to manipulate a certain audience. This exploratory research examined how effective astroturfing is in mitigating citizens’ natural defenses against politically persuasive messages. An experimental method was used to examine the persuasiveness of social media messages related to coal energy in their ability to persuade citizens and increase their level of nationalism. The results suggest that citizens are more likely to be persuaded by an astroturfed message than people who are exposed to a non-astroturfed message, regardless of their political leanings. However, the messages were not successful in altering an individual’s nationalistic views at the moment of exposure. The authors discuss these findings and propose how in a long-term context, astroturfing is a dangerous addition to persuasive communication. Keywords: astroturfing; persuasion knowledge; nationalism; coal energy; social media 1. Introduction Astroturfing is the simulation of a political campaign which is intended to manipulate the target audience. Often, the purpose of such a campaign is not clearly recognizable because a political effort is disguised as a grassroots operation. -

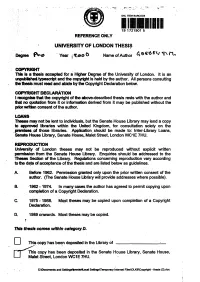

This Thesis Comes Within Category D

* SHL ITEM BARCODE 19 1721901 5 REFERENCE ONLY UNIVERSITY OF LONDON THESIS Degree Year Name of Author COPYRIGHT This is a thesis accepted for a Higher Degree of the University of London. It is an unpublished typescript and the copyright is held by the author. All persons consulting the thesis must read and abide by the Copyright Declaration below. COPYRIGHT DECLARATION I recognise that the copyright of the above-described thesis rests with the author and that no quotation from it or information derived from it may be published without the prior written consent of the author. LOANS Theses may not be lent to individuals, but the Senate House Library may lend a copy to approved libraries within the United Kingdom, for consultation solely on the premises of those libraries. Application should be made to: Inter-Library Loans, Senate House Library, Senate House, Malet Street, London WC1E 7HU. REPRODUCTION University of London theses may not be reproduced without explicit written permission from the Senate House Library. Enquiries should be addressed to the Theses Section of the Library. Regulations concerning reproduction vary according to the date of acceptance of the thesis and are listed below as guidelines. A. Before 1962. Permission granted only upon the prior written consent of the author. (The Senate House Library will provide addresses where possible). B. 1962-1974. In many cases the author has agreed to permit copying upon completion of a Copyright Declaration. C. 1975-1988. Most theses may be copied upon completion of a Copyright Declaration. D. 1989 onwards. Most theses may be copied. -

Post-Truth Politics and Richard Rorty's Postmodernist Bourgeois Liberalism

Ash Center Occasional Papers Tony Saich, Series Editor Something Has Cracked: Post-Truth Politics and Richard Rorty’s Postmodernist Bourgeois Liberalism Joshua Forstenzer University of Sheffield (UK) July 2018 Ash Center for Democratic Governance and Innovation Harvard Kennedy School Ash Center Occasional Papers Series Series Editor Tony Saich Deputy Editor Jessica Engelman The Roy and Lila Ash Center for Democratic Governance and Innovation advances excellence and innovation in governance and public policy through research, education, and public discussion. By training the very best leaders, developing powerful new ideas, and disseminating innovative solutions and institutional reforms, the Center’s goal is to meet the profound challenges facing the world’s citizens. The Ford Foundation is a founding donor of the Center. Additional information about the Ash Center is available at ash.harvard.edu. This research paper is one in a series funded by the Ash Center for Democratic Governance and Innovation at Harvard University’s John F. Kennedy School of Government. The views expressed in the Ash Center Occasional Papers Series are those of the author(s) and do not necessarily reflect those of the John F. Kennedy School of Government or of Harvard University. The papers in this series are intended to elicit feedback and to encourage debate on important public policy challenges. This paper is copyrighted by the author(s). It cannot be reproduced or reused without permission. Letter from the Editor The Roy and Lila Ash Center for Democratic Governance and Innovation advances excellence and innovation in governance and public policy through research, education, and public discussion. -

Freepint Report: Product Review of Factiva

FreePint Report: Product Review of Factiva December 2014 Product Review of Factiva In-depth, independent review of the product, plus links to related resources “...has some 32,000 sources spanning all forms of content of which thousands are not available on the free web. Some source archives go back to 1951...” [SAMPLE] www.freepint.com © Free Pint Limited 2014 Contents: Introduction & Contact Details; Sources - Content and Coverage; Technology - Search and User Interface; Technology - Outputs, Analytics, Alerts, Help; Value - Competitors, Development & Pricing; FreePint Buyer’s Guide: News; Other Products; About the Reviewer. About this Report FreePint raises the value of information in the enterprise, by publishing articles, reports and resources that support information sources, information technology and information value. A FreePint Subscription provides customers with full access to everything we publish. Customers can share individual articles and reports with anyone at their organisations as part of the terms and conditions of their license. Some license levels also enable customers to place materials on their intranets. To learn more about FreePint, visit http://www.freepint.com/ Disclaimer FreePint Report: Product Review of Factiva (ISBN 978-1-78123-181-4) is a FreePint report published by Free Pint Limited. The opinions, advice, products and services offered herein are the sole responsibility of the contributors. Whilst all reasonable care has been taken to ensure the accuracy of the publication, the publishers cannot accept responsibility for any errors or omissions. Except as covered by subscriber or purchaser licence agreement, this publication MAY NOT be copied and/or distributed without the prior written agreement of the publishers. -

Political Astroturfing Across the World

Political astroturfing across the world Franziska B. Keller∗ David Schoch† Sebastian Stier‡ JungHwan Yang§ (∗Hong Kong University of Science and Technology; †The University of Manchester; ‡GESIS – Leibniz Institute for the Social Sciences; §University of Illinois at Urbana-Champaign) Paper prepared for the Harvard Disinformation Workshop Update 1 Introduction At the very latest since the Russian Internet Research Agency’s (IRA) intervention in the U.S. presidential election, scholars and the broader public have become wary of coordinated disinformation campaigns. These hidden activities aim to deteriorate the public’s trust in electoral institutions or the government’s legitimacy, and can exacerbate political polarization. But unfortunately, academic and public debates on the topic are haunted by conceptual ambiguities, and rely on few memorable examples, epitomized by the often cited “social bots” who are accused of having tried to influence public opinion in various contemporary political events. In this paper, we examine “political astroturfing,” a particular form of centrally coordinated disinformation campaigns in which participants pretend to be ordinary citizens acting independently (Kovic, Rauchfleisch, Sele, & Caspar, 2018). While the accounts associated with a disinformation campaign may not necessarily spread incorrect information, they deceive the audience about the identity and motivation of the person manning the account. And even though social bots have been in the focus of most academic research (Ferrara, Varol, Davis, Menczer, & Flammini, 2016; Stella, Ferrara, & De Domenico, 2018), seemingly automated accounts make up only a small part – if any – of most astroturfing campaigns. For instigators of astroturfing, relying exclusively on social bots is not a promising strategy, as humans are good at detecting low-quality information (Pennycook & Rand, 2019). On the other hand, many bots detected by state-of-the-art social bot detection methods are not part of an astroturfing campaign, but unrelated non-political spam bots. -

Argumentative Euphemisms, Political Correctness and Relevance

Argumentative Euphemisms, Political Correctness and Relevance Thèse présentée à la Faculté des lettres et sciences humaines Institut des sciences du langage et de la communication Université de Neuchâtel Pour l'obtention du grade de Docteur ès Lettres Par Andriy Sytnyk Directeur de thèse: Professeur Louis de Saussure, Université de Neuchâtel Rapporteurs: Dr. Christopher Hart, Senior Lecturer, Lancaster University Dr. Steve Oswald, Chargé de cours, Université de Fribourg Dr. Manuel Padilla Cruz, Professeur, Universidad de Sevilla Thèse soutenue le 17 septembre 2014 Université de Neuchâtel 2014 2 Key words: euphemisms, political correctness, taboo, connotations, Relevance Theory, neo-Gricean pragmatics Argumentative Euphemisms, Political Correctness and Relevance Abstract The account presented in the thesis combines insights from relevance-theoretic (Sperber and Wilson 1995) and neo-Gricean (Levinson 2000) pragmatics in arguing that a specific euphemistic effect is derived whenever it is mutually manifest to participants of a communicative exchange that a speaker is trying to be indirect by avoiding some dispreferred saliently unexpressed alternative lexical unit(s). This effect is derived when the indirectness is not conventionally associated with the particular linguistic form-trigger relative to some context of use and, therefore, stands out as marked in discourse. The central theoretical claim of the thesis is that the cognitive processing of utterances containing novel euphemistic/politically correct locutions involves meta-representations of saliently unexpressed dispreferred alternatives, as part of relevance-driven recognition of speaker intentions. It is argued that hearers are “invited” to infer the salient dispreferred alternatives in the process of deriving explicatures of utterances containing lexical units triggering euphemistic/politically correct interpretations. -

“The Future of Free Speech, Trolls, Anonymity and Fake News Online.” Pew Research Center, March 2017

NUMBERS, FACTS AND TRENDS SHAPING THE WORLD FOR RELEASE MARCH 29, 2017 BY Lee Rainie, Janna Anderson, and Jonathan Albright FOR MEDIA OR OTHER INQUIRIES: Lee Rainie, Director, Internet, Science and Technology research Prof. Janna Anderson, Director, Elon University’s Imagining the Internet Center Asst. Prof. Jonathan Albright, Elon University Dana Page, Senior Communications Manager 202.419.4372 www.pewresearch.org RECOMMENDED CITATION: Rainie, Lee, Janna Anderson and Jonathan Albright. “The Future of Free Speech, Trolls, Anonymity and Fake News Online.” Pew Research Center, March 2017. Available at: http://www.pewinternet.org/2017/03/29/the-future-of-free-speech-trolls-anonymity-and-fake-news-online/ PEW RESEARCH CENTER About Pew Research Center Pew Research Center is a nonpartisan fact tank that informs the public about the issues, attitudes and trends shaping America and the world. It does not take policy positions. The Center conducts public opinion polling, demographic research, content analysis and other data-driven social science research. It studies U.S. politics and policy; journalism and media; internet, science, and technology; religion and public life; Hispanic trends; global attitudes and trends; and U.S. social and demographic trends. All of the Center’s reports are available at www.pewresearch.org. Pew Research Center is a subsidiary of The Pew Charitable Trusts, its primary funder. For this project, Pew Research Center worked with Elon University’s Imagining the Internet Center, which helped conceive the research as well as collect and analyze the data. © Pew Research Center 2017 The Future of Free Speech, Trolls, Anonymity and Fake News Online The internet supports a global ecosystem of social interaction. -

Political Rhetoric and Minority Health: Introducing the Rhetoric-Policy-Health Paradigm

Saint Louis University Journal of Health Law & Policy Volume 12 Issue 1 Public Health Law in the Era of Alternative Facts, Isolationism, and the One Percent Article 7 2018 Political Rhetoric and Minority Health: Introducing the Rhetoric-Policy-Health Paradigm Kimberly Cogdell Grainger North Carolina Central University Follow this and additional works at: https://scholarship.law.slu.edu/jhlp Part of the Health Law and Policy Commons Recommended Citation Kimberly C. Grainger, Political Rhetoric and Minority Health: Introducing the Rhetoric-Policy-Health Paradigm, 12 St. Louis U. J. Health L. & Pol'y (2018). Available at: https://scholarship.law.slu.edu/jhlp/vol12/iss1/7 This Symposium Article is brought to you for free and open access by Scholarship Commons. It has been accepted for inclusion in Saint Louis University Journal of Health Law & Policy by an authorized editor of Scholarship Commons. For more information, please contact Susie Lee. SAINT LOUIS UNIVERSITY SCHOOL OF LAW POLITICAL RHETORIC AND MINORITY HEALTH: INTRODUCING THE RHETORIC-POLICY-HEALTH PARADIGM KIMBERLY COGDELL GRAINGER* ABSTRACT Rhetoric is a persuasive device that has been studied for centuries by philosophers, thinkers, and teachers. In the political sphere of the Trump era, the bombastic, social media driven dissemination of rhetoric creates the perfect space to increase its effect. Today, there are clear examples of how rhetoric influences policy. This Article explores the link between divisive political rhetoric and policies that negatively affect minority health in the U.S. The rhetoric-policy-health (RPH) paradigm illustrates the connection between rhetoric and health. Existing public health policy research related to Health in All Policies and the social determinants of health combined with rhetorical persuasive tools create the foundation for the paradigm. -

Digital Populism: Trolls and Political Polarization of Twitter in Turkey

International Journal of Communication 11(2017), 4093–4117 1932–8036/20170005 Digital Populism: Trolls and Political Polarization of Twitter in Turkey ERGİN BULUT Koç University, Turkey ERDEM YÖRÜK Koç University, Turkey University of Oxford, UK This article analyzes political trolling in Turkey through the lens of mediated populism. Twitter trolling in Turkey has diverged from its original uses (i.e., poking fun, flaming, etc.) toward government-led polarization and right-wing populism. Failing to develop an effective strategy to mobilize online masses, Turkey’s ruling Justice and Development Party (JDP/AKP) relied on the polarizing performances of a large progovernment troll army. Trolls deploy three features of JDP’s populism: serving the people, fetish of the will of the people, and demonization. Whereas trolls traditionally target and mock institutions, Turkey’s political trolls act on behalf of the establishment. They produce a digital culture of lynching and censorship. Trolls’ language also impacts pro-JDP journalists who act like trolls and attack journalists, academics, and artists critical of the government. Keywords: trolls, mediated populism, Turkey, political polarization, Twitter Turkish media has undergone a transformation during the uninterrupted tenure of the ruling Justice and Development Party (JDP) since 2002. Not supported by the mainstream media when it first came to power, JDP created its own media army and transformed the mainstream media’s ideological composition. What has, however, destabilized the entire media environment was the Gezi Park protests of summer 2013.1 Activists’ use of social media not only facilitated political organizing, but also turned the news environment upside down. Having recognized that the mainstream media was not trustworthy, oppositional groups migrated to social media for organizing and producing content. -

How the Chinese Government Fabricates Social Media Posts

American Political Science Review (2017) 111, 3, 484–501 doi:10.1017/S0003055417000144 © American Political Science Association 2017 How the Chinese Government Fabricates Social Media Posts for Strategic Distraction, Not Engaged Argument GARY KING Harvard University JENNIFER PAN Stanford University MARGARET E. ROBERTS University of California, San Diego The Chinese government has long been suspected of hiring as many as 2 million people to surreptitiously insert huge numbers of pseudonymous and other deceptive writings into the stream of real social media posts, as if they were the genuine opinions of ordinary people. Many academics, and most journalists and activists, claim that these so-called 50c party posts vociferously argue for the government’s side in political and policy debates. As we show, this is also true of most posts openly accused on social media of being 50c. Yet almost no systematic empirical evidence exists for this claim or, more importantly, for the Chinese regime’s strategic objective in pursuing this activity. In the first large-scale empirical analysis of this operation, we show how to identify the secretive authors of these posts, the posts written by them, and their content. We estimate that the government fabricates and posts about 448 million social media comments a year. In contrast to prior claims, we show that the Chinese regime’s strategy is to avoid arguing with skeptics of the party and the government, and to not even discuss controversial issues. We show that the goal of this massive secretive operation is instead to distract the public and change the subject, as most of these posts involve cheerleading for China, the revolutionary history of the Communist Party, or other symbols of the regime. -

Digital Microtargeting Political Party Innovation Primer 1 Digital Microtargeting

Digital Microtargeting Political Party Innovation Primer 1 International Institute for Democracy and Electoral Assistance © 2018 International Institute for Democracy and Electoral Assistance International IDEA publications are independent of specific national or political interests. Views expressed in this publication do not necessarily represent the views of International IDEA, its Board or its Council members. The electronic version of this publication is available under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 (CC BY-NC-SA 3.0) licence. You are free to copy, distribute and transmit the publication as well as to remix and adapt it, provided it is only for non-commercial purposes, that you appropriately attribute the publication, and that you distribute it under an identical licence. For more information visit the Creative Commons website: <http://creativecommons.org/licenses/by-nc-sa/3.0/>. International IDEA Strömsborg SE–103 34 Stockholm Sweden Telephone: +46 8 698 37 00 Website: <http://www.idea.int> Design and layout: International IDEA Cover illustration: © 123RF, <http://www.123rf.com> ISBN: 978-91-7671-176-7 Created with Booktype: <https://www.booktype.pro> International IDEA Contents 1. Introduction 2. What is the issue? The rationale of digital microtargeting 3. Perspectives on digital -

Read All About It! Understanding the Role of Media in Economic Development

KYKLOS, Vol. 57 – 2004 – Fasc. 1, 21–44 Read All About It! Understanding the Role of Media in Economic Development Christopher J. Coyne and Peter T. Leeson* I. INTRODUCTION The question of what factors lead to economic development has been at the center of economics for over two centuries. Adam Smith, writing in 1776, attempted to determine the factors that led to the wealth of nations. He concluded that low taxes, peace and a fair administration of justice would lead to economic growth (1776: xliii). Despite the straightforward prescription put forth by Smith, many countries have struggled to achieve the goal of economic prosperity. One can find many examples – Armenia, Bulgaria, Moldova, Romania and Ukraine to name a few – where policies aimed at economic development have either not been effectively implemented or have failed. If the key to economic development is as simple as the principles outlined by Smith, then why do we see many countries struggling to achieve it? The development process involves working within the given political and economic order to adopt policies that bring about economic growth. Given that political agents are critical to the process, the development of market institutions that facilitate economic growth is therefore a problem in ‘public choice’. There have been many explanations for the failure of certain economies to develop. A lack of investment in capital, foreign financial aid (Easterly 2001: 26–45), culture (Lal 1998) and geographic location (Gallup et al. 1998) have all been postulated as potential explanations for the failure of economies to develop.