Fake News Outbreak 2021: Can We Stop the Viral Spread?

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


She Is Not a Criminal

SHE IS NOT A CRIMINAL: THE IMPACT OF IRELAND’S ABORTION LAW Amnesty International is a global movement of more than 7 million people who campaign for a world where human rights are enjoyed by all. Our vision is for every person to enjoy all the rights enshrined in the Universal Declaration of Human Rights and other international human rights standards. We are independent of any government, political ideology, economic interest or religion and are funded mainly by our membership and public donations. First published in 2015 by Amnesty International Ltd, Peter Benenson House, 1 Easton Street, London WC1X 0DW, United Kingdom. © Amnesty International 2015. Index: EUR 29/1597/2015. Original language: English. Printed by Amnesty International, International Secretariat, United Kingdom. All rights reserved. This publication is copyright, but may be reproduced by any method without fee for advocacy, campaigning and teaching purposes, but not for resale. The copyright holders request that all such use be registered with them for impact assessment purposes. For copying in any other circumstances, or for reuse in other publications, or for translation or adaptation, prior written permission must be obtained from the publishers, and a fee may be payable. To request permission, or for any other inquiries, please contact [email protected] Cover photo: Stock image: Female patient sitting on a hospital bed. © Corbis amnesty.org CONTENTS 1. Executive summary (p. 6)

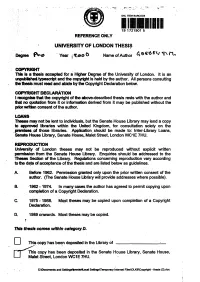

This Thesis Comes Within Category D

SHL ITEM BARCODE 19 1721901 5 REFERENCE ONLY UNIVERSITY OF LONDON THESIS Degree Year Name of Author COPYRIGHT This is a thesis accepted for a Higher Degree of the University of London. It is an unpublished typescript and the copyright is held by the author. All persons consulting the thesis must read and abide by the Copyright Declaration below. COPYRIGHT DECLARATION I recognise that the copyright of the above-described thesis rests with the author and that no quotation from it or information derived from it may be published without the prior written consent of the author. LOANS Theses may not be lent to individuals, but the Senate House Library may lend a copy to approved libraries within the United Kingdom, for consultation solely on the premises of those libraries. Application should be made to: Inter-Library Loans, Senate House Library, Senate House, Malet Street, London WC1E 7HU. REPRODUCTION University of London theses may not be reproduced without explicit written permission from the Senate House Library. Enquiries should be addressed to the Theses Section of the Library. Regulations concerning reproduction vary according to the date of acceptance of the thesis and are listed below as guidelines. A. Before 1962. Permission granted only upon the prior written consent of the author. (The Senate House Library will provide addresses where possible.) B. 1962-1974. In many cases the author has agreed to permit copying upon completion of a Copyright Declaration. C. 1975-1988. Most theses may be copied upon completion of a Copyright Declaration. D. 1989 onwards. Most theses may be copied.

Tutorial Blogspot Plus Blogger Templates

Tutorial Blogspot Plus Blogger Templates To Bloggers Everywhere 1 2 Contents: Contact Us 25; How to sign up for Gmail 25; How to sign up for Blogger for the first time 27; How to log in to Blogger for the first time 28; Blogger control panel (dashboard) 29; How to post on Blogger 30; Settings page (basic menu) 31; Lots of malware found by Google 32; Google! A frightening break-in machine 32; Web Proxy (Anonymous) 33; Complete list of Google addresses 34; Google: display by link 37; Oom - Winner of the VB6 source code programming contest (www.planet-sourc... 38; Oom - Keyboard Diagnostic 2002 (VB6 - Open Source) 39; Oom - Access Siemens GSM CellPhone With Full AT+Command (VB6 - Ope... 40; Oom - How to know speed form access (VB6) 40; Bloggers are thirsty for blog links 41; Nice blog names that go to waste, and whether it is proper for a blog name to be dipe... 41; Automating IP and MAC address firewalling with a bash script 43; Firewalling IP and MAC addresses with iptables 44; Minimizing Denial of Service attacks on Win Y2K/XP 47; So tired today.. 48; 3 Registering a blog with search engines 48; Ethics and methods of blog promotion 49; Blogger posting and text editing tool 52; Blog Settings: Publishing tab 53; WordPress plugins for Google AdSense 54; Google launches its newest META tag, “unavailable after” 54; Blog Settings: Formatting tab 55; Tracking keyword position on Yahoo 56; Finding out your page ranking and keyword position on S... 56; Beautify a programmer's blog page with "New Code Scrolling Ticke... 57; Top 20 Visual Basic sites 58; BEST BUY: 11 CDs of full source code for programmers 60; Tips for starting a blog for beginners 62; Lijit: an alternative search for bloggers 62; Participating in the "17 Agustus Indonesia MERDEKA" blog 63; Blog traffic is decent, so why are there still so few comments? 64; Blogger's compose post editor turns out not to be "wysiwyg" 65; Google is against buying and selling links 65; Blogger tip: CSS validation using the "JavaScript Console" in Fl.. 65

Argumentative Euphemisms, Political Correctness and Relevance

Argumentative Euphemisms, Political Correctness and Relevance. Thesis presented to the Faculté des lettres et sciences humaines, Institut des sciences du langage et de la communication, Université de Neuchâtel, for the degree of Docteur ès Lettres, by Andriy Sytnyk. Thesis supervisor: Professor Louis de Saussure, Université de Neuchâtel. Examiners: Dr. Christopher Hart, Senior Lecturer, Lancaster University; Dr. Steve Oswald, Lecturer (Chargé de cours), Université de Fribourg; Dr. Manuel Padilla Cruz, Professor, Universidad de Sevilla. Thesis defended on 17 September 2014. Université de Neuchâtel, 2014. Key words: euphemisms, political correctness, taboo, connotations, Relevance Theory, neo-Gricean pragmatics. Abstract: The account presented in the thesis combines insights from relevance-theoretic (Sperber and Wilson 1995) and neo-Gricean (Levinson 2000) pragmatics in arguing that a specific euphemistic effect is derived whenever it is mutually manifest to participants of a communicative exchange that a speaker is trying to be indirect by avoiding some dispreferred saliently unexpressed alternative lexical unit(s). This effect is derived when the indirectness is not conventionally associated with the particular linguistic form-trigger relative to some context of use and, therefore, stands out as marked in discourse. The central theoretical claim of the thesis is that the cognitive processing of utterances containing novel euphemistic/politically correct locutions involves meta-representations of saliently unexpressed dispreferred alternatives, as part of relevance-driven recognition of speaker intentions. It is argued that hearers are “invited” to infer the salient dispreferred alternatives in the process of deriving explicatures of utterances containing lexical units triggering euphemistic/politically correct interpretations.

Unconscionability 2.0 and the IP Boilerplate: A Revised Doctrine of Unconscionability for the Information

UNCONSCIONABILITY 2.0 AND THE IP BOILERPLATE: A REVISED DOCTRINE OF UNCONSCIONABILITY FOR THE INFORMATION AGE Amit Elazari Bar On† ABSTRACT In the information age, where fewer goods and more innovations are produced, intellectual property law has become the most crucial governing system. Yet, rather than evolving to fit its purpose, it has seemingly devolved: standard form contracts, governing countless creations, have formed an alternative de facto intellectual property regime. The law governing the information society is often prescribed not by legislators or courts, but rather by private entities, using technology and contracts to regulate much of the creative discourse. The same phenomena persist in other emerging areas of information law, such as data protection and cybersecurity laws. This Article offers a new analytical perspective on private ordering in intellectual property (IP) focusing on the rise of IP boilerplate, the standard form contracts that regulate innovations and creations. It distinguishes between contracts drafted by the initial owners of the IP (such as End-User-License-Agreements (EULAs)) and contracts drafted by nonowners (such as platforms’ terms of use), and highlights the ascendancy of the latter in the user-generated content era. In this era, the drafter of the contract owns nothing, yet seeks to regulate the layman adherent’s creations, and sometimes even to redefine the contours of the public domain. Private ordering is expanding its governing role in IP, creating new problems and undermining the rights that legislators bestow on creators and users. While scholars often discuss the problems caused by IP boilerplate, solutions are left wanting. Inter-doctrinal solutions have been unjustly overlooked.

Terrorism & Internet Governance

Terrorism & Internet Governance: Core Issues Maura Conway, School of Law & Government, Dublin City University, Glasnevin, Dublin 9, Ireland [email protected] Introduction Both global governance and the sub-set of issues that may be termed ‘internet governance’ are vast and complex issue areas. The difficulties of trying to ‘legislate’ at the global level – efforts that must encompass the economic, cultural, developmental, legal, and political concerns of diverse states and other stakeholders – are further complicated by the technological conundrums encountered in cyberspace. The unleashing of the so-called ‘Global War on Terrorism’ (GWOT) complicates things yet further. Today, both sub-state and non-state actors are said to be harnessing – or preparing to harness – the power of the internet to harass and attack their foes. Clearly, international terrorism had already been a significant security issue prior to 11 September 2001 (hereafter ‘9/11’) and the emergence of the internet in the decade before. Together, however, the events of 9/11 and advancements in ICTs have added new dimensions to the problem. In newspapers and magazines, in film and on television, and in research and analysis, ‘cyber-terrorism’ has become a buzzword. Since the events of 9/11, the question on everybody’s lips appears to be ‘is cyber-terrorism next?’ It is generally agreed that the potential for a ‘digital 9/11’ in the near future is not great. This does not mean that IR scholars may continue to ignore the transformative powers of the internet. This paper explores the difficulties of internet governance in the light of terrorists’ increasing use of the medium.

Covert Networks: A Comparative Study of

COVERT NETWORKS: A COMPARATIVE STUDY OF INTELLIGENCE TECHNIQUES USED BY FOREIGN INTELLIGENCE AGENCIES TO WEAPONIZE SOCIAL MEDIA by Sarah Ogar. A thesis submitted to Johns Hopkins University in conformity with the requirements for the degree of Master of Arts. Baltimore, Maryland, December 2019. © 2019 Sarah Ogar. All Rights Reserved. Abstract From the Bolshevik Revolution to the Brexit Vote, the covert world of intelligence has attempted to influence global events with varying degrees of success. In 2016, one of the most brazen manifestations of Russian intelligence operations was directed against millions of Americans when they voted to elect a new president. Although this was not the first time that Russia attempted to influence an American presidential election, it was undoubtedly the largest attempt in terms of its scope and the most publicized to date. Although much discussion has followed the 2016 election, there has not been much concerted historical analysis which situates the events of 2016 within the global timeline of foreign intelligence collection. This paper argues that the onset of social media has altered intelligence collection in terms of its form, but not in terms of its essence. Using the case study method, this paper illustrates how three different nations apply classical intelligence techniques to the modern environment of social media. This paper examines how China has utilized classical agent recruitment techniques through sites like LinkedIn, how Iran has used classical honey trap techniques through a combination of social media sites, and how Russia has employed the classical tactics of kompromat, forgery, agents of influence and front groups in its modern covert influence campaigns.

End Smear Campaign Against Bishop Lisboa

First UA: 132/20 Index: AFR 41/2914/2020 Mozambique Date: 26 August 2020 URGENT ACTION END SMEAR CAMPAIGN AGAINST BISHOP LISBOA Bishop Don Luis Fernando Lisboa of Pemba city, in Northern Mozambique, has been the subject of an ongoing smear campaign to undermine and delegitimize his vital human rights work in the province of Cabo Delgado. President Nyusi, and government affiliates, have directly and indirectly singled out Bishop Lisboa in their critique of dissidents. The authorities must ensure a safe and enabling environment for Bishop Lisboa to continue his human rights work without fear of intimidation, harassment and any reprisals. TAKE ACTION: WRITE AN APPEAL IN YOUR OWN WORDS OR USE THIS MODEL LETTER President Filipe Jacinto Nyusi, President of the Republic of Mozambique. Address: Avenida Julius Nyerere, PABX 2000 Maputo – Mozambique. Honourable President Filipe Nyusi, I am writing to you concerning the ongoing smear campaign against human rights defender (HRD), Bishop Don Luis Fernando Lisboa, of Pemba city. On 14 August, in a press conference you gave in Pemba city, the capital of the Cabo Delgado province, you lamented those ‘foreigners’, who freely choose to live in Mozambique, of using human rights to disrespect the sacrifice of those who keep this young homeland. This statement triggered an onslaught of attacks on social media against Bishop Lisboa and his human rights work, with many users accusing Bishop Lisboa of associating with terrorists and insurgents. Furthermore, on 16 August, Egidio Vaz, a well-known government affiliate, referred to Bishop Lisboa on his social media platform as “a criminal [who] should be expelled from Mozambique”.

Diversion Tactics

Diversion Tactics: Understanding Maladaptive Behaviors in Relationships. Toxic people often engage in maladaptive behaviors in relationships that ultimately exploit, demean and hurt their intimate partners, family members and friends. They use a plethora of diversionary tactics that distort the reality of their victims and deflect responsibility. Abusive people may employ these tactics to an excessive extent in an effort to escape accountability for their actions. Here are 20 diversionary tactics toxic people use to silence and degrade you. Gaslighting: Gaslighting is a manipulative tactic that can be described in different variations of three words: “That didn’t happen,” “You imagined it,” and “Are you crazy?” Gaslighting is perhaps one of the most insidious manipulative tactics out there because it works to distort and erode your sense of reality; it eats away at your ability to trust yourself and inevitably disables you from feeling justified in calling out abuse and mistreatment. When someone gaslights you, you may be prone to gaslighting yourself as a way to reconcile the cognitive dissonance that might arise. Two conflicting beliefs battle it out: is this person right or can I trust what I experienced? A manipulative person will convince you that the former is an inevitable truth while the latter is a sign of dysfunction on your end.

Page Proof Instructions and Queries

View metadata, citation and similar papers at core.ac.uk. Brought to you by CORE. Provided by The IT University of Copenhagen's Repository. Page Proof Instructions and Queries. Journal Title: Current Sociology 837536CSI. Article Number: 837536. Thank you for choosing to publish with us. This is your final opportunity to ensure your article will be accurate at publication. Please review your proof carefully and respond to the queries using the circled tools in the image below, which are available by clicking “Comment” from the right-side menu in Adobe Reader DC.* Please use only the tools circled in the image, as edits via other tools/methods can be lost during file conversion. For comments, questions, or formatting requests, please use . Please do not use comment bubbles/sticky notes. *If you do not see these tools, please ensure you have opened this file with Adobe Reader DC, available for free at https://get.adobe.com/reader or by going to Help > Check for Updates within other versions of Reader. For more detailed instructions, please see https://us.sagepub.com/ReaderXProofs. No. Query: No queries. Please note, only ORCID iDs validated prior to acceptance will be authorized for publication; we are unable to add or amend ORCID iDs at this stage. Please confirm that all author information, including names, affiliations, sequence, and contact details, is correct. Please review the entire document for typographical errors, mathematical errors, and any other necessary corrections; check headings, tables, and figures. Please ensure that you have obtained and enclosed all necessary permissions for the reproduction of art works (e.g.

'Krym Nash': an Analysis of Modern Russian Deception Warfare

‘Krym Nash’: An Analysis of Modern Russian Deception Warfare. ‘Crimea is ours’: An analysis of the contemporary Russian way of warfare, interference through deception (with a summary in Dutch). Dissertation to obtain the degree of doctor at Universiteit Utrecht, on the authority of the rector magnificus, Prof. Dr. H.R.B.M. Kummeling, pursuant to the decision of the Doctorate Board, to be defended in public on Wednesday 16 December 2020 at 12:45 p.m. by Albert Johan Hendrik Bouwmeester, born on 25 May 1962 in Enschede. Supervisors: Prof. Dr. B.G.J. de Graaff, Prof. Dr. P.A.L. Ducheine. This dissertation was made possible in part by financial support from the Ministry of Defence. Table of contents: Table of contents (p. iii); List of abbreviations (p. vii); Abbreviations and Acronyms (p. vii); Country codes (p. ix); American State Codes (p. ix); List of figures ...

"Founding Fathers" in American History Dissertations

EVOLVING OUR HEROES: AN ANALYSIS OF FOUNDERS AND "FOUNDING FATHERS" IN AMERICAN HISTORY DISSERTATIONS John M. Stawicki. A Thesis Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of MASTER OF ARTS, December 2019. Committee: Andrew Schocket, Advisor; Ruth Herndon; Scott Martin. © 2019 John Stawicki. All Rights Reserved. ABSTRACT Andrew Schocket, Advisor. This thesis studies scholarly memory of the American founders and “Founding Fathers” via inclusion in American dissertations. Using eighty-one semi-randomly and diversely selected founders as case subjects to examine and trace how individual, group, and collective founder interest evolved over time, this thesis uniquely analyzes 20th and 21st Century Revolutionary American scholarship on the founders by dividing it into five distinct periods, with the most recent period coinciding with “founders chic.” Using data analysis and topic modeling, this thesis engages three primary historiographic questions: What founders are most prevalent in Revolutionary scholarship? Are social, cultural, and “from below” histories increasing? And if said histories are increasing, are the “New Founders,” individuals only recently considered vital to the era, posited by these histories outnumbering the Top Seven Founders (George Washington, Thomas Jefferson, John Adams, James Madison, Alexander Hamilton, Benjamin Franklin, and Thomas Paine) in founder scholarship? The thesis concludes that the Top Seven Founders have always dominated founder dissertation scholarship, that social, cultural, and “from below” histories are increasing, and that social categorical and “New Founder” histories are steadily increasing as Top Seven Founder studies are slowly decreasing, trends that may shift the Revolutionary America field away from the Top Seven Founders in future years, but are not yet significantly doing so.