How Does Sound Affect User Experience When Handling Digital Fabrics Using a Touchscreen Device?

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Buckram Is a Heavy-Duty Bookbinding Cloth That Offers a Distinct, Woven Texture

BUCKRAM

BOODLE BOOKS

Buckram is a heavy-duty bookbinding cloth that offers a distinct, woven texture. Our two lines, Conservation and English, are formulated with a matte finish in a variety of well-saturated colors. Both are stain resistant and washable. Your logo can be foil or blind debossed or silk screened.

PRICING

Prices are per book, nonpadded with square corners. Includes Summit Leatherette or Arrestox B lining. See options below.

SINGLE PANEL (1 view)

| Part No. | Sheet size | 25 | 50 | 100 |
|---|---|---|---|---|
| 7001C-BUC | 8.5 x 5.5 | 10.95 | 9.80 | 8.60 |
| 7001D-BUC | 11 x 4.25 | 10.45 | 9.30 | 8.10 |
| 7001E-BUC | 11 x 8.5 | 13.25 | 12.10 | 10.90 |
| 7001F-BUC | 14 x 4.25 | 11.55 | 10.40 | 9.20 |
| 7001G-BUC | 14 x 8.5 | 14.40 | 13.25 | 12.10 |
| 7001H-BUC | 11 x 5.5 | 11.65 | 10.50 | 9.30 |
| 7001I-BUC | 14 x 5.5 | 12.75 | 11.60 | 10.40 |
| 7001J-BUC | 17 x 11 | 19.65 | 18.50 | 17.30 |

SINGLE PANEL, DOUBLE-SIDED (2 views)

| Part No. | Sheet size | 25 | 50 | 100 |
|---|---|---|---|---|
| 7001C/2-BUC | 8.5 x 5.5 | 10.95 | 9.80 | 8.60 |
| 7001D/2-BUC | 11 x 4.25 | 10.45 | 9.30 | 8.10 |
| 7001E/2-BUC | 11 x 8.5 | 13.25 | 12.10 | 10.90 |
| 7001F/2-BUC | 14 x 4.25 | 11.55 | 10.40 | 9.20 |
| 7001G/2-BUC | 14 x 8.5 | 14.40 | 13.25 | 12.10 |
| 7001H/2-BUC | 11 x 5.5 | 11.65 | 10.50 | 9.30 |
| 7001I/2-BUC | 14 x 5.5 | 12.75 | 11.60 | 10.40 |
| 7001J/2-BUC | 17 x 11 | 19.65 | 18.50 | 17.30 |

DOUBLE PANEL (2 views)

| Part No. | Sheet size | 25 | 50 | 100 |
|---|---|---|---|---|
| 7002C-BUC | 8.5 x 5.5 | 18.50 | 17.20 | 15.80 |
| 7002D-BUC | 11 x 4.25 | 19.15 | 17.80 | 16.45 |
| 7002E-BUC | 11 x 8.5 | 23.30 | 21.80 | 20.40 |
| 7002F-BUC | | | | |

Conservation Buckram: strong, thick poly-cotton with subtle linen look.

Colors: red (CBU-RED), maroon (CBU-MAR), green (CBU-GRN), army green (CBU-AGR), royal (CBU-ROY), navy (CBU-NAV), rust (CBU-RUS), medium grey (CBU-MGY), tan (CBU-TAN), brown (CBU-BRO), black (CBU-BLK). Pictured: Royal Conservation Buckram (CBU-ROY).


IS 1102 (1968): Handloom Buckram Cloth [TXD 8: Handloom and Khadi]

Internet Standard

Disclosure to Promote the Right To Information

Whereas the Parliament of India has set out to provide a practical regime of right to information for citizens to secure access to information under the control of public authorities, in order to promote transparency and accountability in the working of every public authority, and whereas the attached publication of the Bureau of Indian Standards is of particular interest to the public, particularly disadvantaged communities and those engaged in the pursuit of education and knowledge, the attached public safety standard is made available to promote the timely dissemination of this information in an accurate manner to the public.

“The Right to Information, The Right to Live” (Mazdoor Kisan Shakti Sangathan)

“Step Out From the Old to the New” (Jawaharlal Nehru)

IS 1102 (1968): Handloom Buckram Cloth [TXD 8: Handloom and Khadi]

“Invent a New India Using Knowledge” (Satyanarayan Gangaram Pitroda)

“Knowledge is such a treasure which cannot be stolen” (Bhartṛhari, Nītiśatakam)

IS : 1102 - 1968

Indian Standard SPECIFICATION FOR HANDLOOM BUCKRAM CLOTH (First Revision)

Handloom and Khadi Sectional Committee, TDC 13

| | Representing |
|---|---|
| Chairman: SHRI C. S. RAMANATHAN | Textiles Committee, Bombay |
| Members: SHRI DINA NATH AGARWAL | The Ludhiana Textile Board, Ludhiana |
| SHRI GIRDHARILAL LOOMBA (Alternate) | |
| SHRI T. BALAKRISHNAN | In personal capacity (C/o National Stores, Cannanore) |
| BUSINESS MANAGER | The Andhra Handloom Weavers’ Co-operative Society Ltd, Vijayawada |
| BUSINESS MANAGER | The Tamil Nadu (Madras State) Handloom Weavers’ Co-operative Society Ltd, Madras |
| SHRI A. | |

2013 B. Tech. Textile Chemistry Programme Objectives

ANNA UNIVERSITY, CHENNAI AFFILIATED INSTITUTIONS R – 2013 B. TECH. TEXTILE CHEMISTRY PROGRAMME OBJECTIVES: Prepare the students to demonstrate technical competence in their profession by applying knowledge of basic and contemporary science, engineering and experimentation skills for identifying manufacturing problems and providing practical and innovative solutions. Prepare the students to understand the professional and ethical responsibilities in the local and global context and hence utilize their knowledge and skills for the benefit of the society. Enable the students to work successfully in a manufacturing environment and function well as a team member and also exhibit continuous improvement in their understanding of their technical specialization through self learning and the skill to apply it to further research and development. Enable the students to have sound education in selected subjects essential to develop their ability to initiate and conduct independent investigations. Develop comprehensive understanding in the area of textile chemistry through course work, practical training and independent study. PROGRAMME OUTCOMES: The students will be able to Apply knowledge of mathematics, science and engineering in textile chemical processing applications Design and conduct experiments, as well as to analyze and interpret data Design a system, component, or process to meet desired needs within realistic constraints such as economic, environmental, social, political, ethical, health and safety, manufacturability, and sustainability Function on multidisciplinary teams Identify, formulate, and solve engineering problems related to textile chemical processing Understand the professional and ethical responsibility Prepare technical documents and present effectively Use the techniques, skills, and modern engineering tools necessary for practicing in the textile chemical processing industry. 
Build high moral character

THE ARMOURER and HIS CRAFT from the XIth to the XVIth CENTURY by CHARLES FFOULKES, B.Litt.Oxon

CORNELL UNIVERSITY LIBRARY. BOUGHT WITH THE INCOME OF THE SAGE ENDOWMENT FUND GIVEN IN 1891 BY HENRY WILLIAMS SAGE.

The original of this book is in the Cornell University Library. There are no known copyright restrictions in the United States on the use of the text. http://www.archive.org/details/cu31924030681278

THE ARMOURER AND HIS CRAFT

UNIFORM WITH THIS VOLUME: PASTE, by A. Beresford Ryley

THE ARMOURER AND HIS CRAFT FROM THE XIth TO THE XVIth CENTURY, by CHARLES FFOULKES, B.Litt.Oxon. WITH SIXTY-NINE DIAGRAMS IN THE TEXT AND THIRTY-TWO PLATES. METHUEN & CO. LTD., 36 ESSEX STREET W.C., LONDON. First Published in 1912.

TO THE RIGHT HONOURABLE THE VISCOUNT DILLON, Hon. M.A. Oxon., V.P.S.A., Etc. Etc., CURATOR OF THE TOWER ARMOURIES

PREFACE

I do not propose, in this work, to consider the history or development of defensive armour, for this has been more or less fully discussed in works which deal with the subject from the historical side of the question. I have rather endeavoured to compile a work which will, in some measure, fill up a gap in the subject, by collecting all the records and references, especially in English documents, which relate to the actual making of armour and the regulations which controlled the Armourer and his Craft.

Textiles and Clothing the Macmillan Company

Historic, Archive Document. Do not assume content reflects current scientific knowledge, policies, or practices.

LIBRARY OF THE UNITED STATES DEPARTMENT OF AGRICULTURE

TEXTILES AND CLOTHING

THE MACMILLAN COMPANY: NEW YORK • BOSTON • CHICAGO • DALLAS • ATLANTA • SAN FRANCISCO. MACMILLAN & CO., Limited: LONDON • BOMBAY • CALCUTTA • MELBOURNE. THE MACMILLAN CO. OF CANADA, Ltd.: TORONTO.

TEXTILES AND CLOTHING, by ELLEN BEERS McGOWAN, B.S., INSTRUCTOR IN HOUSEHOLD ARTS, TEACHERS COLLEGE, COLUMBIA UNIVERSITY, and CHARLOTTE A. WAITE, M.A., HEAD OF DEPARTMENT OF DOMESTIC ART, JULIA RICHMAN HIGH SCHOOL, NEW YORK CITY. THE MACMILLAN COMPANY, 1919. All rights reserved.

Copyright, 1919, By the MACMILLAN company. Set up and electrotyped. Published February, 1919. J. S. Cushing Co. - Berwick & Smith Co., Norwood, Mass., U.S.A.

PREFACE

This book has been written primarily to meet a need arising from the introduction of the study of textiles into the curriculum of the high school. The aim has been, therefore, to present the subject matter in a form sufficiently simple and interesting to be grasped readily by the high school student, without sacrificing essential facts. It has not seemed desirable to explain in detail the mechanism of the various machines used in modern textile industries, but rather to show the student that the fundamental principles of textile manufacture found in the simple machines of primitive times are unchanged in the highly developed and complicated machinery of to-day. Minor emphasis has been given to certain necessarily technical paragraphs by printing these in type of a smaller size than that used for the body of the text.

A Dictionary of Men's Wear Works by Mr Baker

A Dictionary of Men's Wear

Works by Mr Baker

- A Dictionary of Men's Wear (This present book). Cloth $2.50, Half Morocco $3.50
- A Dictionary of Engraving. A handy manual for those who buy or print pictures and printing plates made by the modern processes. Small, handy volume, uncut, illustrated, decorated boards, 75c
- A Dictionary of Advertising. In preparation

A Dictionary of Men's Wear

Embracing all the terms (so far as could be gathered) used in the men's wear trades expressiv of raw and finisht products and of various stages and items of production; selling terms; trade and popular slang and cant terms; and many other things curious, pertinent and impertinent; with an appendix containing sundry useful tables; the uniforms of "ancient and honorable" independent military companies of the U. S.; charts of correct dress, livery, and so forth.

By William Henry Baker, Author of "A Dictionary of Engraving"

"A good dictionary is truly very interesting reading in spite of the man who declared that such an one changed the subject too often." - S William Beck

CLEVELAND, WILLIAM HENRY BAKER, 1908

Copyright 1908 By William Henry Baker, Cleveland O. Press of The Britton Printing Co, Cleveland.

Dedication

Conforming to custom this unconventional book is Dedicated to those most likely to be benefitted, i. e., to The 15000 or so Retail Clothiers, The 15000 or so Custom Tailors, The 1200 or so Clothing Manufacturers, The 5000 or so Woolen and Cotton Mills, The 22000

Woven Buckram Fabric (United States Patent No. 686,993)

No. 686,993. Patented Nov. 19, 1901. H. H. SHUMWAY. WOVEN BUCKRAM FABRIC. (Application filed Aug. 23, 1901.) (No Model.)

UNITED STATES PATENT OFFICE. HERBERT H. SHUMWAY, OF TAUNTON, MASSACHUSETTS. WOVEN BUCKRAM FABRIC. SPECIFICATION forming part of Letters Patent No. 686,993, dated November 19, 1901. Application filed August 23, 1901. Serial No. 72,986. (No specimens.)

To all whom it may concern:

Be it known that I, HERBERT H. SHUMWAY, a resident of the city of Taunton, in the county of Bristol and State of Massachusetts, have invented certain new and useful Improvements in Woven Buckram Fabrics; and I do hereby declare that the following is a full, clear, and exact description thereof, reference being had to the accompanying drawings, and to the letters of reference marked thereon, which form a part of this specification.

This invention consists of a new article of buckram cloth constructed in an entirely new way, having for its object to avoid the difficulties of the old construction and to produce a better article at a less cost.

... is an edge view of the woven fabric before finishing. In both figures, b b indicate the two webs, and a a are the narrow stripes where the two webs b b are united together in the process of weaving. In making the two thicknesses of cloth required for buckram in this manner they are united together in the same uniform manner throughout, thereby showing the light construction on both sides and also making sure that each thread of the upper web lies directly over a thread of the lower web, for the two threads of the two warps run through between the same dents in the reed, and the upper and lower filling or weft threads are beaten up simul...

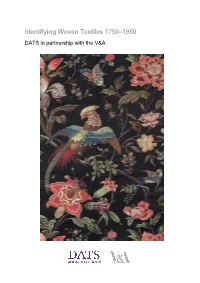

Identifying Woven Textiles 1750-1950 Identification

Identifying Woven Textiles 1750–1950

DATS in partnership with the V&A

This information pack has been produced to accompany two one-day workshops taught by Katy Wigley (Director, School of Textiles) and Mary Schoeser (Hon. V&A Senior Research Fellow), held at the V&A Clothworkers’ Centre on 19 April and 17 May 2018. The workshops are produced in collaboration between DATS and the V&A. The purpose of the workshops is to enable participants to improve the documentation and interpretation of collections and make them accessible to the widest audience. Participants will have the chance to study objects at first hand to help increase their confidence in identifying woven textile materials and techniques. This information pack is intended as a means of sharing the knowledge communicated in the workshops with colleagues and the wider public and is also intended as a stand-alone guide for basic weave identification.

Other workshops / information packs in the series:

- Identifying Textile Types and Weaves
- Identifying Printed Textiles in Dress 1740–1890
- Identifying Handmade and Machine Lace
- Identifying Fibres and Fabrics
- Identifying Handmade Lace

Front Cover: Lamy et Giraud, Brocaded silk cannetille (detail), 1878. This Lyonnais firm won a silver gilt medal at the Paris Exposition Universelle with a silk of this design, probably by Eugene Prelle, their chief designer. Its impact partly derives from the textures within the many-coloured brocaded areas and the markedly twilled cannetille ground. Courtesy Francesca Galloway.

Table of Contents

1. Introduction
2. Tips for Dating
3.

The Complete Costume Dictionary

The Complete Costume Dictionary Elizabeth J. Lewandowski The Scarecrow Press, Inc. Lanham • Toronto • Plymouth, UK 2011 Published by Scarecrow Press, Inc. A wholly owned subsidiary of The Rowman & Littlefield Publishing Group, Inc. 4501 Forbes Boulevard, Suite 200, Lanham, Maryland 20706 http://www.scarecrowpress.com Estover Road, Plymouth PL6 7PY, United Kingdom Copyright © 2011 by Elizabeth J. Lewandowski Unless otherwise noted, all illustrations created by Elizabeth and Dan Lewandowski. All rights reserved. No part of this book may be reproduced in any form or by any electronic or mechanical means, including information storage and retrieval systems, without written permission from the publisher, except by a reviewer who may quote passages in a review. British Library Cataloguing in Publication Information Available Library of Congress Cataloging-in-Publication Data Lewandowski, Elizabeth J., 1960– The complete costume dictionary / Elizabeth J. Lewandowski ; illustrations by Dan Lewandowski. p. cm. Includes bibliographical references. ISBN 978-0-8108-4004-1 (cloth : alk. paper) — ISBN 978-0-8108-7785-6 (ebook) 1. Clothing and dress—Dictionaries. I. Title. GT507.L49 2011 391.003—dc22 2010051944 ϱ ™ The paper used in this publication meets the minimum requirements of American National Standard for Information Sciences—Permanence of Paper for Printed Library Materials, ANSI/NISO Z39.48-1992. Printed in the United States of America For Dan. Without him, I would be a lesser person. It is the fate of those who toil at the lower employments of life, to be rather driven by the fear of evil, than attracted by the prospect of good; to be exposed to censure, without hope of praise; to be disgraced by miscarriage or punished for neglect, where success would have been without applause and diligence without reward. -

Download Textile Design 5 Sem Structural Fabric Design 5 032551 Dec

No. of Printed Pages : 4

Roll No. ..................

032551/2562

5th Sem. / Textile Design

Subject : Structural Fabric Design-V

Time : 3 Hrs. M.M. : 100

SECTION-A

Note: Very Short Answer type questions. Attempt any 15 parts. (15x2=30)

Q.1
a) What do you mean by weave design?
b) What is card cutting?
c) What do you mean by jacquard harness?
d) What is terry towel fabric?
e) What are Rugs?
f) What are honeycomb fabrics?
g) What are velveteen?
h) What is corduroy fabric?
i) What are leno fabrics?
j) What is sponge?
k) What is tweed fabric?
l) What do you mean by water resistant fabric?
m) What are welts?
n) What is reversible cloth?
o) What is blazer fabric?
p) What do you mean by quilts?

...

iv) Distinguish between buckram & brocade fabrics.
v) What is honey comb fabric? Discuss its features.
vi) Distinguish between velvet and velveteen fabrics.
vii) Explain some important characteristics of the upholstery fabrics.

SECTION-C

Note: Long answer type questions. Attempt any three questions. 3x10=30

Q.3 Discuss characteristics of tapestry fabrics. Classify them and draw any one tapestry design along with sectional diagram.

Q.4 Make weave effects of twill and plain weaves using suitable colour patterns in warp and weft direction.

Q.5 What is figure shading? Show the figure shading effect with the help of a suitable example.

Q.6 Draw a jacquard design with the help of a suitable motif and also give the harness calculations.

Q.7 What are upholstery fabrics? Explain various types of upholstery fabrics with their characteristics.

General Corporation Tax Allocation Percentage Report 2003

2003 General Corporation Tax Allocation Percentage Report

| Corporation | Allocation percentage |
|---|---|
| @ONCE.COM INC | .02 |
| @RADICAL.MEDIA INC | 25.08 |
| @ROAD INC | 1.47 |
| "K" LINE AIR SERVICE U.S.A. INC | 20.91 |
| A OTTAVINO PROPERTY CORP | 29.38 |
| A & A INDUSTRIAL SUPPLIES INC | 1.40 |
| A & A MAINTENANCE ENTERPRISE INC | 2.92 |
| A & D MECHANICAL INC | 64.91 |
| A & E MANAGEMENT SYSTEMS INC | 77.46 |
| A & E PRO FLOOR AND CARPET | .01 |
| A & F MUSIC LTD | 91.46 |
| A & H BECKER INC | .01 |
| A & J REFIGERATION INC | 4.09 |
| A & M BRONX BAKING INC | 2.40 |
| A & M FOOD DISTRIBUTORS INC | 93.00 |
| A & M LOGOS INTERNATIONAL INC | 81.47 |
| A & P LAUNDROMAT INC | .01 |
| A & R CATERING SERVICE INC | .01 |
| A & R ESTATE BUYERS INC | 64.87 |
| A & R MEAT PROVISIONS CORP | .01 |
| A & S BAGEL INC | .28 |
| A & S MOVING & PACKING SERVICE INC | 73.95 |
| A & S WHOLESALE JEWELRY CORP | 78.41 |
| A A A REFRIGERATION SERVICE INC | 31.56 |
| A A COOL AIR INC | 99.22 |
| A A LINE AND WIRE CORP | 70.41 |
| A A T COMMUNICATIONS CORP | 10.08 |
| A A WEINSTEIN REALTY INC | 6.67 |
| A ADLER INC | 87.27 |
| A AND A ALLIANCE MOVING INC | .01 |
| A AND J TITLE SEARCHING CO INC | .01 |
| A AND L AUTO RENTAL SERVICES INC | 1.00 |
| A AND L CESSPOOL SERVICE CORP | 96.51 |
| A AND L GENERAL CONTRACTORS INC | 2.38 |
| A AND L INDUSTRIES INC | .01 |
| A AND L PEN MANUFACTURING CORP | 53.53 |
| A AND L SEAMON INC | 4.46 |
| A AND L SHEET METAL FABRICATIONS CORP | 69.07 |
| A AND L TWIN REALTY INC | .01 |
| A AND M AUTO COLLISION INC | .01 |
| A AND M ROSENTHAL ENTERPRISES INC | 51.42 |
| A AND M SPORTS WEAR CORP | .01 |
| A AND N BUSINESS SERVICES INC | 46.82 |
| A AND N DELIVERY SERVICE INC | .01 |
| A AND N ELECTRONICS AND JEWELRY | .01 |
| A AND N INSTALLATIONS INC | .01 |
| A AND N PERSONAL TOUCH BILLING SERVICES INC | 33.00 |
| A AND P COAT APRON AND LINEN SUPPLY INC | 32.89 |
| A AND R AUTO SALES INC | 16.50 |
| A AND R GROCERY AND DELI CORP | .01 |
| A AND R MNUCHIN INC | 41.05 |
| A AND R SECURITIES CORP | 62.32 |
| A AND S FIELD SERVICES INC | .01 |
| A AND S TEXTILE INC | 45.00 |
| A AND T WAREHOUSE MANAGEMENT CORP | 88.33 |
| A AND U DELI GROCERY INC | .01 |
| A AND V CONTRACTING CORP | 10.87 |
| A AND W GEMS INC | 71.49 |
| A AND W MANUFACTURING CORP | 13.53 |
| A AND X DEVELOPMENT CORP. | |

Francisco Rico Vanessa Coley the Hat Stand Pinokpok and More... the E-Magazine for Those Who Make Hats Issue 125 August 2016 Contents

Issue 125, August 2016

Francisco Rico, Vanessa Coley, The Hat Stand, Pinokpok and more...

the e-magazine for those who make hats

Contents:

- Francisco Rico: Meet one of London's hottest new designers, maker of will.i.am's Met Gala hat.
- Hat of the Month: A veiled mini topper by Vanessa Coley.
- The Hat Stand: The Sheffield pop-up returns as a testament to the power of teamwork.
- Know Your Millinery Materials: LilyM Millinery Supplies show us how pinokpok is made.
- Letter to the Editor: Using leather in millinery.
- The Back Page: A South American hat competition and how to contact us.

Cover/Back Page: Images by Ellie Grace Photography. Hats by Imogen’s Imagination and Amanda Moon Headwear.

www.hatalk.com

FRANCISCO RICO

Francisco Rico was born and raised in Seville, Spain. After spending several years undertaking business studies and working in management and development for a number of international companies, Francisco stumbled upon the world of hats in Madrid and realised that he had ...

... move to London in 2012 to pursue his new passion: designing and producing hats. Since then, he has studied under royal milliner Rose Cory, taken classes at Central Saint Martins and completed the HND Millinery programme at Kensington and Chelsea College.

... working for him. He creates two couture hat collections a year, as well as concept collections and private commissions. He has worked on pieces for some very high profile clients, including a recent project for recording artist will.i.am.