Performance Rating Algorithms

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Regulations for the FIDE World Chess Cup 2015 1

Regulations for the FIDE World Chess Cup 2015 1. Organisation 1.1 The FIDE World Chess Cup (World Cup) is an integral part of the World Championship Cycle 2014-2016. 1.2 Governing Body: the World Chess Federation (FIDE). For the purpose of creating the regulations, communicating with the players and negotiating with the organisers, the FIDE President has nominated a committee, hereby called the FIDE Commission for World Championships and Olympiads (hereinafter referred to as WCOC) 1.3 FIDE, or its appointed commercial agency, retains all commercial and media rights of the World Chess Cup 2015, including internet rights. 1.4 Upon recommendation by the WCOC, the body responsible for any changes to these Regulations is the FIDE Presidential Board. 2. Qualifying Events for World Cup 2015 2. 1. National Chess Championships - National Chess Championships are the responsibility of the Federations who retain all rights in their internal competitions. 2. 2. Zonal Tournaments - Zonals can be organised by the Continents according to their regulations that have to be approved by the FIDE Presidential Board. 2. 3. Continental Chess Championships - The Continents, through their respective Boards and in co-operation with FIDE, shall organise Continental Chess Championships. The regulations for these events have to be approved by the FIDE Presidential Board nine months before they start if they are to be part of the qualification system of the World Chess Championship cycle. 2. 3. 1. FIDE shall guarantee a minimum grant of USD 92,000 towards the total prize fund for Continental Championships, divided among the following continents: 1. Americas 32,000 USD (minimum prize fund in total: 50,000 USD) 2. -

Opening Moves - Player Facts

DVD Chess Rules Chess puzzles Classic games Extras - Opening moves - Player facts General Rules The aim in the game of chess is to win by trapping your opponent's king. White always moves first and players take turns moving one game piece at a time. Movement is required every turn. Each type of piece has its own method of movement. A piece may be moved to another position or may capture an opponent's piece. This is done by landing on the appropriate square with the moving piece and removing the defending piece from play. With the exception of the knight, a piece may not move over or through any of the other pieces. When the board is set up it should be positioned so that the letters A-H face both players. When setting up, make sure that the white queen is positioned on a light square and the black queen is situated on a dark square. The two armies should be mirror images of one another. Pawn Movement Each player has eight pawns. They are the least powerful piece on the chess board, but may become equal to the most powerful. Pawns always move straight ahead unless they are capturing another piece. Generally pawns move only one square at a time. The exception is the first time a pawn is moved, it may move forward two squares as long as there are no obstructing pieces. A pawn cannot capture a piece directly in front of him but only one at a forward angle. When a pawn captures another piece the pawn takes that piece’s place on the board, and the captured piece is removed from play If a pawn gets all the way across the board to the opponent’s edge, it is promoted. -

Chess for Dummies‰

01_584049 ffirs.qxd 7/29/05 9:19 PM Page iii Chess FOR DUMmIES‰ 2ND EDITION by James Eade 01_584049 ffirs.qxd 7/29/05 9:19 PM Page ii 01_584049 ffirs.qxd 7/29/05 9:19 PM Page i Chess FOR DUMmIES‰ 2ND EDITION 01_584049 ffirs.qxd 7/29/05 9:19 PM Page ii 01_584049 ffirs.qxd 7/29/05 9:19 PM Page iii Chess FOR DUMmIES‰ 2ND EDITION by James Eade 01_584049 ffirs.qxd 7/29/05 9:19 PM Page iv Chess For Dummies®, 2nd Edition Published by Wiley Publishing, Inc. 111 River St. Hoboken, NJ 07030-5774 www.wiley.com Copyright © 2005 by Wiley Publishing, Inc., Indianapolis, Indiana Published simultaneously in Canada No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning, or otherwise, except as permit- ted under Sections 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923, 978-750-8400, fax 978-646-8600. Requests to the Publisher for permission should be addressed to the Legal Department, Wiley Publishing, Inc., 10475 Crosspoint Blvd., Indianapolis, IN 46256, 317-572-3447, fax 317-572-4355, or online at http://www.wiley.com/go/permissions. Trademarks: Wiley, the Wiley Publishing logo, For Dummies, the Dummies Man logo, A Reference for the Rest of Us!, The Dummies Way, Dummies Daily, The Fun and Easy Way, Dummies.com, and related trade dress are trademarks or registered trademarks of John Wiley & Sons, Inc., and/or its affiliates in the United States and other countries, and may not be used without written permission. -

Here Should That It Was Not a Cheap Check Or Spite Check Be Better Collaboration Amongst the Federations to Gain Seconds

Chess Contents Founding Editor: B.H. Wood, OBE. M.Sc † Executive Editor: Malcolm Pein Editorial....................................................................................................................4 Editors: Richard Palliser, Matt Read Malcolm Pein on the latest developments in the game Associate Editor: John Saunders Subscriptions Manager: Paul Harrington 60 Seconds with...Chris Ross.........................................................................7 Twitter: @CHESS_Magazine We catch up with Britain’s leading visually-impaired player Twitter: @TelegraphChess - Malcolm Pein The King of Wijk...................................................................................................8 Website: www.chess.co.uk Yochanan Afek watched Magnus Carlsen win Wijk for a sixth time Subscription Rates: United Kingdom A Good Start......................................................................................................18 1 year (12 issues) £49.95 Gawain Jones was pleased to begin well at Wijk aan Zee 2 year (24 issues) £89.95 3 year (36 issues) £125 How Good is Your Chess?..............................................................................20 Europe Daniel King pays tribute to the legendary Viswanathan Anand 1 year (12 issues) £60 All Tied Up on the Rock..................................................................................24 2 year (24 issues) £112.50 3 year (36 issues) £165 John Saunders had to work hard, but once again enjoyed Gibraltar USA & Canada Rocking the Rock ..............................................................................................26 -

Regulations for the FIDE World Chess Cup 2017 2. Qualifying Events for World Cup 2017

Regulations for the FIDE World Chess Cup 2017 1. Organisation 1.1 The FIDE World Chess Cup (World Cup) is an integral part of the World Championship Cycle 2016-2018. 1.2 Governing Body: the World Chess Federation (FIDE). For the purpose of creating the regulations, communicating with the players and negotiating with the organisers, the FIDE President has nominated a committee, hereby called the FIDE Commission for World Championships and Olympiads (hereinafter referred to as WCOC) 1.3 FIDE, or its appointed commercial agency, retains all commercial and media rights of the World Chess Cup 2017, including internet rights. 1.4 Upon recommendation by the WCOC, the body responsible for any changes to these Regulations is the FIDE Presidential Board. 2. Qualifying Events for World Cup 2017 2. 1. National Chess Championships - National Chess Championships are the responsibility of the Federations who retain all rights in their internal competitions. 2. 2. Zonal Tournaments - Zonals can be organised by the Continents according to their regulations that have to be approved by the FIDE Presidential Board. 2. 3. Continental Chess Championships - The Continents, through their respective Boards and in co-operation with FIDE, shall organise Continental Chess Championships. The regulations for these events have to be approved by the FIDE Presidential Board nine months before they start if they are to be part of the qualification system of the World Chess Championship cycle. 2. 3. 1. FIDE shall guarantee a minimum grant of USD 92,000 towards the total prize fund for Continental Championships, divided among the following continents: 1. Americas 32,000 USD (minimum prize fund in total: 50,000 USD) 2. -

A Feast of Chess in Time of Plague – Candidates Tournament 2020

A FEAST OF CHESS IN TIME OF PLAGUE CANDIDATES TOURNAMENT 2020 Part 1 — Yekaterinburg by Vladimir Tukmakov www.thinkerspublishing.com Managing Editor Romain Edouard Assistant Editor Daniël Vanheirzeele Translator Izyaslav Koza Proofreader Bob Holliman Graphic Artist Philippe Tonnard Cover design Mieke Mertens Typesetting i-Press ‹www.i-press.pl› First edition 2020 by Th inkers Publishing A Feast of Chess in Time of Plague. Candidates Tournament 2020. Part 1 — Yekaterinburg Copyright © 2020 Vladimir Tukmakov All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior written permission from the publisher. ISBN 978-94-9251-092-1 D/2020/13730/26 All sales or enquiries should be directed to Th inkers Publishing, 9850 Landegem, Belgium. e-mail: [email protected] website: www.thinkerspublishing.com TABLE OF CONTENTS KEY TO SYMBOLS 5 INTRODUCTION 7 PRELUDE 11 THE PLAY Round 1 21 Round 2 44 Round 3 61 Round 4 80 Round 5 94 Round 6 110 Round 7 127 Final — Round 8 141 UNEXPECTED CONCLUSION 143 INTERIM RESULTS 147 KEY TO SYMBOLS ! a good move ?a weak move !! an excellent move ?? a blunder !? an interesting move ?! a dubious move only move =equality unclear position with compensation for the sacrifi ced material White stands slightly better Black stands slightly better White has a serious advantage Black has a serious advantage +– White has a decisive advantage –+ Black has a decisive advantage with an attack with initiative with counterplay with the idea of better is worse is Nnovelty +check #mate INTRODUCTION In the middle of the last century tournament compilations were ex- tremely popular. -

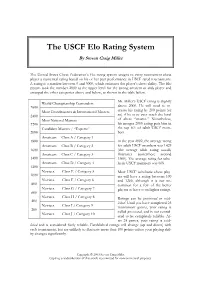

The USCF Elo Rating System by Steven Craig Miller

The USCF Elo Rating System By Steven Craig Miller The United States Chess Federation’s Elo rating system assigns to every tournament chess player a numerical rating based on his or her past performance in USCF rated tournaments. A rating is a number between 0 and 3000, which estimates the player’s chess ability. The Elo system took the number 2000 as the upper level for the strong amateur or club player and arranged the other categories above and below, as shown in the table below. Mr. Miller’s USCF rating is slightly World Championship Contenders 2600 above 2000. He will need to in- Most Grandmasters & International Masters crease his rating by 200 points (or 2400 so) if he is to ever reach the level Most National Masters of chess “master.” Nonetheless, 2200 his meager 2000 rating puts him in Candidate Masters / “Experts” the top 6% of adult USCF mem- 2000 bers. Amateurs Class A / Category 1 1800 In the year 2002, the average rating Amateurs Class B / Category 2 for adult USCF members was 1429 1600 (the average adult rating usually Amateurs Class C / Category 3 fluctuates somewhere around 1400 1500). The average rating for scho- Amateurs Class D / Category 4 lastic USCF members was 608. 1200 Novices Class E / Category 5 Most USCF scholastic chess play- 1000 ers will have a rating between 100 Novices Class F / Category 6 and 1200, although it is not un- 800 common for a few of the better Novices Class G / Category 7 players to have even higher ratings. 600 Novices Class H / Category 8 Ratings can be provisional or estab- 400 lished. -

Elo World, a Framework for Benchmarking Weak Chess Engines

Elo World, a framework for with a rating of 2000). If the true outcome (of e.g. a benchmarking weak chess tournament) doesn’t match the expected outcome, then both player’s scores are adjusted towards values engines that would have produced the expected result. Over time, scores thus become a more accurate reflection DR. TOM MURPHY VII PH.D. of players’ skill, while also allowing for players to change skill level. This system is carefully described CCS Concepts: • Evaluation methodologies → Tour- elsewhere, so we can just leave it at that. naments; • Chess → Being bad at it; The players need not be human, and in fact this can facilitate running many games and thereby getting Additional Key Words and Phrases: pawn, horse, bishop, arbitrarily accurate ratings. castle, queen, king The problem this paper addresses is that basically ACH Reference Format: all chess tournaments (whether with humans or com- Dr. Tom Murphy VII Ph.D.. 2019. Elo World, a framework puters or both) are between players who know how for benchmarking weak chess engines. 1, 1 (March 2019), to play chess, are interested in winning their games, 13 pages. https://doi.org/10.1145/nnnnnnn.nnnnnnn and have some reasonable level of skill. This makes 1 INTRODUCTION it hard to give a rating to weak players: They just lose every single game and so tend towards a rating Fiddly bits aside, it is a solved problem to maintain of −∞.1 Even if other comparatively weak players a numeric skill rating of players for some game (for existed to participate in the tournament and occasion- example chess, but also sports, e-sports, probably ally lose to the player under study, it may still be dif- also z-sports if that’s a thing). -

Rulebook Changes Since the 6Th Edition Rulebook Changes

Rulebook changes since the 6th edition Rulebook Changes Compiled by Dave Kuhns and Tim Just (2016) Since the publication of the 6th edition of the US Chess rulebook, changes have been made in US Chess policy or in the wording of the rules. Below is a collection of those changes. Any additions or corrections can be sent to US Chess. US Chess Policy Changes Please note that policy changes are different than rules changes and often occur more than once per year. Check the US Chess web page for the most current changes. Scholastic Policy Change: When taking entries for all National Scholastic Chess tournaments with ‘under’ and/or unrated sections, US Chess will require players to disclose at the time they enter the tournament whether they have one or more ratings in other over the board rating system(s). US Chess shall seriously consider, in consultation with the Scholastic Council and the Ratings Committee, using this rating information to determine section and prize eligibility in accordance with US Chess rules 28D and 28E. This policy will take effect immediately and will be in effect for all future US Chess national scholastic tournaments that have ‘under’ and/or unrated sections (new, 2014). Rationale: There are areas of the country in which many scholastic tournaments are being held that are not submitted to US Chess to be rated. As a result, some players come to the national scholastic tournaments with ratings that do not reflect their current playing strength. In order to ensure fair competition, we need to be able to use all available information about these players’ true playing strength. -

A Comparison of Rating Systems for Competitive Women's Beach Volleyball

Statistica Applicata - Italian Journal of Applied Statistics Vol. 30 (2) 233 doi.org/10.26398/IJAS.0030-010 A COMPARISON OF RATING SYSTEMS FOR COMPETITIVE WOMEN’S BEACH VOLLEYBALL Mark E. Glickman1 Department of Statistics, Harvard University, Cambridge, MA, USA Jonathan Hennessy Google, Mountain View, CA, USA Alister Bent Trillium Trading, New York, NY, USA Abstract Women’s beach volleyball became an official Olympic sport in 1996 and continues to attract the participation of amateur and professional female athletes. The most well-known ranking system for women’s beach volleyball is a non-probabilistic method used by the Fédération Internationale de Volleyball (FIVB) in which points are accumulated based on results in designated competitions. This system produces rankings which, in part, determine qualification to elite events including the Olympics. We investigated the application of several alternative probabilistic rating systems for head-to-head games as an approach to ranking women’s beach volleyball teams. These include the Elo (1978) system, the Glicko (Glickman, 1999) and Glicko-2 (Glickman, 2001) systems, and the Stephenson (Stephenson and Sonas, 2016) system, all of which have close connections to the Bradley- Terry (Bradley and Terry, 1952) model for paired comparisons. Based on the full set of FIVB volleyball competition results over the years 2007-2014, we optimized the parameters for these rating systems based on a predictive validation approach. The probabilistic rating systems produce 2014 end-of-year rankings that lack consistency with the FIVB 2014 rankings. Based on the 2014 rankings for both probabilistic and FIVB systems, we found that match results in 2015 were less predictable using the FIVB system compared to any of the probabilistic systems. -

2016 Year in Review

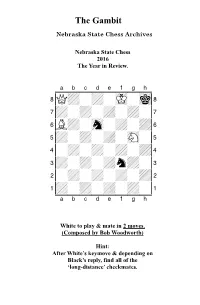

The Gambit Nebraska State Chess Archives Nebraska State Chess 2016 The Year in Review. XABCDEFGHY 8Q+-+-mK-mk( 7+-+-+-+-' 6L+-sn-+-+& 5+-+-+-sN-% 4-+-+-+-+$ 3+-+-+n+-# 2-+-+-+-+" 1+-+-+-+-! xabcdefghy White to play & mate in 2 moves. (Composed by Bob Woodworth) Hint: After White’s keymove & depending on Black’s reply, find all of the ‘long-distance’ checkmates. Gambit Editor- Kent Nelson The Gambit serves as the official publication of the Nebraska State Chess Association and is published by the Lincoln Chess Foundation. Send all games, articles, and editorial materials to: Kent Nelson 4014 “N” St Lincoln, NE 68510 [email protected] NSCA Officers President John Hartmann Treasurer Lucy Ruf Historical Archivist Bob Woodworth Secretary Gnanasekar Arputhaswamy Webmaster Kent Smotherman Regional VPs NSCA Committee Members Vice President-Lincoln- John Linscott Vice President-Omaha- Michael Gooch Vice President (Western) Letter from NSCA President John Hartmann January 2017 Hello friends! Our beloved game finds itself at something of a crossroads here in Nebraska. On the one hand, there is much to look forward to. We have a full calendar of scholastic events coming up this spring and a slew of promising juniors to steal our rating points. We have more and better adult players playing rated chess. If you’re reading this, we probably (finally) have a functional website. And after a precarious few weeks, the Spence Chess Club here in Omaha seems to have found a new home. And yet, there is also cause for concern. It’s not clear that we will be able to have tournaments at UNO in the future. -

YEARBOOK the Information in This Yearbook Is Substantially Correct and Current As of December 31, 2020

OUR HERITAGE 2020 US CHESS YEARBOOK The information in this yearbook is substantially correct and current as of December 31, 2020. For further information check the US Chess website www.uschess.org. To notify US Chess of corrections or updates, please e-mail [email protected]. U.S. CHAMPIONS 2002 Larry Christiansen • 2003 Alexander Shabalov • 2005 Hakaru WESTERN OPEN BECAME THE U.S. OPEN Nakamura • 2006 Alexander Onischuk • 2007 Alexander Shabalov • 1845-57 Charles Stanley • 1857-71 Paul Morphy • 1871-90 George H. 1939 Reuben Fine • 1940 Reuben Fine • 1941 Reuben Fine • 1942 2008 Yury Shulman • 2009 Hikaru Nakamura • 2010 Gata Kamsky • Mackenzie • 1890-91 Jackson Showalter • 1891-94 Samuel Lipchutz • Herman Steiner, Dan Yanofsky • 1943 I.A. Horowitz • 1944 Samuel 2011 Gata Kamsky • 2012 Hikaru Nakamura • 2013 Gata Kamsky • 2014 1894 Jackson Showalter • 1894-95 Albert Hodges • 1895-97 Jackson Reshevsky • 1945 Anthony Santasiere • 1946 Herman Steiner • 1947 Gata Kamsky • 2015 Hikaru Nakamura • 2016 Fabiano Caruana • 2017 Showalter • 1897-06 Harry Nelson Pillsbury • 1906-09 Jackson Isaac Kashdan • 1948 Weaver W. Adams • 1949 Albert Sandrin Jr. • 1950 Wesley So • 2018 Samuel Shankland • 2019 Hikaru Nakamura Showalter • 1909-36 Frank J. Marshall • 1936 Samuel Reshevsky • Arthur Bisguier • 1951 Larry Evans • 1952 Larry Evans • 1953 Donald 1938 Samuel Reshevsky • 1940 Samuel Reshevsky • 1942 Samuel 2020 Wesley So Byrne • 1954 Larry Evans, Arturo Pomar • 1955 Nicolas Rossolimo • Reshevsky • 1944 Arnold Denker • 1946 Samuel Reshevsky • 1948 ONLINE: COVID-19 • OCTOBER 2020 1956 Arthur Bisguier, James Sherwin • 1957 • Robert Fischer, Arthur Herman Steiner • 1951 Larry Evans • 1952 Larry Evans • 1954 Arthur Bisguier • 1958 E.