Analysis of Dream of the Red Chamber

Recommended publications

Utopia/Wutuobang As a Travelling Marker of Time*

The Historical Journal, , (), pp. – © The Author(s), . Published by Cambridge University Press. This is an Open Access article, distributed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives licence (http://creativecommons.org/licenses/by-nc-nd/./), which permits non-commercial re-use, distribution, and reproduction in any medium, provided the original work is unaltered and is properly cited. The written permission of Cambridge University Press must be obtained for commercial re-use or in order to create a derivative work. doi:./SX

UTOPIA/WUTUOBANG AS A TRAVELLING MARKER OF TIME* LORENZO ANDOLFATTO, Heidelberg University

ABSTRACT. This article argues for the understanding of ‘utopia’ as a cultural marker whose appearance in history is functional to the a posteriori chronologization (or typification) of historical time. I develop this argument via a comparative analysis of utopia between China and Europe. Utopia is a marker of modernity: the coinage of the word wutuobang (‘utopia’) in Chinese around is analogous and complementary to More’s invention of Utopia in , in that both represent attempts at the conceptualizations of displaced imaginaries, encounters with radical forms of otherness – the European ‘discovery’ of the ‘New World’ during the Renaissance on the one hand, and early modern China’s own ‘Westphalian’ refashioning on the other. In fact, a steady stream of utopian conjecturing seems to mark the latter: from the Taiping Heavenly Kingdom borne out of the Opium wars, via the utopian tendencies of late Qing fiction in the works of literati such as Li Ruzhen, Biheguan Zhuren, Lu Shi’e, Wu Jianren, Xiaoran Yusheng, and Xu Zhiyan, to the reformer Kang Youwei’s monumental treatise Datong shu.

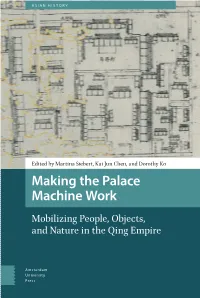

Making the Palace Machine Work

Asian History 11. Making the Palace Machine Work: Mobilizing People, Objects, and Nature in the Qing Empire. Edited by Martina Siebert, Kai Jun Chen, and Dorothy Ko. Amsterdam University Press.

Asian History. The aim of the series is to offer a forum for writers of monographs and occasionally anthologies on Asian history. The series focuses on cultural and historical studies of politics and intellectual ideas and crosscuts the disciplines of history, political science, sociology and cultural studies. Series Editor: Hans Hågerdal, Linnaeus University, Sweden. Editorial Board: Roger Greatrex, Lund University; David Henley, Leiden University; Ariel Lopez, University of the Philippines; Angela Schottenhammer, University of Salzburg; Deborah Sutton, Lancaster University.

Cover illustration: Artful adaptation of a section of the 1750 Complete Map of Beijing of the Qianlong Era (Qianlong Beijing quantu 乾隆北京全圖) showing the Imperial Household Department by Martina Siebert based on the digital copy from the Digital Silk Road project (http://dsr.nii.ac.jp/toyobunko/II-11-D-802, vol. 8, leaf 7). Cover design: Coördesign, Leiden. Lay-out: Crius Group, Hulshout. ISBN 978 94 6372 035 9; e-ISBN 978 90 4855 322 8 (pdf); DOI 10.5117/9789463720359; NUR 692. Creative Commons License CC BY NC ND (http://creativecommons.org/licenses/by-nc-nd/3.0). The authors / Amsterdam University Press B.V., Amsterdam 2021. Some rights reserved. Without limiting the rights under copyright reserved above, any part of this book may be reproduced, stored in or introduced into a retrieval system, or transmitted, in any form or by any means (electronic, mechanical, photocopying, recording or otherwise).

“A Preliminary Discussion of Literary Reform” by Hu Shi

Primary Source Document with Questions (DBQs): “A PRELIMINARY DISCUSSION OF LITERARY REFORM” By Hu Shi

Introduction. Beginning around 1917, Chinese intellectuals began to engage each other in serious discussion and debate on culture, history, philosophy, and related subjects — all with an eye to the bigger problem of China’s weakness and the possible solutions to that problem. This period of intellectual debate, labeled the May Fourth Movement, lasted to around 1921. Hu Shi (1891-1962) was one of the leading May Fourth intellectuals. A student of agriculture at Cornell University, and then of philosophy under John Dewey at Columbia University, Hu led the way in the movement to write Chinese in the vernacular, as opposed to the elegant, but (to the average Chinese) incomprehensible classical style. Hu also played a leading role in the cultural debates of the time. The following document represents Hu’s ideas on literary reform.

Document Excerpts with Questions (Longer selection follows this section). From Sources of Chinese Tradition: From 1600 Through the Twentieth Century, compiled by Wm. Theodore de Bary and Richard Lufrano, 2nd ed., vol. 2 (New York: Columbia University Press, 2000), 357-360. © 2000 Columbia University Press. Reproduced with the permission of the publisher. All rights reserved.

I believe that literary reform at the present time must begin with these eight items: (1) Write with substance. (2) Do not imitate the ancients. (3) Emphasize grammar. (4) Reject melancholy. (5) Eliminate old clichés. (6) Do not use allusions. (7) Do not use couplets and parallelisms.

Beijing, a Garden of Violence

Inter-Asia Cultural Studies. ISSN: 1464-9373 (Print) 1469-8447 (Online). Journal homepage: http://www.tandfonline.com/loi/riac20

Beijing, a garden of violence. Geremie R. Barmé

To cite this article: Geremie R. Barmé (2008) Beijing, a garden of violence, Inter-Asia Cultural Studies, 9:4, 612-639, DOI: 10.1080/14649370802386552. To link to this article: http://dx.doi.org/10.1080/14649370802386552. Published online: 15 Nov 2008.

ABSTRACT This paper examines the history of Beijing in relation to gardens—imperial, princely, public and private—and the impetus of the ‘gardener’, in particular in the twentieth-century. Engaging with the theme of ‘violence in the garden’ as articulated by such scholars as Zygmunt Bauman and Martin Jay, I reflect on Beijing as a ‘garden of violence’, both before the rise of the socialist state in 1949, and during the years leading up to the 2008 Olympics.

KEYWORDS: gardens, violence, party culture, Chinese history, Chinese politics, cultivation, revolution

The gardening impulse. This paper offers a brief examination of the history of Beijing in relation to gardens—imperial, princely, socialist, public and private—and the impetus of the ‘gardener’, in particular during the twentieth century.

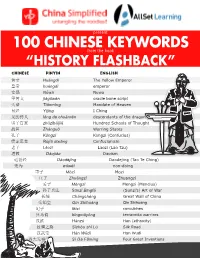

100 Chinese Keywords

100 CHINESE KEYWORDS from the book “HISTORY FLASHBACK”

| Chinese | Pinyin | English |
| --- | --- | --- |
| 黄帝 | Huángdì | The Yellow Emperor |
| 皇帝 | huángdì | emperor |
| 女娲 | Nǚwā | Nuwa |
| 甲骨文 | jiǎgǔwén | oracle bone script |
| 天命 | Tiānmìng | Mandate of Heaven |
| 易经 | Yìjīng | I Ching |
| 龙的传人 | lóng de chuánrén | descendants of the dragon |
| 诸子百家 | zhūzǐbǎijiā | Hundred Schools of Thought |
| 战国 | Zhànguó | Warring States |
| 孔子 | Kǒngzǐ | Kongzi (Confucius) |
| 儒家思想 | Rújiā sīxiǎng | Confucianism |
| 老子 | Lǎozǐ | Laozi (Lao Tzu) |
| 道教 | Dàojiào | Daoism |
| 道德经 | Dàodéjīng | Daodejing (Tao Te Ching) |
| 无为 | wúwéi | non-doing |
| 墨子 | Mòzǐ | Mozi |
| 庄子 | Zhuāngzǐ | Zhuangzi |
| 孟子 | Mèngzǐ | Mengzi (Mencius) |
| 孙子兵法 | Sūnzǐ Bīngfǎ | (Sunzi’s) Art of War |
| 长城 | Chángchéng | Great Wall of China |
| 秦始皇 | Qín Shǐhuáng | Qin Shihuang |
| 妃子 | fēizi | concubines |
| 兵马俑 | bīngmǎyǒng | terracotta warriors |
| 汉族 | Hànzú | Han (ethnicity) |
| 丝绸之路 | Sīchóu zhī Lù | Silk Road |
| 汉武帝 | Hàn Wǔdì | Han Wudi |
| 四大发明 | Sì Dà Fāmíng | Four Great Inventions |
| 指南针 | zhǐnánzhēn | compass |
| 火药 | huǒyào | gunpowder |
| 造纸术 | zàozhǐshù | paper-making |
| 印刷术 | yìnshuāshù | printing press |
| 司马迁 | Sīmǎ Qiān | Sima Qian |
| 史记 | Shǐjì | Records of the Grand Historian |
| 太监 | tàijiàn | eunuch |
| 三国 | Sānguó | Three Kingdoms (period) |
| 竹林七贤 | Zhúlín Qīxián | Seven Bamboo Sages |
| 花木兰 | Huā Mùlán | Hua Mulan |
| 京杭大运河 | Jīng-Háng Dàyùnhé | Grand Canal |
| 佛教 | Fójiào | Buddhism |
| 武则天 | Wǔ Zétiān | Wu Zetian |
| 四大美女 | Sì Dà Měinǚ | Four Great Beauties |
| 唐诗 | Tángshī | Tang poetry |
| 李白 | Lǐ Bái | Li Bai |
| 杜甫 | Dù Fǔ | Du Fu |
| 清明上河图 | Qīngmíng Shàng Hé Tú | Along the River During the Qingming Festival (painting) |
| 科举 | kējǔ | imperial examination system |
| 西藏 | Xīzàng | Tibet, Tibetan |
| 书法 | shūfǎ | calligraphy |
| 蒙古 | Měnggǔ | Mongolia, Mongolian |
| 成吉思汗 | Chéngjí Sīhán | Genghis Khan |
| 忽必烈 | Hūbìliè | Kublai |

Dreams of Timeless Beauties: A Deconstruction of the Twelve Beauties of Jinling in Dream of the Red Chamber and an Analysis of Their Image in Modern Adaptations

Dreams of Timeless Beauties: A Deconstruction of the Twelve Beauties of Jinling in Dream of the Red Chamber and an Analysis of Their Image in Modern Adaptations

Xiaolu (Sasha) Han

Submitted in Partial Fulfillment of the Prerequisite for Honors in East Asian Studies, April 2014

©2014 Xiaolu (Sasha) Han

Acknowledgements

First of all, I thank Professor Ellen Widmer not only for her guidance and encouragement throughout this thesis process, but also for her support throughout my time here at Wellesley. Without her endless patience this study would not have been possible, and I am forever grateful to be one of her advisees. I would also like to thank the Wellesley College East Asian Studies Department for giving me the opportunity to take on such a project and for challenging me to expand my horizons each and every day.

Sincerest thanks to my sisters away from home, Amy, Irene, Cristina, and Beatriz, for the many late night snacks, funny notes, and general reassurance during hard times. I would also like to thank Joe for never losing faith in my abilities and helping me stay motivated. Finally, many thanks to my family and friends back home. Your continued support through all of my endeavors and your ability to endure the seemingly endless thesis rambles has been invaluable to this experience.

Table of Contents

INTRODUCTION
CHAPTER 1: THE PAIRING OF WOOD AND GOLD
Lin Daiyu

Hu Shih and Education Reform

Syracuse University SURFACE. Theses - ALL. June 2020.

Moderacy and Modernity: Hu Shih and Education Reform. Travis M. Ulrich, Syracuse University

Recommended Citation: Ulrich, Travis M., "Moderacy and Modernity: Hu Shih and Education Reform" (2020). Theses - ALL. 464. https://surface.syr.edu/thesis/464

Abstract

This paper focuses on the use of “moderate” or “moderacy” as a term applied to categorize some Chinese intellectuals and their political positions throughout the 1920s and 30s. In the early decades of the twentieth century, the label of “moderate” (温和 or 温和派) became associated with an inability to align with a political or intellectual faction, thus preventing progress for either side or, in some cases, advocating against certain forms of progress. Hu Shih, however, who was one of the most influential intellectuals in modern Chinese history, proudly advocated for pragmatic moderation, as suggested by his slogan: “Boldness in suggesting hypotheses coupled with a most solicitous regard for control and verification.” His advocacy of moderation—which for him became closely associated with pragmatism—brought criticism from those on the left and right. This paper seeks to address these analytical assessments of Hu Shih by questioning not just the labeling of Hu Shih as a moderate, but also the negative connotations attached to moderacy as a political and intellectual label itself.

China (1750–1918)

Unit 2 Australia and Asia

China (1750–1918)

Chinese society underwent dramatic transformations between 1750 and 1918. At the start of this period, China was a vast and powerful empire, dominating much of Asia. The emperor believed that China, as a superior civilisation, had little need for foreign contact. Foreign merchants were only permitted to trade from one Chinese port. By 1918, China was divided and weak. Foreign nations such as Britain, France and Japan had key trading ports and ‘spheres of influence’ within China. New ideas were spreading from the West, challenging Chinese traditions about education, the position of women and the right of the emperor to rule.

Chapter 11

Source 1 An artist’s impression of Shanghai Harbour, c. 1875. Shanghai was one of the treaty ports established for British traders after China was defeated in the first Opium War (1839–42)

11A What were the main features of Chinese society around 1750?
1 Chinese society was isolated from the rest of the world, particularly the West. Suggest two possible consequences of this isolation.

11B What was the impact of Europeans on Chinese society from 1750 onwards?
1 One of the products that Western nations used,

11C How did the Chinese respond to European influence?
1 The Chinese attempted to restrict foreign influence and crush the opium trade, with little success. Why do you think this was the case?

11D What was the situation in China by 1918?
1 By 1918, the Chinese emperor had been removed and a Chinese republic established in which the nation’s leader was elected by the people.

Hong Lou Meng

Dreaming across Languages and Cultures: A Study of the Literary Translations of the Hong lou meng. By Laurence K. P. Wong.

This book first published 2014. Cambridge Scholars Publishing, 12 Back Chapman Street, Newcastle upon Tyne, NE6 2XX, UK. British Library Cataloguing in Publication Data: A catalogue record for this book is available from the British Library. Copyright © 2014 by Laurence K. P. Wong. All rights for this book reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior permission of the copyright owner. ISBN (10): 1-4438-5887-0, ISBN (13): 978-1-4438-5887-8

CONTENTS

Preface
Acknowledgements
Note on Romanization
Note on Abbreviations
Note on Glossing
Introduction
Chapter One

Private Life and Social Commentary in the Honglou Meng

University of Pennsylvania ScholarlyCommons. Honors Program in History (Senior Honors Theses), Department of History. March 2007.

Authorial Disputes: Private Life and Social Commentary in the Honglou meng. Carina Wells

Wells, Carina, "Authorial Disputes: Private Life and Social Commentary in the Honglou meng" (2007). Honors Program in History (Senior Honors Theses). 6. https://repository.upenn.edu/hist_honors/6

A Senior Thesis Submitted in Partial Fulfillment of the Requirements for Honors in History. Faculty Advisor: Siyen Fei

University of Pennsylvania. Authorial Disputes: Private Life and Social Commentary in the Honglou meng. A senior thesis submitted in partial fulfillment of the requirements for Honors in History by Carina L. Wells. Philadelphia, PA, March 23, 2003. Faculty Advisor: Siyen Fei. Honors Director: Julia Rudolph

Contents

Acknowledgements
Explanatory Note
Dynasties and Periods
Selected Reign

China's Last Great Classical Novel at the Crossroads Of

RES HUMANITARIAE XIV, ISSN 1822-7708

August Sladek – retired professor of Germanistik, University of Flensburg. Research interests: mathematical linguistics, comparative philology, philosophy, ontology. Address: Landwehr 27, D-22087 Hamburg, Germany.

August Sladek, University of Flensburg

CHINA’S LAST GREAT CLASSICAL NOVEL AT THE CROSSROADS OF TRADITION AND MODERNIZATION1

Annotation

Written in the mid-18th century, “Dream of the Red Chamber” is the most recent of China’s four great classical novels. Within the framework of traditional storytelling it encompasses many narrative modes: realistic, psychological, symbolic and fantastic. Written in everyday language (the Beijing dialect), it became an important source for the formation of modern standard Chinese (putong hua) during the New Culture Movement from the 1920s onward. The novel was highly valued by Mao Zedong, and the communists tried to stamp it as “progressive”. The novel’s hidden religious (Buddhist) intention went unnoticed by readers and scholars of religion alike. KEYWORDS: Chinese literature, classical novel, tradition and modernity.

Abstract

“The Red Chamber’s Dream” from the middle of the 18th century is the youngest of China’s “Four Great Classical Novels”. In the cloak of traditional story-telling it deploys a

1 Quotations from the novel as well as translations of names and terms are taken from Hawkes & Minford (1973–1986). References to their translation are given by the number of volume (Roman numerals) and of pages (Arabic numerals).

Hong Lou Meng in Jorge Luis Borges’s Narrative

HONG LOU MENG IN JORGE LUIS BORGES’S NARRATIVE

Haiqing Sun

Debo a la conjunción de un espejo y de una enciclopedia el descubrimiento […] [I owe to the conjunction of a mirror and an encyclopedia the discovery (…)]
Jorge Luis Borges

Cao Xueqin’s Hong Lou Meng (Dream of the Red Chamber) represents the highest achievement of the classical narrative during the Ming-Qing period of China.1 Studies of the text have long been an important subject for scholars worldwide. In 1937, Jorge Luis Borges dedicates one of his essays on world literature to Hong Lou Meng, which displays a curious observation of this masterpiece.2 Based on German scholar Franz Kuhn’s translation, Borges presents the Chinese novel as “[…] la novela más famosa de una literatura casi tres veces milenaria […] Abunda lo fantástico” [the most famous novel of a literature almost three thousand years old (…) The fantastic abounds] (4: 329). Known as a master of fictitious narrative himself, Borges seems not ready to present any further understanding of Hong Lou Meng in the essay. He reviews the first three chapters of the novel in an abstract and somewhat schematic way:

El primer capítulo cuenta la historia de una piedra de origen celestial, destinada a soldar una avería del firmamento y que no logra ejecutar su divina misión; el segundo narra que el héroe de la obra ha nacido con una lámina de jade bajo la lengua; el tercero nos hace conocer al héroe (…) (4: 329)

[The first chapter tells the story of a stone of celestial origin, destined to mend a breach in the firmament, which fails to carry out its divine mission; the second relates that the hero of the work was born with a plate of jade under his tongue; the third introduces us to the hero (…)]

Then, he comes to admit that he feels confused by the “grand view” of Hung Lou Meng’s narrative: “[l]a novela prosigue de una manera un tanto irresponsable o insípida; los personajes secundarios pululan y no sabemos bien cuál es cuál. [The novel proceeds in a somewhat irresponsible or insipid manner; the secondary characters swarm and we do not quite know which is which.]

1 The English versions of the title and author of Hong Lou Meng in the references include the following: Hung Lou Meng and Tsao Hsue Kin (by Borges), Hong Lou Meng and Cao Xue Qin (by Xiao, and Scott), and Hung Lu Meng and Tsao Hsueh Chin (by Balderston). Others use the translation Dream of the Red Chamber or the original title The Story of the Stone.

2 Borges wrote this series of essays for the journal El Hogar in Argentina.

Variaciones Borges 22 (2006)