Identifying Indicators of Program Quality in U.S. Baccalaureate Aviation Programs

Paul Douglas Lindseth

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

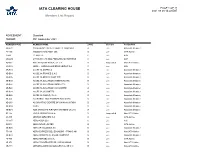

IATA CLEARING HOUSE PAGE 1 of 21 2021-09-08 14:22 EST Member List Report

AGREEMENT: Standard. PERIOD: P01 September 2021.

| MEMBER CODE | MEMBER NAME | ZONE | STATUS | CATEGORY |
|---|---|---|---|---|
| XB-B72 | "INTERAVIA" LIMITED LIABILITY COMPANY | B | Live | Associate Member |
| FV-195 | "ROSSIYA AIRLINES" JSC | D | Live | IATA Airline |
| 2I-681 | 21 AIR LLC | C | Live | ACH |
| XD-A39 | 617436 BC LTD DBA FREIGHTLINK EXPRESS | C | Live | ACH |
| 4O-837 | ABC AEROLINEAS S.A. DE C.V. | B | Suspended | Non-IATA Airline |
| M3-549 | ABSA - AEROLINHAS BRASILEIRAS S.A. | C | Live | ACH |
| XB-B11 | ACCELYA AMERICA | B | Live | Associate Member |
| XB-B81 | ACCELYA FRANCE S.A.S | D | Live | Associate Member |
| XB-B05 | ACCELYA MIDDLE EAST FZE | B | Live | Associate Member |
| XB-B40 | ACCELYA SOLUTIONS AMERICAS INC | B | Live | Associate Member |
| XB-B52 | ACCELYA SOLUTIONS INDIA LTD. | D | Live | Associate Member |
| XB-B28 | ACCELYA SOLUTIONS UK LIMITED | A | Live | Associate Member |
| XB-B70 | ACCELYA UK LIMITED | A | Live | Associate Member |
| XB-B86 | ACCELYA WORLD, S.L.U | D | Live | Associate Member |
| 9B-450 | ACCESRAIL AND PARTNER RAILWAYS | D | Live | Associate Member |
| XB-280 | ACCOUNTING CENTRE OF CHINA AVIATION | B | Live | Associate Member |
| XB-M30 | ACNA | D | Live | Associate Member |
| XB-B31 | ADB SAFEGATE AIRPORT SYSTEMS UK LTD. | A | Live | Associate Member |
| JP-165 | ADRIA AIRWAYS D.O.O. | D | Suspended | Non-IATA Airline |
| A3-390 | AEGEAN AIRLINES S.A. | D | Live | IATA Airline |
| KH-687 | AEKO KULA LLC | C | Live | ACH |
| EI-053 | AER LINGUS LIMITED | B | Live | IATA Airline |
| XB-B74 | AERCAP HOLDINGS NV | B | Live | Associate Member |
| 7T-144 | AERO EXPRESS DEL ECUADOR - TRANS AM | B | Live | Non-IATA Airline |
| XB-B13 | AERO INDUSTRIAL SALES COMPANY | B | Live | Associate Member |
| P5-845 | AERO REPUBLICA S.A. | | | |

Assessment of Whether an Airline's Geographic Affiliation Matters for the Air Route Offering in a Country

Assessment of whether an airline's geographic affiliation matters for the air route offering in a country. Frode Steen, Professor, Department of Economics, Norwegian School of Economics and Business Administration, Helleveien 30, 5045 Bergen, NORWAY. Email: [email protected]. 25 May 2005.

This report was written on commission from Samferdselsdepartementet, the Norwegian Ministry of Transport and Communications (ref. no. 6742). I alone am, of course, responsible for all opinions and any errors that appear in the report. © Frode Steen

Contents

1. Introduction
2. Networks in aviation
3. Network economics in aviation
3.1 Cost-based network economics in aviation
3.1.1 Economies of scale in aviation
3.1.2 Economies of scope in aviation
3.1.3 Economies of density in aviation
3.2 Demand-driven network economics in aviation
4. Aviation networks in Norway and Scandinavia
5. Low-cost carriers: direct routes with LCCs as a network solution
6. Essential ingredients in local network operation: the local air route offering in Norway
6.1 Location of the national hub
6.2 The individual routes: local route structure in Norway
6.3 Essential local inputs
6.4 Does competition produce optimal national route networks?
7. Network design and the future of the Norwegian aviation market
8. References
Appendix 1: Airline code overview

1. Introduction

In the first part of the report I discuss which networks are typically found in aviation. I then go through the theory of network economics, which determines how airlines set up their network designs. Cost effects such as economies of scale, economies of scope, and economies of density are reviewed before we look at how demand characteristics also affect network economics. The aviation network in Norway and Scandinavia is then discussed specifically, covering the networks of both the network carriers and the low-cost carriers.

Big V Supermarkets, Inc. Et Al., V. Wakefern Food Corporation

FOR PUBLICATION

UNITED STATES BANKRUPTCY COURT FOR THE DISTRICT OF DELAWARE

In re: BIG V HOLDING CORP., et al., Debtors. Chapter 11, Case No. 00-4372 (RTL) (Jointly Administered)

BIG V SUPERMARKETS, INC., et al., Plaintiffs, v. WAKEFERN FOOD CORPORATION, Defendant. Adversary Proceeding No. 00-1976

OPINION

APPEARANCES:

WILLKIE, FARR & GALLAGHER, Brian E. O’Connor, Esq., Stephen W. Greiner, Esq., 787 Seventh Avenue, New York, New York 10019-6099, Counsel for Plaintiffs

CADWALADER, WICKERSHAM & TAFT, Dennis J. Block, Esq., Barry J. Dichter, Esq., Kenneth A. Freeling, Esq., Jason M. Halper, Esq., 100 Maiden Lane, New York, New York 10038, Co-Counsel for Defendant, Wakefern Food Corp.

WILENTZ, GOLDMAN & SPITZER, Frederick K. Becker, Esq., Suite 900 Box 10, 90 Woodbridge Center Drive, Woodbridge, New Jersey 07095, Co-Counsel for Defendant, Wakefern Food Corp.

RAYMOND T. LYONS, U.S.B.J.

TABLE OF CONTENTS

A. INTRODUCTION
B. FINDINGS OF FACT
1. Historical Background
a. Wakefern’s Founding
b. Wakefern’s Growth

Nantucket Memorial Airport Page 32

OFFICIAL PUBLICATION OF THE NATIONAL AIR TRANSPORTATION ASSOCIATION, 2nd Quarter 2011

Nantucket Memorial Airport, page 32

Also Inside: • A Workers Compensation Controversy • Swift Justice: DOT Enforcement • Benefits of Airport Minimum Standards

GET IT ALL AT AVFUEL. All Aviation Fuels / Contract Fuel / Pilot Incentive Programs / Fuel Quality Assurance / Refueling Equipment / Aviation Insurance / Fuel Storage Systems / Flight Planning and Trip Support. Global Supplier of Aviation Fuel and Services. 800.521.4106 • www.avfuel.com • facebook.com/avfuel • twitter.com/AVFUELtweeter

First. Best. Only. NetJets® pioneered the concept of fractional jet ownership in 1986 and became a Berkshire Hathaway company in 1998. And to this day, we are driven to be the best in the business without compromise. It’s why our safety standards are so exacting, our global infrastructure is so extensive, and our service is so sophisticated. When it comes to the best in private aviation, discerning fliers know there’s Only NetJets®. SHARE | LEASE | CARD | ON ACCOUNT | MANAGEMENT. 1.877.JET.0139 | NETJETS.COM. A Berkshire Hathaway company.

All fractional aircraft offered by NetJets® in the United States are managed and operated by NetJets Aviation, Inc. Executive Jet® Management, Inc. provides management services for customers with aircraft that are not fractionally owned, and provides charter air transportation services using select aircraft from its managed fleet. Marquis Jet® Partners, Inc. sells the Marquis Jet Card®. Marquis Jet Card flights are operated by NetJets Aviation under its 14 CFR Part 135 Air Carrier Certificate. Each of these companies is a wholly owned subsidiary of NetJets Inc.

IS-BAO Registrations : Operators | IS-BAO Organizations On-Line Listing Sep-25-2021 09:51 AM

| Organization | City | Country | Registration ID# |
|---|---|---|---|
| BestFly Limitada | Luanda | Angola | 750 |
| Revesco Aviation Pty Ltd | Perth | Australia | 187 |
| Walker Air Service | Mascot | Australia | 2628 |
| Royal Flying Doctor Service of Australia (Queensland Section) | Brisbane | Australia | 2384 |
| Australian Corporate Jet Centres Pty Ltd | Essendon | Australia | 756 |
| Consolidated Press Holdings Pty Ltd | Sydney | Australia | 361 |
| Westfield/LFG Aviation Group Australia | Sydney | Australia | 339 |
| Business Aviation Solutions | Bilinga | Australia | 2283 |
| ExecuJet Australia | Mascot | Australia | 510 |
| International Jet Management GmbH | Schwechat | Austria | 2319 |
| Avcon Jet | Wien | Austria | 2290 |
| Sparfell Luftfahrt GmbH | Schwechat | Austria | 2385 |
| Tyrolean Jet Services GmbH | Innsbruck | Austria | 274 |
| Squadron Aviation Services Ltd | Hamilton | Bermuda | 189 |
| Trans World Oil Ltd. dba T.W.O. Air (Bermuda) Ltd | Hamilton | Bermuda | 197 |
| S&K Bermuda Ltd. | Pembroke | Bermuda | 45 |
| Minera San Cristobal S.A. | La Paz | Bolivia | 733 |
| Vale SA | Rio de Janeiro | Brazil | 560 |
| AVANTTO Administração de Aeronaves | Sao Paulo | Brazil | 654 |
| PAIC Participacoes Ltda | Sao Paulo | Brazil | 480 |
| Lider Taxi Aereo S/A Brasil | Belo Horizonte | Brazil | 48 |
| EMAR Taxi Aereo | Rio das Ostras | Brazil | 2615 |
| ICON Taxi Aereo Ltda. | São Paulo | Brazil | 2476 |
| Banco Bradesco S/A | Osasco | Brazil | 2527 |
| M. Square Holding Ltd. | Road Town | British Virgin Islands | 2309 |
| London Air Services Limited dba London Air Services | South Richmond | Canada | 2289 |
| Chartright Air Group | Mississauga | Canada | 432 |
| ACASS Canada Ltd. | Montreal | Canada | 102 |
| Sunwest Aviation Ltd | Calgary | Canada | 105 |
| Air Partners Corporation | Calgary | Canada | 764 |
| Coulson Aviation (USA) Inc. | | | |

The Future of Airline Distribution, 2016 - 2021

By Henry H. Harteveldt, Atmosphere Research Group

CONTENTS

INTRODUCTION
RESEARCH METHODOLOGY
EXECUTIVE SUMMARY
HOW SHOULD AIRLINES PREPARE TO SERVE THE AIRLINE TRAVELER OF 2021?
TECHNOLOGY INNOVATION AND THE EVOLVING TECHNOLOGY LANDSCAPE
AIRLINE DISTRIBUTION IN 2021
CONCLUSION
ENDNOTES

© 2016 International Air Transport Association. All rights reserved.

INTRODUCTION

Introduction from Atmosphere Research

Atmosphere Research Group is honored to have once again been selected by IATA to prepare this report on the future of airline distribution. We believe that the five-year timeframe this report covers – 2016 to 2021 – will see the successful introduction of true retailing among the world’s airlines and their distribution partners.

This report reflects Atmosphere Research’s independent and objective analysis based on our extensive industry and consumer research (for more information about how the research was conducted, please refer to the “Research Methodology” section).

Airlines that want to become true retailers are well-positioned to do so. Carriers have an abundance of technologies, including cloud computing, artificial intelligence, and mobility, that they can use to help them bring their products to market in more meaningful ways. IATA’s NDC, One Order, and NGISS initiatives are being brought to market to help airlines be more successful businesses. As each airline independently contemplates its distribution strategies and tactics, we hope this report will serve as a helpful resource.

Introduction from IATA

In 2012 IATA commissioned Atmosphere Research to conduct a survey on the Future of Airline Dis-

Game changes are prompted by consumer needs, or by the ability to offer new solutions.

Prior Compliance List of Aircraft Operators Specifying the Administering Member State for Each Aircraft Operator – June 2014

Inclusion in the prior compliance list allows aircraft operators to know which Member State will most likely be attributed to them as their administering Member State so they can get in contact with the competent authority of that Member State to discuss the requirements and the next steps. For a number of reasons, and especially because a number of aircraft operators use the services of management companies, some of those operators have not been identified in the latest update of the EEA-wide list of aircraft operators adopted on 5 February 2014. The present version of the prior compliance list includes those aircraft operators that submitted their fleet lists between December 2013 and January 2014.

BELGIUM

| CRCO Identification no. | Operator Name | State of the Operator |
|---|---|---|
| 31102 | ACT AIRLINES | TURKEY |
| 7649 | AIRBORNE EXPRESS | UNITED STATES |
| 33612 | ALLIED AIR LIMITED | NIGERIA |
| 29424 | ASTRAL AVIATION LTD | KENYA |
| 31416 | AVIA TRAFFIC COMPANY | TAJIKISTAN |
| 30020 | AVIASTAR-TU CO. | RUSSIAN FEDERATION |
| 40259 | BRAVO CARGO | UNITED ARAB EMIRATES |
| 908 | BRUSSELS AIRLINES | BELGIUM |
| 25996 | CAIRO AVIATION | EGYPT |
| 4369 | CAL CARGO AIRLINES | ISRAEL |
| 29517 | CAPITAL AVTN SRVCS | NETHERLANDS |
| 39758 | CHALLENGER AERO | PHILIPPINES |
| f11336 | CORPORATE WINGS LLC | UNITED STATES |
| 32909 | CRESAIR INC | UNITED STATES |
| 32432 | EGYPTAIR CARGO | EGYPT |
| f12977 | EXCELLENT INVESTMENT LLC | UNITED STATES |
| 32486 | FAYARD ENTERPRISES | UNITED STATES |
| f11102 | FedEx Express Corporate Aviation | UNITED STATES |
| 13457 | Flying | |

NACC Contact List July 2015 Update

ID POC Name POC Email Office Cell Filer Other Comments ABS Jets (Czech Republic) ABS Michal Pazourek (Chf Disp) [email protected] +420 220 111 388 + 420 602 205 (LKPRABPX & LKPRABY) [email protected] 852 ABX Air ABX Alain Terzakis [email protected] 937-366-2464 937-655-0703 (800) 736-3973 x62450 KILNABXD Ron Spanbauer [email protected] 937-366-2435 (937) 366-2450 24hr. AeroMexico AMX Raul Aguirre (FPF) [email protected] 011 (5255) 9132-5500 (281) 233-3406 Files thru HP/EDS Air Berlin BER Recep Bayindir [email protected] 49-30-3434-3705 EDDTBERA [email protected] AirBridgeCargo Airlines ABW Dmitry Levushkin [email protected] Chief Flight Dispatcher 7 8422 590370 Also see Volga-Dnepr Airlines Volga-Dnepr Airlines 7 8422 590067 (VDA) Air Canada ACA Richard Steele (Mgr Flt Supt) [email protected] 905 861 7572 647 328-3895 905 861 7528 CYYZACAW thru LIDO Rod Stone [email protected] 905 861 7570 Air China CCA Weston Li (Mgr. American Ops) [email protected] 604-233-1682 778-883-3315 Zhang Yuenian [email protected] Air Europa AEA Bernardo Salleras [email protected] Flight Ops [email protected] 34 971 178 281 (Ops Mgr) Air France AFR Thierry Vuillaume Thierry Vuillaume <[email protected]> +33 (0)1 41 56 78 65 LFPGAFRN Air India AIC Puneet Kataria [email protected] 718-632-0125 917-9811807 + 91-22-66858028 KJFKAICO [email protected] 718-632-0162direct Use SABRE for flights Files thru HP/EDS arriving/departing USA Air New Zealand -

For Immediate Release Qatar Airways Announces

FOR IMMEDIATE RELEASE

QATAR AIRWAYS ANNOUNCES INVESTMENT IN JETSUITE, INC.

International aviation leader joins JetBlue and private investors in fueling the future of private and semiprivate aviation

Washington, D.C. – April 10, 2018 – Qatar Airways, the national airline of the State of Qatar and one of the world’s fastest-growing airlines, and JetSuite, Inc., a leading U.S. private aviation company, announced today in the presence of the Qatari Minister of Finance and Chairman of Qatar Airways, His Excellency Mr. Ali Sharif Al Emadi, that Qatar Airways has taken a minority stake in JetSuite, Inc. and indirectly in JetSuiteX, Inc. Qatar Airways joins JetBlue, which will be increasing its investment, and a number of private investors as stakeholders in both JetSuite and JetSuiteX.

With this investment in JetSuite, Inc., Qatar Airways will help fuel the growth of JetSuite’s private aviation business, which currently focuses on light and very light jets. The investment extends to JetSuiteX, the sibling company to JetSuite, further accelerating the expansion of its acclaimed semi-private air service on the U.S. West Coast and beyond.

JetSuiteX fills a niche in underserved short haul markets and small airports, and enjoys a Net Promoter Score over 90, higher than the major U.S. airlines. JetSuiteX operates its fleet of Embraer 135 aircraft from private terminals in California and Nevada, with no lines, no waiting and no stress, at a price competitive with commercial travel. The Qatar Airways investment will enable faster expansion of this fleet and the ability to reach new destinations, including the potential to bring the semi-private model to additional U.S.

I ■Ii Wm ■ I ■'M■ Hi PI M HI :Ii I >M L M .'T, •■ " ' '^V.: I K M 11# I !;■: ■ • -,

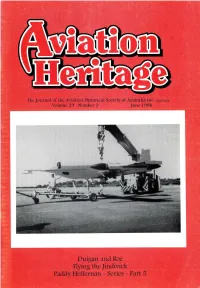

I ■ii Wm ■ I ■'M■ Hi PI m HI :iI I >M l m .'T, •■ " ' '^v.: I K m 11# I !;■: ■ • -, - . -V. , 'Y_^ IIH IRSIII K I .:SJ i /• i.:» . ■ .^m ■i ■ k i m I ■ m I ii 1 I I I n I - -I M ii il Hi I ’m pi IH M "I Ii I a ii :MJ lii^H il ................................ ...............Ii- ', ilipf - 1:W I ^He Journal of the Aviation Historical Society of Australia Inc. aoossgssp WM VI- Volume 29 Numbers M i .... m liillii iii i ill ‘ iiii II lipl ill ii ill IIP m mmm sS :i■ 1 Ii ■ Duigan and Ro m ■ 11 iiil ■ II •3 Flying the Jindivick _ IIPIi ■ Paddy Heffernan ~ Series - Part 5 I mPlH 'Mil II m 8#' 1 fc. IP The Journal of the AVIATION HISTORICAL SOCIETY im of AUSTRALIA Inc. A00336533P Volume 29 - Number 3 - June 1998 EDITORS, DESIGN & PRODUCTION Bill and Judith Baker EDITORIAL Address all correspondence to; This month we say goodbye to another Australian The Editor, AHSA, Aviation legend - The Jindivick. Sold down the river, P.O. Box 2007, gone is the manufacturing capabilities, the trained flying South Melbourne 3205 Victoria, Australia. controllers. To be replaced by an American product. 03 9583 4072 Phone & Fax Sound familiar? Nomad, Victa, Wamira and on and on! Subscription Rates; What is wrong with our, as in most of afore mentioned Australia A$40. products, service personnel who are responsible for Rest of World A$50. Surface Mail these decisions. As in the case of Wamira the goal A$65. -

Sea King Salute: Tim Ripley Looks at the Operational History of the Westland Sea King in UK Military Service

AVIATION NEWS: THE PAST, PRESENT AND FUTURE OF FLIGHT. UK £4.50. FEBRUARY 2016. www.aviation-news.co.uk

Cover lines: UK Sea King Salute; Norwegian Ambitions: Low-cost Scandinavian Style; Exclusive: Firefighting BAe 146s & RJ85s, Next-gen Airtankers; A-7 Corsair II: LTV’s Bomb Truck; Sukhoi Superjet: Russia’s Rising Star.

CONTENTS

FEATURES

Spain’s Multi-role Boeing 707s: Rodrigo Rodríguez Costa details the career of the Spanish Air Force’s Boeing 707s which have served the country’s armed forces since the late 1980s.

BAe 146 & RJ85 Airtankers: In North America and Australia, converted BAe 146 and RJ85 airliners are being given a new lease of life working as airtankers, as Frédéric Marsaly explains.

Sea King Salute: Tim Ripley looks at the operational history of the Westland Sea King in UK military service.

Sukhoi Superjet, Russia’s Rising Star: Aviation News Assistant Editor James Ronayne profiles the Russian regional jet with global ambitions.

A-7 Corsair II, LTV’s Bomb Truck: A veteran of both the Vietnam conflict and the first Gulf War, the Ling-Temco-Vought A-7 Corsair II packed a punch, as Patrick Boniface describes.

Norwegian Ambitions: Aviation News Editor Dino Carrara examines the rapid expansion of low-cost carrier Norwegian and its growing long-haul network.

REGULARS

Headlines; Civil News; Military News; Preservation News; Flight Bag; Air Mail; Airport Movements; Air Base Movements; Register Review.

February 2019 ~ the BRIEFING SHEET Page 2 of 8

THE BRIEFING SHEET

www.deltaclippedwings.org

CO-PRESIDENTS: RoyAnne Donnelly, Donna Wilson
EDITOR: Sue Zino
VOLUME 02:2019

Welcome Aboard

We hope everyone is easing into the new year and hasn’t blown any resolutions yet!!! Our luncheon on January 31st will be our last one before our Annual Fundraiser featuring a Silent Auction and Raffle on March 22nd. Please keep in mind, soliciting items for our fundraiser is not limited to those members who live in and around Atlanta; we gladly accept donations from across our system. For example, rental/vacation properties, hotel packages in fun and interesting cities or countryside inns/B&Bs, etc. In other words, there is a vast world out there to pick and choose auction items from. Please help us make this year’s fundraiser better than ever; our charities depend on us and we are proud to help support their efforts. Looking forward to seeing many of you on January 31st!!

RoyAnne Donnelly, Co-President • [email protected] • (770) 855.5476
Donna Wilson, Co-President • [email protected] • (678) 517.7454

VICE PRESIDENTS, PROGRAMS – FUNDRAISER
Retta Christoforatos • [email protected] • (770) 597.3054
Kathy Roper • [email protected] • (404) 401.1234

Delta Clipped Wings Annual Fundraiser
Friday, March 22, 2019 | Atlanta Airport Marriott
Featuring A Mobile Bidding Solutions Company

GRAND PRIZE: $2,000 eGift Card for Travel Anywhere on the Delta System Plus $1,000 cash

Time is slipping away … March 22nd will be here before we know it. Alone we can do so little, together we can do so much.