A Framework for Embedded Digital Musical Instruments

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Product Catalog Winter 2014: The Original Red Keyboards

Nord Keyboards Product Catalog, Winter 2014. Synthesizers • Stage Pianos • Combo Organ. Handmade in Sweden by Clavia DMI AB. The Original Red Keyboards: The Nord factory is located in the creative area of Stockholm also known as SoFo, in the district of Södermalm. With everything located in the same building, communication between development and production is only a matter of walking a few meters. We are proud to say all our Nord products are assembled by hand and they all go through a series of tough tests to ensure they’ll be ready for a long and happy life ‘on the road’. Contents: Synthesizers (Nord Lead A1 (new), Nord Lead 4, Nord Drum 2); Stage Pianos (Nord Electro 4, Nord Piano 2, Nord Stage 2); Combo Organ (Nord C2D); Sound Libraries. Manufacturer: Clavia DMI AB, Box 4214, SE-102 65 Stockholm, Sweden. Phone: +46 8 442 73 60 | Fax: +46 8 644 26 50 | Email: [email protected] | www.nordkeyboards.com. It all started back in 1983... In 1983 founder Hans Nordelius created the Digital Percussion Plate 1 – the first drum pad allowing for dynamic playing using sampled sounds. The DPP1 was an instant success and soon thereafter the ... In 2001 the first Nord Electro was released, introducing stunning emulations of classic vintage electro-mechanical instruments with a level of portability generally not associated with the original ... In 2008 we released the Nord Electro 3 and the exclusively licensed sounds from the Mellotron and Chamberlin. The Electro 3 became one of the most successful products we’ve ever made. -

Extending the Faust VST Architecture with Polyphony, Portamento and Pitch Bend

Extending the Faust VST Architecture with Polyphony, Portamento and Pitch Bend. Yan Michalevsky, Department of Electrical Engineering, Stanford University, [email protected]; Julius O. Smith, Center for Computer Research in Music and Acoustics (CCRMA), Stanford University, AES Fellow, [email protected]; Andrew Best, Blamsoft, Inc., [email protected]. Abstract: We introduce the vsti-poly.cpp architecture for the Faust programming language. It provides several features that are important for practical use of Faust-generated VSTi synthesizers. We focus on the VST architecture as one that has been used traditionally and is supported by many popular tools, and add several important features: polyphony, note history and pitch-bend support. These features take Faust-generated VST instruments a step forward in terms of generating plugins that could be used in Digital Audio Workstations (DAW) for real-world music production. Keywords: Faust, VST, Plugin, DAW. 1 Introduction: Faust [5] is a popular music/audio signal processing language developed by Yann Orlarey et al. ... VST (Virtual Studio Technology) plugin standard was released by Steinberg GmbH (famous for Cubase and other music and sound production products) in 1996, and was followed by the widespread version 2.0 in 1999 [8]. It is a particularly common format supported by many older and newer tools. Some of the features expected from a VST plugin can be found in the VST SDK code. Examining the list of MIDI events [1] can also hint at what capabilities are expected to be implemented by instrument plugins. We also draw from our experience with MIDI instruments and commercial VST plugins in order to formulate sound feature requirements. In order for Faust to be a practical tool for generating such plugins, it should support most of the features expected, such as the following: • Responding to MIDI keyboard events -

Computer Music

THE OXFORD HANDBOOK OF COMPUTER MUSIC. Edited by ROGER T. DEAN. OXFORD UNIVERSITY PRESS. Oxford University Press, Inc., publishes works that further Oxford University's objective of excellence in research, scholarship, and education. Oxford New York Auckland Cape Town Dar es Salaam Hong Kong Karachi Kuala Lumpur Madrid Melbourne Mexico City Nairobi New Delhi Shanghai Taipei Toronto. With offices in Argentina Austria Brazil Chile Czech Republic France Greece Guatemala Hungary Italy Japan Poland Portugal Singapore South Korea Switzerland Thailand Turkey Ukraine Vietnam. Copyright © 2009 by Oxford University Press, Inc. First published as an Oxford University Press paperback ion Published by Oxford University Press, Inc. 198 Madison Avenue, New York, New York 10016 www.oup.com Oxford is a registered trademark of Oxford University Press. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior permission of Oxford University Press. Library of Congress Cataloging-in-Publication Data: The Oxford handbook of computer music / edited by Roger T. Dean. p. cm. Includes bibliographical references and index. ISBN 978-0-19-979103-0 (alk. paper) 1. Computer music -- History and criticism. I. Dean, R. T. MI T 1.80.09 1009 i 1008046594 789.99 OXF tin Printed in the United States of America on acid-free paper. CHAPTER 12: SENSOR-BASED MUSICAL INSTRUMENTS AND INTERACTIVE MUSIC, ATAU TANAKA. Musicians, composers, and instrument builders have been fascinated by the expressive potential of electrical and electronic technologies since the advent of electricity itself. -

Biennale des musiques exploratoires

Biennale des musiques exploratoires, 14 March to 4 April. Festival programme, with events listed between 13 and 24 March. Works and artists named: Kurt Schwitters, Sonate in Urlauten, with a cadenza by Georges Aperghis; Charlie Chaplin, "The Hynkel Speech"; Benjamin de La Fuente, Bypass; Lara Morciano, Riji (world premiere); Hugues Dufourt, Ur-Geräusch; Maurice Ravel, Boléro; Le Papillon Noir (Yann Robin, music; Yannick Haenel, libretto; Elise Chauvin, actress-singer); Son primordial; Tourniquet; Veggie Orchestra; Playtronica; Orchestre national de Lyon; Ensemble Multilatérale; Les Métaboles; David Jisse (concept, voice, samples and electronics); Kasper T. Toeplitz (bass and percussion); Marie Nachury (text, singing and acting). Venues named: Salle du Ballet, Parvis de l'Auditorium, Grande Salle, Bas-Atrium, Petite Salle, Salle Proton, Le Sucre, Auditorium - Orchestre national de Lyon, and Théâtre de la Renaissance, Oullins. Installed for the whole weekend, La Bulle-Environnement will host short artistic forms and workshops for young and old. A concert followed by a lunch and a hands-on family workshop that makes fruits and vegetables sing. Admission ranges from free to €39. -

How to Create Music with GNU/Linux

How to create music with GNU/Linux, by Emmanuel Saracco ([email protected]). Copyright © 2005-2009 Emmanuel Saracco. Warning: WORK IN PROGRESS. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is available on the World Wide Web at http://www.gnu.org/licenses/fdl.html. Revision History: Revision 0.0, 2009-01-30, revised by: es. Not yet versioned: It is still a work in progress. Dedication: This howto is dedicated to all GNU/Linux users that refuse to use proprietary software to work with audio. Many thanks to all Free developers and Free composers that help us day-by-day to make this possible. Table of Contents: Foreword; 1. System settings and tuning; 1.1. My Studio; 1.2. File system; 1.3. Linux Kernel -

MOTIF XS Editor Installation Guide

MOTIF XS Editor Installation Guide ATTENTION SOFTWARE LICENSING AGREEMENT PLEASE READ THIS SOFTWARE LICENSE AGREEMENT (“AGREEMENT”) CAREFULLY BEFORE USING THIS SOFTWARE. YOU ARE ONLY PERMITTED TO USE THIS SOFTWARE PURSUANT TO THE TERMS AND CONDITIONS OF THIS AGREEMENT. THIS AGREEMENT IS BETWEEN YOU (AS AN INDIVIDUAL OR LEGAL ENTITY) AND YAMAHA CORPORATION (“YAMAHA”). BY DOWNLOADING, INSTALLING, COPYING, OR OTHERWISE USING THIS SOFTWARE YOU ARE AGREEING TO BE BOUND BY THE TERMS OF THIS LICENSE. IF YOU DO NOT AGREE WITH THE TERMS, DO NOT DOWNLOAD, INSTALL, COPY, OR OTHERWISE USE THIS SOFTWARE. IF YOU HAVE DOWNLOADED OR INSTALLED THE SOFTWARE AND DO NOT AGREE TO THE TERMS, PROMPTLY DELETE THE SOFTWARE. 1. GRANT OF LICENSE AND COPYRIGHT Yamaha hereby grants you the right to use one copy of the software program(s) and data (“SOFTWARE”) accompanying this Agreement. The term SOFTWARE shall encompass any updates to the accompanying software and data. The SOFTWARE is owned by Yamaha and/or Yamaha’s licensor(s), and is protected by relevant copyright laws and all applicable treaty provisions. While you are entitled to claim ownership of the data created with the use of SOFTWARE, the SOFTWARE will continue to be protected under relevant copyrights. • You may use the SOFTWARE on your computer(s). • You may make one copy of the SOFTWARE in machine-readable form for backup purposes only, if the SOFTWARE is on media where such backup copy is permitted. On the backup copy, you must reproduce Yamaha's copyright notice and any other proprietary legends that were on the original copy of the SOFTWARE. -

Aaltomanual1.5.Pdf

Making and organizing sounds with AALTO: A comprehensive guide to signals, scribbles and patching, by Madrona Labs. This manual is released under the Creative Commons Attribution 3.0 Unported License. You may copy, distribute, transmit and adapt it, for any purpose, provided you include the following attribution: Aalto and the Aalto manual by Madrona Labs. http://madronalabs.com. Version 1.5, February 2014. Written by George Cochrane and Randy Jones. Illustrated by David Chandler. Typeset in Adobe Minion using the TeX document processing system. Any trademarks mentioned are the sole property of their respective owners. Such mention does not imply any endorsement of or association with Madrona Labs. Introduction: What is Aalto? It’s tempting to think of Aalto as a mere software synthesizer, yet another sound source, huddling amongst the teeming masses of such instruments that lurk within the menus of your favorite audio program. However, that would be doing it a disservice, for Aalto is, we think, really special. We like to think of it as a carefully crafted box of sonic tools, few enough to learn easily, but flexible enough to combine in surprisingly powerful ways. Of course, it’s also just a good everyday instrument, if that’s what you want. Aalto, like many modular synthesizers, comes stocked with oscillators, filters, envelope generators, and goodies such as a waveshaper section and an especially nice one-knob reverb. Aalto’s twist (at least, the one we chortle about as we sip vintage armagnac in our secret lair halfway up the Space Needle,) is that thanks to the unique patching interface, making your own sounds with Aalto, even complicated ones, need not be a chore. -

2016-Program-Book-Corrected.Pdf

A flagship project of the New York Philharmonic, the NY PHIL BIENNIAL is a wide-ranging exploration of today’s music that brings together an international roster of composers, performers, and curatorial voices for concerts presented both on the Lincoln Center campus and with partners in venues throughout the city. The second NY PHIL BIENNIAL, taking place May 23–June 11, 2016, features diverse programs, ranging from solo works and a chamber opera to large scale symphonies, by more than 100 composers, more than half of whom are American; presents some of the country’s top music schools and youth choruses; and expands to more New York City neighborhoods. A range of events and activities has been created to engender an ongoing dialogue among artists, composers, and audience members. Partners in the 2016 NY PHIL BIENNIAL include National Sawdust; 92nd Street Y; Aspen Music Festival and School; Interlochen Center for the Arts; League of Composers/ISCM; Lincoln Center for the Performing Arts; LUCERNE FESTIVAL; MetLiveArts; New York City Electroacoustic Music Festival; Whitney Museum of American Art; WQXR’s Q2 Music; and Yale School of Music. Major support for the NY PHIL BIENNIAL is provided by The Andrew W. Mellon Foundation, The Fan Fox and Leslie R. Samuels Foundation, and The Francis Goelet Fund. Additional funding is provided by the Howard Gilman Foundation and Honey M. Kurtz. New York City Electroacoustic Music Festival, June 5-7, 2016 and June 13-19, 2016. www.nycemf.org. Contents: Acknowledgements; Director’s Welcome; Locations; Festival Schedule; Committee & Staff; Programs and Notes; Installations; Presentations; Composers; Performers. Acknowledgements: The New York Philharmonic Orchestra; The Amphion Foundation. Director’s Welcome: Welcome to NYCEMF 2016! Locations: National Sawdust, 80 North Sixth Street, Brooklyn, NY 11249, corner of Sixth Street and Wythe Avenue. -

Pdf Nord Modular

Table of Contents 1 Introduction 1.1 The Purpose of this Document 1.2 Acknowledgements 2 Oscillator Waveform Modification 2.1 Sync 2.2 Frequency Modulation Techniques 2.3 Wave Shaping 2.4 Vector Synthesis 2.5 Wave Sequencing 2.6 Audio-Rate Crossfading 2.7 Wave Terrain Synthesis 2.8 VOSIM 2.9 FOF Synthesis 2.10 Granular Synthesis 3 Filter Techniques 3.1 Resonant Filters as Oscillators 3.2 Serial and Parallel Filter Techniques 3.3 Audio-Rate Filter Cutoff Modulation 3.4 Adding Analog Feel 3.5 Wet Filters 4 Noise Generation 4.1 White Noise 4.2 Brown Noise 4.3 Pink Noise 4.4 Pitched Noise 5 Percussion 5.1 Bass Drum Synthesis 5.2 Snare Drum Synthesis 5.3 Synthesis of Gongs, Bells and Cymbals 5.4 Synthesis of Hand Claps 6 Additive Synthesis 6.1 What is Additive Synthesis? 6.2 Resynthesis 6.3 Group Additive Synthesis 6.4 Morphing 6.5 Transients 6.7 Which Oscillator to Use 7 Physical Modeling 7.1 Introduction to Physical Modeling 7.2 The Karplus-Strong Algorithm 7.3 Tuning of Delay Lines 7.4 Delay Line Details 7.5 Physical Modeling with Digital Waveguides 7.6 String Modeling 7.7 Woodwind Modeling 7.8 Related Links 8 Speech Synthesis and Processing 8.1 Vocoder Techniques 8.2 Speech Synthesis 8.3 Pitch Tracking 9 Using the Logic Modules 9.1 Complex Logic Functions 9.2 Flipflops, Counters other Sequential Elements 9.3 Asynchronous Elements 9.4 Arpeggiation 10 Algorithmic Composition 10.1 Chaos and Fractal Music 10.2 Cellular Automata 10.3 Cooking Noodles 11 Reverb and Echo Effects 11.1 Synthetic Echo and Reverb 11.2 Short-Time Reverb 11.3 Low-Fidelity -

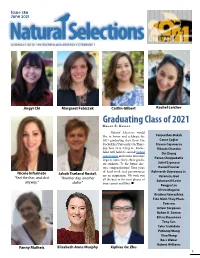

Graduating Class of 2021

Issue !"# June 2021. A NEWSLETTER OF THE ROCKEFELLER UNIVERSITY COMMUNITY. Graduating Class of 2021, by Megan E. Kelley. Natural Selections would like to honor and celebrate the 2021 graduating class from The Rockefeller University. On Thursday, June 10 at 2:30 p.m., Rockefeller will hold its second virtual convocation and confer doctorate degrees upon thirty-three graduate students. To the future doctors, congratulations! Your years of hard work and perseverance are an inspiration. We wish you all the best in the next phases of your careers and lives. Names listed: Jingyi Chi, Margaret Fabiszak, Caitlin Gilbert, Rachel Leicher, Sanjeethan Baksh, Caner Çağlar, Steven Cajamarca, Vikram Chandra, Du Cheng, Pavan Choppakatla, Juliel Espinosa, Daniel Firester, Rohiverth Guarecuco Jr., Nicole Infarinato, Jakob Træland Rostøl, Veronica Jové, Solomon N Levin, Fangyu Liu, Olivia Maguire, Kristina Navrazhina, Tiên Minh Thủy Phan-Everson, Artem Serganov, Rohan R. Soman, Elitsa Stoyanova, Tony Sun, Taku Tsukidate, Putianqi Wang, Xiao Wang, Ross Weber, Robert Williams, Fanny Matheis, Elisabeth Anne Murphy, Xiphias Ge Zhu. Photo captions: “Feel the fear, and do it anyway.” “Another day, another dollar”. Editorial Board: Megan Elizabeth Kelley, Editor-in-Chief and Managing Editor, Communication; Anna Amelianchik, Associate Editor; Nicole Infarinato, Editorial Assistant, Copy Editor, Distribution; Evan Davis, Copy Editor, Photographer-in-Residence; Melissa Jarmel, Copy Editor, Website Traffic; Audrey Goldfarb, Copy Editor, Webmaster, Website Design; Jennifer Einstein, Emma Garst, Copy Editors. Sisters with Transistors, by Lana Norris (photo courtesy of Metrograph Pictures). Women have often been overlooked in the history of electronic music. Their mastery of new technology and alien ... Barron built her own circuitry, and Laurie Spiegel programmed compositional software for Macs. -

»Musik und Kosmos«

ACHT BRÜCKEN: »Musik und Kosmos« (Music and Cosmos). Line-up 2020. 11 days, 46 events, 14 world premieres. Over 65 hours of new music, electronic music, jazz, world music, everything in between and beyond. Ticket purchase: online at achtbruecken.de, around the clock until two hours before the concert begins (concerts in the Kölner Philharmonie) or until the day before (all other venues). You can also print your online-booked ticket yourself at home via Print@Home. [Map of ACHT BRÜCKEN venues] -

An Application of Max/MSP in the Field of Live Electro-Acoustic Music

An Application of Max/MSP in the Field of Live Electro-Acoustic Music: A Case Study. Oles Protsidym, Faculty of Music, McGill University, Montreal, August 1999. Thesis submitted to the Faculty of Graduate Studies and Research in partial fulfillment of the requirements for the degree of Master of Arts in Computer Applications in Music. © Oles Protsidym, 1999. The author has granted a non-exclusive licence allowing the National Library of Canada to reproduce, loan, distribute or sell copies of this thesis in microform, paper or electronic formats. The author retains ownership of the copyright in this thesis. Neither the thesis nor substantial extracts from it may be printed or otherwise reproduced without the author's permission. For many years the integration of real-time digital audio signal processing with dynamic control has been one of the most significant issues facing live electro-acoustic music. While real-time electroacoustic music systems began to appear as early as the 1970's, for approximately two decades a lack of portability and prohibitive cost restricted their availability to a limited number of electronic music studios and research centers. The rapid development of computer and music technologies in the 1990's enabled desktop-based real-time digital audio signal processing, making it available for general use.