Introduction to Graphics Software Development for OMAP™ 2/3

Texas Instruments, February 2008

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

GLSL 4.50 Spec

The OpenGL® Shading Language

Language Version: 4.50
Document Revision: 7
09-May-2017
Editor: John Kessenich, Google
Version 1.1 Authors: John Kessenich, Dave Baldwin, Randi Rost

Copyright (c) 2008-2017 The Khronos Group Inc. All Rights Reserved.

This specification is protected by copyright laws and contains material proprietary to the Khronos Group, Inc. It or any components may not be reproduced, republished, distributed, transmitted, displayed, broadcast, or otherwise exploited in any manner without the express prior written permission of Khronos Group. You may use this specification for implementing the functionality therein, without altering or removing any trademark, copyright or other notice from the specification, but the receipt or possession of this specification does not convey any rights to reproduce, disclose, or distribute its contents, or to manufacture, use, or sell anything that it may describe, in whole or in part.

Khronos Group grants express permission to any current Promoter, Contributor or Adopter member of Khronos to copy and redistribute UNMODIFIED versions of this specification in any fashion, provided that NO CHARGE is made for the specification and the latest available update of the specification for any version of the API is used whenever possible. Such distributed specification may be reformatted AS LONG AS the contents of the specification are not changed in any way. The specification may be incorporated into a product that is sold as long as such product includes significant independent work developed by the seller. A link to the current version of this specification on the Khronos Group website should be included whenever possible with specification distributions.

3D Graphics Fundamentals

Chapter 11: 3D Graphics Fundamentals

This chapter covers the basics of 3D graphics. You will learn the basic concepts so that you are at least aware of the key points in 3D programming. However, this chapter will not go into great detail on 3D mathematics or graphics theory, which are far too advanced for this book. What you will learn instead is the practical implementation of 3D in order to write simple 3D games. You will get just exactly what you need to write a simple 3D game without getting bogged down in theory. If you have questions about how matrix math works and about how 3D rendering is done, you might want to use this chapter as a starting point and then go on and read a book such as Beginning Direct3D Game Programming, by Wolfgang Engel (Course PTR). The goal of this chapter is to provide you with a set of reusable functions that can be used to develop 3D games.

Here is what you will learn in this chapter:

- How to create and use vertices.
- How to manipulate polygons.
- How to create a textured polygon.
- How to create a cube and rotate it.

Introduction to 3D Programming

It’s a foregone conclusion today that everyone has a 3D accelerated video card. Even the low-end budget video cards are equipped with a 3D graphics processing unit (GPU) that would be impressive were it not for all the competition in this market pushing out more and more polygons and new features every year.

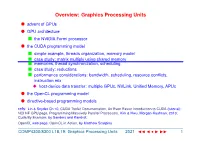

Overview: Graphics Processing Units

Overview: Graphics Processing Units

- advent of GPUs
- GPU architecture
  - the NVIDIA Fermi processor
- the CUDA programming model
  - simple example, threads organization, memory model
  - case study: matrix multiply using shared memory
  - memories, thread synchronization, scheduling
  - case study: reductions
  - performance considerations: bandwidth, scheduling, resource conflicts, instruction mix
    - host-device data transfer: multiple GPUs, NVLink, Unified Memory, APUs
- the OpenCL programming model
- directive-based programming models

refs: Lin & Snyder Ch 10, CUDA Toolkit Documentation, An Even Easier Introduction to CUDA (tutorial); NCI NF GPU page, Programming Massively Parallel Processors, Kirk & Hwu, Morgan Kaufmann, 2010; CUDA by Example, by Sanders and Kandrot; OpenCL web page, OpenCL in Action, by Matthew Scarpino

COMP4300/8300 L18,19: Graphics Processing Units 2021

Advent of General-purpose Graphics Processing Units

- many applications have massive amounts of mostly independent calculations
  - e.g. ray tracing, image rendering, matrix computations, molecular simulations, HDTV
  - can be largely expressed in terms of SIMD operations
    - implementable with minimal control logic & caches, simple instruction sets
- design point: maximize number of ALUs & FPUs and memory bandwidth to take advantage of Moore’s Law (shown here)
  - put this on a co-processor (GPU); have a normal CPU to co-ordinate, run the operating system, launch applications, etc.
- architecture/infrastructure development requires a massive economic base for its development (the gaming industry!)
  - pre 2006: only specialized graphics operations (integer & float data)
  - 2006: ‘General Purpose’ (GPGPU): general computations but only through a graphics library (e.g.

Implementing FPGA Design with the OpenCL Standard

Implementing FPGA Design with the OpenCL Standard

WP-01173-3.0, White Paper

Utilizing the Khronos Group’s OpenCL™ standard on an FPGA may offer significantly higher performance at much lower power than is available today from hardware architectures such as CPUs, graphics processing units (GPUs), and digital signal processing (DSP) units. In addition, an FPGA-based heterogeneous system (CPU + FPGA) using the OpenCL standard has a significant time-to-market advantage compared to traditional FPGA development using lower level hardware description languages (HDLs) such as Verilog or VHDL.

OpenCL and the OpenCL logo are trademarks of Apple Inc. used by permission by Khronos.

Introduction

The initial era of programmable technologies contained two different extremes of programmability. As illustrated in Figure 1, one extreme was represented by single core CPU and digital signal processing (DSP) units. These devices were programmable using software consisting of a list of instructions to be executed. These instructions were created in a manner that was conceptually sequential to the programmer, although an advanced processor could reorder instructions to extract instruction-level parallelism from these sequential programs at run time. In contrast, the other extreme of programmable technology was represented by the FPGA. These devices are programmed by creating configurable hardware circuits, which execute completely in parallel. A designer using an FPGA is essentially creating a massively fine-grained parallel application. For many years, these extremes coexisted with each type of programmability being applied to different application domains. However, recent trends in technology scaling have favored technologies that are both programmable and parallel.

Figure 1.

Steep Parallax Mapping

I3D 2005 Posters Session

Steep Parallax Mapping

Morgan McGuire* (Brown University), Max McGuire (Iron Lore Entertainment)

Fig. 1: A single polygon with a high-frequency bump map. Panels: Bump Mapping, Parallax Mapping, Steep Parallax Mapping.

Fig. 2: The viewer perceives an intersection at (P) from shading, although the true intersection occurred at (I).

Abstract. We propose a new bump mapping scheme that can produce parallax, self-occlusion, and self-shadowing for arbitrary bump maps yet is efficient enough for games when implemented in a pixel shader and uses existing data formats.

Related Work

Let E and L be unit vectors from the eye and light in a local tangent space. At a pixel, let 2-vector s be the texture coordinate (i.e. where the eye ray hits the true, flat surface) and t be the coordinate of the texel where the ray would have hit the surface if it were actually displaced by the bump map. Shading is computed by Phong illumination, using the normal to the scalar bump map surface B at t and the color of the texture map at t.

We choose t to be the first t_i for which NB[t_i].α > 1.0 - i/n and then shade as with other bump mapping methods:

    float step = 1.0 / n;
    vec2 dt = E.xy * bumpScale / (n * E.z);
    float height = 1.0;
    vec2 t = texCoord.xy;
    vec4 nb = texture2DLod (NB, t, LOD);
    while (nb.a < height) {
        height -= step;
        t += dt;
        nb = texture2DLod (NB, t, LOD);
    }
    // ... Shade using N = nb.rgb
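
The marching loop in the excerpt above can be tried on the CPU. The following is only a sketch under stated assumptions, not the authors' shader: `steep_parallax_offset` and `height_at` are hypothetical names, the height lookup is an ordinary Python callable standing in for the alpha channel of the `NB` texture, and the sign and scale conventions simply mirror the excerpt's `dt` expression.

```python
import math


def steep_parallax_offset(height_at, s, eye, bump_scale=0.05, n=16):
    """Step from texture coordinate s until the stored height reaches the ray height.

    height_at  -- hypothetical callable mapping (u, v) to a height in [0, 1]
    s          -- starting texture coordinate (u, v)
    eye        -- tangent-space unit vector E as (x, y, z); z must be nonzero
    bump_scale -- depth of the bump map in texture units (assumed value)
    n          -- number of linear steps
    """
    ex, ey, ez = eye
    step = 1.0 / n
    # Texture-space offset per step, as in the excerpt: E.xy * bumpScale / (n * E.z)
    dt = (ex * bump_scale / (n * ez), ey * bump_scale / (n * ez))
    height = 1.0
    t = s
    h = height_at(t)
    # Lower the ray one layer at a time until the height field is at or above it.
    while h < height:
        height -= step
        t = (t[0] + dt[0], t[1] + dt[1])
        h = height_at(t)
    return t


if __name__ == "__main__":
    # Assumed test surface: a sine ridge pattern with heights in [0, 1].
    ridges = lambda t: 0.5 + 0.5 * math.sin(40.0 * t[0])
    print(steep_parallax_offset(ridges, (0.5, 0.5), (0.6, 0.0, 0.8)))
```

Because the ray height drops by `1/n` per iteration and the height field is assumed non-negative, the loop ends after roughly `n` steps at most. A larger `n` gives a finer search at the cost of more lookups.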

Multi-User Game Development

California State University, San Bernardino
CSUSB ScholarWorks
Theses Digitization Project, John M. Pfau Library, 2007

Multi-user game development
Cheng-Yu Hung

Follow this and additional works at: https://scholarworks.lib.csusb.edu/etd-project
Part of the Software Engineering Commons

Recommended Citation
Hung, Cheng-Yu, "Multi-user game development" (2007). Theses Digitization Project. 3122. https://scholarworks.lib.csusb.edu/etd-project/3122

This Project is brought to you for free and open access by the John M. Pfau Library at CSUSB ScholarWorks. It has been accepted for inclusion in Theses Digitization Project by an authorized administrator of CSUSB ScholarWorks. For more information, please contact [email protected].

MULTI-USER GAME DEVELOPMENT

A Project Presented to the Faculty of California State University, San Bernardino, in Partial Fulfillment of the Requirements for the Degree Master of Science in Computer Science, by Cheng-Yu Hung, June 2007

Approved by: Dr. David Turner, Chair, Computer Science

ABSTRACT

In the current game market, the 3D multi-user game is the most popular game. To develop a successful 3D multi-user game, we need 2D artists, 3D artists and programmers to work together and use tools to author the game and a game engine to perform the game. Most of this project is about the 3D model development using tools such as Blender, and integration of the 3D models with a level editor and game engine.

Comparison of Technologies for General-Purpose Computing on Graphics Processing Units

Master of Science Thesis in Information Coding
Department of Electrical Engineering, Linköping University, 2016

Comparison of Technologies for General-Purpose Computing on Graphics Processing Units
Torbjörn Sörman

LiTH-ISY-EX–16/4923–SE

Supervisor: Robert Forchheimer, isy, Linköpings universitet; Åsa Detterfelt, MindRoad AB
Examiner: Ingemar Ragnemalm, isy, Linköpings universitet

Organizational division, Department of Electrical Engineering, Linköping University, SE-581 83 Linköping, Sweden

Copyright © 2016 Torbjörn Sörman

Abstract

The computational capacity of graphics cards for general-purpose computing has progressed fast over the last decade. A major reason is computationally heavy computer games, where the standard of performance and high-quality graphics constantly rises. Another reason is better suited technologies for programming the graphics cards. Combined, the product is high raw performance devices and means to access that performance. This thesis investigates some of the current technologies for general-purpose computing on graphics processing units. Technologies are primarily compared by means of benchmarking performance and secondarily by factors concerning programming and implementation. The choice of technology can have a large impact on performance. The benchmark application found the difference in execution time of the fastest technology, CUDA, compared to the slowest, OpenCL, to be twice a factor of two. The benchmark application also found that the older technologies, OpenGL and DirectX, are competitive with CUDA and OpenCL in terms of resulting raw performance.

Acknowledgments

I would like to thank Åsa Detterfelt for the opportunity to make this thesis work at MindRoad AB.

gScale: Scaling up GPU Virtualization with Dynamic Sharing of Graphics Memory Space

gScale: Scaling up GPU Virtualization with Dynamic Sharing of Graphics Memory Space

Mochi Xue, Shanghai Jiao Tong University and Intel Corporation; Kun Tian, Intel Corporation; Yaozu Dong, Shanghai Jiao Tong University and Intel Corporation; Jiacheng Ma, Jiajun Wang, and Zhengwei Qi, Shanghai Jiao Tong University; Bingsheng He, National University of Singapore; Haibing Guan, Shanghai Jiao Tong University

{xuemochi, mjc0608, jiajunwang, qizhenwei, hbguan}@sjtu.edu.cn {kevin.tian, eddie.dong}@intel.com [email protected]

https://www.usenix.org/conference/atc16/technical-sessions/presentation/xue

This paper is included in the Proceedings of the 2016 USENIX Annual Technical Conference (USENIX ATC ’16). June 22–24, 2016 • Denver, CO, USA. 978-1-931971-30-0. Open access to the Proceedings of the 2016 USENIX Annual Technical Conference (USENIX ATC ’16) is sponsored by USENIX.

Abstract

With increasing GPU-intensive workloads deployed on cloud, the cloud service providers are seeking for practical and efficient GPU virtualization solutions. However, the cutting-edge GPU virtualization techniques such as gVirt still suffer from the restriction of scalability, which constrains the number of guest virtual GPU instances.

As one of the key enabling technologies of GPU cloud, GPU virtualization is intended to provide flexible and scalable GPU resources for multiple instances with high performance. To achieve such a challenging goal, several GPU virtualization solutions were introduced, i.e., GPUvm [28] and gVirt [30]. gVirt, also known as GVT-g, is a full virtualization solution with mediated pass-

Khronos Template 2015

Ecosystem Overview

Neil Trevett | Khronos President, NVIDIA Vice President Developer Ecosystem
[email protected] | @neilt3d
© Copyright Khronos Group 2016

Khronos Mission (diagram labels: Software, Silicon)

Khronos is an Industry Consortium of over 100 companies creating royalty-free, open standard APIs to enable software to access hardware acceleration for graphics, parallel compute and vision

http://accelerateyourworld.org/

Vision Pipeline Challenges and Opportunities

- Growing Camera Diversity: flexible sensor and camera control to GENERATE an image stream
- Diverse Vision Processors: use efficient acceleration to PROCESS the image stream
- Sensor Proliferation: combine vision output with other sensor data on device

OpenVX – Low Power Vision Acceleration

- Higher level abstraction API
  - Targeted at real-time mobile and embedded platforms
- Performance portability across diverse architectures
  - Multi-core CPUs, GPUs, DSPs and DSP arrays, ISPs, Dedicated hardware…
- Extends portable vision acceleration to very low power domains
  - Doesn’t require high-power CPU/GPU Complex
  - Lower precision requirements than OpenCL
  - Low-power host can setup and manage frame-rate graph

[Figure: power efficiency (X1, X10, X100) against computation flexibility. Labels: Multi-core CPU, GPU Compute, Vision DSPs, Dedicated Hardware; Vision Processing Efficiency; Vision Engine, Middleware, Application.]

OpenVX Graphs

Texture Mapping

Texture Mapping

Slides from Rosalee Wolfe, DePaul University
http://www.siggraph.org/education/materials/HyperGraph/mapping/r_wolfe/r_wolfe_mapping_1.htm

1. Mapping techniques add realism and interest to computer graphics images. Texture mapping applies a pattern of color to an object. Bump mapping alters the surface of an object so that it appears rough, dented or pitted. In this example, the umbrella, background, beachball and beach blanket have texture maps. The sand has been bump mapped. These and other mapping techniques are the subject of this slide set.

2. When creating image detail, it is cheaper to employ mapping techniques than it is to use myriads of tiny polygons. The image on the right portrays a brick wall, a lawn and the sky. In actuality the wall was modeled as a rectangular solid, and the lawn and the sky were created from rectangles. The entire image contains eight polygons. Imagine the number of polygons it would require to model the blades of grass in the lawn! Texture mapping creates the appearance of grass without the cost of rendering thousands of polygons.

3. Knowing the difference between world coordinates and object coordinates is important when using mapping techniques. In object coordinates the origin and coordinate axes remain fixed relative to an object no matter how the object’s position and orientation change. Most mapping techniques use object coordinates. Normally, if a teapot’s spout is painted yellow, the spout should remain yellow as the teapot flies and tumbles through space. When using world coordinates, the pattern shifts on the object as the object moves through space.
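
The object-versus-world distinction in slide 3 can be illustrated with a small procedural texture. This is a minimal sketch under assumptions that are not part of the slides: a 3D checker pattern stands in for the texture, the object only translates (no rotation), and `checker`, `to_world` and `colours_along_path` are hypothetical helper names.

```python
import math


def checker(p, size=1.0):
    """Return 0 or 1 for a 3D checker pattern evaluated at point p."""
    x, y, z = p
    return (math.floor(x / size) + math.floor(y / size) + math.floor(z / size)) % 2


def to_world(p_obj, translation):
    """Move an object-space point into world space (translation only, by assumption)."""
    return tuple(a + b for a, b in zip(p_obj, translation))


def colours_along_path(p_obj, offsets, use_object_coords):
    """Texture value at one fixed surface point as the object moves through the offsets."""
    colours = []
    for offset in offsets:
        lookup = p_obj if use_object_coords else to_world(p_obj, offset)
        colours.append(checker(lookup))
    return colours


if __name__ == "__main__":
    spout = (0.25, 0.25, 0.25)  # a point that stays fixed on the object
    path = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.5, 0.0, 0.0)]
    print("object coordinates:", colours_along_path(spout, path, True))
    print("world coordinates: ", colours_along_path(spout, path, False))
```

With object coordinates the lookup point never changes, so the value at the spout stays constant along the path. With world coordinates the lookup point moves with the object, so the pattern can change under the same surface point.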

User Guide: Contents

USER GUIDE

CONTENTS

- Overview
- System requirements
- Installation
- Workflow
- Interface
  - Tool Panel
  - Texture panel
- Tools
  - Poly Lasso Tool
  - Poly Lasso tool actions
  - Construction plane

Game Developers Conference Europe Wrap, New Women’s Group Forms, Licensed to Steal Super Genre Break Out, and More

THE LEADING GAME INDUSTRY MAGAZINE, OCTOBER 2005

>> PRODUCT REVIEWS: SPEEDTREE RT 1.7 * SPACEPILOT
>> POSTMORTEM: ART & ARTIFICE IN RESIDENT EVIL 4
>> WALKING THE PLANK: DANIEL JAMES ON CASUAL MMO GOLD
>> INNER PRODUCT: DEBUG? RELEASE? LET’S DEVELOP!

Thanks to our publishers for helping us create a new world of video games. GameTap™ and many of the video game industry’s leading publishers have joined together to create a new world where you can play hundreds of the greatest games right from your broadband-connected PC. It’s gaming freedom like never before. START PLAYING AT GAMETAP.COM. TM & © 2005 Turner Broadcasting System, Inc. A Time Warner Company. Patent Pending. All Rights Reserved.

CONTENTS

OCTOBER 2005, VOLUME 12, NUMBER 9

FEATURES

TOP 20 PUBLISHERS
Who’s the top dog on the publishing block? Ranked by their revenues, the quality of the games they release, developer ratings, and other factors pertinent to serious professionals, our annual Top 20 list calls attention to the definitive movers and shakers in the publishing world.
By Tristan Donovan

INTERVIEW: A PIRATE’S LIFE
What do pirates, cowboys, and massively multiplayer online games have in common? They all have Daniel James on their side. CEO of Three Rings, James’ mission has been to create an addictive MMO (or two) that has the pick-up-put-down rhythm of a casual game. In this interview, James discusses the barriers to distributing and charging for such games, the beauty of the web, and the trouble with executables.