CPU and GPU Co-Processing for Sound

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


ATI Radeon™ HD 4870 Computation Highlights

AMD Entering the Golden Age of Heterogeneous Computing Michael Mantor Senior GPU Compute Architect / Fellow AMD Graphics Product Group [email protected] 1 The 4 Pillars of massively parallel compute offload • Performance Moore’s Law → 2x < 18 Months Frequency\Power\Complexity Wall • Power Parallel → Opportunity for growth • Price • Programming Models GPU is the first successful massively parallel COMMODITY architecture with a programming model that managed to tame 1000’s of parallel threads in hardware to perform useful work efficiently 2 Quick recap of where we are – Perf, Power, Price ATI Radeon™ HD 4850 4x Performance/w and Performance/mm² in a year ATI Radeon™ X1800 XT ATI Radeon™ HD 3850 ATI Radeon™ HD 2900 XT ATI Radeon™ X1900 XTX ATI Radeon™ X1950 PRO 3 Source of GigaFLOPS per watt: maximum theoretical performance divided by maximum board power. Source of GigaFLOPS per $: maximum theoretical performance divided by price as reported on www.buy.com as of 9/24/08 ATI Radeon™ HD 4850 Designed to Perform in Single Slot SP Compute Power: 1.0 T-FLOPS; DP Compute Power: 200 G-FLOPS; Core Clock Speed: 625 MHz; Stream Processors: 800; Memory Type: GDDR3; Memory Capacity: 512 MB; Max Board Power: 110 W; Memory Bandwidth: 64 GB/Sec 4 ATI Radeon™ HD 4870 First Graphics with GDDR5 SP Compute Power: 1.2 T-FLOPS; DP Compute Power: 240 G-FLOPS; Core Clock Speed: 750 MHz; Stream Processors: 800; Memory Type: GDDR5 3.6Gbps; Memory Capacity: 512 MB; Max Board Power: 160 W; Memory Bandwidth: 115.2 GB/Sec 5 ATI Radeon™ HD 4870 X2 Incredible Balance of Performance, Power, Price -
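The excerpt defines GigaFLOPS per watt as maximum theoretical performance divided by maximum board power. A minimal sketch of that arithmetic for the two cards whose figures are quoted follows. The function names are hypothetical, and the factor of two floating-point operations per stream processor per clock (one multiply-add) is an assumption that the excerpt does not state; it is included only because it reproduces the quoted single-precision peaks.

```python
# Figures quoted in the slide excerpt (single-precision peak, max board power).
CARDS = {
    "ATI Radeon HD 4850": {
        "stream_processors": 800, "core_clock_mhz": 625,
        "sp_tflops": 1.0, "board_power_w": 110,
    },
    "ATI Radeon HD 4870": {
        "stream_processors": 800, "core_clock_mhz": 750,
        "sp_tflops": 1.2, "board_power_w": 160,
    },
}

# Assumption (not stated in the excerpt): each stream processor retires one
# multiply-add per clock, counted as 2 floating-point operations.
FLOPS_PER_SP_PER_CLOCK = 2


def peak_sp_gflops(stream_processors, core_clock_mhz):
    """Theoretical single-precision peak in GFLOPS under the assumption above."""
    return stream_processors * core_clock_mhz * FLOPS_PER_SP_PER_CLOCK / 1000.0


def gflops_per_watt(sp_tflops, board_power_w):
    """Peak GFLOPS divided by maximum board power, as the excerpt defines it."""
    return sp_tflops * 1000.0 / board_power_w


if __name__ == "__main__":
    for name, spec in CARDS.items():
        peak = peak_sp_gflops(spec["stream_processors"], spec["core_clock_mhz"])
        eff = gflops_per_watt(spec["sp_tflops"], spec["board_power_w"])
        print(f"{name}: {peak:.0f} GFLOPS peak, {eff:.2f} GFLOPS/W")
```

By this measure the HD 4850 comes out ahead of the HD 4870 on efficiency, because board power rises faster than peak throughput between the two cards.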

An Evolution of Mobile Graphics

AN EVOLUTION OF MOBILE GRAPHICS Michael C. Shebanow Vice President, Advanced Processor Lab Samsung Electronics July 20, 2013 1 DISCLAIMER • The views herein are my own • They do not represent Samsung’s vision nor product plans 2 • The Mobile Market • Review of GPU Tech • GPU Efficiency • User Experience • Tech Challenges • Summary 3 The Rise of the Mobile GPU & Connectivity A NEW WORLD COMING? 4 DISCRETE GPU MARKET Flattening 5 MOBILE GPU MARKET (Smart Phones, “Phablets”, Tablets) • In 2012, an estimated 800+ million mobile GPUs shipped • ~123M tablets • ~712M smart phones • Will easily exceed 1B in the coming years • Trend: • Discrete GPU relatively flat • Mobile is growing rapidly 6 WW INTERNET TRAFFIC • Source: Cisco VNI • Internet traffic growth rate is staggering • 2012 total traffic is 13.7 GB per person per month • 2012 smart phone traffic at 0.342 GB per person per month • 2017 smart phone traffic expected at 2.7 GB per person per month Table (Year / IP Traffic in TB/sec / growth rate / Mobile INET Traffic in TB/sec): 2005 / 0.9 / (none) / 0.00; 2006 / 1.5 / 65% / 0.00; 2007 / 2.5 / 61% / 0.01; 2008 / 3.8 / 54% / 0.01; 2009 / 5.6 / 45% / 0.04; 2010 / 7.8 / 40% / 0.10; 2011 / 10.6 / 36% / 0.23; 2012 / 12.4 / 17% / 0.34 7 WHERE ARE WE HEADED?… • Enormous quantity of GPUs • Large amount of interconnectivity • Better I/O 8 GPU Pipelines A BRIEF REVIEW OF GPU TECH 9 MOBILE GPU PIPELINE ARCHITECTURES Tile-based immediate mode rendering (TBIMR): IA VS CCV RS PS ROP. Tile-based deferred scene rendering (TBDR): IA VS CCV, then RS PS ROP. IA = input assembler VS = vertex shader CCV = cull, clip, viewport transform RS = rasterization, -

NVIDIA Quadro P4000

NVIDIA Quadro P4000 Graphics Processor: GP104; Cores: 1792; TMUs: 112; ROPs: 64; Memory Size: 8192 MB; Memory Type: GDDR5; Bus Width: 256 bit. The Quadro P4000 is a professional graphics card by NVIDIA, launched in February 2017. Built on the 16 nm process, and based on the GP104 graphics processor, the card supports DirectX 12.0. The GP104 graphics processor is a large chip with a die area of 314 mm² and 7,200 million transistors. Unlike the fully unlocked GeForce GTX 1080, which uses the same GPU but has all 2560 shaders enabled, NVIDIA has disabled some shading units on the Quadro P4000 to reach the product's target shader count. It features 1792 shading units, 112 texture mapping units and 64 ROPs. NVIDIA has placed 8,192 MB of GDDR5 memory on the card, which is connected using a 256-bit memory interface. The GPU operates at a frequency of 1227 MHz, which can be boosted up to 1480 MHz; the memory runs at 1502 MHz. We recommend the NVIDIA Quadro P4000 for gaming with highest details at resolutions up to, and including, 5760x1080. Being a single-slot card, the NVIDIA Quadro P4000 draws power from 1x 6-pin power connector, with power draw rated at 105 W maximum. Display outputs include: 4x DisplayPort. Quadro P4000 is connected to the rest of the system using a PCIe 3.0 x16 interface. The card measures 241 mm in length, and features a single-slot cooling solution. Graphics Card: Released: Feb 6th, 2017; Production Status: Active; Bus Interface: PCIe 3.0 x16. Graphics Processor: GPU Name: GP104; Architecture: Pascal; Process Size: 16 nm; Transistors: 7,200 -

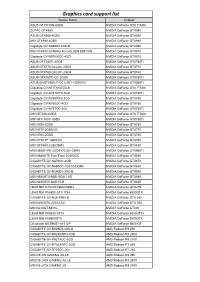

Graphics Card Support List

Graphics card support list Device Name Chipset ASUS GTXTITAN-6GD5 NVIDIA GeForce GTX TITAN ZOTAC GTX980 NVIDIA GeForce GTX980 ASUS GTX980-4GD5 NVIDIA GeForce GTX980 MSI GTX980-4GD5 NVIDIA GeForce GTX980 Gigabyte GV-N980D5-4GD-B NVIDIA GeForce GTX980 MSI GTX970 GAMING 4G GOLDEN EDITION NVIDIA GeForce GTX970 Gigabyte GV-N970IXOC-4GD NVIDIA GeForce GTX970 ASUS GTX780TI-3GD5 NVIDIA GeForce GTX780Ti ASUS GTX770-DC2OC-2GD5 NVIDIA GeForce GTX770 ASUS GTX760-DC2OC-2GD5 NVIDIA GeForce GTX760 ASUS GTX750TI-OC-2GD5 NVIDIA GeForce GTX750Ti ASUS ENGTX560-Ti-DCII/2D1-1GD5/1G NVIDIA GeForce GTX560Ti Gigabyte GV-NTITAN-6GD-B NVIDIA GeForce GTX TITAN Gigabyte GV-N78TWF3-3GD NVIDIA GeForce GTX780Ti Gigabyte GV-N780WF3-3GD NVIDIA GeForce GTX780 Gigabyte GV-N760OC-4GD NVIDIA GeForce GTX760 Gigabyte GV-N75TOC-2GI NVIDIA GeForce GTX750Ti MSI NTITAN-6GD5 NVIDIA GeForce GTX TITAN MSI GTX 780Ti 3GD5 NVIDIA GeForce GTX780Ti MSI N780-3GD5 NVIDIA GeForce GTX780 MSI N770-2GD5/OC NVIDIA GeForce GTX770 MSI N760-2GD5 NVIDIA GeForce GTX760 MSI N750 TF 1GD5/OC NVIDIA GeForce GTX750 MSI GTX680-2GB/DDR5 NVIDIA GeForce GTX680 MSI N660Ti-PE-2GD5-OC/2G-DDR5 NVIDIA GeForce GTX660Ti MSI N680GTX Twin Frozr 2GD5/OC NVIDIA GeForce GTX680 GIGABYTE GV-N670OC-2GD NVIDIA GeForce GTX670 GIGABYTE GV-N650OC-1GI/1G-DDR5 NVIDIA GeForce GTX650 GIGABYTE GV-N590D5-3GD-B NVIDIA GeForce GTX590 MSI N580GTX-M2D15D5/1.5G NVIDIA GeForce GTX580 MSI N465GTX-M2D1G-B NVIDIA GeForce GTX465 LEADTEK GTX275/896M-DDR3 NVIDIA GeForce GTX275 LEADTEK PX8800 GTX TDH NVIDIA GeForce 8800GTX GIGABYTE GV-N26-896H-B -

AMD Radeon E8860

Components for AMD’s Embedded Radeon™ E8860 GPU INTRODUCTION The E8860 Embedded Radeon GPU available from CoreAVI is comprised of temperature screened GPUs, safety certifiable OpenGL®-based drivers, and safety certifiable GPU tools which have been pre-integrated and validated together to significantly de-risk the challenges typically faced when integrating hardware and software components. The platform is an off-the-shelf foundation upon which safety certifiable applications can be built with confidence. Figure 1: CoreAVI Support for E8860 GPU EXTENDED TEMPERATURE RANGE CoreAVI provides extended temperature versions of the E8860 GPU to facilitate its use in rugged embedded applications. CoreAVI functionally tests the E8860 over -40C Tj to +105C Tj, increasing the manufacturing yield for hardware suppliers while reducing supply delays to end customers. coreavi.com [email protected] Revision - 13Nov2020 1 E8860 GPU LONG TERM SUPPLY AND SUPPORT CoreAVI has provided consistent and dedicated support for the supply and use of the AMD embedded GPUs within the rugged Mil/Aero/Avionics market segment for over a decade. With the E8860, CoreAVI will continue that focused support to ensure that the software, hardware and long-life support are provided to meet the needs of customers’ system life cycles. CoreAVI has extensive environmentally controlled storage facilities which are used to store the GPUs supplied to the Mil/Aero/Avionics marketplace, ensuring that a ready supply is available for the duration of any program. CoreAVI also provides the post Last Time Buy storage of GPUs and is often able to provide additional quantities of components when COTS hardware partners receive increased volume for existing products / systems requiring additional inventory. -

Graphics: Mesa, AMDVLK, Adreno and Protected Xe Path

Published on Tux Machines (http://www.tuxmachines.org) Graphics: Mesa, AMDVLK, Adreno and Protected Xe Path By Roy Schestowitz Created 08/02/2021 - 11:48pm Submitted by Roy Schestowitz on Monday 8th of February 2021 11:48:11 PM Filed under Graphics/Benchmarks [1] Panfrost Gallium3D Lands Its New Bifrost Scheduler In Mesa 21.1 - Phoronix[2] Hitting Mesa 21.1 this morning is a scheduler implementation for Panfrost Gallium3D, the open-source Arm Mali graphics driver. Lead Panfrost developer Alyssa Rosenzweig has been working to implement a scheduler in panfrost for the Arm Bifrost graphics code path. The scheduler has been in the works for a number of months and is passing the relevant conformance tests and has now been merged. AMDVLK 2021.Q1.3 Brings Performance Tuning For War Thunder - Phoronix[3] AMDVLK 2021.Q1.3 is out this morning as the latest snapshot of the official open-source AMD Radeon Vulkan driver for Linux systems that is derived from their shared platform driver sources. AMDVLK 2021.Q1.3 is on the lighter side with AMDVLK 2021.Q1.2 having arrived just over one week ago. Of the two listed driver changes, AMDVLK 2021.Q1.3 is rebuilt against the Vulkan API 1.2.168 headers. Freedreno's MSM DRM Driver Adds More Adreno Support, Speedbin Capability For Linux 5.12 - Phoronix[4] The MSM Direct Rendering Manager driver, originally developed as part of the Freedreno effort for open-source Qualcomm Adreno graphics on Linux and now supported by the likes of Google and Qualcomm's Code Aurora engineers, has some notable changes in store for the next Linux kernel cycle. -

Graphics Processing Units

Graphics Processing Units Graphics Processing Units (GPUs) are coprocessors that traditionally perform the rendering of 2-dimensional and 3-dimensional graphics information for display on a screen. In particular, computer games request more and more realistic real-time rendering of graphics data, and so GPUs became more and more powerful, highly parallel specialist computing units. It did not take long until programmers realized that this computational power can also be used for tasks other than computer graphics. For example, already in 1990 Lengyel, Reichert, Donald, and Greenberg used GPUs for real-time robot motion planning [43]. In 2003 Harris introduced the term general-purpose computations on GPUs (GPGPU) [28] for such non-graphics applications running on GPUs. At that time programming general-purpose computations on GPUs meant expressing all algorithms in terms of operations on graphics data, pixels and vectors. This was feasible for speed-critical small programs and for algorithms that operate on vectors of floating-point values in a similar way as graphics data is typically processed in the rendering pipeline. The programming paradigm shifted when the two main GPU manufacturers, NVIDIA and AMD, changed the hardware architecture from a dedicated graphics-rendering pipeline to a multi-core computing platform, implemented shader algorithms of the rendering pipeline in software running on these cores, and explicitly supported general-purpose computations on GPUs by offering programming languages and software-development toolchains. This chapter first gives an introduction to the architectures of these modern GPUs and the tools and languages to program them. Then it highlights several applications of GPUs related to information security with a focus on applications in cryptography and cryptanalysis. -

Radeon GPU Profiler Documentation

Radeon GPU Profiler Documentation Release 1.11.0 AMD Developer Tools Jul 21, 2021 Contents: 1 Graphics APIs, RDNA and GCN hardware, and operating systems; 2 Compute APIs, RDNA and GCN hardware, and operating systems; 3 Radeon GPU Profiler - Quick Start (3.1 How to generate a profile; 3.2 Starting the Radeon GPU Profiler; 3.3 How to load a profile; 3.4 The Radeon GPU Profiler user interface); 4 Settings (4.1 General; 4.2 Themes and colors; 4.3 Keyboard shortcuts; 4.4 UI Navigation); 5 Overview Windows (5.1 Frame summary (DX12 and Vulkan); 5.2 Profile summary (OpenCL); 5.3 Barriers; 5.4 Context rolls; 5.5 Most expensive events; 5.6 Render/depth targets; 5.7 Pipelines; 5.8 Device configuration); 6 Events Windows (6.1 Wavefront occupancy; 6.2 Event timing; 6.3 -

AI Chips: What They Are and Why They Matter

APRIL 2020 AI Chips: What They Are and Why They Matter An AI Chips Reference AUTHORS Saif M. Khan Alexander Mann Table of Contents: Introduction and Summary; The Laws of Chip Innovation; Transistor Shrinkage: Moore’s Law; Efficiency and Speed Improvements; Increasing Transistor Density Unlocks Improved Designs for Efficiency and Speed; Transistor Design is Reaching Fundamental Size Limits; The Slowing of Moore’s Law and the Decline of General-Purpose Chips; The Economies of Scale of General-Purpose Chips; Costs are Increasing Faster than the Semiconductor Market; The Semiconductor Industry’s Growth Rate is Unlikely to Increase; Chip Improvements as Moore’s Law Slows; Transistor Improvements Continue, but are Slowing; Improved Transistor Density Enables Specialization; The AI Chip Zoo; AI Chip Types; AI Chip Benchmarks; The Value of State-of-the-Art AI Chips; The Efficiency of State-of-the-Art AI Chips Translates into Cost-Effectiveness; Compute-Intensive AI Algorithms are Bottlenecked by Chip Costs and Speed; U.S. and Chinese AI Chips and Implications for National Competitiveness; Appendix A: Basics of Semiconductors and Chips; Appendix B: How AI Chips Work; Parallel Computing; Low-Precision Computing; Memory Optimization; Domain-Specific Languages; Appendix C: AI Chip Benchmarking Studies; Appendix D: Chip Economics Model; Chip Transistor Density, Design Costs, and Energy Costs; Foundry, Assembly, Test and Packaging Costs; Acknowledgments. Center for Security and Emerging Technology. Introduction and Summary Artificial intelligence will play an important role in national and international security in the years to come. -

External Graphics Under Windows 10

External Graphics under Windows 10 Thunderbolt 3 PCs with 64-bit Windows 10 External Graphics using AMD and NVIDIA graphics cards are supported on some, but not all Windows computers. a. Sonnet supports only Thunderbolt 3 Windows computers. (Thunderbolt 2 and Thunderbolt computers are not supported.) b. Sonnet supports only computers running 64-bit Windows 10. (32-bit Windows 10, Windows 7 and Linux are not supported.) 1. Check that your computer is compatible with external graphics. Sonnet has compiled a partial list. ASUS, Razer, and Intel report their compatible computers. Other listed computers may be Sonnet tested or user reported. If a listed computer has an optional discrete NVIDIA GPU, then it may not currently be compatible with external NVIDIA GTX graphics, even if the model is listed as compatible (these models should be compatible with AMD cards). Compatibility List Dell: Dell Latitude 7280, Dell XPS 13 9350, Dell XPS 13 9360, Dell XPS 13 9365, Dell XPS 15 9550, Dell XPS 15 9560. Acer: Acer Aspire R 13, Acer Aspire Switch 12, Acer Aspire V15 Nitro, Acer Aspire V17 Nitro. ASUS: ASUS ROG G501VW, ASUS ROG GL502VM, ASUS ROG G701VI, ASUS ROG GL702VM, ASUS ROG G752VL, ASUS ROG G752VM, ASUS ROG G752VS, ASUS ROG G752VT, ASUS ROG G752VY, ASUS ROG GX800VH, ASUS Transformer 3 Pro T303UA, ASUS Transformer 3 T305CA, ASUS UX501VW, ASUS ZenBook Pro UX490UA, ASUS ZenBook Pro UX501VW, ASUS ZenBook Pro UX550VD, ASUS ZenBook Pro UX550VE. Gigabyte: Aero 15. HP: HP EliteBook x360 G2, HP Spectre 13-ac076nr, HP Spectre 13-w063nr, HP Spectre 13-v011dx, HP Spectre x360, HP Spectre x360 (2nd Generation 2017), HP ZBook 15 G2 without dGPU, HP ZBook 15 G3 without dGPU, HP ZBook 17 G3 without dGPU, HP ZBook Studio G3, HP ZBook Studio G4. Intel: Intel NUC6i7KYK NUC, Intel NUC7i5BNH NUC, Intel NUC7i5BNK NUC, Intel NUC7i7BNH NUC. Razer: Razer Blade, Razer Blade Pro, Razer Blade Stealth 12.5", Razer Blade Stealth New 12.5" 4K, Razer Blade Stealth New (Late 2016). Lenovo: Lenovo ThinkPad X1 Carbon (5th Gen 2017). 2. -

Summarizing CPU and GPU Design Trends with Product Data

Summarizing CPU and GPU Design Trends with Product Data Yifan Sun, Nicolas Bohm Agostini, Shi Dong, and David Kaeli Northeastern University Email: fyifansun, agostini, shidong, [email protected] Abstract: Moore’s Law and Dennard Scaling have guided the semiconductor industry for the past few decades. Recently, both laws have faced validity challenges as transistor sizes approach the practical limits of physics. We are interested in testing the validity of these laws and reflect on the reasons responsible. In this work, we collect data of more than 4000 publicly-available CPU and GPU products. We find that transistor scaling remains critical in keeping the laws valid. However, architectural solutions have become increasingly important and will play a larger role in the future. We observe that GPUs consistently deliver higher performance than CPUs. GPU performance continues to rise because of increases in GPU frequency, improvements in the thermal design power (TDP), and growth in die size. But we also see the ratio of GPU to CPU performance moving closer to parity, thanks to new SIMD extensions on CPUs and increased CPU core counts. [...] products. Equipped with this data, we answer the following questions: • Are Moore’s Law and Dennard Scaling still valid? If so, what are the factors that keep the laws valid? • Do GPUs still have computing power advantages over CPUs? Is the computing capability gap between CPUs and GPUs getting larger? • What factors drive performance improvements in GPUs? II. METHODOLOGY We have collected data for all CPU and GPU products (to our best knowledge) that have been released by Intel, AMD (including the former ATI GPUs)1, and NVIDIA since January -

AMD Raven Ridge

DELIVERING A NEW LEVEL OF VISUAL PERFORMANCE IN AN SOC: AMD “RAVEN RIDGE” APU Dan Bouvier, Jim Gibney, Alex Branover, Sonu Arora Presented by: Dan Bouvier, Corporate VP, Client Products Chief Architect AMD CONFIDENTIAL RAISING THE BAR FOR THE APU VISUAL EXPERIENCE MOBILE APU GENERATIONAL PERFORMANCE GAINS: up to 200% more CPU performance, up to 128% more GPU performance, up to 58% less power (chart: CPU Performance, GPU Performance, Power; AMD Ryzen™ 7 2700U vs. 7th Gen AMD A-Series APU) • FIRST “Zen”-based APU • HIGH-PERFORMANCE on-die “Vega”-based graphics • LONG BATTERY LIFE • Premium form factors • Scaled GPU and CPU up to reach target frame rate • Managed power delivery and thermal dissipation • Improved memory bandwidth efficiency • Upgraded display experience • Increased package performance density 2 | AMD Ryzen™ Processors with Radeon™ Vega Graphics - Hot Chips 30 | * See footnotes for details. “RAVEN RIDGE” APU [block diagram: AMD “ZEN” x86 CPU CORES (4 core | 8 thread; CPU 0 to CPU 3; 4MB L3 Cache); FULL SYSTEM CONNECTIVITY (USB 3.1, USB 2.0, NVMe, SATA, PCIe GPP, PCIe Discrete GFX); HIGH BANDWIDTH SOC FABRIC & MEMORY SYSTEM (Infinity Fabric; 2 x X64 DDR4; System Management Unit); ACCELERATED MULTIMEDIA EXPERIENCE (Platform Security Processor; Multimedia Engines; Video Codec Next; Audio ACP); AMD “VEGA” GPU (AMD GFX+, 11 compute units, 1MB L2 Cache); INTEGRATED SENSOR FUSION HUB; UPGRADED DISPLAY ENGINE (Display Controller Next)] SIGNIFICANT DENSITY INCREASE “Raven Ridge” die: BGA Package: 25 x 35 x 1.38mm; Technology: GLOBALFOUNDRIES 14nm – 11 layer metal; Transistor count: 4.94B; Die Size: 209.78mm²; 59% more transistors and 16% smaller die than prior generation “Bristol Ridge” APU.
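The density figures quoted for the “Raven Ridge” die can be turned into a short worked calculation. The sketch below is illustrative only: the variable names are hypothetical, and it assumes that "59% more transistors" and "16% smaller die" are both measured relative to the prior-generation “Bristol Ridge” part, which is how the slide text reads. The prior-generation values it derives are implied by those percentages, not quoted in the excerpt.

```python
# Figures quoted in the excerpt for the "Raven Ridge" die.
raven_transistors = 4.94e9      # transistor count
raven_die_mm2 = 209.78          # die size in mm^2
more_transistors = 0.59         # "59% more transistors" than prior generation
smaller_die = 0.16              # "16% smaller die" than prior generation

# Transistor density of the quoted die, in millions of transistors per mm^2.
raven_density_m_per_mm2 = raven_transistors / raven_die_mm2 / 1e6

# Prior-generation values implied by the two percentages (derived, not quoted).
implied_prior_transistors = raven_transistors / (1 + more_transistors)
implied_prior_die_mm2 = raven_die_mm2 / (1 - smaller_die)

# Density gain follows from the two ratios alone.
density_gain = (1 + more_transistors) / (1 - smaller_die)

if __name__ == "__main__":
    print(f"Raven Ridge density: {raven_density_m_per_mm2:.2f} M transistors/mm^2")
    print(f"Implied prior transistor count: {implied_prior_transistors / 1e9:.2f} B")
    print(f"Implied prior die size: {implied_prior_die_mm2:.1f} mm^2")
    print(f"Density gain over prior generation: {density_gain:.2f}x")
```

Under that reading, more transistors on a smaller die compound to a density gain of a little under 2x.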