Security and Dependability Modelling

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


FUNDAMENTALS of COMPUTING (2019-20) COURSE CODE: 5023 502800CH (Grade 7 for ½ High School Credit) 502900CH (Grade 8 for ½ High School Credit)

EXPLORING COMPUTER SCIENCE NEW NAME: FUNDAMENTALS OF COMPUTING (2019-20) COURSE CODE: 5023 502800CH (grade 7 for ½ high school credit) 502900CH (grade 8 for ½ high school credit) COURSE DESCRIPTION: Fundamentals of Computing is designed to introduce students to the field of computer science through an exploration of engaging and accessible topics. Through creativity and innovation, students will use critical thinking and problem solving skills to implement projects that are relevant to students’ lives. They will create a variety of computing artifacts while collaborating in teams. Students will gain a fundamental understanding of the history and operation of computers, programming, and web design. Students will also be introduced to computing careers and will examine societal and ethical issues of computing. OBJECTIVE: Given the necessary equipment, software, supplies, and facilities, the student will be able to successfully complete the following core standards for courses that grant one unit of credit. RECOMMENDED GRADE LEVELS: 9-12 (Preference 9-10) COURSE CREDIT: 1 unit (120 hours) COMPUTER REQUIREMENTS: One computer per student with Internet access RESOURCES: See attached Resource List A. SAFETY Effective professionals know the academic subject matter, including safety as required for proficiency within their area. They will use this knowledge as needed in their role. The following accountability criteria are considered essential for students in any program of study. 1. Review school safety policies and procedures. 2. Review classroom safety rules and procedures. 3. Review safety procedures for using equipment in the classroom. 4. Identify major causes of work-related accidents in office environments. 5. Demonstrate safety skills in an office/work environment. -

Florida Course Code Directory Computer Science Course Information 2019-2020

FLORIDA COURSE CODE DIRECTORY COMPUTER SCIENCE COURSE INFORMATION 2019-2020 Section 1007.2616, Florida Statutes, was amended by the Florida Legislature to include the definition of computer science and a requirement that computer science courses be identified in the Course Code Directory published on the Department of Education’s website. DEFINITION: The study of computers and algorithmic processes, including their principles, hardware and software designs, applications, and their impact on society, and includes computer coding and computer programming. SECONDARY COMPUTER SCIENCE COURSES Middle and high schools in each district, including combination schools in which any of grades 6-12 are taught, must provide an opportunity for students to enroll in a computer science course. If a school district does not offer an identified computer science course the district must provide students access to the course through the Florida Virtual School (FLVS) or through other means.

| Course number | Course title |
| --- | --- |
| 0200000 | M/J Computer Science Discoveries |
| 0200010 | M/J Computer Science Discoveries 1 |
| 0200020 | M/J Computer Science Discoveries 2 |
| 0200305 | Computer Science Discoveries |
| 0200315 | Computer Science Principles |
| 0200320 | AP Computer Science A |
| 0200325 | AP Computer Science A Innovation |
| 0200335 | AP Computer Science Principles |
| 0200435 | PRE-AICE Computer Studies IGCSE Level |
| 0200480 | AICE Computer Science 1 AS Level |
| 0200485 | AICE Computer Science 2 A Level |
| 0200490 | AICE Information Technology 1 AS Level |
| 0200495 | AICE Information Technology 2 A Level |
| 0200800 | IB Computer |

Safety and Security Challenge

SAFETY AND SECURITY CHALLENGE TOP SUPERCOMPUTERS IN THE WORLD - FEATURING TWO of DOE’S!! Summary: The U.S. Department of Energy (DOE) plays a very special role in keeping you safe. DOE has two supercomputers in the top ten supercomputers in the whole world. Titan is the name of the supercomputer at the Oak Ridge National Laboratory (ORNL) in Oak Ridge, Tennessee. Sequoia is the name of the supercomputer at Lawrence Livermore National Laboratory (LLNL) in Livermore, California. How do supercomputers keep us safe and what makes them in the Top Ten in the world? In fields where scientists deal with issues from disaster relief to the electric grid, simulations provide real-time situational awareness to inform decisions. DOE supercomputers have helped the Federal Bureau of Investigation find criminals, and the Department of Defense assess terrorist threats. Currently, ORNL is building a computing infrastructure to help the Centers for Medicare and Medicaid Services combat fraud. An important focus lab-wide is managing the tsunamis of data generated by supercomputers and facilities like ORNL’s Spallation Neutron Source. In terms of national security, ORNL plays an important role in national and global security due to its expertise in advanced materials, nuclear science, supercomputing and other scientific specialties. Discovery and innovation in these areas are essential for protecting US citizens and advancing national and global security priorities. Titan Supercomputer at Oak Ridge National Laboratory Background: ORNL is using computing to tackle national challenges such as safe nuclear energy systems and running simulations for lower costs for vehicle Lawrence Livermore's Sequoia ranked No. -

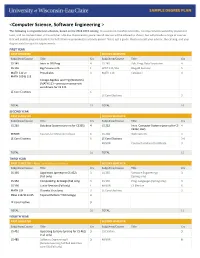

<Computer Science, Software Engineering >

<Computer Science, Software Engineering > The following is a hypothetical schedule, based on the 2018-2019 catalog. It assumes no transferred credits, no requirements waived by placement tests, and no courses taken in the summer. UW-Eau Claire cannot guarantee all courses will be offered as shown, but will provide a range of courses that will enable prepared students to fulfill their requirements in a timely period. This is just a guide. Please consult your advisor, the catalog, and your degree audit for specific requirements. FIRST YEAR FIRST SEMESTER SECOND SEMESTER Subj/Area/Course Title Crs Subj/Area/Course Title Crs CS 145 Intro to OO Prog 4 CS 245 Adv. Prog. Data Structures 4 CS 146 Big Picture in CS 1 WRIT 114/116 Blugold Seminar 5 MATH 112 or Precalculus 4 MATH 114 Calculus I 4 MATH 109 & 113 College Algebra and Trig (Winterim) (MATH 113 – prereq or concurrent enrollment for CS 245 LE Core Electives 6 LE Core Electives 3 TOTAL 15 TOTAL 16 SECOND YEAR FIRST SEMESTER SECOND SEMESTER Subj/Area/Course Title Crs Subj/Area/Course Title Crs CS 260 Database Systems (prereq for CS355) 4 CS 252 Intro. Computer Systems (prereq for CS 4 CS352, 452) MINOR Courses for Minor/Certificate 6 CS 268 Web Systems 3 LE Core Electives 6 LE Core Electives 3-6 MINOR Courses for Minor/Certificate 3 TOTAL 16 TOTAL 15 THIRD YEAR FIRST SEMESTER – Apply for Admission to Major SECOND SEMESTER Subj/Area/Course Title Crs Subj/Area/Course Title Crs CS 335 Algorithms (prereq for CS 452) 3 CS 355 Software Engineering I 3 (Fall only) (Spring only) CS 352 ComputeOrg. -

Artificial Intelligence Program 1

Artificial Intelligence Program. Reid Simmons, Director of the BSAI program (NSH 3213); Jean Harpley, Program Coordinator (NSH 1517); www.cs.cmu.edu/bs-in-artificial-intelligence (http://www.cs.cmu.edu/bs-in-artificial-intelligence/). Overview: Carnegie Mellon University has led the world in artificial intelligence education and innovation since the field was created. It's only natural, then, that the School of Computer Science would offer the nation's first bachelor's degree in Artificial Intelligence, which started in Fall 2018. The new BSAI program gives students the in-depth knowledge needed to transform large amounts of data into actionable decisions. The program and its curriculum focus on how complex inputs, such as vision, language and huge databases, can be used to make decisions or enhance human capabilities. The curriculum includes coursework in computer science, math, statistics, computational modeling, machine learning and symbolic computation. Because Carnegie Mellon is devoted to AI for social good, students will also take courses in ethics and social responsibility, with the option to participate in independent study projects that change the world for the better, in areas like healthcare, transportation and education. Courses listed: 15-122 Principles of Imperative Computation, 10 units (students without credit or a waiver for 15-112, Fundamentals of Programming and Computer Science, must take 15-112 before 15-122); 15-150 Principles of Functional Programming, 10 units; 15-210 Parallel and Sequential Data Structures and Algorithms, 12 units; 15-213 Introduction to Computer Systems, 12 units; 15-251 Great Ideas in Theoretical Computer Science, 12 units. Artificial Intelligence: all of the following three AI core courses: 07-180 Concepts in Artificial Intelligence, 5 units; 15-281 Artificial Intelligence: Representation and Problem Solving, 12 units; 10-315 Introduction to Machine Learning (SCS Majors), 12 units; plus one of the following AI core courses: 16-385 Computer Vision, 12 units -

A Debate on Teaching Computing Science

Teaching Computing Science. At the ACM Computer Science Conference last February, Edsger Dijkstra gave an invited talk called “On the Cruelty of Really Teaching Computing Science.” He challenged some of the basic assumptions on which our curricula are based and provoked a lot of discussion. The editors of Communications received several recommendations to publish his talk in these pages. His comments brought into the foreground some of the background of controversy that surrounds the issue of what belongs in the core of a computer science curriculum. To give full airing to the controversy, we invited Dijkstra to engage in a debate with selected colleagues, each of whom would contribute a short critique of his position, with Dijkstra himself making a closing statement. He graciously accepted this offer. We invited people from a variety of specialties, backgrounds, and interpretations to provide their [...] Strategic Defense Initiative. William Scherlis is known for his articulate advocacy of formal methods in computer science. M. H. van Emden is known for his contributions in programming languages and philosophical insights into science. Jacques Cohen is known for his work with programming languages and logic programming and is a member of the Editorial Panel of this magazine. Richard Hamming received the Turing Award in 1968 and is well known for his work in communications and coding theory. Richard M. Karp received the Turing Award in 1985 and is known for his contributions in the design of algorithms. Terry Winograd is well known for his early work in artificial intelligence and recent work in the principles of design. I am grateful to these people for participating in this debate and to Professor Dijkstra for creating the opening. -

Computer Science Course Reporting Guidance

Computer Science Course Reporting Guidance The Connecticut State Department of Education is committed to a policy of equal opportunity/affirmative action for all qualified persons. The Connecticut State Department of Education does not discriminate in any employment practice, education program, or educational activity on the basis of race, color, religious creed, sex, age, national origin, ancestry, marital status, sexual orientation, gender identity or expression, disability (including, but not limited to, intellectual disability, past or present history of mental disorder, physical disability or learning disability), genetic information, or any other basis prohibited by Connecticut state and/or federal nondiscrimination laws. The Connecticut State Department of Education does not unlawfully discriminate in employment and licensing against qualified persons with a prior criminal conviction. Inquiries regarding the Connecticut State Department of Education’s nondiscrimination policies should be directed to: Levy Gillespie, Equal Employment Opportunity Director/Americans with Disabilities Act Coordinator, Connecticut State Department of Education, 450 Columbus Blvd, Suite 607, Hartford, CT 06103, 860-807-2071, [email protected] Contents: Overview; Background; Teacher Authorization; Graduation Requirements; Group 1 Computer Science; Definition; Courses; Programming; Databases; Networking; Cybersecurity; Computer Applications; Business; Other; Group 2 Computer Science; Definition; Courses; Networking; General Computer Literacy; Computer Applications; Business; Engineering; Other; References; Contributors; Acknowledgments. Overview: Computer science (CS) is defined as the study of computers and algorithmic processes, including their principles, their hardware and software designs, their applications, and their impact on society [1]. -

Introduction to Database Management Systems

Wright State University CORE Scholar Computer Science & Engineering Syllabi College of Engineering & Computer Science Fall 2012 CS 4700/6700: Introduction to Database Management Systems Soon M. Chung Wright State University - Main Campus, [email protected] Follow this and additional works at: https://corescholar.libraries.wright.edu/cecs_syllabi Part of the Computer Engineering Commons, and the Computer Sciences Commons Repository Citation Chung, S. M. (2012). CS 4700/6700: Introduction to Database Management Systems. https://corescholar.libraries.wright.edu/cecs_syllabi/343 This Syllabus is brought to you for free and open access by the College of Engineering & Computer Science at CORE Scholar. It has been accepted for inclusion in Computer Science & Engineering Syllabi by an authorized administrator of CORE Scholar. For more information, please contact [email protected]. CS 4700/6700 Introduction To Database Management Systems, Fall 2012 Description: Survey of logical and physical aspects of database management systems. Entity Relationship and relational models for databases are presented. Physical database design methods, formal DB design concepts, and operating principles of database systems are discussed. Prerequisite: CS 3100 Data Structures and Algorithms, or equivalent. Instructor: Dr. Soon M. Chung, 403 Russ Center, (937) 775-5119, [email protected], http://www.cs.wright.edu/~schung Class: M. W. F. 3:35-4:30 p.m. at 154 Russ Center Office hour: M. W. 4:45-5:45 p.m. at 403 Russ, or by appointment. *Use e-mail for short questions. Text Book: R. Elmasri and S. B. Navathe, Fundamentals of Database Systems, 6th edition, Addison Wesley, 2010. Topics: DBMS concepts and architecture (Chap 1, 2) Entity-Relationship model (Chap 7) Relational data model (Chap 3) ER to Relational mapping (Sec. -

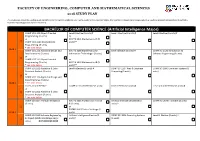

BACHELOR of COMPUTER SCIENCE (Artificial Intelligence

FACULTY OF ENGINEERING, COMPUTER AND MATHEMATICAL SCIENCES 2018 STUDY PLAN This study plan should be used to guide enrolment for the current academic year. Some students may need to modify their enrolment based on previous study (e.g. students granted advanced standing/credit, students repeating previously failed courses). BACHELOR OF COMPUTER SCIENCE (Artificial Intelligence Major) COMP SCI 1102 Object Oriented Level I Elective (3 units) # Level I Elective (3 units) # Level I Elective (3 units) # Programming (3 units) or or MATHS 1011 Mathematics IA (3 S1 COMP SCI 1101 Introduction to units)** Programming (3 units) * See note below YEAR 1 COMP SCI 1103 Algorithm Design and MATHS 1008 Mathematics for Level I Elective (3 units) # COMP SCI 1106 Introduction to Data Structures (3 units) Information Technology I (3 units) Software Engineering (3 units) or S2 COMP SCI 1102 Object Oriented or Programming (3 units) MATHS 1012 Mathematics IB (3 * See note below units)** COMP SCI 2201 Algorithm & Data Level II Elective (3 units) # COMP SCI 2207 Web & Database COMP SCI 2000 Computer Systems (3 Structure Analysis (3 units) Computing (3 units) units) or S1 COMP SCI 1103 Algorithm Design and Data Structures (3 units) YEAR 2 * See note below Level I, II or III Elective COMP SCI Level II Elective (3 units) Level II Elective (3 units) # Level I, II or III Elective (3 units) # or S2 COMP SCI 2201 Algorithm & Data Structure Analysis (3 units) * See note below COMP SCI 3007 Artificial Intelligence MATHS 3025 Professional Practice Artificial Intelligence -

The Future of Supercomputing at NASA

Southwestern Oklahoma State University SWOSU Digital Commons Student Research Business & Computer Science 3-8-2019 The Future of Supercomputing at NASA Ezgi Gursel Southwestern Oklahoma State University Jeremy Evert Southwestern Oklahoma State University Follow this and additional works at: https://dc.swosu.edu/cpgs_edsbt_bcs_student Recommended Citation Gursel, Ezgi and Evert, Jeremy, "The Future of Supercomputing at NASA" (2019). Student Research. 16. https://dc.swosu.edu/cpgs_edsbt_bcs_student/16 This Poster is brought to you for free and open access by the Business & Computer Science at SWOSU Digital Commons. It has been accepted for inclusion in Student Research by an authorized administrator of SWOSU Digital Commons. An ADA compliant document is available upon request. For more information, please contact [email protected]. COLLEGE OF & PROFESSIONAL GRADUATE STUDIES This material is based upon work supported by Dr. Tsengdar Lee, Program Manager of the High• the National Aeronautics and Space Dr. Tsengdar Dr. Stephen Wheat Dr. Henry Neeman End Computing Division, NASA Administration under Grant No. NNX15AK02H NASA Oklahoma Space Grant Consortium Lee Abstract Dr. Tsengdar Lee is the Program Computers are a growing part of everyday life Q1: Why are "Supercomputers are "Supercomputers allow us to model, "Supercomputers are used to solve Manager of the High-End supercomputers considered a scientific simulate, explore, and analyze some of the hardest problems, Computing Program at NASA. in many ways. For some of the biggest and He is responsible for maintaining most interesting problems, people have used important? How instrument. It is important in phenomena at much greater level of scale especially the hardest science and important is the development of new at finer levels of fidelity than can otherwise engineering problems.. -

Computer Science CSC 380 Database: Theory, Application and Organization

Computer Science CSC 380 Database: Theory, Application and Organization Credits and contact hours: Credit hours: 3, Contact Hours: 42 (1 contact hour = 50 minutes) Instructor: Professor Randy Molmen Text books: Database Systems, Foster, Shripad and Godbole, 2014; SQL and Relational Theory, C.J. Date, 2011; Database Design and Relational Theory, C.J. Date, 2012; Database Design and Implementation, R. Molmen, 2015. Specific course information Catalog description: a. This course stresses advanced topics in database management systems, particularly: advanced processing and SQL techniques, recovery and security issues and strategies, advanced database models, performance and tuning issues, distributed databases including data warehousing and related concepts. b. Prerequisites: CSC-280 or CSC-245 c. Required/Elective: i. Computer Science – required ii. Software Engineering – required Specific goals for the course a. Specific outcomes of instruction. At the conclusion of the course, the successful student will be able to: i. Design, construct, and evaluate complex queries in relational databases, including 1. Inner joins, outer joins, and equijoins 2. Queries using exclusion criteria 3. Queries on tables that have internal links 4. Queries using persistent and ad hoc subqueries 5. Single-user and shared sequence generators ii. Explain and protect against contention issues in databases iii. Explain and use checkpoints and rollbacks to protect data integrity iv. Determine approximate efficiencies of common search and indexing procedures v. Explain interactions between the logical and physical mappings of data in databases, including performance and tuning issues vi. Understand and explain the basic principles of common non-relational database models vii. Understand and explain issues related to distributed and multi-user databases viii. -

Edsger Dijkstra and the Shortest-Path Algorithm David Gries

Edsger Dijkstra and the shortest-path algorithm David Gries You have probably all used an app to find a route from one point to another. Here’s a map produced by Google Maps to find a route from Cornell University’s Day Hall to Gates Hall, where Computer Science lives. Somehow, although I didn’t tell it to do this, Google Maps inferred that I wanted walking routes, not driving routes. That shortest-path algorithm is quite likely based on one developed by Edsger W. Dijkstra in 1956. At that time, Edsger had no high-level language in which to think. He knew machine languages and corresponding assembly languages, of course, but he wasn’t about to develop the shortest-path algorithm in them! Here’s what Edsger said about developing the algorithm [1]. … One morning I was shopping in Amsterdam with my young fiancée, and, tired, we sat down on the cafe terrace to drink a cup of coffee. I was just thinking about whether I could do this, and I then designed the algorithm for the shortest path. As I said, it was a 20-minute invention. Imagine that! He developed it in his head in 20 minutes. No paper and pencil. With no language support except Dutch. Further, Edsger didn’t bother publishing it until someone told him he should, 2–3 years later [2]. One could ask: Why wasn’t Edsger talking to his fiancée instead of thinking about an algorithm? Ha! Well, his fiancée, Ria Debets, knew him well, and she was also a programmer. She was one of a dozen women who had completed high school with exceptionally high grades in mathematics and were hired to work in the new computing department at the Mathematical Center in Amsterdam by Director Van Wijngaarden [3].