FUNDAMENTALS of COMPUTING (2019-20) COURSE CODE: 5023 502800CH (Grade 7 for ½ High School Credit) 502900CH (Grade 8 for ½ High School Credit)


Recommended publications

Arithmetic and Logic Unit (ALU)

Computer Arithmetic: Arithmetic and Logic Unit (ALU). The ALU is the part of the computer that actually performs arithmetic and logical operations on data. All of the other elements of the computer system are there mainly to bring data into the ALU for it to process and then to take the results back out. The ALU is based on simple digital logic devices that can store binary digits and perform simple Boolean logic operations. ALU Inputs and Outputs. Integer Representations: In the binary number system, arbitrary numbers can be represented with the digits zero and one, the minus sign (for negative numbers), and the period, or radix point (for numbers with a fractional component). For purposes of computer storage and processing, however, we do not have the benefit of special symbols for the minus sign and radix point; only binary digits (0, 1) may be used to represent numbers. There are four commonly known integer representations, plus one that is less commonly known. All have been used at various times for various reasons: 1. Unsigned 2. Sign-Magnitude 3. One’s Complement 4. Two’s Complement 5. Biased (not commonly known). 1. Unsigned: The standard binary encoding already given. Only non-negative values can be represented. Range: 0 to 2^N - 1 for N bits. Example: with 4 bits, 2^4 - 1 = 16 - 1 = 15, so the values are 0 to 15. 2. Sign-Magnitude: There are several alternative conventions used to [...] All of these alternatives involve treating the most significant (leftmost) bit in the word as -
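The unsigned range formula in the excerpt above can be checked with a short Python sketch. The excerpt only defines the unsigned encoding; the sign-magnitude, one's complement, and two's complement helpers below follow the standard textbook definitions rather than anything stated in the excerpt, and all function names are hypothetical.

```python
def unsigned_range(n_bits):
    """Return (min, max) for an unsigned integer of n_bits: 0 to 2^N - 1."""
    return 0, 2 ** n_bits - 1


def to_unsigned_bits(value, n_bits):
    """Encode a non-negative value as an n_bits-wide binary string."""
    low, high = unsigned_range(n_bits)
    if not low <= value <= high:
        raise ValueError(f"{value} does not fit in {n_bits} unsigned bits")
    return format(value, f"0{n_bits}b")


# The three helpers below use the usual textbook definitions (an assumption,
# since the excerpt is cut off before it defines them).
def sign_magnitude(value, n_bits):
    """Leftmost bit is the sign; the remaining bits hold the magnitude."""
    if abs(value) > 2 ** (n_bits - 1) - 1:
        raise ValueError("magnitude too large")
    sign = "1" if value < 0 else "0"
    return sign + format(abs(value), f"0{n_bits - 1}b")


def ones_complement(value, n_bits):
    """Negative values are the bitwise inverse of the positive encoding."""
    if abs(value) > 2 ** (n_bits - 1) - 1:
        raise ValueError("magnitude too large")
    if value >= 0:
        return format(value, f"0{n_bits}b")
    return format((2 ** n_bits - 1) - abs(value), f"0{n_bits}b")


def twos_complement(value, n_bits):
    """Negative values wrap around modulo 2^N."""
    if not -(2 ** (n_bits - 1)) <= value <= 2 ** (n_bits - 1) - 1:
        raise ValueError("value out of range")
    return format(value % 2 ** n_bits, f"0{n_bits}b")


if __name__ == "__main__":
    print(unsigned_range(4))
    print(to_unsigned_bits(13, 4))
    for encode in (sign_magnitude, ones_complement, twos_complement):
        print(encode.__name__, encode(-5, 4))
```

Running the script prints the 4-bit unsigned range from the excerpt's example and shows how the same value, -5, gets a different bit pattern under each signed convention.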

The Central Processing Unit (CPU): The Brain of Any Computer System Is the CPU

Computer Fundamentals, 1st Stage, Lec. (8), College of Computer Technology, Dept. Information Networks. The central processing unit (CPU). The brain of any computer system is the CPU. It controls the functioning of the other units and processes the data. The CPU is sometimes called the processor or, in the personal computer field, the “microprocessor”. It is a single integrated circuit that contains all the electronics needed to execute a program. The processor calculates (adds, multiplies, and so on), performs logical operations (compares numbers and makes decisions), and controls the transfer of data among devices. The processor acts as the controller of all actions or services provided by the system. Processor actions are synchronized to its clock input. A clock signal consists of clock cycles. The time to complete a clock cycle is called the clock period. Normally, we use the clock frequency, which is the inverse of the clock period, to specify the clock. The clock frequency is measured in hertz (Hz), where one hertz represents one cycle per second. Usually, we use megahertz (MHz) and gigahertz (GHz), as in a 1.8 GHz Pentium. The processor can be thought of as executing the following cycle forever: 1. Fetch an instruction from the memory, 2. Decode the instruction (i.e., determine the instruction type), 3. Execute the instruction (i.e., perform the action specified by the instruction). Execution of an instruction involves fetching any required operands, performing the specified operation, and writing the results back. This process is often referred to as the fetch-execute cycle, or simply the execution cycle. -
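The clock relationship and the fetch, decode, execute steps described above can be illustrated with a minimal Python sketch. The instruction names (`LOAD`, `ADD`, `STORE`, `HALT`), the single accumulator, and the function names are all hypothetical choices for illustration and do not correspond to any real processor.

```python
def clock_period_ns(frequency_hz):
    """Clock period is the inverse of clock frequency; result in nanoseconds."""
    return 1e9 / frequency_hz


def run(program, memory):
    """Toy fetch-decode-execute loop over a list of (opcode, operand) pairs.

    The four opcodes are an invented instruction set used only to show
    the shape of the cycle.
    """
    accumulator = 0
    program_counter = 0
    while True:
        # 1. Fetch the next instruction from program memory.
        instruction = program[program_counter]
        program_counter += 1
        # 2. Decode: determine the instruction type and its operand.
        opcode, operand = instruction
        # 3. Execute: fetch operands, perform the operation, write results back.
        if opcode == "LOAD":
            accumulator = memory[operand]
        elif opcode == "ADD":
            accumulator += memory[operand]
        elif opcode == "STORE":
            memory[operand] = accumulator
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode: {opcode}")


if __name__ == "__main__":
    # A 1.8 GHz clock, as in the example above.
    print(f"{clock_period_ns(1.8e9):.3f} ns per cycle")
    data = {0: 2, 1: 3, 2: 0}
    program = [("LOAD", 0), ("ADD", 1), ("STORE", 2), ("HALT", None)]
    print(run(program, data))
```

A real processor runs this loop in hardware and never stops on its own; the `HALT` opcode exists here only so the example terminates.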

Florida Course Code Directory Computer Science Course Information 2019-2020

FLORIDA COURSE CODE DIRECTORY COMPUTER SCIENCE COURSE INFORMATION 2019-2020 Section 1007.2616, Florida Statutes, was amended by the Florida Legislature to include the definition of computer science and a requirement that computer science courses be identified in the Course Code Directory published on the Department of Education’s website. DEFINITION: The study of computers and algorithmic processes, including their principles, hardware and software designs, applications, and their impact on society, and includes computer coding and computer programming. SECONDARY COMPUTER SCIENCE COURSES Middle and high schools in each district, including combination schools in which any of grades 6-12 are taught, must provide an opportunity for students to enroll in a computer science course. If a school district does not offer an identified computer science course the district must provide students access to the course through the Florida Virtual School (FLVS) or through other means. COURSE NUMBER COURSE TITLE 0200000 M/J Computer Science Discoveries 0200010 M/J Computer Science Discoveries 1 0200020 M/J Computer Science Discoveries 2 0200305 Computer Science Discoveries 0200315 Computer Science Principles 0200320 AP Computer Science A 0200325 AP Computer Science A Innovation 0200335 AP Computer Science Principles 0200435 PRE-AICE Computer Studies IGCSE Level 0200480 AICE Computer Science 1 AS Level 0200485 AICE Computer Science 2 A Level 0200490 AICE Information Technology 1 AS Level 0200495 AICE Information Technology 2 A Level 0200800 IB Computer -

Safety and Security Challenge

SAFETY AND SECURITY CHALLENGE TOP SUPERCOMPUTERS IN THE WORLD - FEATURING TWO of DOE’S!! Summary: The U.S. Department of Energy (DOE) plays a very special role in keeping you safe. DOE has two supercomputers in the top ten supercomputers in the whole world. Titan is the name of the supercomputer at the Oak Ridge National Laboratory (ORNL) in Oak Ridge, Tennessee. Sequoia is the name of the supercomputer at Lawrence Livermore National Laboratory (LLNL) in Livermore, California. How do supercomputers keep us safe and what makes them in the Top Ten in the world? In fields where scientists deal with issues from disaster relief to the electric grid, simulations provide real-time situational awareness to inform decisions. DOE supercomputers have helped the Federal Bureau of Investigation find criminals, and the Department of Defense assess terrorist threats. Currently, ORNL is building a computing infrastructure to help the Centers for Medicare and Medicaid Services combat fraud. An important focus lab-wide is managing the tsunamis of data generated by supercomputers and facilities like ORNL’s Spallation Neutron Source. In terms of national security, ORNL plays an important role in national and global security due to its expertise in advanced materials, nuclear science, supercomputing and other scientific specialties. Discovery and innovation in these areas are essential for protecting US citizens and advancing national and global security priorities. [Image: Titan Supercomputer at Oak Ridge National Laboratory] Background: ORNL is using computing to tackle national challenges such as safe nuclear energy systems and running simulations for lower costs for vehicle Lawrence Livermore's Sequoia ranked No. -

Top 10 Reasons to Major in Computing

Top 10 Reasons to Major in Computing 1. Computing is part of everything we do! Computing and computer technology are part of just about everything that touches our lives from the cars we drive, to the movies we watch, to the ways businesses and governments deal with us. Understanding different dimensions of computing is part of the necessary skill set for an educated person in the 21st century. Whether you want to be a scientist, develop the latest killer application, or just know what it really means when someone says “the computer made a mistake”, studying computing will provide you with valuable knowledge. 2. Expertise in computing enables you to solve complex, challenging problems. Computing is a discipline that offers rewarding and challenging possibilities for a wide range of people regardless of their range of interests. Computing requires and develops capabilities in solving deep, multidimensional problems requiring imagination and sensitivity to a variety of concerns. 3. Computing enables you to make a positive difference in the world. Computing drives innovation in the sciences (human genome project, AIDS vaccine research, environmental monitoring and protection just to mention a few), and also in engineering, business, entertainment and education. If you want to make a positive difference in the world, study computing. 4. Computing offers many types of lucrative careers. Computing jobs are among the highest paid and have the highest job satisfaction. Computing is very often associated with innovation, and developments in computing tend to drive it. This, in turn, is the key to national competitiveness. The possibilities for future developments are expected to be even greater than they have been in the past. -

Computer Monitor and Television Recycling

Computer Monitor and Television Recycling What is the problem? Televisions and computer monitors can no longer be discarded in the normal household containers or commercial dumpsters, effective April 10, 2001. Televisions and computer monitors may contain picture tubes called cathode ray tubes (CRTs). CRTs can contain lead, cadmium and/or mercury. When disposed of in a landfill, these metals contaminate soil and groundwater. Some larger television sets may contain as much as 15 pounds of lead. A typical 15‐inch CRT computer monitor contains 1.5 pounds of lead. The State Department of Toxic Substances Control has determined that televisions and computer monitors can no longer be disposed of with typical household trash, or recycled with typical household recyclables. They are considered universal waste that needs to be disposed of in alternate ways. CRTs should be stored in a safe manner that prevents the CRT from being broken and the subsequent release of hazardous waste into the environment. Locations that will accept televisions, computers, and other electronic waste (e‐waste): If the product still works, finding someone who can still use it (donating) is the best option before properly disposing of an electronic product. Non‐profit organizations, foster homes, schools, and places like St. Vincent de Paul are possible examples of places that will accept usable products. Or view the E‐waste Recycling List at http://www.mercedrecycles.com/pdf's/EwasteRecycling.pdf Where can businesses take computer monitors, televisions, and other electronics? Businesses located within Merced County must register as a Conditionally Exempt Small Quantity Generator (CESQG) prior to the delivery of monitors and televisions to the Highway 59 Landfill. -

Console Games in the Age of Convergence

Console Games in the Age of Convergence Mark Finn Swinburne University of Technology John Street, Melbourne, Victoria, 3122 Australia +61 3 9214 5254 Abstract In this paper, I discuss the development of the games console as a converged form, focusing on the industrial and technical dimensions of convergence. Starting with the decline of hybrid devices like the Commodore 64, the paper traces the way in which notions of convergence and divergence have influenced the console gaming market. Special attention is given to the convergence strategies employed by key players such as Sega, Nintendo, Sony and Microsoft, and the success or failure of these strategies is evaluated. Keywords Convergence, Games histories, Nintendo, Sega, Sony, Microsoft INTRODUCTION Although largely ignored by the academic community for most of their existence, recent years have seen video games attain at least some degree of legitimacy as an object of scholarly inquiry. Much of this work has focused on what could be called the textual dimension of the game form, with works such as Finn [17], Ryan [42], and Juul [23] investigating aspects such as narrative and character construction in game texts. Another large body of work focuses on the cultural dimension of games, with issues such as gender representation and the always-controversial theme of violence being of central importance here. Examples of this approach include Jenkins [22], Cassell and Jenkins [10] and Schleiner [43]. 45 Proceedings of Computer Games and Digital Cultures Conference, ed. Frans Mäyrä. Tampere: Tampere University Press, 2002. Copyright: authors and Tampere University Press. Little attention, however, has been given to the industrial dimension of the games phenomenon. -

Open Dissertation Draft Revised Final.Pdf

The Pennsylvania State University The Graduate School ICT AND STEM EDUCATION AT THE COLONIAL BORDER: A POSTCOLONIAL COMPUTING PERSPECTIVE OF INDIGENOUS CULTURAL INTEGRATION INTO ICT AND STEM OUTREACH IN BRITISH COLUMBIA A Dissertation in Information Sciences and Technology by Richard Canevez © 2020 Richard Canevez Submitted in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy December 2020 The dissertation of Richard Canevez was reviewed and approved by the following: Carleen Maitland Associate Professor of Information Sciences and Technology Dissertation Advisor Chair of Committee Daniel Susser Assistant Professor of Information Sciences and Technology and Philosophy Lynette (Kvasny) Yarger Associate Professor of Information Sciences and Technology Craig Campbell Assistant Teaching Professor of Education (Lifelong Learning and Adult Education) Mary Beth Rosson Professor of Information Sciences and Technology Director of Graduate Programs ABSTRACT Information and communication technologies (ICTs) have achieved a global reach, particularly in social groups within the ‘Global North,’ such as those within the province of British Columbia (BC), Canada. It has produced the need for a computing workforce, and increasingly, diversity is becoming an integral aspect of that workforce. Today, educational outreach programs with ICT components that are extending education to Indigenous communities in BC are charting a new direction in crossing the cultural barrier in education by tailoring their curricula to distinct Indigenous cultures, commonly within broader science, technology, engineering, and mathematics (STEM) initiatives. These efforts require examination, as they integrate Indigenous cultural material and guidance into what has been a largely Euro-Western-centric domain of education.
Postcolonial computing theory provides a lens through which this integration can be investigated, connecting technological development and education disciplines within the parallel goals of cross-cultural, cross-colonial humanitarian development. -

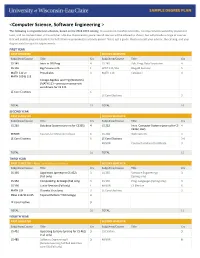

<Computer Science, Software Engineering >

<Computer Science, Software Engineering > The following is a hypothetical schedule, based on the 2018-2019 catalog. It assumes no transferred credits, no requirements waived by placement tests, and no courses taken in the summer. UW-Eau Claire cannot guarantee all courses will be offered as shown, but will provide a range of courses that will enable prepared students to fulfill their requirements in a timely period. This is just a guide. Please consult your advisor, the catalog, and your degree audit for specific requirements. FIRST YEAR FIRST SEMESTER SECOND SEMESTER Subj/Area/Course Title Crs Subj/Area/Course Title Crs CS 145 Intro to OO Prog 4 CS 245 Adv. Prog. Data Structures 4 CS 146 Big Picture in CS 1 WRIT 114/116 Blugold Seminar 5 MATH 112 or Precalculus 4 MATH 114 Calculus I 4 MATH 109 & 113 College Algebra and Trig (Winterim) (MATH 113 – prereq or concurrent enrollment for CS 245 LE Core Electives 6 LE Core Electives 3 TOTAL 15 TOTAL 16 SECOND YEAR FIRST SEMESTER SECOND SEMESTER Subj/Area/Course Title Crs Subj/Area/Course Title Crs CS 260 Database Systems (prereq for CS355) 4 CS 252 Intro. Computer Systems (prereq for CS 4 CS352, 452) MINOR Courses for Minor/Certificate 6 CS 268 Web Systems 3 LE Core Electives 6 LE Core Electives 3-6 MINOR Courses for Minor/Certificate 3 TOTAL 16 TOTAL 15 THIRD YEAR FIRST SEMESTER – Apply for Admission to Major SECOND SEMESTER Subj/Area/Course Title Crs Subj/Area/Course Title Crs CS 335 Algorithms (prereq for CS 452) 3 CS 355 Software Engineering I 3 (Fall only) (Spring only) CS 352 ComputeOrg. -

From Ethnomathematics to Ethnocomputing

Bill Babbitt, Dan Lyles, and Ron Eglash. “From Ethnomathematics to Ethnocomputing: indigenous algorithms in traditional context and contemporary simulation.” 205-220 in Alternative forms of knowing in mathematics: Celebrations of Diversity of Mathematical Practices, ed. Swapna Mukhopadhyay and Wolff-Michael Roth, Rotterdam: Sense Publishers 2012. From Ethnomathematics to Ethnocomputing: indigenous algorithms in traditional context and contemporary simulation 1. Introduction Ethnomathematics faces two challenges: first, it must investigate the mathematical ideas in cultural practices that are often assumed to be unrelated to math. Second, even if we are successful in finding this previously unrecognized mathematics, applying this to children’s education may be difficult. In this essay, we will describe the use of computational media to help address both of these challenges. We refer to this approach as “ethnocomputing.” As noted by Rosa and Orey (2010), modeling is an essential tool for ethnomathematics. But when we create a model for a cultural artifact or practice, it is hard to know if we are capturing the right aspects; whether the model is accurately reflecting the mathematical ideas or practices of the artisan who made it, or imposing mathematical content external to the indigenous cognitive repertoire. If I find a village in which there is a chain hanging from posts, I can model that chain as a catenary curve. But I cannot attribute the knowledge of the catenary equation to the people who live in the village, just on the basis of that chain. Computational models are useful not only because they can simulate patterns, but also because they can provide insight into this crucial question of epistemological status. -

What Is a Microprocessor?

Behavior Research Methods & Instrumentation 1978, Vol. 10 (2), 238-240 SESSION VII MICROPROCESSORS IN PSYCHOLOGY: A SYMPOSIUM MISRA PAVEL, New York University, Presider What is a microprocessor? MISRA PAVEL New York University, New York, New York 10003 A general introduction to microcomputer terminology and concepts is provided. The purpose of this introduction is to provide an overview of this session and to introduce the terminology and some of the concepts that will be discussed in greater detail by the rest of the session papers. A block diagram of a typical small computer system is shown in Figure 1. Four distinct blocks can be distinguished: (1) the central processing unit (CPU); (2) external memory; (3) peripherals: mass storage, standard input/output, and man-machine interface; (4) special purpose (experimental) interface. [Figure 1. Block diagram of a small computer system.] The different functional units in the system shown here are connected, more or less in parallel, by a number of lines commonly referred to as a bus. The bus mediates transfer of information among the units by carrying address information, data, and control signals. For example, when the CPU transfers data to memory, it activates appropriate control lines, asserts the desired destination memory address, and then outputs data on the bus. Traditionally, the entire system was built from a multitude of relatively simple components mounted on interconnected printed circuit cards. With the advances of integrated circuit technology, large amounts of circuitry were squeezed into a single chip. -
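The bus write sequence described in the excerpt above (activate control lines, assert the address, output the data) can be sketched in Python. This is a simplified software model, not a description of any real bus: the `Bus` and `Memory` classes, the `"READ"`/`"WRITE"` control values, and the function names are all hypothetical.

```python
class Bus:
    """Shared lines carrying control signals, an address, and data."""

    def __init__(self):
        self.control = None
        self.address = None
        self.data = None


class Memory:
    """A unit attached to the bus that reacts to the control lines."""

    def __init__(self, size):
        self.cells = [0] * size

    def respond(self, bus):
        if bus.control == "WRITE":
            # Latch the data on the bus into the addressed cell.
            self.cells[bus.address] = bus.data
        elif bus.control == "READ":
            # Drive the addressed cell's contents onto the data lines.
            bus.data = self.cells[bus.address]


def cpu_write(bus, memory, address, value):
    """CPU-to-memory transfer in the order given in the excerpt."""
    bus.control = "WRITE"   # activate the appropriate control lines
    bus.address = address   # assert the destination memory address
    bus.data = value        # output the data on the bus
    memory.respond(bus)


def cpu_read(bus, memory, address):
    """Memory-to-CPU transfer; the read path is an added assumption."""
    bus.control = "READ"
    bus.address = address
    memory.respond(bus)
    return bus.data


if __name__ == "__main__":
    bus, memory = Bus(), Memory(size=8)
    cpu_write(bus, memory, address=3, value=42)
    print(cpu_read(bus, memory, address=3))
```

Modeling the bus as one shared object reflects the point that every unit sees the same address, data, and control lines; real buses also involve timing and arbitration, which this sketch leaves out.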

Artificial Intelligence Program 1

Artificial Intelligence Program. Reid Simmons, Director of the BSAI program (NSH 3213); Jean Harpley, Program Coordinator (NSH 1517); www.cs.cmu.edu/bs-in-artificial-intelligence (http://www.cs.cmu.edu/bs-in-artificial-intelligence/). Overview: Carnegie Mellon University has led the world in artificial intelligence education and innovation since the field was created. It's only natural, then, that the School of Computer Science would offer the nation's first bachelor's degree in Artificial Intelligence, which started in Fall 2018. The new BSAI program gives students the in-depth knowledge needed to transform large amounts of data into actionable decisions. The program and its curriculum focus on how complex inputs, such as vision, language and huge databases, can be used to make decisions or enhance human capabilities. The curriculum includes coursework in computer science, math, statistics, computational modeling, machine learning and symbolic computation. Because Carnegie Mellon is devoted to AI for social good, students will also take courses in ethics and social responsibility, with the option to participate in independent study projects that change the world for the better, in areas like healthcare, transportation and education. Courses (units): 15-122 Principles of Imperative Computation, 10 (students without credit or a waiver for 15-112, Fundamentals of Programming and Computer Science, must take 15-112 before 15-122); 15-150 Principles of Functional Programming, 10; 15-210 Parallel and Sequential Data Structures and Algorithms, 12; 15-213 Introduction to Computer Systems, 12; 15-251 Great Ideas in Theoretical Computer Science, 12. Artificial Intelligence: All of the following three AI core courses (units): 07-180 Concepts in Artificial Intelligence, 5; 15-281 Artificial Intelligence: Representation and Problem Solving, 12; 10-315 Introduction to Machine Learning (SCS Majors), 12; plus one of the following AI core courses: 16-385 Computer Vision, 12