Classification of Heavy Metal Subgenres with Machine Learning

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

SEPTEMBER 10, 2021 Featuring: DEVIL’S REEF INFERI MR

NEW RELEASES FOR SEPTEMBER 10, 2021 featuring: DEVIL’S REEF INFERI MR. T EXPERIENCE Exclusively Distributed by DEVIL’S REEF A Whisper From The Cosmos LP/CD Street Date: September 10, 2021 ARTIST HOMETOWN: Frederick, MD KEY MARKETS: Chicago, Montreal, Los Angeles, Denver, Dallas FOR FANS OF: REVOCATION, OBSCURA, DEATH DEVIL’S REEF brings forth the perfect mix of death and thrash metal with A Whisper From The Cosmos. This EP packs a load of grooves laden with shreddy guitars a la the old school stylings of DEATH, but with the more modern approach of bands like OBSCURA and REVOCATION. ARTIST: DEVIL’S REEF MARKETING POINTS: TITLE: A Whisper From • Colored Vinyl The Cosmos • Limited edition of 600 copies LABEL: The Artisan Era • Legendary Canadian band CAT#: LP: AE-49-1 / CD: AE-49-2 FORMAT: LP/CD GENRE: Death Metal BOX LOT: 30/CD SRLP: LP: $39.98/CD: $13.98 UPC: LP: 123184004913 CD: 123184004920 EXPORT: No Restrictions TRACK LIST: 1. Quantum Strings 2. A Whisper From The Cosmos 3. Plague Uncovered 4. Ankida 5. Beyond Eternal Exclusively Distributed by Contact your sales rep: Mike Beer - [email protected] phone 414-672-9948 www.ildistro.com INFERI Vile Genesis LP/CD Street Date: September 10, 2021 ARTIST HOMETOWN: Nashville, TN KEY MARKETS: Chicago, Montreal, Los Angeles, Denver, Dallas FOR FANS OF: NECROPHAGIST, THE BLACK DAHLIA MURDER, ARCHSPIRE, OBSCURA, BEYOND CREATION, REVOCATION, FLESHGOD APOCALYPSE INFERI is back with their first full length in three years! Recorded, mixed, and mastered by Dave Otero (CATTLE DECAPITATION, ALLEGAEON, ARCHSPIRE), Vile Genesis shows that the band is more refined and mature than ever, focusing on over-the-top orchestra ARTIST: INFERI and strangely tasteful death metal riffs and grooves. -

TRUTH CORRODED BIOGRAPHY 2018 Drums: Jake Sproule, Vocals: Jason North, Guitar

TRUTH CORRODED BIOGRAPHY 2018 Drums: Jake Sproule, Vocals: Jason North, Guitar: Trent Simpson, Bass: Greg Shaw, Lead Guitar: Chris Walden TRUTH CORRODED are an Australian band with a sound that captures elements of late 80's/early 90's inspired thrash and death metal, shaped by the likes of bands such as SLAYER, OBITUARY, SEPULTURA, KREATOR and EXHORDER, and then later influenced by acts such as MISERY INDEX, BEHEMOTH, NEUROSIS, DECAPITATED and REVOCATION. Taken together, the influences have shaped a sound that is as confronting as the subject matter of the band's lyrics and pays homage to the go-for-the-throat savagery of the band's roots. TRUTH CORRODED signed with leading US extreme metal label UNIQUE LEADER RECORDS, which led to the release of the album 'Bloodlands' in March 2019. Based on themes surrounding war and displacement, the album depicts the state of our times and the social and political impact that is having both in the band's home country Australia and abroad. The title refers to the growth of new conflict from conflicts past, with the suffering it creates spreading its roots. The album was recorded with session drummer Kevin Talley (SUFFOCATION, DYING FETUS, CHIMAIRA), his third album with the band, and was mixed and mastered by renowned heavy music producer Chris 'ZEUSS' Harris (HATEBREED, SOULFLY, REVOCATION). The album also features guest appearances by Stephen Carpenter of DEFTONES, Terrance Hobbs of SUFFOCATION, Mark Kloeppel of MISERY INDEX and Ryan Knight, ex-THE BLACK DAHLIA MURDER/ARSIS. Artwork for the album is designed by Gary Ronaldson at Bite Radius Designs (MISERY INDEX, BENIGHTED, PIG DESTROYER, THY ART IS MURDER). Commenting on the industry involved, vocalist Jason North offers "When we first set out to write the album we could not have imagined that we would work with the artists that have become involved. -

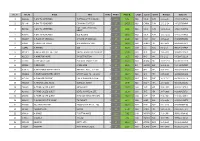

Bertus in Stock 7-4-2014

No. # Art.Id Artist Title Units Media Price €. Origin Label Genre Release Eancode 1 G98139 A DAY TO REMEMBER 7-ATTACK OF THE KILLER.. 1 12in 6,72 NLD VIC.R PUN 21-6-2010 0746105057012 2 P10046 A DAY TO REMEMBER COMMON COURTESY 2 LP 24,23 NLD CAROL PUN 13-2-2014 0602537638949 FOR THOSE WHO HAVE 3 E87059 A DAY TO REMEMBER 1 LP 16,92 NLD VIC.R PUN 14-11-2008 0746105033719 HEART 4 K78846 A DAY TO REMEMBER OLD RECORD 1 LP 16,92 NLD VIC.R PUN 31-10-2011 0746105049413 5 M42387 A FLOCK OF SEAGULLS A FLOCK OF SEAGULLS 1 LP 20,23 NLD MOV POP 13-6-2013 8718469532964 6 L49081 A FOREST OF STARS A SHADOWPLAY FOR.. 2 LP 38,68 NLD PROPH HM. 20-7-2012 0884388405011 7 J16442 A FRAMES 333 3 LP 38,73 USA S-S ROC 3-8-2010 9991702074424 8 M41807 A GREAT BIG PILE OF LEAVE YOU'RE ALWAYS ON MY MIND 1 LP 24,06 NLD PHD POP 10-6-2013 0616892111641 9 K81313 A HOPE FOR HOME IN ABSTRACTION 1 LP 18,53 NLD PHD HM. 5-1-2012 0803847111119 10 L77989 A LIFE ONCE LOST ECSTATIC TRANCE -LTD- 1 LP 32,47 NLD SEASO HC. 15-11-2012 0822603124316 11 P33696 A NEW LINE A NEW LINE 2 LP 29,92 EU HOMEA ELE 28-2-2014 5060195515593 12 K09100 A PALE HORSE NAMED DEATH AND HELL WILL.. -LP+CD- 3 LP 30,43 NLD SPV HM. 16-6-2011 0693723093819 13 M32962 A PALE HORSE NAMED DEATH LAY MY SOUL TO. -

DARKWOODS MAILORDER CATALOGUE October 2016

DARKWOODS MAILORDER CATALOGUE October 2016 DARKWOODS PAGAN BLACK METAL DISTRO / LABEL [email protected] www.darkwoods.eu Next you will find a full list with all available items in our mailorder catalogue alphabetically ordered... With the exception of the respective cover, we have included all relevant information about each item, even the format, the releasing label and the reference comment... This catalogue is updated every month, so it may not reflect the latest received products or the most recent sold-out items... please use it more as a reference than an updated list of our products... CDS / MCDS / SGCDS 1349 - Beyond the Apocalypse [CD] 11.95 EUR Second smash hit of the Norwegians 1349, nine outstanding tracks of intense, very fast and absolutely brutal black metal is what they offer us with “Beyond the Apocalypse”, with Frost even more a beast behind the drum set here than in Satyricon, excellent! [Released by Candlelight] 1349 - Demonoir [CD] 11.95 EUR Fifth full-length album of this Norwegian legion, recovering on one hand the intensity and brutality of the fantastic “Hellfire” but, at the same time, continuing with the experimental and sinister side of their music introduced in their previous work, “Revelations of the Black Flame”... [Released by Indie Recordings] 1349 - Hellfire [CD] 11.95 EUR Brutal third full-length album of the Norwegians, an immense ode to the most furious, powerful and violent black metal that the deepest and flaming hell could vomit... [Released by Candlelight] 1349 - Liberation [CD] 11.95 EUR Fantastic -

A Dead Spot of Light

XVI a dead spot of light... 1 Introduction Another year draws towards its end and I am still sitting here, unable to type with ten fingers. I am not bad at typing, but the level at which I am actually able to do this could definitely be better. Anyway, not all interviews made it into this edition, but I am somewhat satisfied with the reviews that did. 'Soizu' has finally been dealt with, the 'Slaughter of the Innocents' / 'Obscure Oath' split, delayed beyond any sane comprehension, appears here as well, 'I, Lord Aveu' is also able to read his review now and so is 'Gamardah Fungus'. The 'Vahrzaw' one was a review request from the Metal Archives board and I hope the person is satisfied with the result. Well, there is enough material available for the next version already, in terms of reviews as well as interviews. Indeed, I had the chance to stumble over a considerable amount of them in the last few weeks. The focus is less on metal again and more on the experimental region. What struck me though is the large number of releases which I had not written on and the old reviews that need some polishing. The one on Epoch would be one example. It has been partially re-written and extended, hopefully with fewer errors than before. More of this type will be added to future editions of this magazine. Requests for interviews and reviews are still possible … I am always open to getting in touch with new bands and artists. Also from non-metal genres. -

Maman, J'entends Des Voix

ALL THE HOTTEST ROCK NEWS FROM ROMANDIE. FREE. MAY 2014. 76. Daily Rock, www.daily-rock.com. Photo © Jacques Apothéloz. Contents: Concerts 2-3: Kilbi. Interviews 4-7: Birth Of Joy, The Rambling Wheels, Herod, Avatar, Gotthard, Pegasus. Calendar 8-9. Features 10-11: Plein le culte. Reviews 12-15: Impure Wilhelmina, Yakari, Jupiter Zeus, Impaled Nazarene, Loudblast. VOICE OF RUIN: MAMAN, J'ENTENDS DES VOIX ("Mom, I'm Hearing Voices"). The first album lit the fuse; now Voice of Ruin rises again, straight and hard, with a second album titled 'Morning Wood'. Interview with the singer, Randy. Speaking of this latest album, what can you tell us about it? Any ballads on the program? Haha, no, there are no ballads. It is, however, much longer than the previous one. It runs for more than forty-five minutes, with twelve tracks. It is also very varied, with very fast death metal style songs and other, groovier ones, with metalcore, stoner and thrash influences. It was hard to put the tracks in the right [...] Are you really mean, or is it just an image? No, we don't want to scare people or necessarily go for trashiness. For example, the video for 'Cock'n Bulls', which takes place on a farm, was something we had been talking about for a while, maybe two years. We had come up with it while messing around. And then we said to ourselves, why not do it? We had the song that went well with it, and the former [...] Editorial: Rockers, I am moved. For five months, I have had the impression of having reached a point -

DARKWOODS MAILORDER CATALOGUE June 2017

DARKWOODS MAILORDER CATALOGUE June 2017 DARKWOODS PAGAN BLACK METAL DISTRO / LABEL [email protected] www.darkwoods.eu Next you will find a full list with all available items in our mailorder catalogue alphabetically ordered... With the exception of the respective cover, we have included all relevant information about each item, even the format, the releasing label and the reference comment... This catalogue is updated every month, so it may not reflect the latest received products or the most recent sold-out items... please use it more as a reference than an updated list of our products... CDS / MCDS / SGCDS 1349 - Beyond the Apocalypse [CD] 11.95 EUR Second smash hit of the Norwegians 1349, nine outstanding tracks of intense, very fast and absolutely brutal black metal is what they offer us with “Beyond the Apocalypse”, with Frost even more a beast behind the drum set here than in Satyricon, excellent! [Released by Candlelight] 1349 - Demonoir [CD] 11.95 EUR Fifth full-length album of this Norwegian legion, recovering on one hand the intensity and brutality of the fantastic “Hellfire” but, at the same time, continuing with the experimental and sinister side of their music introduced in their previous work, “Revelations of the Black Flame”... [Released by Indie Recordings] 1349 - Liberation [CD] 11.95 EUR Fantastic debut full-length album of the Nordic hordes 1349 led by Frost (Satyricon), ten tracks of furious, violent and merciless black metal is what they show us in "Liberation", ten straightforward tracks of pure Norwegian black metal, superb! [Released by Candlelight] 1349 - Massive Cauldron of Chaos [CD] 12.95 EUR Sixth full-length album of the Norwegians 1349, with which they continue this returning path to their most brutal roots that they started with the previous “Demonoir”, perhaps not as chaotic as the title might suggest, but we could place it in the intermediate era of “Hellfire”.. -

Nr Kat Artysta Tytuł Title Supplement Nośnik Liczba Nośników Data

nr kat artysta tytuł title nośnik liczba data supplement nośników premiery 9985841 '77 Nothing's Gonna Stop Us black LP+CD LP / Longplay 2 2015-10-30 9985848 '77 Nothing's Gonna Stop Us Ltd. Edition CD / Longplay 1 2015-10-30 88697636262 *NSYNC The Collection CD / Longplay 1 2010-02-01 88875025882 *NSYNC The Essential *NSYNC Essential Rebrand CD / Longplay 2 2014-11-11 88875143462 12 Cellisten der Hora Cero CD / Longplay 1 2016-06-10 88697919802 2CELLOSBerliner Phil 2CELLOS Three Language CD / Longplay 1 2011-07-04 88843087812 2CELLOS Celloverse Booklet Version CD / Longplay 1 2015-01-27 88875052342 2CELLOS Celloverse Deluxe Version CD / Longplay 2 2015-01-27 88725409442 2CELLOS In2ition CD / Longplay 1 2013-01-08 88883745419 2CELLOS Live at Arena Zagreb DVD-V / Video 1 2013-11-05 88985349122 2CELLOS Score CD / Longplay 1 2017-03-17 0506582 65daysofstatic Wild Light CD / Longplay 1 2013-09-13 0506588 65daysofstatic Wild Light Ltd. Edition CD / Longplay 1 2013-09-13 88985330932 9ELECTRIC The Damaged Ones CD Digipak CD / Longplay 1 2016-07-15 82876535732 A Flock Of Seagulls The Best Of CD / Longplay 1 2003-08-18 88883770552 A Great Big World Is There Anybody Out There? CD / Longplay 1 2014-01-28 88875138782 A Great Big World When the Morning Comes CD / Longplay 1 2015-11-13 82876535502 A Tribe Called Quest Midnight Marauders CD / Longplay 1 2003-08-18 82876535512 A Tribe Called Quest People's Instinctive Travels And CD / Longplay 1 2003-08-18 88875157852 A Tribe Called Quest People'sThe Paths Instinctive Of Rhythm Travels and the CD / Longplay 1 2015-11-20 82876535492 A Tribe Called Quest ThePaths Low of RhythmEnd Theory (25th Anniversary CD / Longplay 1 2003-08-18 88985377872 A Tribe Called Quest We got it from Here.. -

From Crass to Thrash, to Squeakers: the Suspicious Turn to Metal in UK Punk and Hardcore Post ‘85

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by De Montfort University Open Research Archive From Crass to Thrash, to Squeakers: The Suspicious Turn to Metal in UK Punk and Hardcore Post ‘85. Otto Sompank I always loved the simplicity and visceral feel of all forms of punk. From the Pistols' take on the New York Dolls' rock, or the UK Subs' aggressive punk take on rhythm and blues. The stark reality is that Crass and the anarchist-punk scene were informed by aspects of obscure seventies rock too, for example Pete Wright’s prog bass lines in places. Granted. Perhaps the most famous and intense link between rock and punk was Motorhead. While their early output and LPs definitely had a clear nod to punk (Lemmy playing for the Damned), they appealed to most punks back then with their sheer aggression and intensity. It’s clear Motorhead and Black Sabbath influenced a lot of street-punk and the ferocious tones of Discharge and their Scandinavian counterparts such as Riistetyt, Kaaos and Anti Cimex. The early links were there, but the influence of late 1970s and early 80s NWOBHM (New Wave of British Heavy Metal) and street punk, Discharge etc. in turn influenced Metallica, Anthrax and Exodus in the early eighties. Most of them can occasionally be seen sporting Discharge, Broken Bones and GBH shirts on their early record-sleeve pictures. Not only that, Newcastle band Venom were equally influential in the mix of new genre forms germinating in the early 1980s. One of the early examples of the incorporation of rock and metal into the UK punk scene came from Discharge. -

DARKWOODS MAILORDER CATALOGUE March 2018

DARKWOODS MAILORDER CATALOGUE March 2018 DARKWOODS PAGAN BLACK METAL DISTRO / LABEL [email protected] www.darkwoods.eu Next you will find a full list with all available items in our mailorder catalogue alphabetically ordered... With the exception of the respective cover, we have included all relevant information about each item, even the format, the releasing label and the reference comment... This catalogue is updated every month, so it may not reflect the latest received products or the most recent sold-out items... please use it more as a reference than an updated list of our products... CDS / MCDS / SGCDS 1349 - Beyond the Apocalypse [CD] 11.95 EUR Second smash hit of the Norwegians 1349, nine outstanding tracks of intense, very fast and absolutely brutal black metal is what they offer us with “Beyond the Apocalypse”, with Frost even more a beast behind the drum set here than in Satyricon, excellent! [Released by Candlelight] 1349 - Demonoir [CD] 11.95 EUR Fifth full-length album of this Norwegian legion, recovering on one hand the intensity and brutality of the fantastic “Hellfire” but, at the same time, continuing with the experimental and sinister side of their music introduced in their previous work, “Revelations of the Black Flame”... [Released by Indie Recordings] 1349 - Liberation [CD] 11.95 EUR Fantastic debut full-length album of the Nordic hordes 1349 led by Frost (Satyricon), ten tracks of furious, violent and merciless black metal is what they show us in "Liberation", ten straightforward tracks of pure Norwegian black metal, superb! [Released by Candlelight] 1349 - Massive Cauldron of Chaos [CD] 11.95 EUR Sixth full-length album of the Norwegians 1349, with which they continue this returning path to their most brutal roots that they started with the previous “Demonoir”, perhaps not as chaotic as the title might suggest, but we could place it in the intermediate era of “Hellfire”.. -

Hipster Black Metal?

Hipster Black Metal? Deafheaven’s Sunbather and the Evolution of an (Un)popular Genre Paola Ferrero A couple of months ago a guy walks into a bar in Brooklyn and strikes up a conversation with the bartenders about heavy metal. The guy happens to mention that Deafheaven, an up-and-coming American black metal (BM) band, is going to perform at Saint Vitus, the local metal concert venue, in a couple of weeks. The bartenders immediately become confrontational, denying Deafheaven the BM ‘label of authenticity’: the band, according to them, plays ‘hipster metal’ and their singer, George Clarke, clearly sports a hipster hairstyle. Good thing they probably did not know who they were talking to: the ‘guy’ in our story is, in fact, Jonah Bayer, a contributor to Noisey, the music magazine of Vice, considered to be one of the bastions of hipster online culture. The product of that conversation, a piece entitled ‘Why are black metal fans such elitist assholes?’ was almost certainly intended as a humorous nod to the ongoing debate, generated mainly by music webzines and their readers, over Deafheaven’s inclusion in the BM canon. The article features a promo picture of the band, two young, clean-shaven guys, wearing indistinct clothing, with short haircuts and mild, neutral facial expressions, their faces made to look like they were ironically wearing black and white make up, the typical ‘corpse-paint’ of traditional, early BM. It certainly did not help that Bayer also included a picture of Inquisition, a historical BM band from Colombia formed in the early 1990s, and ridiculed their corpse-paint and black cloaks attire with the following caption: ‘Here’s what you’re defending, black metal purists. -

Immortal Pure Holocaust Mp3, Flac, Wma

Immortal Pure Holocaust mp3, flac, wma DOWNLOAD LINKS (Clickable) Genre: Rock Album: Pure Holocaust Country: Japan Style: Black Metal MP3 version RAR size: 1508 mb FLAC version RAR size: 1266 mb WMA version RAR size: 1518 mb Rating: 4.7 Votes: 484 Other Formats: MP2 MOD AAC MP1 ADX MP4 DXD Tracklist Hide Credits Unsilent Storms In The North Abyss 1 3:14 Lyrics By – DemonazMusic By – Abbath, Demonaz A Sign For The Norse Hordes To Ride 2 2:35 Lyrics By – DemonazMusic By – Abbath, Demonaz The Sun No Longer Rises 3 4:19 Lyrics By – DemonazMusic By – Abbath, Demonaz Frozen By Icewinds 4 4:40 Lyrics By – DemonazMusic By – Abbath, Demonaz Storming Through Red Clouds And Holocaustwinds 5 4:39 Lyrics By – DemonazMusic By – Abbath Eternal Years On The Path To Cemetary Gates 6 3:30 Lyrics By – DemonazMusic By – Abbath As The Eternity Opens 7 5:30 Lyrics By – DemonazMusic By – Abbath Pure Holocaust 8 5:16 Lyrics By – DemonazMusic By – Abbath Companies, etc. Distributed By – SPV GmbH – SPV 084-08772 CD Distributed By – Disk Union – OPCD-019 Phonographic Copyright (p) – Osmose Productions Copyright (c) – Osmose Productions Recorded At – Grieghallen Studio Pressed By – Sony DADC Credits Bass, Vocals – Abbath Doom Occulta* Drums [Credited To] – Erik* Executive-Producer – Osmose Productions Guitar – Demonaz Doom Occulta* Producer – Eirik (Pytten) Hundvin*, Immortal Notes Repress with OBI & Japanese Leaflet. Recorded in Grieghallen Studio 1993. Although Erik is credited as a drummer in the booklet (and he was responsible for the instrument on subsequent