Robot Composite Learning and the Nunchaku Flipping Challenge

Total pages: 16

File type: PDF, Size: 1020Kb

Recommended publications

-

The Lilac Cube

University of New Orleans ScholarWorks@UNO University of New Orleans Theses and Dissertations Dissertations and Theses 5-21-2004 The Lilac Cube Sean Murray University of New Orleans Follow this and additional works at: https://scholarworks.uno.edu/td Recommended Citation Murray, Sean, "The Lilac Cube" (2004). University of New Orleans Theses and Dissertations. 77. https://scholarworks.uno.edu/td/77 This Thesis is protected by copyright and/or related rights. It has been brought to you by ScholarWorks@UNO with permission from the rights-holder(s). You are free to use this Thesis in any way that is permitted by the copyright and related rights legislation that applies to your use. For other uses you need to obtain permission from the rights-holder(s) directly, unless additional rights are indicated by a Creative Commons license in the record and/or on the work itself. This Thesis has been accepted for inclusion in University of New Orleans Theses and Dissertations by an authorized administrator of ScholarWorks@UNO. For more information, please contact [email protected]. THE LILAC CUBE A Thesis Submitted to the Graduate Faculty of the University of New Orleans in partial fulfillment of the requirements for the degree of Master of Fine Arts in The Department of Drama and Communications by Sean Murray B.A. Mount Allison University, 1996 May 2004 TABLE OF CONTENTS Chapter 1 1 Chapter 2 9 Chapter 3 18 Chapter 4 28 Chapter 5 41 Chapter 6 52 Chapter 7 62 Chapter 8 70 Chapter 9 78 Chapter 10 89 Chapter 11 100 Chapter 12 107 Chapter 13 115 Chapter 14 124 Chapter 15 133 Chapter 16 146 Chapter 17 154 Chapter 18 168 Chapter 19 177 Vita 183 ii The judge returned with my parents. -

Biblioteca Digital De Cartomagia, Ilusionismo Y Prestidigitación

Digital library and video library, card magic, illusionism, sleight of hand, gambling, Antonio Valero Perea. LIBRARY / VIDEO LIBRARY INDEX OF WORKS BY SUBJECT Riddles and puzzles -- Anatomical magic Art relating to playing cards -- Street magic -- Music -- Scientific magic -- Painting -- Mathemagic Biographies of magicians, cardsharps and gamblers -- Comedy magic Card magic -- Magic with animals -- Stacked decks -- Bizarre magic -- Classic card magic -- General magic -- Mathematical card magic -- Children's magic -- Modern card magic -- Paper magic -- Effects -- Stage magic -- Shuffles -- Fire magic -- Mathematical principles of card magic -- Levitation magic -- Card magic workshop -- Black magic -- Miscellaneous card magic -- Magic in Russian Casino -- Restaurant magic -- Casino shuffles -- Magic magazines -- Casino magazines -- Stage techniques Matches -- Magic theory Patter and drawing Juggling Cryptography Mentalism Balloon twisting -- Cold reading Gambling in general -- Hypnosis -- Gambling catalogues -- Mind reading -- Economics of gambling -- Pseudohypnosis -- History of gambling and playing cards Origami -- Gambling legislation Patents relating to gambling and magic -- Casino legislation Programming -- State gambling laws Sleight of hand -- CNJ gambling reports -- Rings -- Gambling reports -- Banknotes -- Police -- Balls -- Compulsive gambling -- Bottles -- Betting systems -- Cigarettes -- Sociology of gambling -- Cups -- Game theory -- Ropes -- Probability -

Download Hack Coin Master Apk

Download Hack Coin Master Apk CLICK HERE TO ACCESS COIN MASTER GENERATOR Coinmaster.Gamescheatspot.Com Coin Master Hack Jackpot Tipsplay.Com/Coin Coin Master Game Cheat Code Cmcheats.Com,Com/Coin Coin Master ... Search This Blog. Slotomania free coins, Slotomania Free Coins Links, Slotomania 2000+ Free Coins, free coins for Slotomania. Slotomania is a social and entertaining casino game that is available on mobile Android, iOS, online, PC, Facebook and Windows. When you play, you get an awesome experience. spin and coin link Want to get the Coin Master Free Spins? You have landed on the right website; this is the best page where you can find them. It is important to collect them all and use them to get the spins in Coin Master daily. If you successfully collect up to 9 gold cards, then you will get lots of rewards... Pen spinning is a form of object manipulation that involves the deft manipulation of a writing instrument with the hands. Although it is often considered a form of self-entertainment (usually in a school or office setting), multinational competitions and meetings are sometimes held. It is sometimes classified as a form of contact juggling; however, some tricks do leave contact with the body. 1987 saw many sequels and prequels in video games, such as Dragon Quest II, as well as new titles such as Contra, Double Dragon, Final Fantasy, Metal Gear, Phantasy Star, Street Fighter, The Last Ninja, After Burner and R-Type. -

Materials for a Rejang-Indonesian-English Dictionary

PACIFIC LINGUISTICS Series D - No. 58 MATERIALS FOR A REJANG - INDONESIAN - ENGLISH DICTIONARY collected by M.A. Jaspan With a fragmentary sketch of the Rejang language by W. Aichele, and a preface and additional annotations by P. Voorhoeve (MATERIALS IN LANGUAGES OF INDONESIA, No. 27) W.A.L. Stokhof, Series Editor Department of Linguistics Research School of Pacific Studies THE AUSTRALIAN NATIONAL UNIVERSITY Jaspan, M.A. editor. Materials for a Rejang-Indonesian-English dictionary. D-58, x + 172 pages. Pacific Linguistics, The Australian National University, 1984. DOI:10.15144/PL-D58.cover ©1984 Pacific Linguistics and/or the author(s). Online edition licensed 2015 CC BY-SA 4.0, with permission of PL. A sealang.net/CRCL initiative. PACIFIC LINGUISTICS is issued through the Linguistic Circle of Canberra and consists of four series: SERIES A - Occasional Papers SERIES B - Monographs SERIES C - Books SERIES D - Special Publications EDITOR: S.A. Wurm ASSOCIATE EDITORS: D.C. Laycock, C.L. Voorhoeve, D.T. Tryon, T.E. Dutton EDITORIAL ADVISERS: B.W. Bender (University of Hawaii); K.A. McElhanon (University of Texas); David Bradley (La Trobe University); H.P. McKaughan (University of Hawaii); A. Capell (University of Sydney); P. Mühlhäusler (Linacre College, Oxford); Michael G. Clyne (Monash University); G.N. O'Grady (University of Victoria, B.C.); S.H. Elbert (University of Hawaii); A.K. Pawley (University of Auckland); K.J. Franklin (Summer Institute of Linguistics); K.L. Pike (University of Michigan; Summer Institute of Linguistics); W.W. Glover (Summer Institute of Linguistics); E.C. Polome (University of Texas); G.W. Grace (University of Hawaii); Malcolm Ross (University of Papua New Guinea); M.A.K. -

50 Things You Didn't Know

50 Things You Didn’t Know You Didn’t Know About Pens © Tancia Ltd 2013 50 things you didn’t know you didn’t know about pens…. The History of Pens The word ‘pen’ means feather The word ‘pen’ comes from the Latin, penna which translates as ‘feather’ in English. This word was chosen due to the use of feathers to make quill pens from the 8th to the 19th century. Penne pasta is so-called because the shape resembles the pointed part of a quill pen. The very first pens were made from hollow bones and kitchen knives It is thought that cave paintings were created using a sharpened stick whose main purpose was skinning and cutting meat. The stick would have been dipped in a primitive ink made from berries or soot mixed with animal fats or human saliva. Hollow bones were also used to create an airbrushing technique where hands and other objects were used as stencils. Examples of ink from Biblical times still exist today The Dead Sea Scrolls were discovered in the 1940s in a collection of caves and contain an extensive collection of religious writings that have been dated between the 3rd century BC and the 1st century AD. They were written with three different types of ink; two of which were black and the third, red. The black inks were made from several ingredients including carbon soot from olive oil lamps whilst the red ink contained mercury sulphide, also known as cinnabar from which vermillion pigment is obtained. Goose feathers from living birds make the best quills The strongest quills come from living birds and are most commonly goose feathers. -

Explorations in Ethnic Studies

Vol. 15, No. 1 January, 1992 EXPLORATIONS IN ETHNIC STUDIES The Journal of the National Association for Ethnic Studies Published by NAES General Editorial Board: Wolfgang Binder, Americanistik Universitat Erlangen, West Germany; Lucia Birnbaum, Italian American Historical Society, Berkeley, California; Russell Endo, University of Colorado, Boulder, Colorado; Manuel deOrtega, California State University, Los Angeles, California; David Gradwohl, Iowa State University, Ames, Iowa; Jack Forbes, University of California, Davis, California; Lee Hadley, Iowa State University, Ames, Iowa; Clifton H. Johnson, Amistad Research Center, New Orleans, Louisiana; Paul Lauter, Trinity College, Hartford, Connecticut; William Oandasan, University of New Orleans, New Orleans, Louisiana; Alan Spector, Purdue University Calumet, Hammond, Indiana; Ronald Takaki, University of California, Berkeley, California; John C. Walter, University of Washington, Seattle, Washington. Table of Contents: Introduction: New Vistas in Art, Culture, and Ethnicity by Bernard Young, 1; An Art-Historical Paradigm for Investigating Native American Pictographs of the Lower Pecos Region, Texas by John Antoine Labadie, 5; Sources of Chicano Art: Our Lady of Guadalupe by Jacinto Quirarte, 13; Narrative Quilts and Quilted Narratives: The Art of Faith Ringgold -

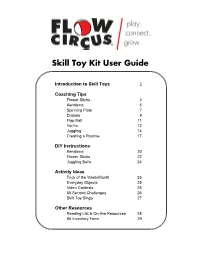

Skill Toy Kit User Guide

Skill Toy Kit User Guide Introduction to Skill Toys 2 Coaching Tips Flower Sticks 3 Kendama 5 Spinning Plate 7 Diabolo 9 Flop Ball 11 Yo-Yo 12 Juggling 14 Creating a Routine 17 DIY Instructions Kendama 20 Flower Sticks 22 Juggling Balls 24 Activity Ideas Trick of the Week/Month 25 Everyday Objects 25 Video Contests 25 60 Second Challenges 26 Skill Toy Bingo 27 Other Resources Reading List & On-line Resources 28 Kit Inventory Form 29 Introduction to Skill Toys You and your team are about to embark on a fun adventure into the world of skill toys. Each toy included in this kit has its own story, design, and body of tricks. Most of them have a lower barrier to entry than juggling, and the following lessons of good posture, mindfulness, problem solving, and goal setting apply to all. Before getting started with any of the skill toys, it is important to remember to relax. Stand with legs shoulder width apart, bend your knees, and take deep breaths. Many of them require you to absorb energy. We have found that the more stressed a player is, the more likely he/she will be to struggle. Not surprisingly, adults tend to have this problem more than kids. If you have players getting frustrated, remind them to relax. Playing should be fun! Also, remind yourself and other players that even the drops or mess-ups can give us valuable information. If they observe what is happening when they attempt to use the skill toys, they can use this information to problem solve. -

Parent-Child Interaction Therapy: Second Edition (Issues in Clinical Child Psychology)

Issues in Clinical Child Psychology Series Editor Michael C. Roberts, University of Kansas, Lawrence For further volumes: http://www.springer.com/series/6082 Cheryl Bodiford McNeil · Toni L. Hembree-Kigin Parent-Child Interaction Therapy Second Edition With Contributions by Karla Anhalt, Åse Bjørseth, Joaquin Borrego, Yi-Chuen Chen, Gus Diamond, Kimberly P. Foley, Matthew E. Goldfine, Amy D. Herschell, Joshua Masse, Ashley B. Tempel, Jennifer Tiano, Stephanie Wagner, Lisa M. Ware and Anne Kristine Wormdal 123 Cheryl Bodiford McNeil Toni L. Hembree-Kigin Department of Psychology Early Childhood Mental Health Services West Virginia University 2500 S. Power Road 53 Campus Drive Suite 108, Mesa, AZ 85209 Morgantown, WV 26506-6040 USA USA [email protected] [email protected] ISSN 1574-0471 ISBN 978-0-387-88638-1 e-ISBN 978-0-387-88639-8 DOI 10.1007/978-0-387-88639-8 Springer New York Dordrecht Heidelberg London Library of Congress Control Number: 2009942276 © Springer Science+Business Media, LLC 1995, 2010 All rights reserved. This work may not be translated or copied in whole or in part without the written permission of the publisher (Springer Science+Business Media, LLC, 233 Spring Street, New York, NY 10013, USA), except for brief excerpts in connection with reviews or scholarly analysis. Use in connection with any form of information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed is forbidden. The use in this publication of trade names, trademarks, service marks, and similar terms, even if they are not identified as such, is not to be taken as an expression of opinion as to whether or not they are subject to proprietary rights. -

International

GOING INTERNATIONAL: OPPORTUNITIES FOR ALL! A booklet with practical inclusion methods and advice for preparing, implementing and following-up on international projects with young people with fewer opportunities. Download this and other SALTO Inclusion booklets for free at: www.SALTO-YOUTH.net/Inclusion/ SALTO-YOUTH INCLUSION RESOURCE CENTRE Education and Culture SALTO-YOUTH STANDS FOR… …‘Support for Advanced Learning and Training Opportunities within the YOUTH programme’. The European Commission has created a network of eight SALTO-YOUTH Resource Centres to enhance the implementation of the European YOUTH programme which provides young people with valuable non-formal learning experiences. SALTO’s aim is to support European YOUTH projects dealing with important issues such as Social Inclusion or Cultural Diversity, with actions such as Youth Initiatives, with regions such as EuroMed, South-East Europe or Eastern Europe & Caucasus, with training and co-operation activities and with information tools for the National Agencies of the YOUTH programme. In these European priority areas, SALTO-YOUTH provides resources, information and training for National Agencies and European youth workers. Several resources in the above areas are offered via www.SALTO-YOUTH.net. Find online the European Training Calendar, the Toolbox for Training and Youth Work, Trainers Online for Youth, links to online resources and much more… SALTO-YOUTH actively co-operates with other actors in European youth work such as the National Agencies of the YOUTH programme, the Council of Europe, European youth workers and trainers and training organisers. -

Daily Eastern News: November 03, 2014 Eastern Illinois University

Eastern Illinois University The Keep November 2014 11-3-2014 Daily Eastern News: November 03, 2014 Eastern Illinois University Follow this and additional works at: http://thekeep.eiu.edu/den_2014_nov Recommended Citation Eastern Illinois University, "Daily Eastern News: November 03, 2014" (2014). November. 1. http://thekeep.eiu.edu/den_2014_nov/1 This Article is brought to you for free and open access by the 2014 at The Keep. It has been accepted for inclusion in November by an authorized administrator of The Keep. For more information, please contact [email protected]. TARBLE 20: Winners of the Tarble Art Center’s 20th Biennial exhibition were announced, with contestants submitting pieces from all over Illinois. PAGE 3 BALANCING ACT: The Eastern football team took down Tennessee Tech with the offense balancing the way head coach Kim Dameron wanted. PAGE 8 WWW.DAILYEASTERNNEWS.COM THE DAILY EASTERN NEWS Monday, Nov. 3, 2014 “TELL THE TRUTH AND DON’T BE AFRAID” VOL. 99 | NO. 49 MEET THE 2014 CANDIDATES FOR GOVERNOR MINIMUM WAGE He wants to raise Illinois’ minimum wage from $8.25 to at least $10 over the next two years PENSION REFORM Rauner is pension reform, noting that he plans to ensure pay and benefits do not rise faster than the rate of inflation, eliminate massive pay raises JOBS He created construction jobs with the Illinois Jobs Now! law and initiated the Illinois Small Business Tax Credit to benefit small businesses. JOBS Rauner wants to create jobs by lowering the cost of running a business; to do that, he plans to eliminate Quinn’s tax increases, allow communities to decide whether or not workers must join a union, and reform workers’ compensation. -

Academic Integrity Violations Rise Fredonia Squirrel Club Open Letter About Communism and the Uyghur Genocide Bryant Estate

05.04.2021 Issue 9 | Volume CXXVII THE LEADER SUNY FREDONIA’S STUDENT-RUN NEWSPAPER Academic Integrity Violations Rise SUNYFEST: Kesha and AJR Bryant Estate Has Left Nike Fredonia Squirrel Club Open Letter About Communism and the Uyghur Genocide 2 The Leader Issue 9 THE LEADER What’s in this issue? S206 Williams Center Fredonia, NY 14063 www.fredonialeader.org News - 3 Opinion - 14 - This Semester in COVID - FTDO James Mead Email [email protected] - Violations of Academic - FTDO Jessica Meditz Twitter @LeaderFredonia Integrity Policy Rise with - Open Letter About Instagram @leaderfredonia Online Learning Communism and the Uyghur Genocide Life & Arts - 6 - What Fredonia Student - Empathy: The Showpiece Mental Health Looks Like of Resumes Editor in Chief Asst. Design Editor - Kesha and AJR Take on Photography - 20 Jessica Meditz Open SUNYFEST - Derek Raymond: Blue Managing Editor Art Director - Student Art Sale Raises Skies with the Blue Devil James Mead Open Record-High Donation for The Literacy Volunteers Scallion - 28 News Editor Asst. Art Director - Music Industry Club - Catching Up with the Alisa Oppenheimer Lydia Turcios Presents Soulstice Jam Gecko Student - MTG vs. AOC: Asst. News Editor Photo Editor Sports - 12 Smackdown of the Century Chloe Kowalyk Alexis Carney - Fredonia Spring Sports - Fredonia Squirrel Club Season Winds Down - Horoscopes Life & Arts Editor Asst. Photo Editor Alyssa Bump Derek Raymond - Bryant Estate Has Left Nike Asst. Life & Arts Editor Copy Editor Open Anna Gagliano Cover and Back Photo: The Blue Devil smiles as he walks toward his future. Photographs by Derek Raymond. Sports Editor Asst. Copy Editor Anthony Gettino Open Asst. Sports Editor Business Manager Open Kemari Wright Scallion Editor Asst. -

Coin Master Daily Free Rewards

Coin Master Daily Free Rewards CLICK HERE TO ACCESS COIN MASTER GENERATOR coin master hack mobile Free Slot Spins. Be in with the chance of winning real money every day with our selection of slot games that offer free spins, including Doubly Bubbly and Search for the Phoenix. There's a chance to play every day if you choose to, just make sure you have made at least one £10 deposit at Virgin Games and you could potentially… download coin master hack apk spin coin master hack The Coin Master Cheats that actually work. You probably saw quite a few generators online that didn’t work properly. This is not the case with our tool, this works 100%! With our tool, you will be able to get all the coins you might want and you will also be able to get those sweet, sweet spins for your game! Coin master free spin coin master free spins 2020 Skyblock Stock Brokers The BEST tool for making millions of coins on the bazaar WITHOUT ANY EFFORT OVER 2000 POSITIVE REVIEWS We've done it. We hit an outstanding 10000+ members in just one week. For those not familiar with our bots, I have designed a set of a couple of Discord bots that track the bazaar 24/7. All MCODS Manipal Confessions- Ultimate - All MCPE Thailand, giveaways of various MCPE items; All MCQ - All MCQ; All MCQs Material World Level - All MCQs PDF; All MCU - All MCU ex students known as OLD BOYS.