Performance Evaluation of a Transport System Supporting the MobiHealth BANip: Methodology and Assessment VOLUME 1

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

UNIX and Computer Science, by Ronda Hauben; Spreading UNIX Around the World: An Interview with John Lions

Winter/Spring 1994 Celebrating 25 Years of UNIX Volume 6 No 1

"I believe all significant software movements start at the grassroots level. UNIX, after all, was not developed by the President of AT&T." Kouichi Kishida, UNIX Review, Feb., 1987

UNIX and Computer Science, by Ronda Hauben

[Editor's Note: This year, 1994, is the 25th anniversary of the invention of UNIX in 1969 at Bell Labs. The following is from a "Work In Progress" introduced at the USENIX Summer 1993 Conference in Cincinnati, Ohio. This article is intended as a contribution to a discussion about the significance of the UNIX breakthrough and the lessons to be learned from it for making the next step forward.]

The Multics collaboration (1964-1968) had been created to "show that general-purpose, multiuser, timesharing systems were viable." Based on the results of research gained at MIT using the MIT Compatible Time-Sharing System (CTSS), AT&T and GE agreed to work with MIT to build a "new hardware, a new operating system, a new file system, and a new user interface." Though the project proceeded slowly and it took years to develop Multics, Doug Comer, a Profes-

Spreading UNIX Around the World: An Interview with John Lions

[Editor's Note: Looking through some magazines in a local university library, I came upon back issues of UNIX Review from the mid 1980's. In these issues were articles by or interviews with several of the pioneers who developed UNIX. As part of my research for a paper about the history and development of the early days of UNIX, I felt it would be helpful to be able to ask some of these pioneers additional questions based on the events and developments described in the UNIX Review Interviews.

Following is an interview conducted via E-mail with John Lions, who wrote A Commentary on the UNIX Operating System describing Version 6 UNIX to accompany the "UNIX Operating System Source Code Level 6" for the students in his operating systems class at the University of New South Wales in Australia. -

1st Slide Set Operating Systems

Organizational Information Operating Systems Generations of Computer Systems and Operating Systems

1st Slide Set Operating Systems

Prof. Dr. Christian Baun, Frankfurt University of Applied Sciences (1971–2014: Fachhochschule Frankfurt am Main), Faculty of Computer Science and Engineering, [email protected]

Prof. Dr. Christian Baun – 1st Slide Set Operating Systems – Frankfurt University of Applied Sciences – WS2021 1/24

Organizational Information

E-Mail: [email protected] !!! Tell me when problems exist at an early stage !!!
Homepage: http://www.christianbaun.de !!! Check the course page regularly !!!
The homepage contains, among others, the lecture notes:
Presentation slides in English and German language
Exercise sheets in English and German language
Sample solutions of the exercise sheets
Old exams and their sample solutions
What is the password? There is no password!
The content of the English and German slides is identical, but please use the English slides for the exam preparation to become familiar with the technical terms.

Literature

My slide sets were the basis for these books. The two-column layout (English/German) of the bilingual book is quite useful for this course. You can download both books for free via the FRA-UAS library from the intranet.

Learning Objectives of this Slide Set

At the end of this slide set You know/understand. -

Computing Science Technical Report No. 99 A History of Computing Research* at Bell Laboratories (1937-1975)

Computing Science Technical Report No. 99 A History of Computing Research* at Bell Laboratories (1937-1975) Bernard D. Holbrook W. Stanley Brown

1. INTRODUCTION

Basically there are two varieties of modern electrical computers, analog and digital, corresponding respectively to the much older slide rule and abacus. Analog computers deal with continuous information, such as real numbers and waveforms, while digital computers handle discrete information, such as letters and digits. An analog computer is limited to the approximate solution of mathematical problems for which a physical analog can be found, while a digital computer can carry out any precisely specified logical procedure on any symbolic information, and can, in principle, obtain numerical results to any desired accuracy. For these reasons, digital computers have become the focal point of modern computer science, although analog computing facilities remain of great importance, particularly for specialized applications.

It is no accident that Bell Labs was deeply involved with the origins of both analog and digital computers, since it was fundamentally concerned with the principles and processes of electrical communication. Electrical analog computation is based on the classic technology of telephone transmission, and digital computation on that of telephone switching. Moreover, Bell Labs found itself, by the early 1930s, with a rapidly growing load of design calculations. These calculations were performed in part with slide rules and, mainly, with desk calculators. The magnitude of this load of very tedious routine computation and the necessity of carefully checking it indicated a need for new methods. The result of this need was a request in 1928 from a design department, heavily burdened with calculations on complex numbers, to the Mathematical Research Department for suggestions as to possible improvements in computational methods. -

BYOD Revisited: BUILD Your Own Device

BYOD Revisited: BUILD Your Own Device Ron Munitz The PSCG about://Ron_Munitz ● Distributed Fault Tolerant Avionic Systems ● Linux, VxWorks, very esoteric libraries, 0’s and 1’s ●Highly distributed video routers ● Linux ●Real Time, Embedded, Server bringups ● Linux, Android , VxWorks, Windows, devices, BSPs, DSPs,... ●Distributed Android ● Rdroid? Cloudroid? Too busy working to get over the legal naming, so no name is officially claimed for my open source about://Ron_Munitz What currently keeps me busy: ● Running The PSCG, an Embedded/Android consulting and Training ● Managing R&D at Nubo and advising on Remote Display Protocols ● Promoting open source with The New Circle expert network ● Lecturing, Researching and Project Advising an Afeka’s college of Engineering ● Amazing present, endless opportunities. (Wish flying took less time) Agenda ● History 101: Evolution of embedded systems ● Software Product 101: Past, Present, Future. ● Software Product 201: The Cloud Era. ● Hardware Product 101: Building Devices ● Hardware Product 201: The IoT Era History 101: 20th Century, 21st Century, Computers, Embedded Devices and Operating Systems http://i2.cdn.turner.com/cnn/dam/assets/121121034453-witch-computer-restoration-uk-story-top.jpg Selected keypoints in the evolution of Embedded systems: The 20th century ● Pre 40’s: Mechanical Computers, Turing Machine ● The 40’s: Mechanical Computers, Embedded Mechanical Computers(V2...), Digital Computers (Z3...) ● The 50’s: Digital Computers, Integrated Circuit, BESYS ● The 60’s: MULTICS, Modem, Moore’s -

MTS on Wikipedia Snapshot Taken 9 January 2011

MTS on Wikipedia Snapshot taken 9 January 2011 PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sun, 09 Jan 2011 13:08:01 UTC Contents Articles Michigan Terminal System 1 MTS system architecture 17 IBM System/360 Model 67 40 MAD programming language 46 UBC PLUS 55 Micro DBMS 57 Bruce Arden 58 Bernard Galler 59 TSS/360 60 References Article Sources and Contributors 64 Image Sources, Licenses and Contributors 65 Article Licenses License 66 Michigan Terminal System 1 Michigan Terminal System The MTS welcome screen as seen through a 3270 terminal emulator. Company / developer University of Michigan and 7 other universities in the U.S., Canada, and the UK Programmed in various languages, mostly 360/370 Assembler Working state Historic Initial release 1967 Latest stable release 6.0 / 1988 (final) Available language(s) English Available programming Assembler, FORTRAN, PL/I, PLUS, ALGOL W, Pascal, C, LISP, SNOBOL4, COBOL, PL360, languages(s) MAD/I, GOM (Good Old Mad), APL, and many more Supported platforms IBM S/360-67, IBM S/370 and successors History of IBM mainframe operating systems On early mainframe computers: • GM OS & GM-NAA I/O 1955 • BESYS 1957 • UMES 1958 • SOS 1959 • IBSYS 1960 • CTSS 1961 On S/360 and successors: • BOS/360 1965 • TOS/360 1965 • TSS/360 1967 • MTS 1967 • ORVYL 1967 • MUSIC 1972 • MUSIC/SP 1985 • DOS/360 and successors 1966 • DOS/VS 1972 • DOS/VSE 1980s • VSE/SP late 1980s • VSE/ESA 1991 • z/VSE 2005 Michigan Terminal System 2 • OS/360 and successors -

UNIX History Page 1 Tuesday, December 10, 2002 7:02 PM

UNIX History Page 1 Tuesday, December 10, 2002 7:02 PM

CHAPTER 1 UNIX Evolution and Standardization

This chapter introduces UNIX from a historical perspective, showing how the various UNIX versions have evolved over the years since the very first implementation in 1969 to the present day. The chapter also traces the history of the different attempts at standardization that have produced widely adopted standards such as POSIX and the Single UNIX Specification. The material presented here is not intended to document all of the UNIX variants, but rather describes the early UNIX implementations along with those companies and bodies that have had a major impact on the direction and evolution of UNIX.

A Brief Walk through Time

There are numerous events in the computer industry that have occurred since UNIX started life as a small project in Bell Labs in 1969. UNIX history has been largely influenced by Bell Labs’ Research Editions of UNIX, AT&T’s System V UNIX, Berkeley’s Software Distribution (BSD), and Sun Microsystems’ SunOS and Solaris operating systems. The following list shows the major events that have happened throughout the history of UNIX. Later sections describe some of these events in more detail.

1969. Development on UNIX starts in AT&T’s Bell Labs.
1971. 1st Edition UNIX is released.
1973. 4th Edition UNIX is released. This is the first version of UNIX that had the kernel written in C.
1974. Ken Thompson and Dennis Ritchie publish their classic paper, “The UNIX Timesharing System” [RITC74]. -

1st Slide Set Operating Systems

Organizational Information Operating Systems Generations of Computer Systems and Operating Systems

1st Slide Set Operating Systems

Prof. Dr. Christian Baun, Frankfurt University of Applied Sciences (1971–2014: Fachhochschule Frankfurt am Main), Faculty of Computer Science and Engineering, [email protected]

Prof. Dr. Christian Baun – 1st Slide Set Operating Systems – Frankfurt University of Applied Sciences – WS1920 1/31

Organizational Information

E-Mail: [email protected] !!! Tell me when problems exist at an early stage !!!
Homepage: http://www.christianbaun.de !!! Check the course page regularly !!!
The homepage contains, among others, the lecture notes:
Presentation slides in English and German language
Exercise sheets in English and German language
Sample solutions of the exercise sheets
Old exams and their sample solutions
Participating in the exercises is not a precondition for exam participation, but it is recommended to participate in the exercises.
The content of the English and German slides is identical, but please use the English slides for the exam preparation to become familiar with the technical terms.

The Way a good Course works.

Image Source: Google
Mr. Miyagi says: „Not only the student learns from his master, also the master learns from his student.“
Active participation please!

Things, which are bad during a Course. -

FULLTEXT01.Pdf

Copyright © IEEE. Citation for the published paper: Title: Author: Journal: Year: Vol: Issue: Pagination: URL/DOI to the paper: This material is posted here with permission of the IEEE. Such permission of the IEEE does not in any way imply IEEE endorsement of any of BTH's products or services Internal or personal use of this material is permitted. However, permission to reprint/republish this material for advertising or promotional purposes or for creating new collective works for resale or redistribution must be obtained from the IEEE by sending a blank email message to [email protected]. By choosing to view this document, you agree to all provisions of the copyright laws protecting it. This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. IEEE TRANSACTIONS ON MOBILE COMPUTING, VOL. X, NO. X, JUNE 2012 1 Estimating Performance of Mobile Services from Comparative Output-Input Analysis of End-to-End Throughput Markus Fiedler, Member, IEEE, Katarzyna Wac, Member, IEEE, Richard Bults, and Patrik Arlos, Member, IEEE Abstract—Mobile devices with ever-increasing functionality and the ubiquitous availability of wireless communication networks are driving forces behind innovative mobile applications enriching our daily life. One of the performance measures for a successful application deployment is the ability to support application-data flows by heterogeneous networks within certain delay boundaries. However, the quantitative impact of this measure is unknown and practically infeasible to determine at real-time due to the mobile device resource constraints. We research practical methods for measurement-based performance evaluation of heterogeneous data communication networks that support mobile application-data flows. -

A Statistical Examination of the Evolution of the UNIX System

IMPERIAL COLLEGE OF SCIENCE, TECHNOLOGY AND MEDICINE University of London

A Statistical Examination of The Evolution of the UNIX System by Shamim Sharifuddin Pirzada September 1988

A thesis submitted for the Degree of Doctor of Philosophy of the University of London and for the Diploma of Membership of the Imperial College.

ABSTRACT

The UNIX system is one of the most successful operating systems in use today. However, due to its age, and in view of the tendencies of other operating systems to degenerate over time, concern has been expressed about its potential for further evolution. Modelling techniques have been proposed to view and predict the evolution of software but they have not yet been sufficiently evaluated. The project uses one such technique, developed by Lehman and others, to examine the evolution of UNIX and attempt a prognosis for its future. Hence it critically evaluates Lehman's concepts of program evolution.

A brief survey of quantitative software modelling techniques is given with particular emphasis on models which predict the behaviour of software systems already in use. The development of Lehman's "Theory of Program Evolution" is reviewed and the implications of the hypotheses proposed in the theory are discussed. Also, the history of UNIX is presented as a sequence of releases from the main UNIX centres in the Bell System and the University of California, Berkeley.

An attempt is made to construct statistical models of the UNIX evolution process by plotting the progress of the three main branches of the UNIX evolution tree (Research UNIX, the System V stream and BSD/UNDC) in terms of changes in various system and process attributes such as size, growth-rate, work-rate and staffing. -

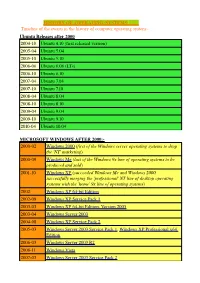

HISTORY of OPERATING SYSTEMS Timeline of The

HISTORY OF OPERATING SYSTEMS

Timeline of the events in the history of computer operating systems:

UBUNTU RELEASES AFTER 2000:
2004-10 Ubuntu 4.10 (first released version)
2005-04 Ubuntu 5.04
2005-10 Ubuntu 5.10
2006-06 Ubuntu 6.06 (LTS)
2006-10 Ubuntu 6.10
2007-04 Ubuntu 7.04
2007-10 Ubuntu 7.10
2008-04 Ubuntu 8.04
2008-10 Ubuntu 8.10
2009-04 Ubuntu 9.04
2009-10 Ubuntu 9.10
2010-04 Ubuntu 10.04

MICROSOFT WINDOWS AFTER 2000:
2000-02 Windows 2000 (first of the Windows server operating systems to drop the 'NT' marketing)
2000-09 Windows Me (last of the Windows 9x line of operating systems to be produced and sold)
2001-10 Windows XP (succeeded Windows Me and Windows 2000, successfully merging the 'professional' NT line of desktop operating systems with the 'home' 9x line of operating systems)
2002 Windows XP 64-bit Edition
2002-09 Windows XP Service Pack 1
2003-03 Windows XP 64-bit Edition, Version 2003
2003-04 Windows Server 2003
2004-08 Windows XP Service Pack 2
2005-03 Windows Server 2003 Service Pack 1, Windows XP Professional x64 Edition
2006-03 Windows Server 2003 R2
2006-11 Windows Vista
2007-03 Windows Server 2003 Service Pack 2
2007-11 Windows Home Server
2008-02 Windows Vista Service Pack 1, Windows Server 2008
2008-04 Windows XP Service Pack 3
2009-05 Windows Vista Service Pack 2
2009-10 Windows 7 (22 October 2009), Windows Server 2008 R2

EVENTS IN HISTORY OF OS SINCE 1954:
1950s
1954 MIT's operating system made for UNIVAC 1103
1955 General Motors Operating System made for IBM 701
1956 GM-NAA I/O for IBM 704, based on General Motors -

Chapter 9 on the Early History and Impact of Unix Tools to Build the Tools for a New Millennium

Chapter 9 On the Early History and Impact of Unix Tools to Build the Tools for a New Millennium “When the barbarian, advancing step by step, had discovered the native metals, and learned to melt them in the crucible and to cast them in moulds; when he had alloyed native copper with tin and produced bronze; and, finally, when by a still greater effort of thought he had invented the furnace, and produced iron from the ore, nine tenths of the battle for civilization was gained. Furnished, with iron tools capable of holding both an edge and a point, mankind were certain of attaining to civilization.” Lewis Henry Morgan “When Unix evolved within Bell Laboratories, it was not a result of some deliberate management initiative. It spread through channels of technical need and technical contact.... This was typical of the way Unix spread around Bell Laboratories.... I brought out that little hunk of history to point out that the spread and success of Unix, first in the Bell organizations and then in the rest of the world, was due to the fact that it was used, modified, and tinkered up in a whole variety of organizations.” Victor Vyssotsky “UNIX is a lever for the intellect.” John R. Mashey Iron Tools and Software Tools Our era is witnessing the birth of an important new technology, different from any in the past. This new technology is the technology of software production. The new tools of our era are tools that make it possible to produce software. Unlike the tools forged in the past, software tools are not something you can grab or hold. -

Linux and Windows

International Journal of Advanced Technology in Engineering and Science www.ijates.com Volume No.02, Special Issue 01, September 2014 ISSN (online): 2348 – 7550

LINUX AND WINDOWS

Aarti Singh1, Ananya Anikesh2
1, 2 Maharshi Dyanand University, (India)

I. INTRODUCTION

An operating system (OS) is a collection of software that manages computer hardware resources and provides common services for computer programs. The operating system is an essential component of the system software in a computer system. Application programs usually require an operating system to function. Time-sharing operating systems schedule tasks for efficient use of the system and may also include accounting software for cost allocation of processor time, mass storage, printing, and other resources. For hardware functions such as input and output and memory allocation, the operating system acts as an intermediary between programs and the computer hardware,[1][2] although the application code is usually executed directly by the hardware and will frequently make a system call to an OS function or be interrupted by it. Operating systems can be found on almost any device that contains a computer—from cellular phones and video game consoles to supercomputers and web servers.

II. FUNCTIONS OF AN OPERATING SYSTEM

Booting the computer
The process of starting or restarting the computer is known as booting. A cold boot is when you turn on a computer that has been turned off completely. A warm boot is the process of using the operating system to restart the computer.

Performs basic computer tasks
The operating system performs basic computer tasks, such as managing the various peripheral devices such as the mouse, keyboard and printers.