Pose Estimation for Objects with Rotational Symmetry

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

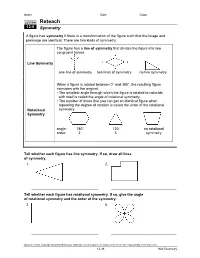

In Order for the Figure to Map Onto Itself, the Line of Reflection Must Go Through the Center Point

3-5 Symmetry State whether the figure appears to have line symmetry. Write yes or no. If so, copy the figure, draw all lines of symmetry, and state their number. 1. SOLUTION: A figure has reflectional symmetry if the figure can be mapped onto itself by a reflection in a line. The figure has reflectional symmetry. In order for the figure to map onto itself, the line of reflection must go through the center point. Two lines of reflection go through the sides of the figure. Two lines of reflection go through the vertices of the figure. Thus, there are four possible lines that go through the center and are lines of reflections. Therefore, the figure has four lines of symmetry. eSolutionsANSWER: Manual - Powered by Cognero Page 1 yes; 4 2. SOLUTION: A figure has reflectional symmetry if the figure can be mapped onto itself by a reflection in a line. The given figure does not have reflectional symmetry. There is no way to fold or reflect it onto itself. ANSWER: no 3. SOLUTION: A figure has reflectional symmetry if the figure can be mapped onto itself by a reflection in a line. The given figure has reflectional symmetry. The figure has a vertical line of symmetry. It does not have a horizontal line of symmetry. The figure does not have a line of symmetry through the vertices. Thus, the figure has only one line of symmetry. ANSWER: yes; 1 State whether the figure has rotational symmetry. Write yes or no. If so, copy the figure, locate the center of symmetry, and state the order and magnitude of symmetry. -

The Cubic Groups

The Cubic Groups Baccalaureate Thesis in Electrical Engineering Author: Supervisor: Sana Zunic Dr. Wolfgang Herfort 0627758 Vienna University of Technology May 13, 2010 Contents 1 Concepts from Algebra 4 1.1 Groups . 4 1.2 Subgroups . 4 1.3 Actions . 5 2 Concepts from Crystallography 6 2.1 Space Groups and their Classification . 6 2.2 Motions in R3 ............................. 8 2.3 Cubic Lattices . 9 2.4 Space Groups with a Cubic Lattice . 10 3 The Octahedral Symmetry Groups 11 3.1 The Elements of O and Oh ..................... 11 3.2 A Presentation of Oh ......................... 14 3.3 The Subgroups of Oh ......................... 14 2 Abstract After introducing basics from (mathematical) crystallography we turn to the description of the octahedral symmetry groups { the symmetry group(s) of a cube. Preface The intention of this account is to provide a description of the octahedral sym- metry groups { symmetry group(s) of the cube. We first give the basic idea (without proofs) of mathematical crystallography, namely that the 219 space groups correspond to the 7 crystal systems. After this we come to describing cubic lattices { such ones that are built from \cubic cells". Finally, among the cubic lattices, we discuss briefly the ones on which O and Oh act. After this we provide lists of the elements and the subgroups of Oh. A presentation of Oh in terms of generators and relations { using the Dynkin diagram B3 is also given. It is our hope that this account is accessible to both { the mathematician and the engineer. The picture on the title page reflects Ha¨uy'sidea of crystal structure [4]. -

Chapter 1 – Symmetry of Molecules – P. 1

Chapter 1 – Symmetry of Molecules – p. 1 - 1. Symmetry of Molecules 1.1 Symmetry Elements · Symmetry operation: Operation that transforms a molecule to an equivalent position and orientation, i.e. after the operation every point of the molecule is coincident with an equivalent point. · Symmetry element: Geometrical entity (line, plane or point) which respect to which one or more symmetry operations can be carried out. In molecules there are only four types of symmetry elements or operations: · Mirror planes: reflection with respect to plane; notation: s · Center of inversion: inversion of all atom positions with respect to inversion center, notation i · Proper axis: Rotation by 2p/n with respect to the axis, notation Cn · Improper axis: Rotation by 2p/n with respect to the axis, followed by reflection with respect to plane, perpendicular to axis, notation Sn Formally, this classification can be further simplified by expressing the inversion i as an improper rotation S2 and the reflection s as an improper rotation S1. Thus, the only symmetry elements in molecules are Cn and Sn. Important: Successive execution of two symmetry operation corresponds to another symmetry operation of the molecule. In order to make this statement a general rule, we require one more symmetry operation, the identity E. (1.1: Symmetry elements in CH4, successive execution of symmetry operations) 1.2. Systematic classification by symmetry groups According to their inherent symmetry elements, molecules can be classified systematically in so called symmetry groups. We use the so-called Schönfliess notation to name the groups, Chapter 1 – Symmetry of Molecules – p. 2 - which is the usual notation for molecules. -

12-5 Worksheet Part C

Name ________________________________________ Date __________________ Class__________________ LESSON Reteach 12-5 Symmetry A figure has symmetry if there is a transformation of the figure such that the image and preimage are identical. There are two kinds of symmetry. The figure has a line of symmetry that divides the figure into two congruent halves. Line Symmetry one line of symmetry two lines of symmetry no line symmetry When a figure is rotated between 0° and 360°, the resulting figure coincides with the original. • The smallest angle through which the figure is rotated to coincide with itself is called the angle of rotational symmetry. • The number of times that you can get an identical figure when repeating the degree of rotation is called the order of the rotational Rotational symmetry. Symmetry angle: 180° 120° no rotational order: 2 3 symmetry Tell whether each figure has line symmetry. If so, draw all lines of symmetry. 1. 2. _________________________________________ ________________________________________ Tell whether each figure has rotational symmetry. If so, give the angle of rotational symmetry and the order of the symmetry. 3. 4. _________________________________________ ________________________________________ Original content Copyright © by Holt McDougal. Additions and changes to the original content are the responsibility of the instructor. 12-38 Holt Geometry Name ________________________________________ Date __________________ Class__________________ LESSON Reteach 12-5 Symmetry continued Three-dimensional figures can also have symmetry. Symmetry in Three Description Example Dimensions A plane can divide a figure into two Plane Symmetry congruent halves. There is a line about which a figure Symmetry About can be rotated so that the image and an Axis preimage are identical. -

Symmetry and Groups, and Crystal Structures

CHAPTER 3: SYMMETRY AND GROUPS, AND CRYSTAL STRUCTURES Sarah Lambart RECAP CHAP. 2 2 different types of close packing: hcp: tetrahedral interstice (ABABA) ccp: octahedral interstice (ABCABC) Definitions: The coordination number or CN is the number of closest neighbors of opposite charge around an ion. It can range from 2 to 12 in ionic structures. These structures are called coordination polyhedron. RECAP CHAP. 2 Rx/Rz C.N. Type Hexagonal or An ideal close-packing of sphere 1.0 12 Cubic for a given CN, can only be Closest Packing achieved for a specific ratio of 1.0 - 0.732 8 Cubic ionic radii between the anions and 0.732 - 0.414 6 Octahedral Tetrahedral (ex.: the cations. 0.414 - 0.225 4 4- SiO4 ) 0.225 - 0.155 3 Triangular <0.155 2 Linear RECAP CHAP. 2 Pauling’s rule: #1: the coodination polyhedron is defined by the ratio Rcation/Ranion #2: The Electrostatic Valency (e.v.) Principle: ev = Z/CN #3: Shared edges and faces of coordination polyhedra decreases the stability of the crystal. #4: In crystal with different cations, those of high valency and small CN tend not to share polyhedral elements #5: The principle of parsimony: The number of different sites in a crystal tends to be small. CONTENT CHAP. 3 (2-3 LECTURES) Definitions: unit cell and lattice 7 Crystal systems 14 Bravais lattices Element of symmetry CRYSTAL LATTICE IN TWO DIMENSIONS A crystal consists of atoms, molecules, or ions in a pattern that repeats in three dimensions. The geometry of the repeating pattern of a crystal can be described in terms of a crystal lattice, constructed by connecting equivalent points throughout the crystal. -

Math 203 Lecture 12 Notes: Tessellation – a Complete Covering

Math 103 Lecture #12 class notes page 1 Math 203 Lecture 12 notes: Note: this lecture is very “visual,” i.e., lots of pictures illustrating tessellation concepts Tessellation – a complete covering of a plane by one or more figures in a repeating pattern, with no overlapping. There are only 3 regular polygons that can tessellate a plane without being combined with other polygons: 1) equilateral triangle 2)square 3) regular hexagon. Once a basic design has been constructed, it may be extended through 3 different transformations: translation (slide), rotation (turn), or reflection (flip). FLIP: A figure has line symmetry if there is a line of reflection such that each point in the figure can be reflected into the figure. Line symmetry is sometimes referred to as mirror symmetry, axial, symmetry, bilateral symmetry, or reflective symmetry. The line of symmetry may be vertical, horizontal, or slanted. A figure may have one or more lines of symmetry, or none. Regular polygons with n sides have n lines of symmetry. Any line through the center of a circle is a line of symmetry for a circle. The circle is the most symmetric of all plane figures. The musical counterpart of horizontal reflection (across the y-axis) is called retrogression, while vertical reflection (across the x-axis) is called inversion. SLIDE: Translation (or slide translation) is one in which a figure moves without changing size or shape. It can be thought of as a motion that moves the figure from one location in a plane to another. The distance that the figure moves, or slides, is called the magnitude. -

Symmetry Groups of Platonic Solids

Symmetry Groups of Platonic Solids David Newcomb Stanford University 03/09/12 Problem Describe the symmetry groups of the Platonic solids, with proofs, and with an emphasis on the icosahedron. Figure 1: The five Platonic solids. 1 Introduction The first group of people to carefully study Platonic solids were the ancient Greeks. Although they bear the namesake of Plato, the initial proofs and descriptions of such solids come from Theaetetus, a contemporary. Theaetetus provided the first known proof that there are only five Platonic solids, in addition to a mathematical description of each one. The five solids are the cube, the tetrahedron, the octahedron, the icosahedron, and the dodecahedron. Euclid, in his book Elements also offers a more thorough description of the construction and properties of each solid. Nearly 2000 years later, Kepler attempted a beautiful planetary model involving the use of the Platonic solids. Although the model proved to be incorrect, it helped Kepler discover fundamental laws of the universe. Still today, Platonic solids are useful tools in the natural sciences. Primarily, they are important tools for understanding the basic chemistry of molecules. Additionally, dodecahedral behavior has been found in the shape of the earth's crust as well as the structure of protons in nuclei. 1 The scope of this paper, however, is limited to the mathematical dissection of the sym- metry groups of such solids. Initially, necessary definitions will be provided along with important theorems. Next, the brief proof of Theaetetus will be discussed, followed by a discussion of the broad technique used to determine the symmetry groups of Platonic solids. -

Symmetry in Chemistry - Group Theory

Symmetry in Chemistry - Group Theory Group Theory is one of the most powerful mathematical tools used in Quantum Chemistry and Spectroscopy. It allows the user to predict, interpret, rationalize, and often simplify complex theory and data. At its heart is the fact that the Set of Operations associated with the Symmetry Elements of a molecule constitute a mathematical set called a Group. This allows the application of the mathematical theorems associated with such groups to the Symmetry Operations. All Symmetry Operations associated with isolated molecules can be characterized as Rotations: k (a) Proper Rotations: Cn ; k = 1,......, n k When k = n, Cn = E, the Identity Operation n indicates a rotation of 360/n where n = 1,.... k (b) Improper Rotations: Sn , k = 1,....., n k When k = 1, n = 1 Sn = , Reflection Operation k When k = 1, n = 2 Sn = i , Inversion Operation In general practice we distinguish Five types of operation: (i) E, Identity Operation k (ii) Cn , Proper Rotation about an axis (iii) , Reflection through a plane (iv) i, Inversion through a center k (v) Sn , Rotation about an an axis followed by reflection through a plane perpendicular to that axis. Each of these Symmetry Operations is associated with a Symmetry Element which is a point, a line, or a plane about which the operation is performed such that the molecule's orientation and position before and after the operation are indistinguishable. The Symmetry Elements associated with a molecule are: (i) A Proper Axis of Rotation: Cn where n = 1,.... This implies n-fold rotational symmetry about the axis. -

1 2. the Lattice 2 3. the Components of Plane Symmetry Groups 3 4

PLANE SYMMETRY GROUPS MAXWELL LEVINE Abstract. This paper discusses plane symmetry groups, also known as pla- nar crystallographic groups or wallpaper groups. The seventeen unique plane symmetry groups describe the symmetries found in two-dimensional patterns such as those found on weaving patterns, the work of the artist M.C. Escher, and of course wallpaper. We shall discuss the fundamental components and properties of plane symmetry groups. Contents 1. What are Plane Symmetry Groups? 1 2. The Lattice 2 3. The Components of Plane Symmetry Groups 3 4. Generating Regions 5 5. The Crystallographic Restriction 6 6. Some Illustrated Examples 7 References 8 1. What are Plane Symmetry Groups? Since plane symmetry groups basically describe two-dimensional images, it is necessary to make sense of how such images can have isometries. An isometry is commonly understood as a distance- and shape-preserving map, but we must define isometries for this new context. 2 Definition 1.1. A planar image is a function Φ: R −! fc1; : : : ; cng where c1 ··· cn are colors. Note that we must make a distinction between a planar image and an image of a function. Definition 1.2. An isometry of a planar image Φ is an isometry f : R2 −! R2 such that 8~x 2 R2; Φ(f(~x)) = Φ(~x). Definition 1.3. A translation in the plane is a function T : ~x −! ~x + ~v where ~v is a vector in the plane. Two translations T1 : ~x −! ~x + ~v1 and T2 : ~x −! ~x + ~v2 are linearly independent n if ~v1 and ~v2 are linearly independent. -

Symmetry Groups

Symmetry Groups Symmetry plays an essential role in particle theory. If a theory is invariant under transformations by a symmetry group one obtains a conservation law and quantum numbers. For example, invariance under rotation in space leads to angular momentum conservation and to the quantum numbers j, mj for the total angular momentum. Gauge symmetries: These are local symmetries that act differently at each space-time point xµ = (t,x) . They create particularly stringent constraints on the structure of a theory. For example, gauge symmetry automatically determines the interaction between particles by introducing bosons that mediate the interaction. All current models of elementary particles incorporate gauge symmetry. (Details on p. 4) Groups: A group G consists of elements a , inverse elements a−1, a unit element 1, and a multiplication rule “ ⋅ ” with the properties: 1. If a,b are in G, then c = a ⋅ b is in G. 2. a ⋅ (b ⋅ c) = (a ⋅ b) ⋅ c 3. a ⋅ 1 = 1 ⋅ a = a 4. a ⋅ a−1 = a−1 ⋅ a = 1 The group is called Abelian if it obeys the additional commutation law: 5. a ⋅ b = b ⋅ a Product of Groups: The product group G×H of two groups G, H is defined by pairs of elements with the multiplication rule (g1,h1) ⋅ (g2,h2) = (g1 ⋅ g2 , h1 ⋅ h2) . A group is called simple if it cannot be decomposed into a product of two other groups. (Compare a prime number as analog.) If a group is not simple, consider the factor groups. Examples: n×n matrices of complex numbers with matrix multiplication. -

Convex Pentagons and Convex Hexagons That Can Form Rotationally Symmetric Tilings

Convex pentagons and convex hexagons that can form rotationally symmetric tilings Teruhisa SUGIMOTO1);2) 1) The Interdisciplinary Institute of Science, Technology and Art 2) Japan Tessellation Design Association E-mail: [email protected] Abstract In this study, the properties of convex hexagons that can generate rotationally symmet- ric edge-to-edge tilings are discussed. Since the convex hexagons are equilateral convex parallelohexagons, convex pentagons generated by bisecting the hexagons can form ro- tationally symmetric non-edge-to-edge tilings. In addition, under certain circumstances, tiling-like patterns with an equilateral convex polygonal hole at the center can be formed using these convex hexagons or pentagons. Keywords: pentagon, hexagon, tiling, rotationally symmetry, monohedral 1 Introduction In [3], Klaassen has demonstrated that there exist countless rotationally symmetric non-edge- to-edge tilings with convex pentagonal tiles.1;2 The convex pentagonal tiles are considered to be equivalent to bisecting equilateral convex parallelohexagons, which are hexagons where the opposite edges are parallel and equal in length. Thus, there exist countless rotationally symmetric edge-to-edge tilings with convex hexagonal tiles. Figure 1 shows a five-fold rotationally symmetric edge-to-edge tiling by a convex hexagonal tile (equilateral convex parallelohexagon) that satisfies the conditions, \A = D = 72◦;B = C = E = F = 144◦; a = b = c = d = e = f." Figure 2 shows examples of five-fold rotation- ally symmetric non-edge-to-edge tilings with convex pentagonal tiles generated by bisecting the equilateral convex parallelohexagon in Figure 1. Since the equilateral convex parallelo- hexagons have two-fold rotational symmetry, the number of ways to form convex pentagons generated by bisecting the convex hexagons (the dividing line needs to be a straight line that passes through the rotational center of the convex hexagon and intersects the opposite edge) arXiv:2005.10639v1 [math.MG] 19 May 2020 is countless. -

Elementary Functions Even and Odd Functions Reflection Across the Y-Axis Rotation About the Origin

Even and odd functions In this lesson we look at even and odd functions. A symmetry of a function is a transformation that leaves the graph unchanged. Consider the functions f(x) = x2 and g(x) = jxj whose graphs are drawn Elementary Functions below. Part 1, Functions Both graphs allow us to view the y-axis as a mirror. Lecture 1.4a, Symmetries of Functions: Even and Odd Functions A reflection across the y-axis leaves the function unchanged. This reflection is an example of a symmetry. Dr. Ken W. Smith Sam Houston State University 2013 Smith (SHSU) Elementary Functions 2013 1 / 25 Smith (SHSU) Elementary Functions 2013 2 / 25 Reflection across the y-axis Rotation about the origin A symmetry of a function can be represented by an algebra statement. What other symmetries might functions have? Reflection across the y-axis interchanges positive x-values with negative We can reflect a graph about the x-axis by replacing f(x) by −f(x): x-values, swapping x and −x: But could a graph be fixed by this reflection? Whenever a number is equal Therefore f(−x) = f(x): to its negative, then the number is zero. The statement, \For all x 2 R;f (−x) = f(x)" (x = −x =) 2x = 0 =) x = 0:) is equivalent to the statement So if f(x) = −f(x) then f(x) = 0: \The graph of the function is unchanged by reflection across the y-axis." But we could reflect a graph across first one axis and then the other. Reflecting a graph across the y-axis and then across the x-axis is equivalent to rotating the graph 180◦ around the origin.