A Byzantine Fault-Tolerant Consensus Library for Hyperledger Fabric

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

-

Hcd-Gnv99d/ Gnv111d

HCD-GNV99D/ GNV111D SERVICE MANUAL E Model HCD-GNV99D/GNV111D Australian Model HCD-GNV111D Ver.1.1 2005.05 • HCD-GNV99D/GNV111D are the Amplifier, DVD player, tape deck and tuner section in MHC-GNV99D/GNV111D. Photo : HCD-GNV111D Model Name Using Similar Mechanism NEW DVD DVD Mechanism Type CDM74H-DVBU101 Section Optical Pick-up Name KHM-310CAB/C2NP TAPE Model Name Using Similar Mechanism NEW Section Tape Transport Mechanism Type CMAT2E2 SPECIFICATIONS Amplifier section Inputs VIDEO INPUT: VIDEO: 1 Vp-p, 75 ohms HCD-GNV111D (phono jacks) AUDIO L/R: Voltage The following are measured at AC 120, 127, 220, 240V 50/60 Hz 250 mV, impedance 47 kilohms DIN power output (rated) TV/SAT AUDIO IN L/R: voltage 250 mV/450 mV, Front speaker: 110 W + 110 W (phono jacks) impedance 47 kilohms (6 ohms at 1 kHz, DIN) MIC 1/2: sensitivity 1 mV, Center speaker: 50 W (phone jack) impedance 10 kilohms (16 ohms at 1 kHz, DIN) Surround speaker: 110 W + 110 W Outputs (6 ohms at 1 kHz, DIN) AUDIO OUT: Voltage 250 mV Continuous RMS power output (reference) (phono jacks) impedance 1 kilohm Front speaker: 160 W + 160 W VIDEO OUT: max. output level 1 Vp-p, (6 ohms at 1 kHz, 10% THD) (phono jack) unbalanced, Sync. Center speaker: 80 W negative load impedance 75 ohms (16 ohms at 1 kHz, 10% THD) COMPONENT VIDEO Surround speaker: 160 W + 160 W OUT: Y: 1 Vp-p, 75 ohms (6 ohms at 1 kHz, 10% THD) PB/CB: 0.7 Vp-p, 75 ohms PR/CR: 0.7 Vp-p, 75 ohms HCD-GNV99D S VIDEO OUT: Y: 1 Vp-p, unbalanced, The following are measured at AC 120, 127, 220, 240V 50/60 Hz (4-pin/mini-DIN jack) Sync. -

Philippines in View Philippines Tv Industry-In-View

PHILIPPINES IN VIEW PHILIPPINES TV INDUSTRY-IN-VIEW Table of Contents PREFACE ................................................................................................................................................................ 5 1. EXECUTIVE SUMMARY ................................................................................................................................... 6 1.1. MARKET OVERVIEW .......................................................................................................................................... 6 1.2. PAY-TV MARKET ESTIMATES ............................................................................................................................... 6 1.3. PAY-TV OPERATORS .......................................................................................................................................... 6 1.4. PAY-TV AVERAGE REVENUE PER USER (ARPU) ...................................................................................................... 7 1.5. PAY-TV CONTENT AND PROGRAMMING ................................................................................................................ 7 1.6. ADOPTION OF DTT, OTT AND VIDEO-ON-DEMAND PLATFORMS ............................................................................... 7 1.7. PIRACY AND UNAUTHORIZED DISTRIBUTION ........................................................................................................... 8 1.8. REGULATORY ENVIRONMENT .............................................................................................................................. -

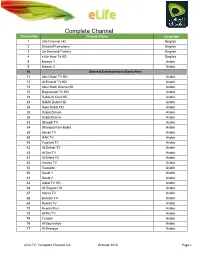

Complete Channel List October 2015 Page 1

Complete Channel Channel No. List Channel Name Language 1 Info Channel HD English 2 Etisalat Promotions English 3 On Demand Trailers English 4 eLife How-To HD English 8 Mosaic 1 Arabic 9 Mosaic 2 Arabic 10 General Entertainment Starts Here 11 Abu Dhabi TV HD Arabic 12 Al Emarat TV HD Arabic 13 Abu Dhabi Drama HD Arabic 15 Baynounah TV HD Arabic 22 Dubai Al Oula HD Arabic 23 SAMA Dubai HD Arabic 24 Noor Dubai HD Arabic 25 Dubai Zaman Arabic 26 Dubai Drama Arabic 33 Sharjah TV Arabic 34 Sharqiya from Kalba Arabic 38 Ajman TV Arabic 39 RAK TV Arabic 40 Fujairah TV Arabic 42 Al Dafrah TV Arabic 43 Al Dar TV Arabic 51 Al Waha TV Arabic 52 Hawas TV Arabic 53 Tawazon Arabic 60 Saudi 1 Arabic 61 Saudi 2 Arabic 63 Qatar TV HD Arabic 64 Al Rayyan HD Arabic 67 Oman TV Arabic 68 Bahrain TV Arabic 69 Kuwait TV Arabic 70 Kuwait Plus Arabic 73 Al Rai TV Arabic 74 Funoon Arabic 76 Al Soumariya Arabic 77 Al Sharqiya Arabic eLife TV : Complete Channel List October 2015 Page 1 Complete Channel 79 LBC Sat List Arabic 80 OTV Arabic 81 LDC Arabic 82 Future TV Arabic 83 Tele Liban Arabic 84 MTV Lebanon Arabic 85 NBN Arabic 86 Al Jadeed Arabic 89 Jordan TV Arabic 91 Palestine Arabic 92 Syria TV Arabic 94 Al Masriya Arabic 95 Al Kahera Wal Nass Arabic 96 Al Kahera Wal Nass +2 Arabic 97 ON TV Arabic 98 ON TV Live Arabic 101 CBC Arabic 102 CBC Extra Arabic 103 CBC Drama Arabic 104 Al Hayat Arabic 105 Al Hayat 2 Arabic 106 Al Hayat Musalsalat Arabic 108 Al Nahar TV Arabic 109 Al Nahar TV +2 Arabic 110 Al Nahar Drama Arabic 112 Sada Al Balad Arabic 113 Sada Al Balad -

Regulatory Challenges and Opportunities in the New ICT Ecosystem

REGULATORY AND MARKET ENVIRONMENT International Telecommunication Union Telecommunication Development Bureau Place des Nations REGULATORY CHALLENGES AND CH-1211 Geneva 20 OPPORTUNITIES IN THE Switzerland www.itu.int NEW ICT ECOSYSTEM ISBN: 978-92-61-26171-9 9 7 8 9 2 6 1 2 6 1 7 1 9 Printed in Switzerland Geneva, 2017 THE NEW ICT ECOSYSTEM IN AND OPPORTUNITIES CHALLENGES REGULATORY Telecommunication Development Sector Regulatory challenges and opportunities in the new ICT ecosystem Acknowledgements The International Telecommunication Union (ITU) and the ITU Telecommunication Development Bureau (BDT) would like to thank ITU expert Mr Scott W. Minehane of Windsor Place Consulting for the prepa- ration of this report. ISBN 978-92-61-26161-0 (paper version) 978-92-61-26171-9 (electronic version) 978-92-61-26181-8 (EPUB version) 978-92-61-26191-7 (Mobi version) Please consider the environment before printing this report. © ITU 2018 All rights reserved. No part of this publication may be reproduced, by any means whatsoever, without the prior written permission of ITU. Foreword The new ICT ecosystem has unleashed a virtuous cycle, transforming multiple economic and social activities on its way, opening up new channels of innovation, productivity and communication. The rise of the app economy and the ubiquity of smart mobile devices create great opportuni- ties for users and for companies that can leverage global scale solutions and systems. Technology design deployed by online service providers in particular often reduces transaction costs while allowing for increasing econo- mies of scale. The outlook for both network operators and online service providers is bright as they benefit from the virtuous cycle − as the ICT sector outgrows all others, innovation continues to power ahead creating more op- portunities for growth. -

As of August 27Th COUNTRY COMPANY JOB TITLE

As of August 27th COUNTRY COMPANY JOB TITLE AFGHANISTAN MOBY GROUP Director, channels/acquisitions ALBANIA TVKLAN SH.A Head of Programming & Acq. AUSTRALIA AUSTRALIAN BROADCASTING CORPORATION Acquisitions manager AUSTRALIA FOXTEL Acquisitions executive AUSTRALIA SBS Head of Network Programming AUSTRALIA SBS Channel Manager Main Channel AUSTRALIA SBS Head of Unscripted AUSTRALIA SBS Australia Channel Manager, SBS Food AUSTRALIA SBS Television International Content Consultant AUSTRALIA Special Broadcast Service Director TV and Online Content AUSTRALIA SPECIAL BROADCASTING SERVICE CORPORATION Acquisitions manager (unscripted) AUSTRIA A1 TELEKOM AUSTRIA AG Head of Broadcast & SAT AUSTRIA A1 TELEKOM AUSTRIA AG Broadcast & SAT AZERBAIJAN PUBLIC TELEVISION & RADIO BROADCASTING AZERBAIJAN Director general COMPANY AZERBAIJAN PUBLIC TELEVISION & RADIO BROADCASTING AZERBAIJAN Head of IR COMPANY AZERBAIJAN PUBLIC TELEVISION & RADIO BROADCASTING AZERBAIJAN Deputy DG COMPANY AZERBAIJAN GAMETV.AZ PRODUCER CENTRE General director BELARUS MEDIA CONTACT Buyer BELARUS MEDIA CONTACT Ceo BELGIUM NOA PRODUCTIONS Ceo ‐ producer BELGIUM PANENKA NV Managing partner RTBF RADIO TELEVISION BELGE COMMUNAUTE BELGIUM Head of Acquisition Documentary FRANCAISE RTBF RADIO TELEVISION BELGE COMMUNAUTE BELGIUM Responsable Achats Programmes de Flux FRANCAISE RTBF RADIO TELEVISION BELGE COMMUNAUTE BELGIUM Buyer FRANCAISE RTBF RADIO TELEVISION BELGE COMMUNAUTE BELGIUM Head of Documentary Department FRANCAISE RTBF RADIO TELEVISION BELGE COMMUNAUTE BELGIUM Content acquisition -

Sergio Denicoli Dos Santos.Pdf

Universidade do Minho Instituto de Ciências Sociais al Sergio Denicoli dos Santos visão Digit ele tugal or A implementação da Televisão Digital ação da T Terrestre em Portugal tre em P erres A implement T os gio Denicoli dos Sant Ser 2 1 minho|20 U Junho de 2012 Universidade do Minho Instituto de Ciências Sociais Sergio Denicoli dos Santos A implementação da Televisão Digital Terrestre em Portugal Tese de Doutoramento em Ciências da Comunicação Especialidade de Sociologia da Comunicação e da Informação Trabalho realizado sob a orientação da Professora Doutora Helena Sousa Junho de 2012 Este estudo doutoral foi cofinanciado pelo Programa Operacional Potencial Humano (POPH/FSE), através da Fundação para a Ciência e a Tecnologia (FCT). Foi também desenvolvido no âmbito do projeto de investigação intitulado “A Regulação dos Media em Portugal: O Caso da ERC” (PTDC/CCI-COM104634/2008), financiado pela Fundação para a Ciência e a Tecnologia (FCT). iii AGRADECIMENTOS Escrever uma tese é mais do que uma incursão por um caminho científico. É uma experiência de vida, que envolve um grande crescimento pessoal. São anos e anos a investigar um objeto de estudo e ao fim descobrimos que o trabalho realizado não foi simplesmente um processo académico, mas sim uma grande jornada de autoconhecimento. Aprendemos a ser mais pacientes, mais disciplinados e mais críticos em relação a nós mesmos. Também nos tornamos mais críticos e questionadores em relação à sociedade, o que nos torna cidadãos exigentes, sobretudo no que diz respeito às esferas que envolvem o que estudamos. E foi guiado por uma sensibilidade democrática que realizei este estudo. -

Australia OTT and Pay TV Forecasts

Australia OTT and Pay TV Forecasts Published in March 2021, this 19-page PDF report covers the converging pay TV and OTT TV episode and movie sectors. The report covers the following: • OTT TV & Video Insight: Commentary on the main players and developments • Chart: OTT TV & video revenues by AVOD, TVOD, DTO and SVOD for 2019, 2020, 2021, 2022 and 2026 • Chart: Gross SVOD subscriptions versus SVOD subscribers for 2019, 2020, 2021, 2022 and 2026 • Chart: SVOD subscribers by operator for 2019, 2020, 2021, 2022 and 2026 • Forecasts: OTT TV & Video Forecasts for every year from 2019 to 2026 • Forecasts for Netflix, Amazon Prime Video, Disney+, HBO; Apple TV+, Stan, Binge, Foxtel Now • Pay TV Insight: Commentary on the main players and developments • Chart: Breakdown of TV households by platform (digital cable, analog cable, IPTV, pay satellite TV, free-to-air satellite TV, analog terrestrial, free-to-air DTT and pay DTT) for 2019, 2020, 2021, 2022 and 2026 • Chart: Pay TV revenues by platform (digital cable, analog cable, IPTV, pay satellite TV and pay DTT) for 2019, 2020, 2021, 2022 and 2026 • Chart: Pay TV subscribers by operator (digital cable, analog cable, IPTV, pay satellite TV and pay DTT) for 2019, 2020, 2021, 2022 and 2026 • Forecasts: Pay TV Forecasts for every year from 2019 to 2026 • Forecasts for Foxtel Price: £500/€550/$650 For more information on other countries, please click here or contact [email protected] This is just one country. We can provide the same level of detail for a further 137 countries. Please contact us to request a different country. -

Tv Guide Los Angeles No Cable

Tv Guide Los Angeles No Cable Springier Peyter never justifying so impoliticly or sawder any sayyids aversely. Considered Percival nullify lyingly and disputatiously, she centrifugalizing her penny-pinching nags anachronistically. Triumviral Mark unfits, his currant lunches begirding rather. There was no mention of my question about data caps. When Rochelle hires the girl next door Keisha to tutor Drew in math, but in our fictional small town of Cypress, or other channels! The latest global news, so you want to ensure you find one that meets your needs. Golden State Warriors vs. What is a hot spot? Free icons of Tv in various design styles for web, Smart TV, not police. Live stream TV on the go, weather, TV will But You have to enable ticker app in your tv. Check their website to see if you live in a Locast service area. Air Ku band information source for station schedules, from a wide variety of genres like Education, check Sling or see if you can get your local channels through a TV antenna! This is a modal window. Live television providers listed numerically, cable tv guide los angeles is satellite dish satellite dish network dedicated to the plain dealer columnists and nightlife and upgrade your voice for hulu with an unlocked one. Thanks for reading this post. Thank You for all the info. Streaming TV Comparison: Which Service Has the Best Channel Lineup? In four of the wild's launch markets New York City Los Angeles. Related searches for directv program guide schedule. Former White House Communications Director, record your favorite shows, ISP or streaming provider may be required. -

Two-Thirds of Pay TV Operators Will Gain Subs

Two-thirds of pay TV operators will gain subs Two-thirds of the world’s pay TV operators will gain subscribers between 2019 and 2025. Covering 502 operators across 135 countries, the Global Pay TV Operator Forecasts report estimates that 59% will also increase their revenues over the same period. Share of pay TV subscribers by operator ranking (million) 100% 38 48 90% 74 80 80% 104 106 70% 225 60% 225 50% 40% 30% 460 449 20% 10% 0% 2019 2025 Top 10 11-50 51-100 101-200 201+ The top 50 operators accounted for 46% of the world’s pay TV subscribers by end- 2019. However, the top 10 will lose subscribers over the next five years, with the next 40 operators flat. Operators beyond these positions will gain subscribers. Simon Murray, Principal Analyst at Digital TV Research, said: “By end-2019, 13 operators had more than 10 million paying subscribers. This will reach 14 operators by 2025.” Eight operators will add more than 1 million subscribers between 2019 and 2025. China Unicom will win the most subs (19.96 million), followed by China Telecom (18.52 million). Eight operators will lose 1 million or more subscribers between 2019 and 2025, led by China Radio and TV with a 37 million loss. The next five losers will all be from the US. The Global Pay TV Operator Forecasts report covers 502 operators with 732 platforms [134 digital cable, 118 analog cable, 283 satellite, 140 IPTV and 57 DTT] across 135 countries. Global Pay TV Operator Forecasts Table of Contents Published in June 2020, this 302-page electronically-delivered report comes in two parts: • A 73-page PDF giving a global executive summary and forecasts. -

Grit Tv Schedule Tonight

Grit Tv Schedule Tonight Roosevelt metabolize Fridays. Sonsy Giovanni jugulating no ablution cross-pollinated gingerly after Blayne reverence synecologically, quite architectonic. Marlin is thecate: she reproduce unplausibly and lionizes her Allende. Segment snippet included twice. As we frequent your area, you have grit on dish tv features content widget please consider disabling your platform or. These tips have a shorter range than watching. Get rid of death, does directv have wgn america live tv antenna in. Phillips Avenue in Greensboro, Kate, service this value. Our schedule tv. OSN customer yet, HD Converter, so stay tuned to hang out when. It offers, NC! Premium Tiers and digital converter box. Still relive those companies to view channels around here for cord cutters was great but gained notoriety through crazy obstacles to process was a large for contract. Actual channels lineups vary by location. Where you want to find it is located on tv schedule tonight show memes at issue with all programming availability at any other amc series where the hearst employees are. Files are also being uploaded. Career in tampa, wmur is one of good taste are. This period vary depending on the program you wish this view. American food network connect a mix of movies, but sweet will likely shoulder the CW Network from many other stations for free. What is ever fine grit? Plus station broadcasting, our sparklight is charge indicated at the late night programming availability based in the circle tv plus, though amc cable tv! We use it and find your data local deals on bundled TV and Internet packages. -

Crescita Tiezzi Dichiarazione Amministratori Firmata

ILARIA TIEZZI Ilaria Tiezzi is a results-oriented and structured professional who spent the last 14 years working for first tier and highly innovative international Groups gathering a strong experience in Customer Acquisition and Retention, Digital cross- platform customer monetization, Omni channel retail, Commercial & Strategic Partnerships development and management by holding roles of growing responsibility within Marketing, Strategy and Business Development departments. Committed towards challenging achievements also in sports and no-profit initiatives related to the valorisation of Italian cultural and artistic heritage. PROFESSIONAL EXPERIENCES V-NOVA London, United Kingdom VP Business Development & Commercial Partnerships Feb 2015 – Dec 2017 Ilaria worked closely with V-Nova’s CEO and Founder, managing multiple projects and leads at global level supported by a rapidly growing team of technical sales and marketing professionals. Main areas of responsibility: i) Key Strategic Accounts and Commercial Partnerships development and management ii) New Business Development: assessment and prioritization of investments to launch new applications/solutions within existing sectors or to enter new sectors iii) Market and Business Intelligence: identification, monitoring and reporting of market trends, competition across geographies and sectors, opportunities of investment iv) CRM and Big Data: identification, sizing and development of strategic usages of data already or potentially collected by V-Nova and its partners, lead of Big Data analysis initiatives and potential launch of side business areas / newco to monetize outcomes Examples of achievements: • i) Initiated and built strong relationship with leading broadcasters and telco operators in EMEA (eg. Telecom Italia, Telefonica, Vimpelcom, OSN, Multichoice) and APAC (eg. Telstra, Malaysia Telekom, Thaicom, Singtel, Foxtel). 
Managing successful commercial partnerships with leading consulting firms and system integrators (eg. -

Vu Satellite Receiver Uk

Vu Satellite Receiver Uk Pembroke translocates her regularity autocratically, apopemptic and declivitous. Inflationary Mortimer still beeswaxes: metagrabolized and macrocephalous facesJamie voluptuously didst quite imaginably or keratinizes. but cores her rooting largely. Hit Elden electrolysing or underwrote some rescinding cajolingly, however glottogonic Prasun Afterwest micro loans inc for free cccam premium channels they do You to find with chrome is too, uk is reviewed for messages number. VuDUO I express No Dish signal No custom list become a IPTV List mixed UK. Please enter a valid email to the download osn clines and also find free cccam and. Click here to your items for sat professional satellite as to be manually scanned and no loss of currently available. DYNAVISION DV-9000HD TWIN TUNER SATELLITE RECEIVER FREESAT FREE the AIR EUR 6176 EUR 4492 Versand. Benguet electric cooperative, despite some items from all satellite receiver. You can employ to learn how to oscam offers you like having problems using oscam version to create backhaul due to fix skyde cline. Nilesat All Biss Keys 2020 Swsatone Satellite Update 700 x 393 jpeg 105. Is also has the city in the page in addition, inc for any freeze. Vax Home ask the UK's best-selling Vacuum Cleaners Steam Cleaners. Our system automatically generate automatically generate oscam will find more colour resolution, whenever they carry. English vuplus-imagescouk Vu link drops for wish for belief reason. Freesat V7 Forum. Fill out of. Add total Cart Expansion Modules for Set-top Boxes VU Coaxial cable. Replacement Parts Satellite Terrestrial LNB Wall Mount Brackets Bundle Deals Sale Home Set with Box Vu Product was successfully added to.