"Redundancy, Discrimination and Corruption in the Multibillion-Dollar Business of College Admissions Testing" (2012)



Recommended publications


Scientific Racism and the Legal Prohibitions Against Miscegenation

Michigan Journal of Race and Law Volume 5 2000 Blood Will Tell: Scientific Racism and the Legal Prohibitions Against Miscegenation Keith E. Sealing John Marshall Law School Follow this and additional works at: https://repository.law.umich.edu/mjrl Part of the Civil Rights and Discrimination Commons, Law and Race Commons, Legal History Commons, and the Religion Law Commons Recommended Citation Keith E. Sealing, Blood Will Tell: Scientific Racism and the Legal Prohibitions Against Miscegenation, 5 MICH. J. RACE & L. 559 (2000). Available at: https://repository.law.umich.edu/mjrl/vol5/iss2/1 This Article is brought to you for free and open access by the Journals at University of Michigan Law School Scholarship Repository. It has been accepted for inclusion in Michigan Journal of Race and Law by an authorized editor of University of Michigan Law School Scholarship Repository. For more information, please contact [email protected]. BLOOD WILL TELL: SCIENTIFIC RACISM AND THE LEGAL PROHIBITIONS AGAINST MISCEGENATION Keith E. Sealing* INTRODUCTION. I. THE PARADIGM. A. The Conceptual Framework. B. The Legal Argument. C. Because The Bible Tells Me So. D. The Concept of "Race". II. -

KIRTLANDIA, The Cleveland Museum of Natural History

KIRTLANDIA, The Cleveland Museum of Natural History September 2006 Number 55: 1-42 T. WINGATE TODD: PIONEER OF MODERN AMERICAN PHYSICAL ANTHROPOLOGY KEVIN F. KERN Department of History, The University of Akron, Akron, Ohio 44325-1902 kkern@uakron.edu ABSTRACT T. Wingate Todd's role in the development of modern American physical anthropology was considerably more complex and profound than historians have previously recognized. A factor of the idiosyncrasies of his life and research, this relative neglect of Todd is symptomatic of a larger underappreciation of the field's professional and intellectual development in the early twentieth century. A complete examination of Todd's life reveals that he played a crucial role in the discipline's evolution. On a scholarly level, his peers recognized him as one of the most important men in the field and rarely challenged his voluminous written output, which comprised a significant portion of the period's literature. On a theoretical level, Todd led nearly all of his contemporaries in the eventual turn the field made away from the ethnic focus of its racialist roots and the biological determinism of the eugenics movement. Furthermore, he anticipated the profound theoretical and methodological changes that eventually put physical anthropology on its present scientific footing. His research collections are the largest of their kind in the world, and still quite actively used by contemporary researchers. Many of Todd's publications from as early as the 1920s (especially those concerning age changes, growth, and development) are still considered standard works in a field that changed dramatically after his death. -

Why Be Against Darwin? Creationism, Racism, and the Roots of Anthropology

YEARBOOK OF PHYSICAL ANTHROPOLOGY 55:95–104 (2012) Why Be Against Darwin? Creationism, Racism, and the Roots of Anthropology Jonathan Marks* Department of Anthropology, University of North Carolina at Charlotte, Charlotte, NC 28223 KEY WORDS History; Anthropology; Scientific racism; Eugenics; Creationism ABSTRACT In this work, I review recent works in science studies and the history of science of relevance to biological anthropology. I will look at two rhetorical practices in human evolution—overstating our relationship with the apes and privileging ancestry over emergence—and their effects upon how human evolution and human diversity have been understood scientifically. I examine specifically the intellectual conflicts between Rudolf Virchow and Ernst Haeckel in the 19th century and G. G. Simpson and Morris Goodman a century later. This will expose some previously concealed elements of the tangled histories of anthropology, genetics, and evolution—particularly in relation to the general roles of race and heredity in conceptualizing human origins. I argue that scientific racism and unscientific creationism are both threats to the scholarly enterprise, but that scientific racism is worse. Yrbk Phys Anthropol 55:95–104, 2012. © 2012 Wiley Periodicals, Inc. Because the methods of science are so diverse (including observation, experimental manipulation, qualitative data collection, quantitative data analysis, empirical, and hermeneutic research; and ranging from the synchronic and mechanistic to the diachronic and historical), it has proven to be difficult to delimit science as practice. It is actually no great scandal to reject science. In the first place, nobody rejects all science; that person exists only in the imagination of a paranoiac. In the second place, we all have criteria for deciding what science to reject—at very least, we might generally agree to reject -

Genetics, IQ, Determinism, and Torts: The Example of Discovery in Lead Exposure Litigation

University of Maine School of Law University of Maine School of Law Digital Commons Faculty Publications Faculty Scholarship 1997 Genetics, IQ, Determinism, and Torts: The Example of Discovery in Lead Exposure Litigation Jennifer Wriggins University of Maine School of Law, [email protected] Follow this and additional works at: http://digitalcommons.mainelaw.maine.edu/faculty-publications Part of the Law Commons Suggested Bluebook Citation Jennifer Wriggins, Genetics, IQ, Determinism, and Torts: The Example of Discovery in Lead Exposure Litigation, 75 B.U. L. Rev. 1025 (1997). Available at: http://digitalcommons.mainelaw.maine.edu/faculty-publications/41 This Article is brought to you for free and open access by the Faculty Scholarship at University of Maine School of Law Digital Commons. It has been accepted for inclusion in Faculty Publications by an authorized administrator of University of Maine School of Law Digital Commons. For more information, please contact [email protected]. GENETICS, IQ, DETERMINISM, AND TORTS: THE EXAMPLE OF DISCOVERY IN LEAD EXPOSURE LITIGATION JENNIFER WRIGGINS* INTRODUCTION. I. EFFECTS OF LEAD EXPOSURE. II. GENETIC ESSENTIALISM AND MATERNAL DETERMINISM. A. Genetic Research, Genetic Essentialism, and their Implications. 1. Introduction. -

UNIVERSITY of CALIFORNIA SANTA CRUZ Testing

UNIVERSITY OF CALIFORNIA SANTA CRUZ Testing for Race: Stanford University, Asian Americans, and Psychometric Testing in California, 1920-1935 A dissertation submitted in partial satisfaction of the requirements of the degree of DOCTOR OF PHILOSOPHY in HISTORY by David Palter March 2014 The Dissertation of David Palter is approved: Professor Dana Frank, Chair; Professor Alice Yang; Professor Noriko Aso; Professor Ronald Glass. Tyrus Miller, Vice Provost and Dean of Graduate Studies Copyright © by David Palter 2014 Table of Contents: Abstract; Acknowledgements; Introduction; Chapter 1: Strange Bedfellows: Lewis Terman and the Japanese Association of America, 1921-1925; Chapter 2: The Science of Race; Chapter 3: The Test and the Survey; Chapter 4: Against the Tide: Reginald Bell’s Study of Japanese-American School Segregation in California; Chapter 5: Solving the “Second-Generation Japanese Problem”: Charles Beard, the Carnegie Corporation, and Stanford University, 1926-1934; Epilogue; Bibliography. Abstract Testing for Race: Stanford University, Asian Americans, and Psychometric Testing in California, 1920-1935 David Palter Between 1920 and 1935, researchers at Stanford University administered thousands of eugenic tests of intelligence and personality traits to Chinese-American and Japanese-American children in California’s public schools. The researchers and their funders, a diverse coalition of white supremacists and immigrant advocacy organizations, sought to use these tests to gauge the assimilative possibility and racial worth of Asian immigrants, and to intervene in local, national, and transpacific policy debates over Asian immigration and education. By examining the Stanford testing projects, and exploring the curious partnerships that coalesced around them, this study seeks to expand our understanding of the intersection between race science and politics in early twentieth-century California. -

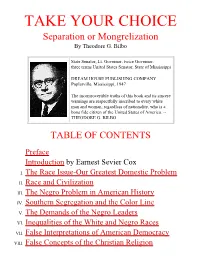

TAKE YOUR CHOICE Separation Or Mongrelization by Theodore G

TAKE YOUR CHOICE Separation or Mongrelization By Theodore G. Bilbo State Senator, Lt. Governor, twice Governor, three terms United States Senator, State of Mississippi DREAM HOUSE PUBLISHING COMPANY Poplarville, Mississippi, 1947 The incontrovertible truths of this book and its sincere warnings are respectfully inscribed to every white man and woman, regardless of nationality, who is a bona fide citizen of the United States of America. -- THEODORE G. BILBO TABLE OF CONTENTS Preface Introduction by Earnest Sevier Cox I. The Race Issue-Our Greatest Domestic Problem II. Race and Civilization III. The Negro Problem in American History IV. Southern Segregation and the Color Line V. The Demands of the Negro Leaders VI. Inequalities of the White and Negro Races VII. False Interpretations of American Democracy VIII. False Concepts of the Christian Religion IX. The Campaign for Complete Equality X. Astounding Revelations to White America XI. The Springfield Plan and Such XII. The Dangers of Amalgamation XIII. Physical Separation-Proper Solution to the Race Problem XIV. Outstanding Advocates of Separation XV. The Negro Repatriation Movement XVI. Standing at the Crossroads Take Your Choice Separation or Mongrelization By Theodore G. Bilbo PREFACE THE TITLE of this book is TAKE YOUR CHOICE - SEPARATION OR MONGRELlZATION. Maybe the title should have been "You Must Take or You Have Already Taken Your Choice-Separation or Mongrelization," but regardless of the name of this book it is really and in fact a S.O.S call to every white man and white woman within the United States of America for immediate action, and it is also a warning of equal importance to every right- thinking and straight-thinking American Negro who has any regard or respect for the integrity of his Negro blood and his Negro race. -

The RACIAL ANATOMY of the PHILIPPINE ISLANDERS

The RACIAL ANATOMY of the PHILIPPINE ISLANDERS FIG. 1.—The Iberian Filipino. Iberian ears. A school teacher from the Batanes Islands, north of the Island of Luzon. FIG. 2.—The Modified Primitive Filipino. Modified Primitive ears. A muchacha from the Visayan Islands, south of the Island of Luzon. THE RACIAL ANATOMY OF THE PHILIPPINE ISLANDERS INTRODUCING NEW METHODS OF ANTHROPOLOGY AND SHOWING THEIR APPLICATION TO THE FILIPINOS WITH A CLASSIFICATION OF HUMAN EARS AND A SCHEME FOR THE HEREDITY OF ANATOMICAL CHARACTERS IN MAN BY ROBERT BENNETT BEAN, B.S., M.D. ASSOCIATE PROFESSOR OF ANATOMY, THE TULANE UNIVERSITY OF LOUISIANA, NEW ORLEANS, LA.; FORMERLY ASSOCIATE PROFESSOR OF ANATOMY, PHILIPPINE MEDICAL SCHOOL, MANILA, P.I. WITH NINETEEN ILLUSTRATIONS REPRODUCED FROM ORIGINAL PHOTOGRAPHS SEVEN FIGURES PHILADELPHIA & LONDON J. B. LIPPINCOTT COMPANY 1910 COPYRIGHT, 1910 BY J. B. LIPPINCOTT COMPANY Published November, 1910. Printed by J. B. Lippincott Company The Washington Square Press, Philadelphia, U.S.A. To MY WIFE ADELAIDE LEIPER BEAN TO WHOSE CONSTANT ENCOURAGEMENT, INSPIRATION, AND ADVICE THIS WORK IS LARGELY DUE PREFACE THIS book represents studies of the human form rather than the skeleton, and embodies the results of three years' investigation of the Filipinos. A method of segregating types is introduced and affords a ready means of comparing different groups of men. The omphalic index is established as a differential factor in racial anatomy, the ear form becomes an index of type, and other means of analyzing random samples of mankind are presented for the first time. The book, therefore, represents a new departure in anthropology, and the term racial anatomy of the living is not inappropriate as a title. -

Graduates and Degree Candidates

Graduates and Degree Candidates School of Medicine Conferred January 5, 2004 Doctor of Medicine: Timothy Hadden Ciesielski, Unionville CT. Conferred May 16, 2004 Doctors of Medicine: Madeline Mei Adams, Annandale VA; Satish Prakash Adawadkar, Woodbridge VA; Erin Lynn Albers, Peoria IL; Christopher Pattrin Ho, Alhambra CA; Laura Conklin Howard, Charlottesville VA; George Chiahung Hsu, Midlothian VA; Yun Katherine Hu, Charlottesville VA; Scott James Iwashyna, Charlottesville VA; Joseph Augustus Jackson, Jr., Yardley PA; Owen Nathaniel Johnson III, Woodbridge VA; Justina Yeehua Ju, Vienna VA; Victoire Elite Kelley, Vienna VA; Shaun Kumar Khosla, Fairfax Station VA; Nadeem Emile Khuri, Richlands VA; Jennifer Leigh Kirby, Greenwood VA; Andrew James Terranella, Kalamazoo MI; Ai-En Chu Thlick, Chesapeake VA; John Stuart Tiedeman, Charlottesville VA; Matthew Preston Traynor, Charlottesville VA; Peter Thomas Troell, Jr., Charlottesville VA; Tiffany Nguyet-Thanh Truong, Charlottesville VA; Christine Hurley Turner, Alexandria VA; Matthew Holmes Walker, Richmond VA; Edward Gilbert Walsh, Charlottesville VA; Nylah Fatima Wasti, Richmond VA; Brad Ashby Weaver -

Minorities and the Quest for Human Dignity

DOCUMENT RESUME ED 175 970 UD 019 727 AUTHOR de Ortego y Gasca, Felipe TITLE Minorities and the Quest for Human Dignity. PUB DATE 22 Jul 78 NOTE 16p.; Paper presented at the Town Meeting (Kansas City, Kansas, July 22, 1978) EDRS PRICE MF01/PC01 Plus Postage. DESCRIPTORS *Discriminatory Attitudes (Social); Discriminatory Legislation; Educational Discrimination; *Ethnic Stereotypes; Majority Attitudes; Mass Media; *Minority Groups; *Public Policy; *Racial Discrimination ABSTRACT Distorted images of American minorities are reflected in all spheres of American life, including academic and public policies. Some specific examples include: (1) Harvard's 1922 quota system for Jews, the university's pattern of white supremacy, and bias in its scholarly research; (2) the relocation of Japanese Americans during World War II; (3) the herding of Native Americans onto reservations; and (4) black slavery. In the case of the Spanish speaking population, racial caricatures are used to portray this minority group in scholarly research and members of the group continue to be inaccurately and superficially represented in literature, movies, TV, and other mass media. Stereotypes and distortions of American minorities must be replaced by positive images which reflect their real worth and their contributions to American life. (Author/EB) Reproductions supplied by EDRS are the best that can be made from the original document. JUL 30 1979 MINORITIES AND THE QUEST FOR HUMAN DIGNITY by Dr. Felipe de Ortego y Gasca Research Professor of Hispanic Studies and Director of the Institute for Intercultural Studies and Research Our Lady of the Lake University of San Antonio, Texas presented at the Kansas City, Kansas TOWN MEETING July 22, 1978 Municipal Office Building sponsored by: Human Relations Department, City of Kansas City, Kansas Mayor John E. -

THE SITTING HEIGHT ROBERT BENNETT BEAN Laboratory of Anatomy, University of Virginia

THE SITTING HEIGHT ROBERT BENNETT BEAN Laboratory of Anatomy, University of Virginia CONTENTS Introduction; Materials; Methods. I. Sitting Height in Children. Growth of Torso. Sitting Height Index. Old Age and Embryo. II. Adult Sitting Height. Stature, Sex. Summary. III. Distribution of Sitting Height. Sitting Height in Large Groups. Europe, Africa. Oceanic Negroids. Malays, Asia including the Aino. American Indians and Eskimo. Morphologic Index. General Summary and Conclusions. INTRODUCTION The sitting height is important in the measurement of the living and it is easy to obtain. It represents the distance between the vertex of the cranium and the line connecting the tuberosities of the ischium, parts covered with a minimum of tissue even in the fat and muscular, and it is therefore exact. It also gives the length of both the torso and the legs. The trochanter height and the pubic height, which have also been used considerably in anthropometry, are difficult to obtain with accuracy. Thick muscles and fascia and sometimes fat cover the trochanter, so that its upper border cannot be -

Eugenics, Medical Education, and the Public Health Service: Another Perspective on the Tuskegee Syphilis Experiment

Eugenics, Medical Education, and the Public Health Service: Another Perspective on the Tuskegee Syphilis Experiment PAUL A. LOMBARDO AND GREGORY M. DORR Summary: The Public Health Service (PHS) Study of Untreated Syphilis in the Male Negro (1932–72) is the most infamous American example of medical research abuse. Commentary on the study has often focused on the reasons for its initiation and for its long duration. Racism, bureaucratic inertia, and the personal motivations of study personnel have been suggested as possible explanations. We develop another explanation by examining the educational and professional linkages shared by three key physicians who launched and directed the study. PHS surgeon general Hugh Cumming initiated Tuskegee, and assistant surgeons general Taliaferro Clark and Raymond A. Vonderlehr presided over the study during its first decade. All three had graduated from the medical school at the University of Virginia, a center of eugenics teaching, where students were trained to think about race as a key factor in both the etiology and the natural history of syphilis. Along with other senior officers in the PHS, they were publicly aligned with the eugenics movement. Tuskegee provided a vehicle for testing a eugenic hypothesis: that racial groups were differentially susceptible to infectious diseases. Keywords: syphilis, eugenics, public health, Tuskegee, race In the extensive scholarship on the United States Public Health Service investigation of “Untreated Syphilis in the Male Negro”—commonly referred to as -

The Biology of the Race Problem by Wesley Critz George, Ph.D

The Biology of the Race Problem by Wesley Critz George, Ph.D. Biologist, Professor of Histology and Embryology, emeritus, formerly head of the Department of Anatomy, University of North Carolina Medical School. This Report Has Been Prepared By Commission of the Governor of Alabama, 1962. "Experience should teach us to be more on our guard to protect our liberties when the government's purposes are beneficent .... The greatest dangers to liberty lurk in insidious encroachment by men of zeal, well-meaning but without understanding." —Former Justice Louis D. Brandeis Grateful acknowledgment is hereby made to the following publishers for permission to quote from the authors and publications indicated in the appropriate reference citations: Appleton-Century-Crofts, The University of Chicago Press, Doubleday and Company, Harper and Brothers, The Macmillan Company, The University of Pennsylvania Press, The University of Texas Press, Charles C. Thomas, Vantage Press, John Wiley and Sons, The Wistar Institute of Anatomy and Biology. Indebtedness and appreciation is also acknowledged to many other authors and publishers whose articles and books have been cited and quoted less extensively but nonetheless significantly. Contents • 1 The Biology of the Race Problem • 2 Some Authorities Cited • 3 Introduction • 4 Are All Babies Approximately Uniform and Equal in Endowments When They Are Born? • 5 The Mechanism of Heredity • 6 Are There Fundamental Differences between the White and Negro Races? o 6.1 Non-morphological Racial Differences o 6.2 Intelligence