A Counterexample to Modus Tollens

Recommended publications

On Basic Probability Logic Inequalities †

Mathematics, Article. Marija Boričić Joksimović, Faculty of Organizational Sciences, University of Belgrade, Jove Ilića 154, 11000 Belgrade, Serbia; [email protected]

† The conclusions given in this paper were partially presented at the European Summer Meetings of the Association for Symbolic Logic, Logic Colloquium 2012, held in Manchester on 12–18 July 2012.

Citation: Boričić Joksimović, M. On Basic Probability Logic Inequalities. Mathematics 2021, 9, 1409.

Abstract: We give some simple examples of applying some of the well-known elementary probability theory inequalities and properties in the field of logical argumentation. A probabilistic version of the hypothetical syllogism inference rule is as follows: if propositions A, B, C, A → B, and B → C have probabilities a, b, c, r, and s, respectively, then for probability p of A → C, we have f(a, b, c, r, s) ≤ p ≤ g(a, b, c, r, s), for some functions f and g of given parameters. In this paper, after a short overview of known rules related to conjunction and disjunction, we propose some probabilized forms of the hypothetical syllogism inference rule, with the best possible bounds for the probability of conclusion, covering simultaneously the probabilistic versions of both modus ponens and modus tollens rules, as already considered by Suppes, Hailperin, and Wagner.

Keywords: inequality; probability logic; inference rule

MSC: 03B48; 03B05; 60E15; 26D20; 60A05

1. Introduction

The main part of probabilization of logical inference rules is defining the corresponding best possible bounds for probabilities of propositions. Some of them, connected with conjunction and disjunction, can be obtained immediately from the well-known Boole's …
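To make the idea of "bounds for the probability of the conclusion" concrete, here is a minimal sketch for the two simplest rules the abstract mentions, modus ponens and modus tollens. It assumes the conditional A → B is read as the material conditional (not-A or B); under that reading the interval follows from elementary probability, as the comments explain. The function names `mp_bounds` and `mt_bounds` are hypothetical and do not come from the paper, and the paper's own hypothetical-syllogism bounds f and g are not reproduced here.

```python
from typing import Tuple

TOL = 1e-12  # tolerance for floating-point round-off


def _conclusion_bounds(x: float, r: float) -> Tuple[float, float]:
    """Shared interval [x + r - 1, r] used by both rules below."""
    if not (0.0 <= x <= 1.0 and 0.0 <= r <= 1.0):
        raise ValueError("probabilities must lie in [0, 1]")
    if x + r < 1.0 - TOL:
        raise ValueError("incoherent inputs: the two probabilities must sum to at least 1")
    return (max(0.0, x + r - 1.0), r)


def mp_bounds(a: float, r: float) -> Tuple[float, float]:
    """Bounds on P(B) given P(A) = a and P(A -> B) = r (material conditional)."""
    # not-A entails A -> B, so coherent inputs satisfy r >= 1 - a.
    # Lower bound: (A and (A -> B)) entails B, and P(A and (A -> B)) >= a + r - 1.
    # Upper bound: B entails A -> B, so P(B) <= r.
    return _conclusion_bounds(a, r)


def mt_bounds(r: float, not_b: float) -> Tuple[float, float]:
    """Bounds on P(not A) given P(A -> B) = r and P(not B) = not_b."""
    # B entails A -> B, so coherent inputs satisfy r >= 1 - not_b.
    # Lower bound: (not-B and (A -> B)) entails not-A.
    # Upper bound: not-A entails A -> B, so P(not A) <= r.
    return _conclusion_bounds(not_b, r)


if __name__ == "__main__":
    print("modus ponens :", mp_bounds(a=0.9, r=0.8))
    print("modus tollens:", mt_bounds(r=0.8, not_b=0.9))
```

With premises of probability 0.9 and 0.8, both calls give an interval of roughly 0.7 to 0.8 (up to floating-point rounding): the conclusion can be less probable than either premise, but not arbitrarily so.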

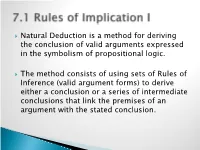

7.1 Rules of Implication I

Natural Deduction is a method for deriving the conclusion of valid arguments expressed in the symbolism of propositional logic. The method consists of using sets of Rules of Inference (valid argument forms) to derive either a conclusion or a series of intermediate conclusions that link the premises of an argument with the stated conclusion.

The First Four Rules of Inference (premises before the slash, conclusion after it):
◦ Modus Ponens (MP): p ⊃ q, p / q
◦ Modus Tollens (MT): p ⊃ q, ~q / ~p
◦ Pure Hypothetical Syllogism (HS): p ⊃ q, q ⊃ r / p ⊃ r
◦ Disjunctive Syllogism (DS): p v q, ~p / q

Common strategies for constructing a proof involving the first four rules:
◦ Always begin by attempting to find the conclusion in the premises. If the conclusion is not present in its entirety in the premises, look at the main operator of the conclusion. This will provide a clue as to how the conclusion should be derived.
◦ If the conclusion contains a letter that appears in the consequent of a conditional statement in the premises, consider obtaining that letter via modus ponens.
◦ If the conclusion contains a negated letter and that letter appears in the antecedent of a conditional statement in the premises, consider obtaining the negated letter via modus tollens.
◦ If the conclusion is a conditional statement, consider obtaining it via pure hypothetical syllogism.
◦ If the conclusion contains a letter that appears in a disjunctive statement in the premises, consider obtaining that letter via disjunctive syllogism.

Four Additional Rules of Inference:
◦ Constructive Dilemma (CD): (p ⊃ q) • (r ⊃ s), p v r / q v s
◦ Simplification (Simp): p • q / p
◦ Conjunction (Conj): p, q / p • q
◦ Addition (Add): p / p v q

Common strategies involving the additional rules of inference:
◦ If the conclusion contains a letter that appears in a conjunctive statement in the premises, consider obtaining that letter via simplification. …
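Each of the eight rules above is a valid argument form, which can be confirmed mechanically: a form is valid when no assignment of truth values makes every premise true and the conclusion false. The following is a small sketch of that check; the names `is_valid` and `RULES` are illustrative choices, and every form is written over four variables p, q, r, s for uniformity even when it only uses two.

```python
from itertools import product


def implies(a: bool, b: bool) -> bool:
    """Material conditional: false only when a is true and b is false."""
    return (not a) or b


def is_valid(premises, conclusion, n_vars: int = 4) -> bool:
    """True if no truth assignment makes all premises true and the conclusion false."""
    for values in product([True, False], repeat=n_vars):
        if all(prem(*values) for prem in premises) and not conclusion(*values):
            return False
    return True


# name -> (list of premises, conclusion); each is a function of p, q, r, s.
RULES = {
    "MP": ([lambda p, q, r, s: implies(p, q), lambda p, q, r, s: p],
           lambda p, q, r, s: q),
    "MT": ([lambda p, q, r, s: implies(p, q), lambda p, q, r, s: not q],
           lambda p, q, r, s: not p),
    "HS": ([lambda p, q, r, s: implies(p, q), lambda p, q, r, s: implies(q, r)],
           lambda p, q, r, s: implies(p, r)),
    "DS": ([lambda p, q, r, s: p or q, lambda p, q, r, s: not p],
           lambda p, q, r, s: q),
    "CD": ([lambda p, q, r, s: implies(p, q) and implies(r, s),
            lambda p, q, r, s: p or r],
           lambda p, q, r, s: q or s),
    "Simp": ([lambda p, q, r, s: p and q], lambda p, q, r, s: p),
    "Conj": ([lambda p, q, r, s: p, lambda p, q, r, s: q],
             lambda p, q, r, s: p and q),
    "Add": ([lambda p, q, r, s: p], lambda p, q, r, s: p or q),
}

if __name__ == "__main__":
    for name, (premises, conclusion) in RULES.items():
        print(f"{name}: valid = {is_valid(premises, conclusion)}")
```

Running the script reports every rule as valid. Swapping in an invalid pattern, for example premises p ⊃ q and q with conclusion p, makes `is_valid` return `False`.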

Chapter 9: Answers and Comments Step 1 Exercises 1. Simplification. 2. Absorption. 3. See Textbook. 4. Modus Tollens. 5. Modus P

Chapter 9: Answers and Comments

Step 1 Exercises

1. Simplification. 2. Absorption. 3. See textbook. 4. Modus Tollens. 5. Modus Ponens. 6. Simplification. 7. X -- A very common student mistake; can't use Simplification unless the major connective of the premise is a conjunction. 8. Disjunctive Syllogism. 9. X -- Fallacy of Denying the Antecedent. 10. X 11. Constructive Dilemma. 12. See textbook. 13. Hypothetical Syllogism. 14. Hypothetical Syllogism. 15. Conjunction. 16. See textbook. 17. Addition. 18. Modus Ponens. 19. X -- Fallacy of Affirming the Consequent. 20. Disjunctive Syllogism. 21. X -- not HS, the (D v G) does not match (D v C). This is deliberate, to make sure you don't just focus on generalities and that you check that the entire form fits. 22. Constructive Dilemma. 23. See textbook. 24. Simplification. 25. Modus Ponens. 26. Modus Tollens. 27. See textbook. 28. Disjunctive Syllogism. 29. Modus Ponens. 30. Disjunctive Syllogism.

Step 2 Exercises

#1
1. Z • A
2. (Z v B) ⊃ C / Z • C
3. Z (1) Simp.
4. Z v B (3) Add.
5. C (2)(4) MP
6. Z • C (3)(5) Conj.

For line 4 it is easy to get locked into line 2 and strategy 1. But they do not work.

#2
1. K ⊃ (B v I)
2. K
3. ~B
4. I ⊃ (~T • N)
5. N ⊃ T / ~N
6. B v I (1)(2) MP
7. I (6)(3) DS
8. ~T • N (4)(7) MP
9. ~T (8) Simp.
10. ~N (5)(9) MT

#3 See textbook.

#4
1. H ⊃ I
2. I ⊃ J
3. …
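The answers marked X for Denying the Antecedent and Affirming the Consequent can be illustrated by listing the truth assignments that make the premises true and the conclusion false. This is a brief sketch, not part of the answer key; the helper name `counterexamples` is hypothetical.

```python
from itertools import product


def implies(a: bool, b: bool) -> bool:
    """Material conditional: false only when a is true and b is false."""
    return (not a) or b


def counterexamples(premises, conclusion):
    """Return every (p, q) that makes all premises true and the conclusion false."""
    found = []
    for p, q in product([True, False], repeat=2):
        if all(prem(p, q) for prem in premises) and not conclusion(p, q):
            found.append((p, q))
    return found


# Fallacy of Denying the Antecedent: p -> q, ~p, therefore ~q
denying_antecedent = counterexamples(
    [lambda p, q: implies(p, q), lambda p, q: not p],
    lambda p, q: not q,
)

# Fallacy of Affirming the Consequent: p -> q, q, therefore p
affirming_consequent = counterexamples(
    [lambda p, q: implies(p, q), lambda p, q: q],
    lambda p, q: p,
)

# Modus Tollens for comparison: p -> q, ~q, therefore ~p
modus_tollens = counterexamples(
    [lambda p, q: implies(p, q), lambda p, q: not q],
    lambda p, q: not p,
)

if __name__ == "__main__":
    print("Denying the antecedent  :", denying_antecedent)
    print("Affirming the consequent:", affirming_consequent)
    print("Modus tollens           :", modus_tollens)
```

Both fallacies fail on the same assignment, p false and q true, while modus tollens has no counterexample at all.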

Argument Forms and Fallacies

6.6 Common Argument Forms and Fallacies

1. Common Valid Argument Forms: In the previous section (6.4), we learned how to determine whether or not an argument is valid using truth tables. There are certain forms of valid and invalid argument that are extremely common. If we memorize some of these common argument forms, it will save us time because we will be able to immediately recognize whether or not certain arguments are valid or invalid without having to draw out a truth table. Let's begin:

1. Disjunctive Syllogism: The following argument is valid: "The coin is either in my right hand or my left hand. It's not in my right hand. So, it must be in my left hand." Let "R" = "The coin is in my right hand" and let "L" = "The coin is in my left hand". The argument in symbolic form is this:

R ˅ L
~R
__________
L

Any argument with the form just stated is valid. This form of argument is called a disjunctive syllogism. Basically, the argument gives you two options and says that, since one option is FALSE, the other option must be TRUE.

2. Pure Hypothetical Syllogism: The following argument is valid: "If you hit the ball in on this turn, you'll get a hole in one; and if you get a hole in one you'll win the game. So, if you hit the ball in on this turn, you'll win the game." Let "B" = "You hit the ball in on this turn", "H" = "You get a hole in one", and "W" = "You win the game". …

The Development of Modus Ponens in Antiquity: From Aristotle to the 2nd Century AD

The Development of Modus Ponens in Antiquity: From Aristotle to the 2nd Century AD. Author(s): Susanne Bobzien. Source: Phronesis, Vol. 47, No. 4 (2002), pp. 359-394. Published by: BRILL. Stable URL: http://www.jstor.org/stable/4182708

ABSTRACT: 'Aristotelian logic', as it was taught from late antiquity until the 20th century, commonly included a short presentation of the argument forms modus (ponendo) ponens, modus (tollendo) tollens, modus ponendo tollens, and modus tollendo ponens. …

CMSC 250 Discrete Structures

CMSC 250 Discrete Structures, Spring 2019

Recall Conditional Statement

Definition: A sentence of the form "If p then q" is symbolically denoted by p → q.

Example: If you show up for work Monday morning, then you will get the job.

| p | q | p → q |
|---|---|-------|
| T | T | T |
| T | F | F |
| F | T | T |
| F | F | T |

Definitions for Conditional Statement

Definition: The contrapositive of p → q is ∼q → ∼p. The converse of p → q is q → p. The inverse of p → q is ∼p → ∼q.

Contrapositive

Definition: The contrapositive of a conditional statement is obtained by transposing its conclusion with its premise and inverting. So, the contrapositive of p → q is ∼q → ∼p.

Example: Original statement: If I live in Denver, then I live in Colorado. Contrapositive: If I don't live in Colorado, then I don't live in Denver.

Theorem: The contrapositive of an implication is equivalent to the original statement.

Converse

Definition: The converse of a conditional statement is obtained by transposing its conclusion with its premise. So, the converse of p → q is q → p.

Example: Original statement: If I live in Denver, then I live in Colorado. Converse: If I live in Colorado, then I live in Denver. The converse is NOT logically equivalent to the original.

Inverse

Definition: The inverse of a conditional statement is obtained by inverting its premise and conclusion. So, the inverse of p → q is ∼p → ∼q.

Example: Original statement: If I live in Denver, then I live in Colorado. Inverse: If I do not live in Denver, then I do not live in Colorado. …
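The equivalence claims above can be checked by comparing truth tables. The sketch below is an illustration rather than part of the slides; the helper name `equivalent` is hypothetical. It compares the original conditional with its contrapositive, converse, and inverse on all four assignments of p and q.

```python
from itertools import product


def implies(a: bool, b: bool) -> bool:
    """Material conditional: false only when a is true and b is false."""
    return (not a) or b


def equivalent(f, g) -> bool:
    """True if f and g agree on every truth assignment of (p, q)."""
    return all(f(p, q) == g(p, q) for p, q in product([True, False], repeat=2))


def original(p, q):
    return implies(p, q)          # p -> q


def contrapositive(p, q):
    return implies(not q, not p)  # ~q -> ~p


def converse(p, q):
    return implies(q, p)          # q -> p


def inverse(p, q):
    return implies(not p, not q)  # ~p -> ~q


if __name__ == "__main__":
    print("original == contrapositive:", equivalent(original, contrapositive))
    print("original == converse      :", equivalent(original, converse))
    print("original == inverse       :", equivalent(original, inverse))
    print("converse == inverse       :", equivalent(converse, inverse))
```

Only the contrapositive matches the original. The converse and inverse differ from the original but agree with each other, since the inverse is the contrapositive of the converse.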

A New Probabilistic Explanation of the Modus Ponens–Modus Tollens Asymmetry

A New Probabilistic Explanation of the Modus Ponens–Modus Tollens Asymmetry

Benjamin Eva ([email protected]), Department of Philosophy, University of Konstanz, 78464 Konstanz (Germany)
Stephan Hartmann ([email protected]), Munich Center for Mathematical Philosophy, LMU Munich, 80539 Munich (Germany)
Henrik Singmann ([email protected]), Department of Psychology, University of Warwick, Coventry, CV4 7AL (UK)

Abstract: A consistent finding in research on conditional reasoning is that individuals are more likely to endorse the valid modus ponens (MP) inference than the equally valid modus tollens (MT) inference. This pattern holds for both abstract task and probabilistic task. The existing explanation for this phenomenon within a Bayesian framework (e.g., Oaksford & Chater, 2008) accounts for this asymmetry by assuming separate probability distributions for both MP and MT. We propose a novel explanation within a computational-level Bayesian account of reasoning according to which "argumentation is learning".

… invalid inferences (i.e., they accept more valid than invalid inferences). However, their behavior is clearly not in line with the norms of classical logic. Whereas participants tend to unanimously accept the valid MP, the acceptance rate for the equally valid MT inference scheme is considerably lower. In a meta-analysis of the abstract task, Schroyens, Schaeken, and d'Ydewalle (2001) found acceptance rates of .97 for MP compared to acceptance rates of .74 for MT. This MP-MT asymmetry will be the main focus of …

Modus Ponens and the Logic of Dominance Reasoning

Modus Ponens and the Logic of Dominance Reasoning. Nate Charlow.

Abstract: If modus ponens is valid, you should take up smoking.

1 Introduction

Some recent work has challenged two principles thought to govern the logic of the indicative conditional: modus ponens (Kolodny & MacFarlane 2010) and modus tollens (Yalcin 2012). There is a fairly broad consensus in the literature that Kolodny and MacFarlane's challenge can be avoided, if the notion of logical consequence is understood aright (Willer 2012; Yalcin 2012; Bledin 2014). The viability of Yalcin's counterexample to modus tollens has meanwhile been challenged on the grounds that it fails to take proper account of context-sensitivity (Stojnić forthcoming).

This paper describes a new counterexample to modus ponens, and shows that strategies developed for handling extant challenges to modus ponens and modus tollens fail for it. It diagnoses the apparent source of the counterexample: there are bona fide instances of modus ponens that fail to represent deductively reasonable modes of reasoning. The diagnosis might seem trivial (what else, after all, could a counterexample to modus ponens consist in?) but, appropriately understood, it suggests something like a method for generating a family of counterexamples to modus ponens. We observe that a family of uncertainty-reducing "Dominance" principles must implicitly forbid deducing a conclusion (that would otherwise be sanctioned by modus ponens) when certain conditions on a background object (e.g., a background decision problem or representation of relevant information) are not met. …

Logic Taking a Step Back Some Modeling Paradigms Outline

CS221: Artificial Intelligence (Autumn 2012), Percy Liang

Taking a step back

[Figure: diagram with the labels data, Learning, model, query, Inference, predictions]

Models describe how the world works (relevant to some task). What type of models?

Outline
◦ Languages and expressiveness
◦ Propositional logic
  ◦ Specification of propositional logic
  ◦ Inference algorithms for propositional logic
    ◦ Inference in propositional logic with only definite clauses
    ◦ Inference in full propositional logic
◦ First-order logic
  ◦ Specification of first-order logic
  ◦ Inference algorithms for first-order logic
    ◦ Inference in first-order logic with only definite clauses
    ◦ Inference in full first-order logic
◦ Other logics
◦ Logic and probability

Some modeling paradigms
◦ State space models: search problems, MDPs, games. Applications: route finding, game playing, etc. Think in terms of states, actions, and costs.
◦ Variable-based models: CSPs, Markov networks, Bayesian networks. Applications: scheduling, object tracking, medical diagnosis, etc. Think in terms of variables and factors.
◦ Logical models: propositional logic, first-order logic. Applications: proving theorems, program verification, reasoning …

Module 3: Proof Techniques

Module 3: Proof Techniques

Theme 1: Rule of Inference

Let us consider the following example.

Example 1: Read the following "obvious" statements: All Greeks are philosophers. Socrates is a Greek. Therefore, Socrates is a philosopher. This conclusion seems to be perfectly correct, and quite obvious to us. However, we cannot justify it rigorously since we do not have any rule of inference. When the chain of implications is more complicated, as in the example below, a formal method of inference is very useful.

Example 2: Consider the following hypotheses:
1. It is not sunny this afternoon and it is colder than yesterday.
2. We will go swimming only if it is sunny.
3. If we do not go swimming, then we will take a canoe trip.
4. If we take a canoe trip, then we will be home by sunset.

From these hypotheses, we should conclude: We will be home by sunset. We shall come back to it in Example 5.

The above conclusions are examples of syllogisms, defined as a "deductive scheme of a formal argument consisting of a major and a minor premise and a conclusion". Here is what encyclopedia.com has to say about syllogisms: Syllogism, a mode of argument that forms the core of the body of Western logical thought. Aristotle defined syllogistic logic, and his formulations were thought to be the final word in logic; they underwent only minor revisions in the subsequent 2,200 years.

We shall now discuss rules of inference for propositional logic. They are listed in Table 1. These rules provide justifications for steps that lead logically to a conclusion from a set of hypotheses. …
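Before any formal derivation, the claim in Example 2 can be sanity-checked semantically: the conclusion follows if it is true in every truth assignment that satisfies all four hypotheses. The sketch below does this by brute force over the five propositions. The variable names and the `entails` helper are assumptions made for illustration, and this is a truth-table check, not the rule-by-rule derivation the module refers to as Example 5.

```python
from itertools import product


def implies(a: bool, b: bool) -> bool:
    """Material conditional: false only when a is true and b is false."""
    return (not a) or b


# Propositions, in order: sunny, colder, swim, canoe, home (home by sunset).
HYPOTHESES = [
    lambda sunny, colder, swim, canoe, home: (not sunny) and colder,    # 1
    lambda sunny, colder, swim, canoe, home: implies(swim, sunny),      # 2: swim only if sunny
    lambda sunny, colder, swim, canoe, home: implies(not swim, canoe),  # 3
    lambda sunny, colder, swim, canoe, home: implies(canoe, home),      # 4
]


def conclusion(sunny, colder, swim, canoe, home):
    """We will be home by sunset."""
    return home


def entails(hypotheses, goal, n_vars: int = 5) -> bool:
    """True if the goal holds in every assignment satisfying all hypotheses."""
    for values in product([True, False], repeat=n_vars):
        if all(h(*values) for h in hypotheses) and not goal(*values):
            return False
    return True


if __name__ == "__main__":
    print("Hypotheses 1-4 entail the conclusion:", entails(HYPOTHESES, conclusion))
    without_3 = HYPOTHESES[:2] + HYPOTHESES[3:]
    print("Still entailed without hypothesis 3 :", entails(without_3, conclusion))
```

The first check succeeds and the second fails, which shows that hypothesis 3 is doing real work: without it, nothing links not swimming to the canoe trip.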

Homework 8 Due Wednesday, 8 April

CIS 194: Homework 8. Due Wednesday, 8 April

Propositional Logic

In this section, you will prove some theorems in Propositional Logic, using the Haskell compiler to verify your proofs. The Curry-Howard isomorphism states that types are equivalent to theorems and programs are equivalent to proofs. There is no need to run your code or even write test cases; once your code type checks, the proof will be accepted!

Implication

We will begin by defining some logical connectives. The first logical connective is implication. Luckily, implication is already built in to the Haskell type system in the form of arrow types. The type `p -> q` is equivalent to the logical statement P → Q. We can now prove a very simple theorem called Modus Ponens. Modus Ponens states that if P implies Q and P is true, then Q is true. It is often written as: from P → Q and P, infer Q (Figure 1: Modus Ponens). We can write this down in Haskell as well: `modus_ponens :: (p -> q) -> p -> q`, `modus_ponens pq p = pq p`. Notice that the type of `modus_ponens` is identical to the Prelude function `($)`. We can therefore simplify the proof: `modus_ponens :: (p -> q) -> p -> q`, `modus_ponens = ($)`. (Footnote 1: This is a very interesting observation. The logical theorem Modus Ponens is exactly equivalent to function application!)

Disjunction

The disjunction (also known as "or") of the propositions P and Q is often written as P ∨ Q. In Haskell, we will define disjunction as follows: `-- Logical Disjunction`, `data p \/ q = Left p | Right q`. The disjunction has two constructors, `Left` and `Right`. …

Critical Thinking

Critical Thinking. Mark Storey, Bellevue College.

Copyright (c) 2013 Mark Storey. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is found at http://www.gnu.org/copyleft/fdl.txt.

Contents

Part 1
Chapter 1: Thinking Critically about the Logic of Arguments
Chapter 2: Deduction and Induction
Chapter 3: Evaluating Deductive Arguments
Chapter 4: Evaluating Inductive Arguments
Chapter 5: Deductive Soundness and Inductive Cogency
Chapter 6: The Counterexample Method

Part 2
Chapter 7: Fallacies
Chapter 8: Arguments from Analogy

Part 3
Chapter 9: Categorical Patterns
Chapter 10: Propositional Patterns

Part 4
Chapter 11: Causal Arguments
Chapter 12: Hypotheses
Chapter 13: Definitions and Analyses
Chapter 14: Probability

Chapter 1: Thinking Critically about the Logic of Arguments

Logic and critical thinking together make up the systematic study of reasoning, and reasoning is what we do when we draw a conclusion on the basis of other claims. In other words, reasoning is used when you infer one claim on the basis of another. For example, if you see a great deal of snow falling from the sky outside your bedroom window one morning, you can reasonably conclude that it's probably cold outside. Or, if you see a man smiling broadly, you can reasonably conclude that he is at least somewhat happy.