Blackett Review of High Impact Low Probability Risks

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

The History Group's Silver Jubilee

History of Meteorology and Physical Oceanography Special Interest Group Newsletter 1, 2010 CONTENTS Annual Report (p. 1); Committee members (p. 2); Mrs Jean Ludlam (p. 2); The 2010 Summer Meeting (p. 3); Report of meeting on 18 November (p. 4); Which papers have been cited? (p. 10); Don’t try this at home! (p. 10); More Richard Gregory reminiscences (p. 11); Storm warnings for seafarers: Part 2 (p. 13); Swedish storm warnings (p. 17); Rikitea meteorological station (p. 19); More on the D-Day forecast (p. 20); Recent publications (p. 21); Did you know? (p. 22); Date for your diary (p. 23); Historic picture (p. 23); 2009 members of the Group (p. 24). ANNUAL REPORT We asked in the last two newsletters if you thought the History Group should hold an Annual General Meeting. There is nothing in the By-Laws or Standing Orders of the Royal Meteorological Society that requires the Group to hold one, nor does Charity Law require one. Only one person responded, and that was in passing during a telephone conversation about something else. He was in favour of holding an AGM but only slightly so. He expressed the view that an AGM provides an opportunity to put forward ideas for the Group’s committee to consider. As there has been so little response, the Group’s committee has decided that there will not be an AGM this year. CHAIRMAN’S REVIEW OF 2009 by Malcolm Walker I begin as I did last year. Without an enthusiastic and conscientious committee, there would be no [...] year. Sadly, however, two people who have supported the Group for many years died during 2009. David Limbert passed away on 3 May, and Jean Ludlam died in October (see page 2). -

The Ship 2014/2015

A more unusual focus in your magazine this year: architecture and the engineering skills that make our modern buildings possible. The start of our new building made this an obvious choice, but from there we go on to look at engineering as a career and at the failures and follies of megaprojects around the world. Not that we are without the usual literary content, this year even wider in range and more honoured by awards than ever. And, as always, thanks to the generosity and skills of our contributors, a variety of content and experience that we hope will entertain, inspire – and at times maybe shock you. My thanks to the many people who made this issue possible, in particular Kate Davy, without whose support it could not happen. Hope you enjoy it – and keep the ideas coming; we need them! St Anne’s College, University of Oxford. St Anne’s College Record 2014 – 2015 - Number 104 - The Ship: Annual Publication of the St Anne’s Society. St Anne’s College, Woodstock Road, Oxford OX2 6HS, UK. +44 (0) 1865 274800 [email protected] www.st-annes.ox.ac.uk Alumnae log-in area: Register for the log-in area of our website (available at https://www.alumniweb.ox.ac.uk/st-annes) to connect with other alumnae, receive our latest news and updates, and send in your latest news and updates. Development Office Contacts: Jules Foster, Director of Development, +44 (0)1865 284536. Lost alumnae: Over the years the College has lost touch with some of our alumnae. We would very much like to re-establish contact, and invite them back to our events and send -

Appendix I Lunar and Martian Nomenclature

APPENDIX I LUNAR AND MARTIAN NOMENCLATURE A large number of names of craters and other features on the Moon and Mars were accepted by the IAU General Assemblies X (Moscow, 1958), XI (Berkeley, 1961), XII (Hamburg, 1964), XIV (Brighton, 1970), and XV (Sydney, 1973). The names were suggested by the appropriate IAU Commissions (16 and 17). In particular the Lunar names accepted at the XIVth and XVth General Assemblies were recommended by the 'Working Group on Lunar Nomenclature' under the Chairmanship of Dr D. H. Menzel. The Martian names were suggested by the 'Working Group on Martian Nomenclature' under the Chairmanship of Dr G. de Vaucouleurs. At the XVth General Assembly a new 'Working Group on Planetary System Nomenclature' was formed (Chairman: Dr P. M. Millman) comprising various Task Groups, one for each particular subject. For further references see: IAU Trans. X, 259-263, 1960; XIB, 236-238, 1962; XIIB, 203-204, 1966; XIIIB, 99-105, 1968; XIVB, 63, 129, 139, 1971; Space Sci. Rev. 12, 136-186, 1971. Because at the recent General Assemblies some small changes, or corrections, were made, the complete list of Lunar and Martian Topographic Features is published here. Table 1 Lunar Craters: Abbe 58S,174E; Balboa 19N,83W; Abbot 6N,55E; Baldet 54S,151W; Abel 34S,85E; Balmer 20S,70E; Abul Wafa 2N,117E; Banachiewicz 5N,80E; Adams 32S,69E; Banting 26N,16E; Aitken 17S,173E; Barbier 24S,158E; Al-Biruni 18N,93E; Barnard 30S,86E; Alden 24S,111E; Barringer 29S,151W; Aldrin 1.4N,22.1E; Bartels 24N,90W; Alekhin 68S,131W; Becquerel -

The Composition of the Lunar Crust: Radiative Transfer Modeling and Analysis of Lunar Visible and Near-Infrared Spectra

THE COMPOSITION OF THE LUNAR CRUST: RADIATIVE TRANSFER MODELING AND ANALYSIS OF LUNAR VISIBLE AND NEAR-INFRARED SPECTRA A DISSERTATION SUBMITTED TO THE GRADUATE DIVISION OF THE UNIVERSITY OF HAWAI‘I IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY IN GEOLOGY AND GEOPHYSICS DECEMBER 2009 By Joshua T.S. Cahill Dissertation Committee: Paul G. Lucey, Chairperson G. Jeffrey Taylor Patricia Fryer Jeffrey J. Gillis-Davis Trevor Sorensen Student: Joshua T.S. Cahill Student ID#: 1565-1460 Field: Geology and Geophysics Graduation date: December 2009 Title: The Composition of the Lunar Crust: Radiative Transfer Modeling and Analysis of Lunar Visible and Near-Infrared Spectra We certify that we have read this dissertation and that, in our opinion, it is satisfactory in scope and quality as a dissertation for the degree of Doctor of Philosophy in Geology and Geophysics. Dissertation Committee: Names Signatures Paul G. Lucey, Chairperson ____________________________ G. Jeffrey Taylor ____________________________ Jeffrey J. Gillis-Davis ____________________________ Patricia Fryer ____________________________ Trevor Sorensen ____________________________ ACKNOWLEDGEMENTS I must first express my love and appreciation to my family. Thank you to my wife Karen for providing love, support, and perspective. And to our little girl Maggie who only recently became part of our family and has already provided priceless memories in the form of beautiful smiles, belly laughs, and little bear hugs. The two of you provided me with the most meaningful reasons to push towards the "finish line". I would also like to thank my immediate and extended family. Many of them do not fully understand much about what I do, but support the endeavor acknowledging that if it is something I’m willing to put this much effort into, it must be worthwhile. -

LHCb Prepares for RICH Physics

International Journal of High-Energy Physics CERN COURIER Volume 47 Number 6 July/August 2007 LHCb prepares for RICH physics NEUTRINOS Borexino starts to take data p8 LHC FOCUS On the trail of heavy flavour p30 INSIDE STORY At the far side of the world p58 CONTENTS Covering current developments in high-energy physics and related fields worldwide CERN Courier is distributed to member-state governments, institutes and laboratories affiliated with CERN, and to their personnel. It is published monthly, except for January and August. The views expressed are not necessarily those of the CERN management. Editor Christine Sutton CERN CERN, 1211 Geneva 23, Switzerland E-mail [email protected] Fax +41 (0) 22 785 0247 Web cerncourier.com Advisory board James Gillies, Rolf Landua and Maximilian Metzger Laboratory correspondents: Argonne National Laboratory (US) Cosmas Zachos Brookhaven National Laboratory (US) P Yamin Cornell University (US) D G Cassel DESY Laboratory (Germany) Ilka Flegel, Ute Wilhelmsen EMFCSC (Italy) Anna Cavallini Enrico Fermi Centre (Italy) Guido Piragino Fermi National Accelerator Laboratory (US) Judy Jackson Forschungszentrum Jülich (Germany) Markus Buescher GSI Darmstadt (Germany) I Peter IHEP, Beijing (China) Tongzhou Xu IHEP, Serpukhov (Russia) Yu Ryabov INFN (Italy) Barbara Gallavotti Jefferson Laboratory (US) Steven Corneliussen JINR -

OSAA Boys Track & Field Championships

OSAA Boys Track & Field Championships 4A Individual State Champions Through 2006 100-METER DASH 1992 Seth Wetzel, Jesuit ............................................ 1:53.20 1978 Byron Howell, Central Catholic................................. 10.5 1993 Jon Ryan, Crook County ..................................... 1:52.44 300-METER INTERMEDIATE HURDLES 1979 Byron Howell, Central Catholic............................... 10.67 1994 Jon Ryan, Crook County ..................................... 1:54.93 1978 Rourke Lowe, Aloha .............................................. 38.01 1980 Byron Howell, Central Catholic............................... 10.64 1995 Bryan Berryhill, Crater ....................................... 1:53.95 1979 Ken Scott, Aloha .................................................. 36.10 1981 Kevin Vixie, South Eugene .................................... 10.89 1996 Bryan Berryhill, Crater ....................................... 1:56.03 1980 Jerry Abdie, Sunset ................................................ 37.7 1982 Kevin Vixie, South Eugene .................................... 10.64 1997 Rob Vermillion, Glencoe ..................................... 1:55.49 1981 Romund Howard, Madison ....................................... 37.3 1983 John Frazier, Jefferson ........................................ 10.80w 1998 Tim Meador, South Medford ............................... 1:55.21 1982 John Elston, Lebanon ............................................ 39.02 1984 Gus Envela, McKay............................................. 10.55w 1999 -

On the Moon with Apollo 16: A Guidebook to the Descartes Region

DOCUMENT RESUME ED 062 148 SE 013 594 AUTHOR Simmons, Gene TITLE On the Moon with Apollo 16. A Guidebook to the Descartes Region. INSTITUTION National Aeronautics and Space Administration, Washington, D.C. REPORT NO NASA-EP-95 PUB DATE Apr 72 NOTE 92p. AVAILABLE FROM Superintendent of Documents, Government Printing Office, Washington, D. C. 20402 (Stock Number 3300-0421, $1.00) EDRS PRICE MF-$0.65 HC-$3.29 DESCRIPTORS *Aerospace Technology; Astronomy; *Lunar Research; Resource Materials; Scientific Research; *Space Sciences IDENTIFIERS NASA ABSTRACT The Apollo 16 guidebook describes and illustrates (with artist concepts) the physical appearance of the lunar region visited. Maps show the planned traverses (trips on the lunar surface via Lunar Rover); the plans for scientific experiments are described in depth; and timelines for all activities are included. A section on "The Crew" is illustrated with photos showing training and preparatory activities. (Author/PR) U.S. DEPARTMENT OF HEALTH, EDUCATION & WELFARE OFFICE OF EDUCATION THIS DOCUMENT HAS BEEN REPRODUCED EXACTLY AS RECEIVED FROM THE PERSON OR ORGANIZATION ORIGINATING IT. POINTS OF VIEW OR OPINIONS STATED DO NOT NECESSARILY REPRESENT OFFICIAL OFFICE OF EDUCATION POSITION OR POLICY. ON THE MOON WITH APOLLO 16 A Guidebook to the Descartes Region by Gene Simmons NATIONAL AERONAUTICS AND SPACE ADMINISTRATION April 1972 EP-95 Gene Simmons was Chief Scientist of the Manned Spacecraft Center in Houston for two years and is now Professor of Geophysics at the Massachusetts Institute of Technology. -

Annual Report 2014-2015

2014 2015 Annual Report The International Space Science Institute (ISSI) is an Institute of Advanced Studies where scientists from all over the world meet in a multi- and interdisciplinary setting to reach out for new scientific horizons. The main function is to contribute to the achievement of a deeper understanding of the results from different space missions, ground based observations and laboratory experiments, and adding value to those results through multidisciplinary research. The program of ISSI covers a widespread spectrum of disciplines from the physics of the solar system and planetary sciences to astrophysics and cosmology, and from Earth sciences to astrobiology. Table of Contents: 4 From the Board of Trustees; 5 From the Directors; 6 About the International Space Science Institute; 7 The Board of Trustees; 8 The Science Committee; 9 ISSI Staff; 10 Facilities; 11 Financial Overview; 12 The Association Pro ISSI; 13 Scientific Activities: The 20th Year; 14 Forum; 15 Workshops; 20 International Teams; 37 International Teams approved in 2015; 39 Visiting Scientists; 41 International Space Science Institute Beijing; 42 Events and ISSI in the media at a glance (including centerfold); 44 Staff Activities; 48 Staff Publications; 51 Visitor Publications; 61 Space Sciences Series of ISSI (SSSI); 66 ISSI Scientific Reports Series (SR); 67 Pro ISSI SPATIUM Series; 68 ISSI Publications in the 20th Business Year. ISSI Annual Report 2014 | 2015 From the Board of Trustees One year ago the undersigned was appointed by the President as secretary of the Board, succeeding Kathrin Altwegg who had served in that capacity for six years. -

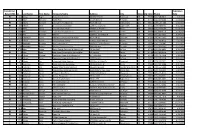

Installers.Pdf

Initial (I) or Expiration Recert (RI) Id Last Name First Sept Name Company Name Address City State Zip Code Phone Date RI 212 Abbas Roger Abbas Construction 6827 NE 33rd St Redmond OR 97756 (541) 548-6812 2/3/2024 I 2888 Adair Benjamin Septic Pros 1751 N Main st Prineville OR 97754 (541) 359-8466 5/10/2024 RI 727 Adams Kenneth Ken Adams Plumbing Inc 906 N 9th Ave Walla Walla WA 99362 (509) 529-7389 6/23/2023 RI 884 Adcock Dean Tri County Construction 22987 SE Dowty Rd Eagle Creek OR 97022 (503) 948-0491 9/25/2023 I 2592 Adelt Georg Cascade Valley Septic 17360 Star Thistle Ln Bend OR 97703 (541) 337-4145 11/18/2022 I 2994 Agee Jetamiah Leavn Trax Excavation LLC 50020 Collar Dr. La Pine OR 97739 (541) 640-3115 9/13/2024 I 2502 Aho Brennon BRX Inc 33887 SE Columbus St Albany OR 97322 (541) 953-7375 6/24/2022 I 2392 Albertson Adam Albertson Construction & Repair 907 N 6th St Lakeview OR 97630 (541) 417-1025 12/3/2021 RI 831 Albion Justin Poe's Backhoe Service 6590 SE Seven Mile Ln Albany OR 97322 (541) 401-8752 7/25/2022 I 2732 Alexander Brandon Blux Excavation LLC 10150 SE Golden Eagle Dr Prineville OR 97754 (541) 233-9682 9/21/2023 RI 144 Alexander Robert Red Hat Construction, Inc. 560 SW B Ave Corvallis OR 97333 (541) 753-2902 2/17/2024 I 2733 Allen Justan South County Concrete & Asphalt, LLC PO Box 2487 La Pine OR 97739 (541) 536-4624 9/21/2023 RI 74 Alley Dale Back Street Construction Corporation PO Box 350 Culver OR 97734 (541) 480-0516 2/17/2024 I 2449 Allison Michael Deschutes Property Solultions, LLC 15751 Park Dr La Pine OR 97739 (541) 241-4298 5/20/2022 I 2448 Allison Tisha Deschutes Property Solultions, LLC 15751 Park Dr La Pine OR 97739 (541) 241-4298 5/20/2022 RI 679 Alman Jay Integrated Water Services 4001 North Valley Dr. -

Adams Adkinson Aeschlimann Aisslinger Akkermann

BUSCAPRONTA www.buscapronta.com GENEALOGICAL RESEARCH FILE 27 189 PAGES – AVERAGE OF 60,800 SURNAMES/OCCURRENCES To search, use the EDIT/FIND tool in WORD. Each time you press ENTER and the searched surname appears HIGHLIGHTED (BLACK BACKGROUND), it corresponds to an Internet address that our team has researched. When requesting your Internet access addresses, state the SURNAME SEARCHED, the number of the corresponding BUSCAPRONTA DIV or BUSCAPRONTA GEN FILE, and the number of times you found the SURNAME SEARCHED. A number appearing to the right of a surname (on the same line) indicates the number of people with that surname whose genealogical information is presented. The price of each Internet address requested is given on our site www.buscapronta.com. For data specifically from general records, search the BUSCAPRONTA DIV files. ATTENTION: When searching our files, type the surname you are looking for, whenever you consider it necessary, WITH AND WITHOUT the acute, grave and circumflex accents, the crasis, the tilde and the diaeresis. For surnames with (ç) cedilla, also type them with only (c) or with a double s (ss). For surnames with a double s (ss), also type them with a single s (s) and with (ç). For (ZZ), also type (Z) and vice versa. For (LL), also type (L) and vice versa. Van Wolfgang – search for Wolfgang (do the same with other particles: Van der, De la, etc.) For compound surnames (Mendes Caldeira), search separately: MENDES and then CALDEIRA. If you have difficulty with the character Ø: HAMMERSHØY – search for HAMMERSH; HØJBJERG – search for JBJERG. BUSCAPRONTA does not reproduce people's genealogical data; you must access the corresponding Internet documents to obtain such data and information. WE WISH YOU EVERY SUCCESS IN YOUR RESEARCH. -

The Search for Christian Doppler

meeting summary Report from the International Workshop on Remote Sensing in Geophysics Using Doppler Techniques* Arnaldo Brandolini,a+ Ben B. Balsley,b# Antonio Mabres,c# Ronald F. Woodman,d# Carlo Capsoni,a Michele D'Amico,a Warner L. Ecklund,e Tor Hagfors,f Robert Harper,g Malcolm L. Heron,h Wayne K. Hocking,i Markku S. Lehtinen,j Jurgen Rottger,k Martin F. Sarango,d Toru Sato,l Richard J. Stauch,m J. M. Vaughn,n Lucy R. Wyatt,o and Dusan S. Zrnic p 1. Introduction (A. Brandolini, B. Balsley, A. Mabres, R. Woodman) The International Workshop on Remote Sensing in Geophysics Using Doppler Techniques was held on 11-15 March 1996 in Bellagio, Italy, at the Rockefeller Study and Conference Center. Supported by the Rockefeller Foundation, the workshop brought together a small number of expert researchers working in different application areas of Doppler remote sensing. Actually, Doppler remote sensing is employed in a wide range of applications, from atmospheric boundary layer sensing to wind profiling, from sea surface sensing to radar astronomy. Such different applications face different challenges, which sometimes lead to the development of very different techniques. In the organizers' opinion, a discussion of such techniques between researchers working in different application areas could be both interesting and useful. In particular, ideas of signal-processing techniques could be profitably exchanged. The purpose of the workshop was in fact to provide a forum where such a discussion could take place. *Organized by the Department of Electrical Engineering of Politecnico di Milano. +Workshop organizing committee. aPolitecnico di Milano, Milan, Italy. bUniversity of Colorado at Boulder, Boulder, Colorado. -

Unbroken Meteorite Rough Draft

Space Visitors in Kentucky: Meteorites and Meteorite Impact Sites in Kentucky William D. Ehmann with contributions by Warren H. Anderson www.uky.edu/KGS [Cover images: Asteroid “Ida.” Most meteorites originate from asteroids. Meteorite from Clark County, Ky. Solar system diagram labels: Mercury, Venus, Earth, Mars, Asteroid Belt, Jupiter, Saturn, Uranus, Neptune, Pluto.] Special thanks to Collie Rulo for cover design. Earth image was compiled from satellite images from NOAA and NASA. Kentucky Geological Survey James C. Cobb, State Geologist and Director University of Kentucky, Lexington Special Publication 1 Series XII, 2000 UNIVERSITY OF KENTUCKY: Charles T. Wethington Jr., President; Fitzgerald Bramwell, Vice President for Research and Graduate Studies; Jack Supplee, Director, Administrative Affairs, Research and Graduate Studies. KENTUCKY GEOLOGICAL SURVEY ADVISORY BOARD: Henry M. Morgan, Chair, Utica; Ron D. Gilkerson, Vice Chair, Lexington; William W. Bowdy, Fort Thomas; Steven Cawood, Frankfort; Hugh B. Gabbard, Winchester; Kenneth Gibson, Madisonville; Mark E. Gormley, Versailles; Rosanne Kruzich, Louisville; William A. Mossbarger, Lexington; Jacqueline Swigart, Louisville. Staff: Collie Rulo, Graphic Design Technician; Luanne Davis, Staff Support Associate II; Theola L. Evans, Staff Support Associate I; William A. Briscoe III, Publication Sales Supervisor; Roger S. Banks, Account Clerk I. Energy and Minerals Section: James A. Drahovzal, Head; Garland R. Dever Jr., Geologist V; Cortland F. Eble, Geologist V; Stephen F. Greb, Geologist V; David A. Williams, Geologist V, Manager, Henderson office; David C. Harris, Geologist IV; Brandon C. Nuttall, Geologist IV; William M. Andrews Jr., Geologist II; John B. Hickman, Geologist II; Ernest E. Thacker, Geologist I; Anna E.