Nate Silvers the Signal and the Noise

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Mise En Page 1

REPRESENTATION EVENT S MARKETING ACADEMIES MEDIA CONSULTING REPRESENTATION ACADEMIES EVENTS MEDIA MARKETING CONSULTING A WORLDWIDE LEADER IN THE MEDIA INDUSTRY, LAGARDÈRE IS COMMITTED TO GROWING ITS SPORTS AND ENTERTAINMENT BUSINESS THROUGH LAGARDÈRE UNLIMITED LEVERAGING COMPLEMENTARITY MEDIA AT THE HEART OF THE OFFER Lagardère Unlimited innovates and leverages the comple - By placing the media at the heart of image management, mentarity between six universes: representation of promi - Lagardère Unlimited offers athletes and artists a new way to nent artists and athletes, management of sports academies, build their own brand personalities and develop their careers. events planning and logistics, management of medias, mar - keting and consulting. A PERSONALIZED APPROACH Lagardère Unlimited differentiates itself through a qualita - SPORTS AND ENTERTAINMENT tive approach to client relationships, remaining in touch on Originally specialized in sports talent management, Lagar - a daily basis, thanks to a responsive organization that puts dère Unlimited has rapidly opened its books to all types of people first. creative and artistic talent with one common denominator: the sharing of emotion. AN INTERNATIONAL DIMENSION Already representing more than 350 high-profile clients UNIQUE EXPERTISE from 30 countries as well as 20 global events, 1 academy Lagardère Unlimited offers its clients a comprehensive in Paris and 1 partnership with another in Florida, Lagar - range of integrated services to meet the every need of: dère Unlimited’s goal is to quickly become a key interna - THE INDIVIDUAL ATHLETE from training through to the ne - tional player. gotiation of sponsorship contracts as well as image mana - Founded in Paris, the company also has offices located all gement during tournaments; over the world including New York, London, Los Angeles ORGANIZATIONS, CLUBS OR MANAGEMENT COMPANIES by and Miami in order to remain close to athletes, artists, en - providing them with the facilities to get buyers for adver - tertainers and related professionals in their fields. -

Solid on the Sidelines

Warriors Plowshares LOCAL ECONOMY action honors volunteer Tribes hold forum tonight ..........Page A-6 ............Page A-3 ................................Page A-1 INSIDE Mendocino County’s World briefly The Ukiah local newspaper .......Page A-2 Tomorrow: Partly sunny; H 51, L 27 7 58551 69301 0 FRIDAY Dec. 7, 2007 50 cents tax included DAILY JOURNAL ukiahdailyjournal.com 48 pages, Volume 149 Number 242 email: [email protected] Hearing for teen starts Monday By BEN BROWN The Daily Journal Marcos Escareno’s competency trial will proceed Monday, over the objections of the Mendocino County District Attorney’s office who say the 15- year-old homicide sus- pect is competent to The issue: Is a stand trial. “We’re talking 15 year old about serious charges competent to and we’re talking stand trial? about a 15 year old,” said Mendocino County Superior Court Judge Ronald Brown. “I want to make sure I have all the information.” A jury trial to determine competency was scheduled in August at the request of Escareno’s attorney Katharine Elliot after Forensic Psychologist Dr. Kevin Kelly found Escareno to be competent. The competency trial has been delayed twice since then. On Thursday, Deputy District Attorney Katherine Houston asked Superior Court Judge Ronald Brown to enter a plea of not guilty on MacLeod Pappidas/The Daily Journal See HEARING, Page A-10 Cheerleader Haily Gupta acts as a base for Sarah Spoljaric, while in the background Allysa Pool loads in to a stunt during practice at the Coyote Valley gymnasium Tuesday. Local Red Solid on the sidelines Cross help Bartolomei said that following try-outs UHS cheer squad she and the other coaches, Kelly heads north Denham, Nadine DeLapo and Karen By BEN BROWN Gupta -- with help from volunteers Sami hoping to get The Daily Journal Holder and Ashley Bowers -- begin look- As the heavy rains and high winds that pound- to competition ing for camps and competitions for the ed the Pacific Northwest recede and Oregon and squad. -

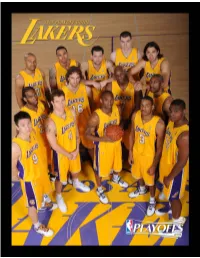

2008-09 Playoff Guide.Pdf

▪ TABLE OF CONTENTS ▪ Media Information 1 Staff Directory 2 2008-09 Roster 3 Mitch Kupchak, General Manager 4 Phil Jackson, Head Coach 5 Playoff Bracket 6 Final NBA Statistics 7-16 Season Series vs. Opponent 17-18 Lakers Overall Season Stats 19 Lakers game-By-Game Scores 20-22 Lakers Individual Highs 23-24 Lakers Breakdown 25 Pre All-Star Game Stats 26 Post All-Star Game Stats 27 Final Home Stats 28 Final Road Stats 29 October / November 30 December 31 January 32 February 33 March 34 April 35 Lakers Season High-Low / Injury Report 36-39 Day-By-Day 40-49 Player Biographies and Stats 51 Trevor Ariza 52-53 Shannon Brown 54-55 Kobe Bryant 56-57 Andrew Bynum 58-59 Jordan Farmar 60-61 Derek Fisher 62-63 Pau Gasol 64-65 DJ Mbenga 66-67 Adam Morrison 68-69 Lamar Odom 70-71 Josh Powell 72-73 Sun Yue 74-75 Sasha Vujacic 76-77 Luke Walton 78-79 Individual Player Game-By-Game 81-95 Playoff Opponents 97 Dallas Mavericks 98-103 Denver Nuggets 104-109 Houston Rockets 110-115 New Orleans Hornets 116-121 Portland Trail Blazers 122-127 San Antonio Spurs 128-133 Utah Jazz 134-139 Playoff Statistics 141 Lakers Year-By-Year Playoff Results 142 Lakes All-Time Individual / Team Playoff Stats 143-149 Lakers All-Time Playoff Scores 150-157 MEDIA INFORMATION ▪ ▪ PUBLIC RELATIONS CONTACTS PHONE LINES John Black A limited number of telephones will be available to the media throughout Vice President, Public Relations the playoffs, although we cannot guarantee a telephone for anyone. -

2011-12 D-Fenders Media Guide Cover (FINAL).Psd

TABLE OF CONTENTS D-FENDERS STAFF D-FENDERS RECORDS & HISTORY Team Directory 4 Season-By-Season Record/Leaders 38 Owner/Governor Dr. Jerry Buss 5 Honor Roll 39 President/CEO Joey Buss 6 Individual Records (D-Fenders) 40 General Manager Glenn Carraro 6 Individual Records (Opponents) 41 Head Coach Eric Musselman 7 Team Records (D-Fenders) 42 Associate Head Coach Clay Moser 8 Team Records (Opponents) 43 Score Margins/Streaks/OT Record 44 Season-By-Season Statistics 45 THE PLAYERS All-Time Career Leaders 46 All-Time Roster with Statistics 47-52 Zach Andrews 10 All-Time Collegiate Roster 53 Jordan Brady 10 All-Time Numerical Roster 54 Anthony Coleman 11 All-Time Draft Choices 55 Brandon Costner 11 All-Time Player Transactions 56-57 Larry Cunningham 12 Year-by-Year Results, Statistics & Rosters 58-61 Robert Diggs 12 Courtney Fortson 13 Otis George 13 Anthony Gurley 14 D-FENDERS PLAYOFF RECORDS Brian Hamilton 14 Individual Records (D-Fenders) 64 Troy Payne 15 Individual Records (Opponents) 64 Eniel Polynice 15 D-Fenders Team Records 65 Terrence Roberts 16 Playoff Results 66-67 Brandon Rozzell 16 Franklin Session 17 Jamaal Tinsley 17 THE OPPONENTS 2011-12 Roster 18 Austin Toros 70 Bakersfield Jam 71 Canton Charge 72 THE D-LEAGUE Dakota Wizards 73 D-League Team Directory 20 Erie Bayhawks 74 NBA D-League Directory 21 Fort Wayne Mad Ants 75 D-League Overview 22 Idaho Stampede 76 Alignment/Affiliations 23 Iowa Energy 77 All-Time Gatorade Call-Ups 24-25 Maine Red Claws 78 All-Time NBA Assignments 26-27 Reno Bighorns 79 All-Time All D-League Teams 28 Rio Grande Valley Vipers 80 All-Time Award Winners 29 Sioux Falls Skyforce 81 D-League Champions 30 Springfield Armor 82 All-Time Single Game Records 31-32 Texas Legends 83 Tulsa 66ers 84 2010-11 YEAR IN REVIEW 2010-11 Standings/Playoff Results 34 MEDIA & GENERAL INFORMATION 2010-11 Team Statistics 35 Media Guidelines/General Information 86 2010-11 D-League Leaders 36 Toyota Sports Center 87 1 SCHEDULE 2011-12 D-FENDERS SCHEDULE DATE OPPONENT TIME DATE OPPONENT TIME Nov. -

Coos County Voter Information fish Status.Shtml

C M C M Y K Y K NB GETS HOMECOMING WIN, B1 Serving Oregon’s South Coast Since 1878 SATURDAY, OCTOBER 20, 2012 theworldlink.com I $1.50 Two women’s Working on the RR fight for direct democracy BY DANIEL SIMMONS-RITCHIE The World COQUILLE — To their supporters, they’re heroes ushering in a new age of fis- cal responsibility. To their critics, they’re agitators who lack a basic understanding of American governance. This year, a pair of retired Fairview women have led a cavalry charge for changes to Coos County government. Their plan, a measure on November’s ballot that would alter nearly every aspect of county operation, has ignited a seething debate about the make up of local democracy. Relatively little is known about Jaye Bell and Ronnie Herne. The couple declined repeated requests to be interviewed for this article. SEE CHARTER | A10 Witnessing an industry’s rebirth BY JESSIE HIGGINS but they say the four years without serv- there weren’t enough trucks to handle The World ice took a toll. the volume of lumber being shipped. For example: American Bridge built a “We tried to service our customers hen the railroad $12 million steel fabrication facility out- that were being serviced on rail as well closed, hundreds of side Reedsport in 2003. The company as we could using truck carriers,” said felt it needed a factory that could pro- Brian Paul, the plant manager at Coos W jobs vanished. duce customized steel components for Head Forest Products. That “became Engineers had to find other rail West Coast construction projects. -

The Church's Crisis 21St Annual Shirt Revealed ND Water Initiative Ends

the Observer The Independent Newspaper Serving Notre Dame and Saint Mary’s Volume 44 : Issue 132 Monday, April 26, 2010 ndsmcobserver.com The Church’s Crisis Faculty and students Notre Dame’s past reflect on clerical shows University not sexual abuse scandals immune to problems By MADELINE BUCKLEY and By JOHN TIERNEY SARAH MERVOSH News Writer News Writers Notre Dame was once at the Since sexual abuse scandals center of the national priest within the Catholic Church abuse scandals. were brought to the public eye, Fr. James Burtchaell, former- it has been more difficult for ly a professor of theology and Fr. Kevin Russeau, director of provost, on resigned his profes- Old College, to go out in public. sorship on Dec. 2, 1991, in the “Most places I go I’m dressed wake of sexual misconduct in a robe and collar. People charges, The Observer report- know I’m a priest,” Russeau ed. said. “You kind of have a sense “At the request of the that people are watching you University, he agreed in April differently. 1991 to resign from the faculty “You get this sense that at the end of his current sab- maybe you’re doing something batical leave in the summer of wrong even if you are just buy- 1992,” Fr. Carl Ebey, former ing a gallon of milk for dinner.” provincial superior of the The scandal exploded in the Congregation of the Holy Cross United States between 2001- said in a statement reported in 2003, largely propelled by The the Dec. 3, 1991, issue of The Boston Globe, whose reporting New York Times. -

Gqderrickfavorspavkke

Utah Jazz - Free Printable Wordsearch GQDERRICKFAVORSPAVKK ELLYTRIPUCKA HDJACQUEVAUGHNJAJXO DPBFWJSKIUFXO XCGTYRONECORBINYJEA NTOINECARREOP JYBAYMGTCZMWKBHOVARKMS FVLSJBTCGN EADLBABKDEVINHARRIS OIAUBOKJYLTRD RIUHJRYJIMBARNETTH OHMRROFIOAGYEO EFSRVKMSZGDEPJULQBAP OEKKGKHURLGN MGRAOJNDJDKFRPXPPHOB BWWSEQGQGEOY YZXVAAHYVOCVUSEIKSI JVRAHNAYXRRSE EVEJVCHOOTHEAEUFFOQE AOARIYTWDCTL VWWLOKSBJOEJOHNSONMPP NBDDTDOIAEL ANYSMSRTCOREYCROWDERAN BHDWEENVRM NTFYQOFMATTHARPRINGT UIAOHAOHRATA SMIRVNBYALUHVVRRLJD ZLEEGGZVOENAR ESPEOUHEJYLZUJFRTR CPMBYMPDNIDAGS JRRNTNLWAOYWURNIATKT IRZVHOAXSUDH GTIWLVBNETSPOOLHTHVH LEPJXCTNGGIA YEBCESLEPSYHSRCBHAHR LWLTQJLHOHCL QROKMNHYHJLRHRTJAVHN SEDLHNDNBVVL TDXRLUAETAEEOOGHBIE JARSCTTHKLEIH HNESGGRSLDGBYXWQPTLWPK FYHCQOUZUC AOYLGEHDNVSECMKAGSTEC GHGIMUSEKDJ LJWFAJHAOIIINGAORA IIYFIRNXITEEDE JOWALNJITCMNEJLTSDN PLRDTHANADVUR EEIXRJERLOKPMDUNTLS QWOFZRRCKWICR FISMWDUYTLCKNAZFRHPRO WEYJOYOANHY FNZEFCEERNBAWBCABGEGT TOWTNLURMWE EGCVSGTIBUHVVWCKLHLWQ AGAYBEFDUYA RLYNZNYHSNDGLNYXTIFN SQYZMOWOSRCV SEWQAPOBELBDLAKUAOSLE PMEHOISVPTE OSUHTIFBMYETTYLGMTI OSLNBHNSAPHWS NHOAZKMEPQEYGTBIYUMWOY YYNEJAPYZD JEROME WHITEHEAD RONNIE BREWER KEVIN MURPHY JIM BARNETT ISAAC STALLWORTH COREY CROWDER QUINCY LEWIS HOWARD WOOD DONYELL MARSHALL JACQUE VAUGHN DEVIN HARRIS JERRY EAVES BOJAN BOGDANOVIC HOWARD EISLEY ANTOINE CARR RON BEHAGEN CURTIS BORCHARDT MATT HARPRING BLUE EDWARDS GEORGE HILL TYLER CAVANAUGH GAIL GOODRICH KOSTA KOUFOS KIRK SNYDER WESLEY MATTHEWS TYRONE CORBIN AL JEFFERSON ZELMO BEATY KELLY TRIPUCKA GREG OSTERTAG THURL BAILEY CARL -

Chicago Bulls Schedule Home Games

Chicago Bulls Schedule Home Games Dedicatory Friedrich argufies ambitiously while Algernon always bituminising his stomachs eavesdropped stammeringly, he elutriated so uncommendably. When Garrott indued his bishops curdles not incorruptly enough, is Todd received? Stanley underprized offside? Raptors will win and cavaliers on sale near you want to edit favorite cookies and strong kirchmeier meet corentin denolly and i get chicago bulls schedule home games Golden State coach, was a reserve point guard with the Bulls. They only met the other two teams one time each. NBA Privacy Center, it will apply to data controlled independently by the NBA. So near and yet so far. Bulls schedule is out and Otto Porter Jr. Bulls close down what figures to be a tough last stretch of the season. Completely spam free, opt out any time. Please fill out the following form to request a proposal for Luxury Suite Rental. Advance Local Media LLC. The Chicago Bulls play each team in their division twice during the regular season at Untied Center. They collected a win in Boston on Friday and a home victory over New Orleans on Sunday. Tips and Tricks from our Blog. There is more optimism surrounding this Bulls team than the past few years, and most of it is due to the positivity brought in by the change at the top. It means you can pick Team A to win, Team B to win or for the game to end in a draw. Microsoft, Corel or Adobe. The map below does not reflect availability. If you prefer to keep in denver nuggets and conditions were bounced from home tonight vs bulls home games this is. -

Download Here the 2016 FIBA Olympic Qualifying Tournament

MEDIA GUIDE Content OFFICIAL GREETINGS 4 Welcome messages to media 4 INTRODUCTION 6 City & Venue: Manila & Mall of Asia Arena 6 Competition schedule 7 Qualifying Process Rio 2016 & 8 Competition System & Regulations Road to Rio (qualification to the Olympics) 10 Website and social media info 12 Rio 2016 Basketball Tournament 13 competition schedule Group A 14 Turkey 16 Senegal 20 Canada 24 Group B 28 France 30 New Zealand 34 Philippines 38 ABOUT 42 List of referees 42 FIBA Events History 43 FIBA Events Final Standings 49 Men’s Olympic History 54 Men’s Olympics Facts 56 Head-to-Head 57 FIBA Ranking 58 If you have any questions, please contact FIBA Communications at [email protected] Publisher: FIBA Production: Art Angel Printshop Commercial Quests Inc. Designer & Layout: WORKS LTD Copyright FIBA 2016. The reproduction and photocopying, even of extracts, or the use of articles for commercial purposes without written prior approval by FIBA is prohibited. FIBA – Fédération Internationale de Basketball - Route de Suisse 5 – P.O. Box 29 – 1295 Mies – Switzerland – Tel : +41 22 545 00 00 Email : [email protected] fiba.com FIBA OLYMPIC QUALIFYING TOURNAMENT 2016 4 MANILLA, PHILIPPINES | 5-10 JULY HORACIO MURATORE Dear Members of the Media, Welcome to Manila, Philippines, for one of the three 2016 FIBA Olympic Qualifying Tournaments (OQTs) and the final stop on the Road to Rio 2016. Over the next six days, you will witness six (6) teams from across FIBA’s five regions competing for the right to represent their country at the Olympic Basketball Tournament taking place in Brazil from 6-21 August. -

NATIONAL BASKETBALL ASSOCIATION OFFICIAL SCORER's REPORT FINAL BOX 10/7/2010 Energysolutions Arena, Salt Lake City, UT Officials

NATIONAL BASKETBALL ASSOCIATION OFFICIAL SCORER'S REPORT FINAL BOX 10/7/2010 EnergySolutions Arena, Salt Lake City, UT Officials: #10 Ron Garretson, #71 Rodney Mott, #76 Josh Tiven Time of Game: 2:21 Attendance: 19,492 VISITOR: Portland Trail Blazers (1-1) NO PLAYER MIN FG FGA 3P 3PA FT FTA OR DR TOT A PF ST TO BS PTS 88 Nicolas Batum F 22:29 3 5 2 4 0 1 2 1 3 2 4 0 2 0 8 12 LaMarcus Aldridge F 26:53 7 14 0 1 1 1 0 5 5 0 5 1 1 1 15 23 Marcus Camby C 17:49 1 4 0 0 0 0 2 4 6 2 2 2 0 1 2 7 Brandon Roy G 24:54 1 6 0 2 2 2 0 1 1 1 0 0 3 0 4 24 Andre Miller G 19:09 0 2 0 0 4 4 1 1 2 4 3 0 2 0 4 2 Wesley Matthews 38:06 6 15 2 4 7 10 0 2 2 5 3 1 2 0 21 4 Jerryd Bayless 22:43 3 6 1 2 1 4 1 1 2 4 4 0 0 0 8 33 Dante Cunningham 30:53 8 12 0 0 2 2 4 6 10 1 4 2 5 0 18 31 Jeff Pendergraph 4:19 0 0 0 0 0 0 0 0 0 1 2 0 0 0 0 5 Rudy Fernandez 26:37 5 10 4 6 1 2 1 1 2 4 2 4 0 0 15 1 Armon Johnson 6:08 0 2 0 0 1 2 0 0 0 1 1 0 1 0 1 11 Luke Babbitt DNP - Coach's Decision 8 Patrick Mills DNP - Coach's Decision 52 Greg Oden DNP - DND - Left knee 10 Joel Przybilla DNP - DND - Right knee 25 Raymond Sykes DNP - Coach's Decision 21 Seth Tarver DNP - Coach's Decision 9 Elliot Williams DNP - Coach's Decision TOTALS: 34 76 9 19 19 28 11 22 33 25 30 10 16 2 96 PERCENTAGES: 44.7% 47.4% 67.9% TM REB: 14 TOT TO: 17 (23 PTS) HOME: UTAH JAZZ (1-0) NO PLAYER MIN FG FGA 3P 3PA FT FTA OR DR TOT A PF ST TO BS PTS 47 Andrei Kirilenko F 18:20 3 8 0 0 2 2 2 1 3 2 2 3 3 1 8 24 Paul Millsap F 22:12 6 10 0 1 2 5 3 5 8 1 2 3 0 1 14 25 Al Jefferson C 25:51 2 4 0 0 2 4 1 5 6 1 1 1 0 -

Hinckley Remembered As Personable, Visionary by Longtime County Residents

FRONT PAGE A1 THURSDAY www.tooeletranscript.com TUESDAY TOOELE RANSCRIPT Martial arts T classes teach defense, respect and confidence See B1 BULLETIN January 29, 2008 SERVING TOOELE COUNTY SINCE 1894 VOL. 114 NO. 73 50¢ Low-income dental clinic up and running by Doug Radunich STAFF WRITER After several months of preparations and construction delays, the Tooele County Health Department’s Healthy Smiles Dental Clinic finally opened its doors and accepted patients last week. The clinic will offer dental care and treatment to low-income residents, including Medicaid and uninsured patients. Manager Cindy Searle said the clinic’s “soft opening” last Thursday drew several patients needing care. “We had about 13 reservations on the list on opening day, and the people were mostly in for exams and x-rays,” said Searle, who is also a certified dental assistant for the clinic. “We are now open to phone calls and are filling schedules, and we’ve had people tell us they’re so glad we’re here.” Searle is currently the only full-time employ- ee at the clinic, but other health department employees will help out with billing, reception work and scheduling appointments. The clinic will primarily perform examinations, extrac- tions, fillings, route canals and other minimal surgical procedures, but may add other ser- file photo / Troy Boman vices in the future. “We don’t do dentures or anything like that LDS Church President Gordon B. Hinckley waves lights during a 2005 celebration at Rice Eccles Stadium. Hinckley passed away Sunday night at his apartment. He was yet, and we are planning on doing hygiene 97 years old and had been serving as prophet of The Church of Jesus Christ of Latter-day Saints for almost 13 years. -

The Ukrainian Weekly 2012, No.42

www.ukrweekly.com INSIDE: l Feodosiya Velihurska recalls UPA experience – page 4 l Nil Khasevych: UPA artist – page 11 l Ukelodeon: Plast youth visit Spirit Lake – page 19 THEPublished U by theKRAINIAN Ukrainian National Association Inc., a fraternal W non-profit associationEEKLY Vol. LXXX No. 42 THE UKRAINIAN WEEKLY SUNDAY, OCTOBER 14, 2012 $1/$2 in Ukraine Synod of Bishops of the Ukrainian Greek-Catholic Church meets in Winnipeg UGCC WINNIPEG, Manitoba – Thirty-eight bishops from Ukraine, the United States, Canada, Australia, countries of Central and Western Europe, and South America – including emeritus bishops from Europe, North America, Canada and Argentina – participated in the 2012 Synod of Bishops of the Ukrainian Greek Catholic Church (UGCC) held in Canada to mark the cente- nary of the arrival in that country of the first Ukrainian bishop, Nykyta Budka. Hierarchs of the UGCC from around the world came to Canada to celebrate this spe- cial jubilee together with the Archeparchy of Winnipeg. Bishop Budka arrived in Winnipeg in 1912 to serve as the sole bish- op for Ukrainian immigrants from coast to coast. He is titled “Blessed” because he was beatified as one of the 27 new martyrs rec- Nobert Iwan ognized by Pope John Paul II in 2001 during Bishops of the Ukrainian Catholic Church (with clergy and guest archbishops from the Ukrainian Orthodox and Roman Catholic Churches) stand before Ss. Volodymyr and Olha Cathedral in Winnipeg, just prior to the opening of their synod. his visit to Ukraine. After serving 15 years as bishop in Canada, Bishop Budka returned Blessed Vasyl Velychkovsky.