Interpreting Deep Learning: the Machine Learning Rorschach Test?

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Dynamic Compression and Expansion in a Classifying Recurrent Network

bioRxiv preprint doi: https://doi.org/10.1101/564476; this version posted March 1, 2019. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license.

Dynamic compression and expansion in a classifying recurrent network

Matthew Farrell (1-2), Stefano Recanatesi (1), Guillaume Lajoie (3-4), and Eric Shea-Brown (1-2)

1. Computational Neuroscience Center, University of Washington
2. Department of Applied Mathematics, University of Washington
3. Mila | Québec AI Institute
4. Dept. of Mathematics and Statistics, Université de Montréal

Abstract: Recordings of neural circuits in the brain reveal extraordinary dynamical richness and high variability. At the same time, dimensionality reduction techniques generally uncover low-dimensional structures underlying these dynamics when tasks are performed. In general, it is still an open question what determines the dimensionality of activity in neural circuits, and what the functional role of this dimensionality in task learning is. In this work we probe these issues using a recurrent artificial neural network (RNN) model trained by stochastic gradient descent to discriminate inputs. The RNN family of models has recently shown promise in revealing principles behind brain function. Through simulations and mathematical analysis, we show how the dimensionality of RNN activity depends on the task parameters and evolves over time and over stages of learning. We find that common solutions produced by the network naturally compress dimensionality, while variability-inducing chaos can expand it. We show how chaotic networks balance these two factors to solve the discrimination task with high accuracy and good generalization properties.

Dynamics of Excitatory-Inhibitory Neuronal Networks With

[Cover art: decorative equations; www.cosyne.org]

MAIN MEETING, Salt Lake City, UT, Feb 27 - Mar 2

Program Summary

Thursday, 27 February
4:00 pm Registration opens
5:30 pm Welcome reception
6:20 pm Opening remarks
6:30 pm Session 1: Keynote. Invited speaker: Thomas Jessell
7:30 pm Poster Session I

Friday, 28 February
7:30 am Breakfast
8:30 am Session 2: Circuits I: From wiring to function. Invited speaker: Thomas Mrsic-Flogel; 3 accepted talks
10:30 am Session 3: Circuits II: Population recording. Invited speaker: Elad Schneidman; 3 accepted talks
12:00 pm Lunch break
2:00 pm Session 4: Circuits III: Network models. 5 accepted talks
3:45 pm Session 5: Navigation: From phenomenon to mechanism. Invited speakers: Nachum Ulanovsky, Jeffrey Magee; 1 accepted talk
5:30 pm Dinner break
7:30 pm Poster Session II

Saturday, 1 March
7:30 am Breakfast
8:30 am Session 6: Behavior I: Dissecting innate movement. Invited speaker: Hopi Hoekstra; 3 accepted talks
10:30 am Session 7: Behavior II: Motor learning. Invited speaker: Rui Costa; 2 accepted talks
11:45 am Lunch break
2:00 pm Session 8: Behavior III: Motor performance. Invited speaker: John Krakauer; 2 accepted talks
3:45 pm Session 9: Reward: Learning and prediction. Invited speaker: Yael

COSYNE 2014 Workshops

[Cover art: decorative equations; www.cosyne.org]

WORKSHOPS, Snowbird, UT, Mar 3 - 4

COSYNE 2014 Workshops, Snowbird, UT, Mar 3-4, 2014
Organizers: Tatyana Sharpee, Robert Froemke

Monday, March 3, 2014

1. Computational psychiatry – Day 1. Organizers: Q. Huys, T. Maia. Location: Wasatch A
2. Information sampling in behavioral optimization – Day 1. Organizers: B. Averbeck, R.C. Wilson, M. R. Nassar. Location: Wasatch B
3. Rogue states: Altered Dynamics of neural activity in brain disorders. Organizers: C. O’Donnell, T. Sejnowski. Location: Magpie A
4. Scalable models for high dimensional neural data. Organizers: I. M. Park, E. Archer, J. Pillow. Location: Superior A
5. Homeostasis and self-regulation of developing circuits: From single neurons to networks. Organizers: J. Gjorgjieva, M. Hennig. Location: White Pine
6. Theories of mammalian perception: Open and closed loop modes of brain-world interactions. Organizers: E. Ahissar, E. Assa. Location: Magpie B
7. Noise correlations in the cortex: Quantification, origins, and functional significance. Organizers: J. Fiser, M. Lengyel, A. Pouget. Location: Superior B
8. Excitatory and inhibitory synaptic conductances: Functional roles and inference methods. Organizers: M. Lankarany, T. Toyoizumi. Location: Maybird

Workshop Co-Chairs (email, cell):
Robert Froemke, NYU, [email protected], 510-703-5702
Tatyana Sharpee, Salk, [email protected], 858-610-7424

Maps of Snowbird are at the end of this booklet (page 38).

Predictive Coding in Balanced Neural Networks with Noise, Chaos and Delays

Predictive coding in balanced neural networks with noise, chaos and delays

Jonathan Kadmon, Department of Applied Physics, Stanford University, CA
Jonathan Timcheck, Department of Physics, Stanford University, CA
Surya Ganguli, Department of Applied Physics, Stanford University, CA
[email protected]

Abstract: Biological neural networks face a formidable task: performing reliable computations in the face of intrinsic stochasticity in individual neurons, imprecisely specified synaptic connectivity, and nonnegligible delays in synaptic transmission. A common approach to combatting such biological heterogeneity involves averaging over large redundant networks of $N$ neurons resulting in coding errors that decrease classically as $1/\sqrt{N}$. Recent work demonstrated a novel mechanism whereby recurrent spiking networks could efficiently encode dynamic stimuli, achieving a superclassical scaling in which coding errors decrease as $1/N$. This specific mechanism involved two key ideas: predictive coding, and a tight balance, or cancellation between strong feedforward inputs and strong recurrent feedback. However, the theoretical principles governing the efficacy of balanced predictive coding and its robustness to noise, synaptic weight heterogeneity and communication delays remain poorly understood. To discover such principles, we introduce an analytically tractable model of balanced predictive coding, in which the degree of balance and the degree of weight disorder can be dissociated unlike in previous balanced network models, and we develop a mean field theory of coding accuracy. Overall, our work provides and solves a general theoretical framework for dissecting the differential contributions of neural noise, synaptic disorder, chaos, synaptic delays, and balance to the fidelity of predictive neural codes, reveals the fundamental role that balance plays in achieving superclassical scaling, and unifies previously disparate models in theoretical neuroscience.

COSYNE 2012 Workshops February 27 & 28, 2012 Snowbird, Utah

COSYNE 2012 Workshops, February 27 & 28, 2012, Snowbird, Utah

Monday, February 27

1. Coding and Computation in visual short-term memory. Organizer: W.J. Ma. Location: Superior A
2. Neuromodulation: beyond the wiring diagram, adding functional flexibility to neural circuits. Organizer: M. Higley. Location: Wasatch B
3. Sensorimotor processes reflected in spatiotemporal of neuronal activity. Organizers: Z. Kilpatrick, J-Y. Wu. Location: Superior B
4. Characterizing neural responses to structured and naturalistic stimuli. Organizers: K. Rajan, W. Bialek. Location: Wasatch A
5. Neurophysiological and computational mechanisms of categorization. Organizers: D. Freedman, XJ. Wang. Location: Magpie A
6. Perception and decision making in rodents. Organizers: S. Jaramillio, A. Zador. Location: Magpie B
7. Is it time for theory in olfaction? Organizers: V. Murphy, N. Uchida, G. Otazu, C. Poo. Location: Maybird

Workshop Co-Chairs (email, cell):
Brent Doiron, Pitt, [email protected], 412-576-5237
Jess Cardin, Yale, [email protected], 267-235-0462

Maps of Snowbird are at the end of this booklet (page 32).

Tuesday, February 28

1. Understanding heterogeneous cortical activity: the quest for structure and randomness. Organizers: S. Ardid, A. Bernacchia, T. Engel. Location: Wasatch A
2. Humans, neurons, and machines: how can psychophysics, physiology, and modeling collaborate to ask better questions in biological vision. Organizers: N. Majaj, E. Issa, J. DiCarlo. Location: Wasatch B
3. Inhibitory synaptic plasticity. Organizers: T. Vogels, H. Sprekeler, R. Froemke. Location: Magpie A
4. Functions of identified microcircuits. Organizers: A. Hasenstaub, V. Sohal. Location: Superior B
5. Promise and peril: genetics approaches for systems neuroscience revisited. Organizers: K. Nagal, D. Schoppik. Location: Superior A
6. Perception and decision making in rodents S.

Minian: an Open-Source Miniscope Analysis Pipeline

bioRxiv preprint doi: https://doi.org/10.1101/2021.05.03.442492; this version posted May 4, 2021. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license.

Title: Minian: An open-source miniscope analysis pipeline

Authors: Zhe Dong (1), William Mau (1), Yu (Susie) Feng (1), Zachary T. Pennington (1), Lingxuan Chen (1), Yosif Zaki (1), Kanaka Rajan (1), Tristan Shuman (1), Daniel Aharoni* (2), Denise J. Cai* (1)

*Corresponding Authors

1. Nash Family Department of Neuroscience, Icahn School of Medicine at Mount Sinai
2. Department of Neurology, David Geffen School of Medicine, University of California

Abstract: Miniature microscopes have gained considerable traction for in vivo calcium imaging in freely behaving animals. However, extracting calcium signals from raw videos is a computationally complex problem and remains a bottleneck for many researchers utilizing single-photon in vivo calcium imaging. Despite the existence of many powerful analysis packages designed to detect and extract calcium dynamics, most have either key parameters that are hard-coded or insufficient step-by-step guidance and validations to help the users choose the best parameters.

Neuronal Ensembles Wednesday, May 5Th, 2021

Neuronal Ensembles, Wednesday, May 5th, 2021

Hosted by the NeuroTechnology Center at Columbia University and the Norwegian University of Science and Technology in collaboration with the Kavli Foundation. Organized by Rafael Yuste and Emre Yaksi.

Times are listed as US Eastern Time, EDT (UTC -4), followed by European Time, CEDT (UTC +2).

Introduction
09:00 – 09:05 AM / 15:00 – 15:05: Rafael Yuste (Kavli Institute for Brain Science) and Emre Yaksi (Kavli Institute for Systems Neuroscience), Introductory Remarks
09:05 – 09:15 AM / 15:05 – 15:15: John Hopfield (Princeton University), Metaphor
09:15 – 09:50 AM / 15:15 – 15:50: Moshe Abeles (Bar Ilan University), Opening Keynote

Session 1: Development (Moderated by Rafael Yuste)
09:50 – 10:20 AM / 15:50 – 16:20: Rosa Cossart (Inmed, France), From cortical ensembles to hippocampal assemblies
10:20 – 10:50 AM / 16:20 – 16:50: Emre Yaksi (Kavli Institute for Systems Neuroscience), Function and development of habenular circuits in zebrafish brain
10:50 – 11:00 AM / 16:50 – 17:00: Break

Session 2: Sensory/Motor to Higher Order Computations (Moderated by Emre Yaksi)
11:00 – 11:30 AM / 17:00 – 17:30: Edvard Moser (Kavli Institute for Systems Neuroscience), Network dynamics of entorhinal grid cells
11:30 – 12:00 PM / 17:30 – 18:00: Gilles Laurent (Max Planck), Non-REM and REM sleep: mechanisms, dynamics, and evolution
12:00 – 12:30 PM / 18:00 – 18:30: György Buzsáki (New York University), Neuronal Ensembles: a reader-dependent viewpoint
12:30 – 12:45 PM / 18:30 – 18:45: Break

Session 3: Optogenetics (Moderated by Emre Yaksi)

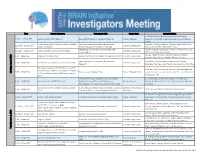

11:30Am EST Joshua Gordon, NIMH Director Neural Mechanisms Of

| Time | Session Moderator | Presentation Title | Session Type | Session Speakers |
| --- | --- | --- | --- | --- |
| 10:30 - 11:30am EST | Joshua Gordon, NIMH Director | Neural Mechanisms of Navigation Behavior | Plenary Keynote | Dr. Rachel Wilson, Martin Family Professor of Basic Research in the Field of Neurobiology, Harvard Medical School |
| 11:30am - 1:00pm EST | Grace Hwang, Johns Hopkins University Applied Physics Laboratory | How Can Dynamical Systems Neuroscience Reciprocally Advance Machine Learning? | Scientific Symposium | Konrad P. Kording, Joseph D. Monaco, Kanaka Rajan, Xaq Pitkow, Brad Pfeiffer, Nathaniel D. Daw |
| 11:30am - 1:00pm EST | Cynthia Chestek, University of Michigan | Developing and Distributing Novel Electrode Technologies | Scientific Symposium | Cynthia Chestek, Xie Chong, Tim Harris, Allison Yorita, Eric Yttri, Loren Frank, Rahul Panat |
| 1:30 - 3:00pm EST | Greg Worrell, Mayo Clinic | Advances in Neurotechnologies for Human Research | Scientific Symposium | Claudia Angeli, Phil Starr, Nanthia Suthana, Michelle Armenta Salas, Harrison Walker, Winston Chiong |
| 1:30 - 3:00pm EST | Zoe Donaldson, University of Colorado Boulder | Expanding Species Diversity in Neuroscience Research | Scientific Symposium | Cory Miller, Zoe Donaldson, Angeles Salles, Andres Bendesky, Paul Katz, Galit Pelled, Karen David, Cynthia Moss |
| Monday, 2020 June 1, 3:30 - 4:30pm EST | John Ngai, Director of NIH BRAIN Initiative AND Michelle Jones-London, Chief, Office of Programs | Trainee Award Highlight Talks | Trainee Highlight Talk | 15 of our 30 BRAIN Initiative Trainee Travel Awardees will speak; the names can be found on the Presenter |

How to Study the Neural Mechanisms of Multiple Tasks

Available online at www.sciencedirect.com, ScienceDirect

How to study the neural mechanisms of multiple tasks

Guangyu Robert Yang, Michael W Cole and Kanaka Rajan

Most biological and artificial neural systems are capable of completing multiple tasks. However, the neural mechanism by which multiple tasks are accomplished within the same system is largely unclear. We start by discussing how different tasks can be related, and methods to generate large sets of inter-related tasks to study how neural networks and animals perform multiple tasks. We then argue that there are mechanisms that emphasize either specialization or flexibility. We will review two such neural mechanisms underlying multiple tasks at the neuronal level (modularity and mixed selectivity), and discuss how different mechanisms can emerge depending on training methods in neural networks.

Address: Zuckerman Mind Brain Behavior Institute, Columbia University, Center for Molecular and Behavioral Neuroscience, Rutgers University-Newark,

... computational work has been devoted to understand the neural mechanisms behind individual tasks.

Although neural systems are usually studied with one task at a time, these systems are usually capable of performing many different tasks, and there are many reasons to study how a neural system can accomplish this. Studying multiple tasks can serve as a powerful constraint to both biological and artificial neural systems (Figure 1a). For one given task, there are often several alternative models that describe existing experimental results similarly well. The space of potential solutions can be reduced by the requirement of solving multiple tasks.

Experiments can uncover neural representation or mechanisms that appear sub-optimal for a single task.

Arxiv:1907.08549V2 [Q-Bio.NC] 4 Dec 2019 1 Introduction

Universality and individuality in neural dynamics across large populations of recurrent networks

Niru Maheswaranathan*, Google Brain, Google Inc., Mountain View, CA, [email protected]
Alex H. Williams*, Stanford University, Stanford, CA, [email protected]
Matthew D. Golub, Stanford University, Stanford, CA, [email protected]
Surya Ganguli, Stanford University and Google Brain, Stanford, CA and Mountain View, CA, [email protected]
David Sussillo, Google Brain, Google Inc., Mountain View, CA, [email protected]

Abstract: Task-based modeling with recurrent neural networks (RNNs) has emerged as a popular way to infer the computational function of different brain regions. These models are quantitatively assessed by comparing the low-dimensional neural representations of the model with the brain, for example using canonical correlation analysis (CCA). However, the nature of the detailed neurobiological inferences one can draw from such efforts remains elusive. For example, to what extent does training neural networks to solve common tasks uniquely determine the network dynamics, independent of modeling architectural choices? Or alternatively, are the learned dynamics highly sensitive to different model choices? Knowing the answer to these questions has strong implications for whether and how we should use task-based RNN modeling to understand brain dynamics. To address these foundational questions, we study populations of thousands of networks, with commonly used RNN architectures, trained to solve neuroscientifically motivated tasks and characterize

Spike-Timing-Dependent Ensemble Encoding by Non-Classically

RESEARCH ARTICLE

Spike-timing-dependent ensemble encoding by non-classically responsive cortical neurons

Michele N Insanally (1,2,3,4,5), Ioana Carcea (1,2,3,4,5), Rachel E Field (1,2,3,4,5), Chris C Rodgers (6,7), Brian DePasquale (8), Kanaka Rajan (9,10), Michael R DeWeese (11,12), Badr F Albanna (13), Robert C Froemke (1,2,4,5,14)*

1. Skirball Institute for Biomolecular Medicine, New York University School of Medicine, New York, United States
2. Neuroscience Institute, New York University School of Medicine, New York, United States
3. Department of Otolaryngology, New York University School of Medicine, New York, United States
4. Department of Neuroscience and Physiology, New York University School of Medicine, New York, United States
5. Center for Neural Science, New York University, New York, United States
6. Department of Neuroscience, Columbia University, New York, United States
7. Kavli Institute of Brain Science, Columbia University, New York, United States
8. Princeton Neuroscience Institute, Princeton University, Princeton, United States
9. Department of Neuroscience, Icahn School of Medicine at Mount Sinai, New York, United States
10. Friedman Brain Institute, Icahn School of Medicine at Mount Sinai, New York, United States
11. Helen Wills Neuroscience Institute, University of California, Berkeley, Berkeley, United States
12. Department of Physics, University of California, Berkeley, Berkeley, United States
13. Department of Natural Sciences, Fordham University, New York, United States
14. Howard Hughes Medical Institute, New York University School of Medicine, New York, United States

*For correspondence: [email protected]

Competing interests: The

Abstract: Neurons recorded in behaving animals often do not discernibly respond to sensory input and are not overtly task-modulated. These non-classically responsive neurons are difficult to interpret and are typically neglected from analysis, confounding attempts to connect neural activity to perception and behavior.

Multi-Region 'Network of Networks' Recurrent Neural Network Models of Adaptive and Maladaptive Learning

Title: Multi-region 'Network of Networks' Recurrent Neural Network Models of Adaptive and Maladaptive Learning PI(s) Grant: Kanaka Rajan Institution(s): Icahn School of Medicine at Mount Sinai Grant Number: 1R01EB028166-01 Title of Grant: Theories, Models, and Methods Abstract Authors: Mathew Perich Aaron Andalman Eugene Carter Other authors in the Deisseroth lab: Burns, V. M., Lovett-Barron, M., Broxton, M., Poole, M., Yang, S. J., Grosenick, L., Lerner, T. N., Chen, R., Benster, T., Mourrain, P., Levoy, M., Karl Deisseroth* Kanaka Rajan* *co-corresponding authors Abstract Text: Based on imaging in over 10,000 neurons across more than 15 regions imaged simultaneously in larval zebrafish during a novel behavioral challenge paradigm, we built and analyzed a recurrent neural network model constrained by the data from the outset. The model focused on activity in the habenula and raphe, identifying a significant and specific change in intra-habenular connectivity as a result of the behavioral challenge paradigm. We are extending this work to a whole-brain level multi-region recurrent neural network model and its accompanying theory of brain-wide control of adaptive to maladaptive state transitions. We consider the complex multi-dimensional interactions in the whole brain and relate them causally to behavior of the intact organism. We therefore present a new, more robust, scalable class of circuit models – multi-region neural network models, with support from a BRAIN Initiative/NIH Theories, Models, and Methods grant (1R01EB028166-01). These models are constrained by experimental data at two levels simultaneously: (a) large-scale neural dynamics – cellular resolution activity imaged from all 15 larval zebrafish brain regions – and (b) the behavior of the organism – tail movements tracked real-time in a range of behavioral states.