Neuronal Ensembles, Wednesday, May 5th, 2021



Recommended publications


Opportunities for US-Israeli Collaborations in Computational Neuroscience

Report of a Binational Workshop*
Larry Abbott and Naftali Tishby

Introduction
Both Israel and the United States have played, and continue to play, leading roles in the rapidly developing field of computational neuroscience, and both countries have strong interests in fostering collaboration in emerging research areas. A workshop was convened by the US-Israel Binational Science Foundation and the US National Science Foundation to discuss opportunities to encourage and support interdisciplinary collaborations among scientists from the US and Israel, centered around computational neuroscience. Seven leading experts from Israel and six from the US (Appendix 2) met in Jerusalem on November 14, 2012, to evaluate and characterize such research opportunities, and to generate suggestions and ideas about how best to proceed. The participants were asked to characterize the potential scientific opportunities that could be expected from collaborations between the US and Israel in computational neuroscience, and to discuss associated opportunities for training, applications, and other broader impacts, as well as practical considerations for maximizing success.

Computational Neuroscience in the United States and Israel
The computational research communities in the United States and Israel have both contributed significantly to the foundations of, and advances in, applying mathematical analysis and computational approaches to the study of neural circuits and behavior. This shared intellectual commitment has led to productive collaborations between US and Israeli researchers, and strong ties between a number of institutions in Israel and the US. These scientific collaborations are built on over 30 years of visits and joint publications, and the results have been extremely influential.

CV Cocuzza, DH Schultz, MW Cole

Guangyu Robert Yang
Computational Neuroscientist
"What I cannot create, I do not understand." – Richard Feynman
Last updated on June 22, 2020

Professional Position
2018– Postdoctoral Research Scientist, Center for Theoretical Neuroscience, Columbia University.
2019– Co-organizer, Computational and Cognitive Neuroscience Summer School.
2017 Software Engineering Intern, Google Brain, Mountain View, CA. Host: David Sussillo
2013–2017 Research Assistant, Center for Neural Science, New York University.
2011 Visiting Student Researcher, Department of Neurobiology, Yale University.

Education
2013–2018 Doctor of Philosophy, Center for Neural Science, New York University. Thesis: Neural circuit mechanisms of cognitive flexibility. Advisor: Xiao-Jing Wang
2012–2013 Doctoral Study, Interdepartmental Neuroscience Program, Yale University. Rotation Advisors: Daeyeol Lee and Mark Laubach
2008–2012 Bachelor of Science, School of Physics, Peking University. Thesis: Controlling Chaos in Random Recurrent Neural Networks. Advisor: Junren Shi
2010 Computational and Cognitive Neurobiology Summer School, Cold Spring Harbor Asia.

Selected Awards
2018–2021 Junior Fellow, Simons Society of Fellows
2019 CCN 2019 Trainee Travel Award
2018 Dean's Outstanding Dissertation Award in the Sciences, New York University
2016 Samuel J. and Joan B. Williamson Fellowship, New York University
2013–2016 MacCracken Fellowship, New York University
2011 Benz Scholarship, Peking University
2010 National Scholarship of China, China
2009 University Scholarship, Peking University
2007 Silver Medal, Chinese Physics Olympiad, China

Ongoing work presented at conferences (*=equal contributions)
2020 GR Yang*, PY Wang*, Y Sun, A Litwin-Kumar, R Axel, LF Abbott. Evolving the Olfactory System. CCN 2019 Oral, Cosyne 2020.
2020 S Minni*, L Ji-An*, T Moskovitz, G Lindsay, K Miller, M Dipoppa, GR Yang.

Dynamic Compression and Expansion in a Classifying Recurrent Network

bioRxiv preprint doi: https://doi.org/10.1101/564476; this version posted March 1, 2019. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under aCC-BY-NC-ND 4.0 International license.

Dynamic compression and expansion in a classifying recurrent network
Matthew Farrell (1, 2), Stefano Recanatesi (1), Guillaume Lajoie (3, 4), and Eric Shea-Brown (1, 2)
(1) Computational Neuroscience Center, University of Washington; (2) Department of Applied Mathematics, University of Washington; (3) Mila - Québec AI Institute; (4) Dept. of Mathematics and Statistics, Université de Montréal

Abstract
Recordings of neural circuits in the brain reveal extraordinary dynamical richness and high variability. At the same time, dimensionality reduction techniques generally uncover low-dimensional structures underlying these dynamics when tasks are performed. In general, it is still an open question what determines the dimensionality of activity in neural circuits, and what the functional role of this dimensionality in task learning is. In this work we probe these issues using a recurrent artificial neural network (RNN) model trained by stochastic gradient descent to discriminate inputs. The RNN family of models has recently shown promise in revealing principles behind brain function. Through simulations and mathematical analysis, we show how the dimensionality of RNN activity depends on the task parameters and evolves over time and over stages of learning. We find that common solutions produced by the network naturally compress dimensionality, while variability-inducing chaos can expand it. We show how chaotic networks balance these two factors to solve the discrimination task with high accuracy and good generalization properties.
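The excerpt does not say how dimensionality is measured. A common choice in this literature is the participation ratio, $\mathrm{PR} = (\sum_i \lambda_i)^2 / \sum_i \lambda_i^2$, where $\lambda_i$ are the eigenvalues of the covariance of unit activity. The sketch below assumes that measure; it is an illustration, not the authors' code, and `participation_ratio` is a hypothetical helper name.

```python
import numpy as np


def participation_ratio(activity):
    """Participation ratio of activity with shape (time, units).

    PR = (sum of covariance eigenvalues)^2 / (sum of squared eigenvalues).
    Assumed dimensionality measure; the paper's exact choice is not in the excerpt.
    """
    activity = np.asarray(activity, dtype=float)
    cov = np.cov(activity, rowvar=False)   # units x units covariance
    eig = np.linalg.eigvalsh(cov)          # real eigenvalues of a symmetric matrix
    return float(eig.sum() ** 2 / np.sum(eig ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_steps, n_units = 20000, 50

    # Variance spread evenly over all units: PR close to n_units.
    isotropic = rng.normal(size=(n_steps, n_units))

    # All variance along a single direction: PR close to 1.
    direction = rng.normal(size=n_units)
    one_dim = np.outer(rng.normal(size=n_steps), direction)

    print(participation_ratio(isotropic))
    print(participation_ratio(one_dim))
```

PR runs from 1 (all variance along one direction) up to the number of units (variance spread evenly), so compression would appear as a falling PR and chaos-driven expansion as a rising one.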

Dynamics of Excitatory-Inhibitory Neuronal Networks With

Cover art equations (partly recoverable): $I(X;Y) = S(X) - S(X \mid Y)$, $V(t) = V_0 + \int d\tau\, Z_1(\tau)\, I(t-\tau)$, $P(N) = \frac{1}{N!}\lambda^N e^{-\lambda}$, $V = RI$
www.cosyne.org
MAIN MEETING, Salt Lake City, UT, Feb 27 - Mar 2

Program Summary

Thursday, 27 February
4:00 pm Registration opens
5:30 pm Welcome reception
6:20 pm Opening remarks
6:30 pm Session 1: Keynote. Invited speaker: Thomas Jessell
7:30 pm Poster Session I

Friday, 28 February
7:30 am Breakfast
8:30 am Session 2: Circuits I: From wiring to function. Invited speaker: Thomas Mrsic-Flogel; 3 accepted talks
10:30 am Session 3: Circuits II: Population recording. Invited speaker: Elad Schneidman; 3 accepted talks
12:00 pm Lunch break
2:00 pm Session 4: Circuits III: Network models. 5 accepted talks
3:45 pm Session 5: Navigation: From phenomenon to mechanism. Invited speakers: Nachum Ulanovsky, Jeffrey Magee; 1 accepted talk
5:30 pm Dinner break
7:30 pm Poster Session II

Saturday, 1 March
7:30 am Breakfast
8:30 am Session 6: Behavior I: Dissecting innate movement. Invited speaker: Hopi Hoekstra; 3 accepted talks
10:30 am Session 7: Behavior II: Motor learning. Invited speaker: Rui Costa; 2 accepted talks
11:45 am Lunch break
2:00 pm Session 8: Behavior III: Motor performance. Invited speaker: John Krakauer; 2 accepted talks
3:45 pm Session 9: Reward: Learning and prediction. Invited speaker: Yael

COSYNE 2014 Workshops

COSYNE 2014 Workshops, Snowbird, UT, March 3-4, 2014
Organizers: Tatyana Sharpee, Robert Froemke

Monday, March 3, 2014
1. Computational psychiatry – Day 1. Organizers: Q. Huys, T. Maia. Location: Wasatch A
2. Information sampling in behavioral optimization – Day 1. Organizers: B. Averbeck, R.C. Wilson, M. R. Nassar. Location: Wasatch B
3. Rogue states: Altered Dynamics of neural activity in brain disorders. Organizers: C. O'Donnell, T. Sejnowski. Location: Magpie A
4. Scalable models for high dimensional neural data. Organizers: I. M. Park, E. Archer, J. Pillow. Location: Superior A
5. Homeostasis and self-regulation of developing circuits: From single neurons to networks. Organizers: J. Gjorgjieva, M. Hennig. Location: White Pine
6. Theories of mammalian perception: Open and closed loop modes of brain-world interactions. Organizers: E. Ahissar, E. Assa. Location: Magpie B
7. Noise correlations in the cortex: Quantification, origins, and functional significance. Organizers: J. Fiser, M. Lengyel, A. Pouget. Location: Superior B
8. Excitatory and inhibitory synaptic conductances: Functional roles and inference methods. Organizers: M. Lankarany, T. Toyoizumi. Location: Maybird

Workshop Co-Chairs (cell): Robert Froemke, NYU, 510-703-5702; Tatyana Sharpee, Salk, 858-610-7424
Maps of Snowbird are at the end of this booklet (page 38).

Predictive Coding in Balanced Neural Networks with Noise, Chaos and Delays

Predictive coding in balanced neural networks with noise, chaos and delays
Jonathan Kadmon (Department of Applied Physics), Jonathan Timcheck (Department of Physics), Surya Ganguli (Department of Applied Physics), Stanford University, CA

Abstract
Biological neural networks face a formidable task: performing reliable computations in the face of intrinsic stochasticity in individual neurons, imprecisely specified synaptic connectivity, and nonnegligible delays in synaptic transmission. A common approach to combatting such biological heterogeneity involves averaging over large redundant networks of $N$ neurons, resulting in coding errors that decrease classically as $1/\sqrt{N}$. Recent work demonstrated a novel mechanism whereby recurrent spiking networks could efficiently encode dynamic stimuli, achieving a superclassical scaling in which coding errors decrease as $1/N$. This specific mechanism involved two key ideas: predictive coding, and a tight balance, or cancellation, between strong feedforward inputs and strong recurrent feedback. However, the theoretical principles governing the efficacy of balanced predictive coding and its robustness to noise, synaptic weight heterogeneity, and communication delays remain poorly understood. To discover such principles, we introduce an analytically tractable model of balanced predictive coding in which, unlike in previous balanced network models, the degree of balance and the degree of weight disorder can be dissociated, and we develop a mean field theory of coding accuracy. Overall, our work provides and solves a general theoretical framework for dissecting the differential contributions of neural noise, synaptic disorder, chaos, synaptic delays, and balance to the fidelity of predictive neural codes, reveals the fundamental role that balance plays in achieving superclassical scaling, and unifies previously disparate models in theoretical neuroscience.
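The classical $1/\sqrt{N}$ baseline that the abstract contrasts with superclassical $1/N$ scaling can be checked numerically. The sketch below assumes $N$ neurons that each report the same signal plus independent Gaussian noise, decoded by simple averaging. It illustrates only the classical case, not the balanced predictive-coding network, and `averaging_rms_error` is a hypothetical name.

```python
import numpy as np


def averaging_rms_error(n_neurons, sigma=1.0, trials=10000, seed=0):
    """RMS error of decoding a signal by averaging n_neurons noisy copies.

    Each neuron reports the true signal (taken as 0 without loss of
    generality) plus independent Gaussian noise with standard deviation sigma.
    """
    rng = np.random.default_rng(seed)
    noise = rng.normal(0.0, sigma, size=(trials, n_neurons))
    estimate = noise.mean(axis=1)          # population-average readout per trial
    return float(np.sqrt(np.mean(estimate ** 2)))


if __name__ == "__main__":
    for n in (25, 100, 400):
        # Simulated error next to the classical prediction sigma / sqrt(N).
        print(n, averaging_rms_error(n), 1.0 / np.sqrt(n))
```

Quadrupling $N$ roughly halves the error here; a code with superclassical $1/N$ scaling would instead cut it by about a factor of four.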

Neural Dynamics and the Geometry of Population Activity

Abigail A. Russo
Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy under the Executive Committee of the Graduate School of Arts and Sciences, Columbia University, 2019
© 2019 Abigail A. Russo. All Rights Reserved.

Abstract
A growing body of research indicates that much of the brain's computation is not visible in the activity of individual neurons but is instead instantiated through population-level dynamics. According to this 'dynamical systems hypothesis', population-level neural activity evolves according to underlying dynamics that are shaped by network connectivity. While these dynamics are not directly observable in empirical data, they can be inferred by studying the structure of population trajectories. Quantification of this structure, the 'trajectory geometry', can then guide thinking on the underlying computation. Alternatively, modeling neural populations as dynamical systems can predict trajectory geometries appropriate for particular tasks. This approach of characterizing and interpreting trajectory geometry is providing new insights in many cortical areas, including regions involved in motor control and areas that mediate cognitive processes such as decision-making. In this thesis, I advance the characterization of population structure by introducing hypothesis-guided metrics for the quantification of trajectory geometry. These metrics, trajectory tangling in primary motor cortex and trajectory divergence in the Supplementary Motor Area, abstract away from task-specific solutions and toward underlying computations and network constraints that drive trajectory geometry. Primate motor cortex (M1) projects to spinal interneurons and motoneurons, suggesting that motor cortex activity may be dominated by muscle-like commands.
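Trajectory tangling is commonly written as $Q(t) = \max_{t'} \frac{\lVert \dot{x}_t - \dot{x}_{t'} \rVert^2}{\lVert x_t - x_{t'} \rVert^2 + \varepsilon}$: it is large when two nearby population states have very different derivatives, which smooth autonomous dynamics cannot produce. The sketch below assumes that form, estimates derivatives with finite differences, and sets $\varepsilon$ to 0.1 times the mean squared distance of states from their centroid. These choices, and the name `tangling`, are assumptions for illustration rather than the procedure used in the thesis.

```python
import numpy as np


def tangling(states, dt, eps_scale=0.1):
    """Tangling Q(t) for a trajectory `states` with shape (time, dims).

    Q(t) = max over t' of |dx(t) - dx(t')|^2 / (|x(t) - x(t')|^2 + eps),
    with derivatives from finite differences and eps proportional to the
    mean squared distance of the states from their centroid (assumed choice).
    """
    x = np.asarray(states, dtype=float)
    dx = np.gradient(x, dt, axis=0)                      # time-derivative estimate
    centered = x - x.mean(axis=0)
    eps = eps_scale * np.mean(np.sum(centered ** 2, axis=1))

    # Pairwise squared distances between states and between derivatives.
    state_dist = np.sum((x[:, None, :] - x[None, :, :]) ** 2, axis=-1)
    deriv_dist = np.sum((dx[:, None, :] - dx[None, :, :]) ** 2, axis=-1)
    return np.max(deriv_dist / (state_dist + eps), axis=1)


if __name__ == "__main__":
    t = np.linspace(0.0, 2.0 * np.pi, 200, endpoint=False)
    dt = t[1] - t[0]
    circle = np.stack([np.cos(t), np.sin(t)], axis=1)            # never crosses itself
    figure_eight = np.stack([np.sin(t), np.sin(2 * t)], axis=1)  # crosses at the origin
    print(tangling(circle, dt).max(), tangling(figure_eight, dt).max())
```

The circle stays below 1 everywhere, while the figure-eight is strongly tangled where it passes through the origin twice with different velocities.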

COSYNE 2012 Workshops February 27 & 28, 2012 Snowbird, Utah

Monday, February 27
1. Coding and Computation in visual short-term memory. Organizer: W.J. Ma. Location: Superior A
2. Neuromodulation: beyond the wiring diagram, adding functional flexibility to neural circuits. Organizer: M. Higley. Location: Wasatch B
3. Sensorimotor processes reflected in spatiotemporal of neuronal activity. Organizers: Z. Kilpatrick, J-Y. Wu. Location: Superior B
4. Characterizing neural responses to structured and naturalistic stimuli. Organizers: K. Rajan, W. Bialek. Location: Wasatch A
5. Neurophysiological and computational mechanisms of categorization. Organizers: D. Freedman, XJ. Wang. Location: Magpie A
6. Perception and decision making in rodents. Organizers: S. Jaramillo, A. Zador. Location: Magpie B
7. Is it time for theory in olfaction? Organizers: V. Murthy, N. Uchida, G. Otazu, C. Poo. Location: Maybird

Workshop Co-Chairs (cell): Brent Doiron, Pitt, 412-576-5237; Jess Cardin, Yale, 267-235-0462
Maps of Snowbird are at the end of this booklet (page 32).

Tuesday, February 28
1. Understanding heterogeneous cortical activity: the quest for structure and randomness. Organizers: S. Ardid, A. Bernacchia, T. Engel. Location: Wasatch A
2. Humans, neurons, and machines: how can psychophysics, physiology, and modeling collaborate to ask better questions in biological vision. Organizers: N. Majaj, E. Issa, J. DiCarlo. Location: Wasatch B
3. Inhibitory synaptic plasticity. Organizers: T. Vogels, H. Sprekeler, R. Froemke. Location: Magpie A
4. Functions of identified microcircuits. Organizers: A. Hasenstaub, V. Sohal. Location: Superior B
5. Promise and peril: genetics approaches for systems neuroscience revisited. Organizers: K. Nagel, D. Schoppik. Location: Superior A
6. Perception and decision making in rodents. S.

The IBRO Simons Computational Neuroscience Imbizo

IBRO Simons Imbizo 2019
The IBRO Simons Computational Neuroscience Imbizo, Muizenberg, South Africa, 2017 - 2019
im'bi-zo | Xhosa - Zulu: A gathering of the people to share knowledge.
HTTP://IMBIZO.AFRICA

IN BRIEF, the Imbizo was conceived in 2016 to bring together and connect those interested in neuroscience, Africa, and African neuroscience, to share knowledge, and to create a pan-continental and international community of scientists. With generous support from the Simons Foundation and the International Brain Research Organisation (IBRO), the Imbizo became a wild success. As a summer school for computational and systems neuroscience, it is quickly becoming an established force in African neuroscience. Here, we review and assess the first three years and discuss future plans.

DIRECTORS
Professor Peter Latham, Gatsby Computational Neuroscience Unit, Sainsbury Wellcome Centre, 25 Howland Street, London W1T 4JG, United Kingdom
Dr Joseph Raimondo, Department of Human Biology, University of Cape Town, Anzio Road, Cape Town, 7925, South Africa
Professor Tim Vogels, CNCB, Tinsley Building, Mansfield Road, Oxford University, OX1 3SR, United Kingdom

LOCAL ORGANIZERS
Alexander Antrobus, Gatsby Computational Neuroscience Unit, Sainsbury Wellcome Centre, 25 Howland Street, London W1T 4JG, United Kingdom (2017)
Jenni Jones, Pie Management, 17 Riverside Rd., Pinelands, Cape Town. Tel: +27 21 530 6060

Minian: an Open-Source Miniscope Analysis Pipeline

bioRxiv preprint doi: https://doi.org/10.1101/2021.05.03.442492; this version posted May 4, 2021. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under aCC-BY-NC-ND 4.0 International license.

Title: Minian: An open-source miniscope analysis pipeline
Authors: Zhe Dong (1), William Mau (1), Yu (Susie) Feng (1), Zachary T. Pennington (1), Lingxuan Chen (1), Yosif Zaki (1), Kanaka Rajan (1), Tristan Shuman (1), Daniel Aharoni* (2), Denise J. Cai* (1)
*Corresponding Authors
(1) Nash Family Department of Neuroscience, Icahn School of Medicine at Mount Sinai
(2) Department of Neurology, David Geffen School of Medicine, University of California

Abstract
Miniature microscopes have gained considerable traction for in vivo calcium imaging in freely behaving animals. However, extracting calcium signals from raw videos is a computationally complex problem and remains a bottleneck for many researchers utilizing single-photon in vivo calcium imaging. Despite the existence of many powerful analysis packages designed to detect and extract calcium dynamics, most have either key parameters that are hard-coded or insufficient step-by-step guidance and validations to help the users choose the best parameters.
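Minian's own pipeline is not described in this excerpt. As a generic illustration of what extracting a calcium signal involves at its simplest, the sketch below averages the pixels inside a region-of-interest mask and converts the trace to ΔF/F against a percentile baseline. The function names, the hand-drawn mask, and the 10th-percentile baseline are assumptions; real pipelines add steps such as motion correction and source separation, with parameters that must be chosen carefully, which is the difficulty the abstract describes.

```python
import numpy as np


def roi_trace(video, mask):
    """Mean fluorescence inside a boolean ROI mask for each frame.

    video: array (frames, height, width); mask: boolean array (height, width).
    """
    return np.asarray(video, dtype=float)[:, mask].mean(axis=1)


def delta_f_over_f(trace, baseline_percentile=10.0):
    """(F - F0) / F0, with F0 a low percentile of the whole trace (assumed baseline)."""
    f0 = np.percentile(trace, baseline_percentile)
    return (trace - f0) / f0


if __name__ == "__main__":
    # Synthetic 100-frame video: flat background with one brighter frame in a 2x2 ROI.
    video = np.full((100, 4, 4), 100.0)
    video[50, 1:3, 1:3] = 150.0
    mask = np.zeros((4, 4), dtype=bool)
    mask[1:3, 1:3] = True
    dff = delta_f_over_f(roi_trace(video, mask))
    print(dff[50])
```

A single global percentile is the simplest baseline; a sliding-window baseline is often used instead when fluorescence drifts over a session.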

11:30am EST Joshua Gordon, NIMH Director, Neural Mechanisms of

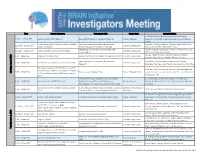

10:30 - 11:30am EST. Plenary Keynote: "Neural Mechanisms of Navigation Behavior". Moderator: Joshua Gordon, NIMH Director. Speaker: Dr. Rachel Wilson, Martin Family Professor of Basic Research in the Field of Neurobiology, Harvard Medical School.
11:30am - 1:00pm EST. Scientific Symposium: "How Can Dynamical Systems Neuroscience Reciprocally Advance Machine Learning?" Moderator: Grace Hwang, Johns Hopkins University Applied Physics Laboratory. Speakers: Konrad P. Kording, Joseph D. Monaco, Kanaka Rajan, Xaq Pitkow, Brad Pfeiffer, Nathaniel D. Daw.
11:30am - 1:00pm EST. Scientific Symposium: "Developing and Distributing Novel Electrode Technologies". Moderator: Cynthia Chestek, University of Michigan. Speakers: Cynthia Chestek, Xie Chong, Tim Harris, Allison Yorita, Eric Yttri, Loren Frank, Rahul Panat.
1:30 - 3:00pm EST. Scientific Symposium: "Advances in Neurotechnologies for Human Research". Moderator: Greg Worrell, Mayo Clinic. Speakers: Claudia Angeli, Phil Starr, Nanthia Suthana, Michelle Armenta Salas, Harrison Walker, Winston Chiong.
1:30 - 3:00pm EST. Scientific Symposium: "Expanding Species Diversity in Neuroscience Research". Moderator: Zoe Donaldson, University of Colorado Boulder. Speakers: Cory Miller, Zoe Donaldson, Angeles Salles, Andres Bendesky, Paul Katz, Galit Pelled, Karen David, Cynthia Moss.
Monday, June 1, 2020, 3:30 - 4:30pm EST. Trainee Highlight Talk: "Trainee Award Highlight Talks". Moderators: John Ngai, Director of NIH BRAIN Initiative, and Michelle Jones-London, Chief, Office of Programs. Speakers: 15 of our 30 BRAIN Initiative Trainee Travel Awardees will speak; the names can be found on the Presenter

How to Study the Neural Mechanisms of Multiple Tasks

Available online at www.sciencedirect.com (ScienceDirect)
How to study the neural mechanisms of multiple tasks
Guangyu Robert Yang, Michael W Cole and Kanaka Rajan

Most biological and artificial neural systems are capable of completing multiple tasks. However, the neural mechanism by which multiple tasks are accomplished within the same system is largely unclear. We start by discussing how different tasks can be related, and methods to generate large sets of inter-related tasks to study how neural networks and animals perform multiple tasks. We then argue that there are mechanisms that emphasize either specialization or flexibility. We will review two such neural mechanisms underlying multiple tasks at the neuronal level (modularity and mixed selectivity), and discuss how different mechanisms can emerge depending on training methods in neural networks.

Address: Zuckerman Mind Brain Behavior Institute, Columbia University; Center for Molecular and Behavioral Neuroscience, Rutgers University-Newark [...]

[...] computational work has been devoted to understanding the neural mechanisms behind individual tasks. Although neural systems are usually studied with one task at a time, these systems are typically capable of performing many different tasks, and there are many reasons to study how a neural system can accomplish this. Studying multiple tasks can serve as a powerful constraint on both biological and artificial neural systems (Figure 1a). For any single task, there are often several alternative models that describe existing experimental results similarly well. The space of potential solutions can be reduced by the requirement of solving multiple tasks. Experiments can uncover neural representations or mechanisms that appear sub-optimal for a single task.
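One way to ask whether units are modular (specialized for one task) or shared across tasks is to compare how much each unit's activity varies across stimulus conditions within each task. The sketch below computes a per-unit task variance and a fractional task variance, $(TV_A - TV_B)/(TV_A + TV_B)$, which is near +1 or -1 for a unit engaged by only one task and near 0 for a unit engaged equally by both. The array layout and function names are assumptions for illustration, not code from the review.

```python
import numpy as np


def task_variance(activity):
    """Per-unit task variance for activity shaped (conditions, time, units).

    Variance across stimulus conditions, then averaged over time.
    """
    return np.asarray(activity, dtype=float).var(axis=0).mean(axis=0)


def fractional_task_variance(activity_a, activity_b, eps=1e-12):
    """(TV_A - TV_B) / (TV_A + TV_B) per unit: +1 = task A only, -1 = task B only."""
    tv_a = task_variance(activity_a)
    tv_b = task_variance(activity_b)
    return (tv_a - tv_b) / (tv_a + tv_b + eps)


if __name__ == "__main__":
    # Three toy units over 8 conditions x 20 time steps:
    # unit 0 is tuned only in task A, unit 1 only in task B, unit 2 in both.
    tuned = np.repeat(np.arange(8.0)[:, None], 20, axis=1)
    silent = np.zeros_like(tuned)
    task_a = np.stack([tuned, silent, tuned], axis=-1)
    task_b = np.stack([silent, tuned, tuned], axis=-1)
    print(fractional_task_variance(task_a, task_b))
```

Across many units, values piling up near ±1 would point toward modular organization, while values concentrated near 0 would point toward shared, mixed-selective representations.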