Hebbian Plasticity Requires Compensatory Processes on Multiple Timescales

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The Synaptic Weight Matrix

The Synaptic Weight Matrix: Dynamics, Symmetry Breaking, and Disorder. Francesco Fumarola. Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Graduate School of Arts and Sciences, COLUMBIA UNIVERSITY, 2018. © 2018 Francesco Fumarola. All rights reserved. ABSTRACT A key role in simplified models of neural circuitry (Wilson and Cowan, 1972) is played by the matrix of synaptic weights, also called connectivity matrix, whose elements describe the amount of influence the firing of one neuron has on another. Biologically, this matrix evolves over time whether or not sensory inputs are present, and symmetries possessed by the internal dynamics of the network may break up spontaneously, as found in the development of the visual cortex (Hubel and Wiesel, 1977). In this thesis, a full analytical treatment is provided for the simplest case of such a biological phenomenon, a single dendritic arbor driven by correlation-based dynamics (Linsker, 1988). Borrowing methods from the theory of Schrödinger operators, a complete study of the model is performed, analytically describing the break-up of rotational symmetry that leads to the functional specialization of cells. The structure of the eigenfunctions is calculated, lower bounds are derived on the critical value of the correlation length, and explicit expressions are obtained for the long-term profile of receptive fields, i.e. the dependence of neural activity on external inputs. The emergence of a functional architecture of orientation preferences in the cortex is another crucial feature of visual information processing.

This Document Is the Result of Extensive Work Approved by the Thesis Committee and Made Available to the Wider University Community

NOTICE This document is the result of extensive work approved by the thesis committee and made available to the wider university community. It is subject to the author's intellectual property rights. This implies an obligation to cite and reference it whenever the document is used. Furthermore, any counterfeiting, plagiarism, or unlawful reproduction is liable to criminal prosecution. Contact: [email protected] LINKS Code de la Propriété Intellectuelle, articles L 122.4. Code de la Propriété Intellectuelle, articles L 335.2 - L 335.10. http://www.cfcopies.com/V2/leg/leg_droi.php http://www.culture.gouv.fr/culture/infos-pratiques/droits/protection.htm École doctorale IAEM Lorraine, Département de formation doctorale en informatique. Apprentissage spatial de corrélations multimodales par des mécanismes d'inspiration corticale (Spatial learning of multimodal correlations through cortically inspired mechanisms). Thesis for the degree of Doctor of the Université de Lorraine (specialty: computer science) by Mathieu Lefort. Jury. President: Jean-Christophe Buisson, Professor, ENSEEIHT. Reviewers: Arnaud Revel, Professor, Université de La Rochelle; Hugues Berry, CR HDR, INRIA Rhône-Alpes. Examiners: Benoît Girard, CR HDR, CNRS ISIR; Yann Boniface, MCF, Université de Lorraine. Thesis advisor: Bernard Girau, Professor, Université de Lorraine. Laboratoire Lorrain de Recherche en Informatique et ses Applications, UMR 7503. Typeset with the thloria class. To all those who have found or will find some interest in reading this manuscript or in spending time with me. Acknowledgments I would like to thank my reviewers, Arnaud Revel and Hugues Berry, for agreeing to take the time to evaluate my work and for commenting on it constructively despite the relatively tight deadlines.

Dynamic Compression and Expansion in a Classifying Recurrent Network

bioRxiv preprint doi: https://doi.org/10.1101/564476; this version posted March 1, 2019. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license. Dynamic compression and expansion in a classifying recurrent network. Matthew Farrell (1,2), Stefano Recanatesi (1), Guillaume Lajoie (3,4), and Eric Shea-Brown (1,2). (1) Computational Neuroscience Center, University of Washington; (2) Department of Applied Mathematics, University of Washington; (3) Mila - Québec AI Institute; (4) Dept. of Mathematics and Statistics, Université de Montréal. Abstract Recordings of neural circuits in the brain reveal extraordinary dynamical richness and high variability. At the same time, dimensionality reduction techniques generally uncover low-dimensional structures underlying these dynamics when tasks are performed. In general, it is still an open question what determines the dimensionality of activity in neural circuits, and what the functional role of this dimensionality in task learning is. In this work we probe these issues using a recurrent artificial neural network (RNN) model trained by stochastic gradient descent to discriminate inputs. The RNN family of models has recently shown promise in revealing principles behind brain function. Through simulations and mathematical analysis, we show how the dimensionality of RNN activity depends on the task parameters and evolves over time and over stages of learning. We find that common solutions produced by the network naturally compress dimensionality, while variability-inducing chaos can expand it. We show how chaotic networks balance these two factors to solve the discrimination task with high accuracy and good generalization properties.

Synaptic Plasticity in Correlated Balanced Networks

bioRxiv preprint doi: https://doi.org/10.1101/2020.04.26.061515; this version posted April 28, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license. Synaptic Plasticity in Correlated Balanced Networks. Alan Eric Akil, Department of Mathematics, University of Houston, Houston, Texas, United States of America; Robert Rosenbaum, Department of Applied and Computational Mathematics and Statistics, University of Notre Dame, Notre Dame, Indiana, United States of America; Krešimir Josić, Department of Mathematics, University of Houston, Houston, Texas, United States of America (Dated: April 2020). Abstract The dynamics of local cortical networks are irregular, but correlated. Dynamic excitatory-inhibitory balance is a plausible mechanism that generates such irregular activity, but it remains unclear how balance is achieved and maintained in plastic neural networks. In particular, it is not fully understood how plasticity-induced changes in the network affect balance, and in turn, how correlated, balanced activity impacts learning. How do the dynamics of balanced networks change under different plasticity rules? How does correlated spiking activity in recurrent networks change the evolution of weights, their eventual magnitude, and structure across the network? To address these questions, we develop a general theory of plasticity in balanced networks.

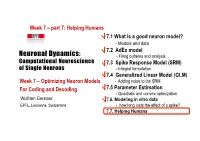

Neuronal Dynamics

Neuronal Dynamics: Computational Neuroscience of Single Neurons. Week 7 – Optimizing Neuron Models for Coding and Decoding. Wulfram Gerstner, EPFL, Lausanne, Switzerland. Week 7 – part 7: Helping Humans. Outline: 7.1 What is a good neuron model? (Models and data); 7.2 AdEx model (Firing patterns and analysis); 7.3 Spike Response Model (SRM) (Integral formulation); 7.4 Generalized Linear Model (GLM) (Adding noise to the SRM); 7.5 Parameter Estimation (Quadratic and convex optimization); 7.6 Modeling in vitro data (how long does the effect of a spike last?); 7.7 Helping Humans. Neuronal Dynamics – Review: Models and Data. Predict spike times; predict subthreshold voltage; easy to interpret (not a 'black box'); variety of phenomena; systematic: 'optimize' parameters. BUT so far limited to in vitro. Neuronal Dynamics – 7.7 Systems neuroscience, in vivo. Now: extracellular recordings, visual cortex. Model of 'Encoding': A) Predict spike times, given stimulus; B) Predict subthreshold voltage; C) Easy to interpret (not a 'black box'); D) Flexible enough to account for a variety of phenomena; E) Systematic procedure to 'optimize' parameters. Neuronal Dynamics – 7.7 Estimation of receptive fields. Estimation of spatial (and temporal) receptive fields. LNP: u(t) = Σ_k k_k I_{K−k} + u_rest; firing intensity ρ(t) = f(u(t) − ϑ(t)). Visual stimulus. LNP = Linear-Nonlinear-Poisson, a special case of GLM = Generalized Linear Model. GLM for prediction of retinal ganglion ON cell activity, Pillow et al.

Energy Efficient Synaptic Plasticity

RESEARCH ARTICLE Energy efficient synaptic plasticity. Ho Ling Li (1), Mark CW van Rossum (1,2)*. (1) School of Psychology, University of Nottingham, Nottingham, United Kingdom; (2) School of Mathematical Sciences, University of Nottingham, Nottingham, United Kingdom. Abstract Many aspects of the brain's design can be understood as the result of evolutionary drive toward metabolic efficiency. In addition to the energetic costs of neural computation and transmission, experimental evidence indicates that synaptic plasticity is metabolically demanding as well. As synaptic plasticity is crucial for learning, we examine how these metabolic costs enter in learning. We find that when synaptic plasticity rules are naively implemented, training neural networks requires extremely large amounts of energy when storing many patterns. We propose that this is avoided by precisely balancing labile forms of synaptic plasticity with more stable forms. This algorithm, termed synaptic caching, boosts energy efficiency manifold and can be used with any plasticity rule, including back-propagation. Our results yield a novel interpretation of the multiple forms of neural synaptic plasticity observed experimentally, including synaptic tagging and capture phenomena. Furthermore, our results are relevant for energy efficient neuromorphic designs. Introduction The human brain only weighs 2% of the total body mass but is responsible for 20% of resting metabolism (Attwell and Laughlin, 2001; Harris et al., 2012). The brain's energy need is believed to have shaped many aspects of its design, such as its sparse coding strategy (Levy and Baxter, 1996; Lennie, 2003), the biophysics of the mammalian action potential (Alle et al., 2009; Fohlmeister, 2009), and synaptic failure (Levy and Baxter, 2002; Harris et al., 2012).

Hebbian Learning and Spiking Neurons

PHYSICAL REVIEW E, VOLUME 59, NUMBER 4, APRIL 1999. Hebbian learning and spiking neurons. Richard Kempter, Physik Department, Technische Universität München, D-85747 Garching bei München, Germany; Wulfram Gerstner, Swiss Federal Institute of Technology, Center of Neuromimetic Systems, EPFL-DI, CH-1015 Lausanne, Switzerland; J. Leo van Hemmen, Physik Department, Technische Universität München, D-85747 Garching bei München, Germany (Received 6 August 1998; revised manuscript received 23 November 1998). A correlation-based ("Hebbian") learning rule at a spike level with millisecond resolution is formulated, mathematically analyzed, and compared with learning in a firing-rate description. The relative timing of presynaptic and postsynaptic spikes influences synaptic weights via an asymmetric "learning window." A differential equation for the learning dynamics is derived under the assumption that the time scales of learning and neuronal spike dynamics can be separated. The differential equation is solved for a Poissonian neuron model with stochastic spike arrival. It is shown that correlations between input and output spikes tend to stabilize structure formation. With an appropriate choice of parameters, learning leads to an intrinsic normalization of the average weight and the output firing rate. Noise generates diffusion-like spreading of synaptic weights. [S1063-651X(99)02804-4] PACS number(s): 87.19.La, 87.19.La, 05.65.+b, 87.18.Sn. I. INTRODUCTION Correlation-based or "Hebbian" learning [1] is thought to be an important mechanism for the tuning of neuronal … In contrast to the standard rate models of Hebbian learning, we introduce and analyze a learning rule where synaptic modifications are driven by the temporal correlations between presynaptic and postsynaptic spikes.

Connectivity Reflects Coding: a Model of Voltage-Based Spike-Timing-Dependent-P

Connectivity reflects Coding: A Model of Voltage-based Spike-Timing-Dependent-Plasticity with Homeostasis. Claudia Clopath, Lars Büsing*, Eleni Vasilaki, Wulfram Gerstner. Laboratory of Computational Neuroscience, Brain-Mind Institute and School of Computer and Communication Sciences, Ecole Polytechnique Fédérale de Lausanne, 1015 Lausanne EPFL, Switzerland. * permanent address: Institut für Grundlagen der Informationsverarbeitung, TU Graz, Austria. Abstract Electrophysiological connectivity patterns in cortex often show a few strong connections, sometimes bidirectional, in a sea of weak connections. In order to explain these connectivity patterns, we use a model of Spike-Timing-Dependent Plasticity where synaptic changes depend on presynaptic spike arrival and the postsynaptic membrane potential, filtered with two different time constants. The model describes several nonlinear effects in STDP experiments, as well as the voltage dependence of plasticity. We show that in a simulated recurrent network of spiking neurons our plasticity rule leads not only to development of localized receptive fields, but also to connectivity patterns that reflect the neural code: for temporal coding paradigms with spatio-temporal input correlations, strong connections are predominantly unidirectional, whereas they are bidirectional under rate coded input with spatial correlations only. Thus variable connectivity patterns in the brain could reflect different coding principles across brain areas; moreover simulations suggest that plasticity is surprisingly fast. Keywords: Synaptic plasticity, STDP, LTP, LTD, Voltage, Spike. Introduction Experience-dependent changes in receptive fields [1-2] or in learned behavior relate to changes in synaptic strength. Electrophysiological measurements of functional connectivity patterns in slices of neural tissue [3-4] or anatomical connectivity measures [5] can only present a snapshot of the momentary connectivity, which may change over time [6].

Enhancing Nervous System Recovery Through Neurobiologics, Neural Interface Training, and Neurorehabilitation

UCLA Previously Published Works. Title: Enhancing Nervous System Recovery through Neurobiologics, Neural Interface Training, and Neurorehabilitation. Permalink: https://escholarship.org/uc/item/0rb4m424 Authors: Krucoff, Max O; Rahimpour, Shervin; Slutzky, Marc W; et al. Publication Date: 2016. DOI: 10.3389/fnins.2016.00584. Peer reviewed. eScholarship.org, powered by the California Digital Library, University of California. REVIEW published: 27 December 2016. Max O. Krucoff (1)*, Shervin Rahimpour (1), Marc W. Slutzky (2,3), V. Reggie Edgerton (4) and Dennis A. Turner (1,5,6). (1) Department of Neurosurgery, Duke University Medical Center, Durham, NC, USA; (2) Department of Physiology, Feinberg School of Medicine, Northwestern University, Chicago, IL, USA; (3) Department of Neurology, Feinberg School of Medicine, Northwestern University, Chicago, IL, USA; (4) Department of Integrative Biology and Physiology, University of California, Los Angeles, Los Angeles, CA, USA; (5) Department of Neurobiology, Duke University Medical Center, Durham, NC, USA; (6) Research and Surgery Services, Durham Veterans Affairs Medical Center, Durham, NC, USA. Edited by: Ioan Opris, University of Miami, USA. Reviewed by: Yoshio Sakurai, Doshisha University, Japan; Brian R. … After an initial period of recovery, human neurological injury has long been thought to be static. In order to improve quality of life for those suffering from stroke, spinal cord injury, or traumatic brain injury, researchers have been working to restore the nervous system and reduce neurological deficits through a number of mechanisms. For example, neurobiologists have been identifying and manipulating components of the intra- and extracellular milieu to alter the regenerative potential of neurons, neuro-engineers have been producing brain-machine and neural interfaces that circumvent lesions to restore functionality, and neurorehabilitation experts have been developing new ways to revitalize

Dynamics of Excitatory-Inhibitory Neuronal Networks With

www.cosyne.org MAIN MEETING, Salt Lake City, UT, Feb 27 - Mar 2. Program Summary. Thursday, 27 February: 4:00 pm Registration opens; 5:30 pm Welcome reception; 6:20 pm Opening remarks; 6:30 pm Session 1: Keynote, invited speaker: Thomas Jessell; 7:30 pm Poster Session I. Friday, 28 February: 7:30 am Breakfast; 8:30 am Session 2: Circuits I: From wiring to function, invited speaker: Thomas Mrsic-Flogel, 3 accepted talks; 10:30 am Session 3: Circuits II: Population recording, invited speaker: Elad Schneidman, 3 accepted talks; 12:00 pm Lunch break; 2:00 pm Session 4: Circuits III: Network models, 5 accepted talks; 3:45 pm Session 5: Navigation: From phenomenon to mechanism, invited speakers: Nachum Ulanovsky, Jeffrey Magee, 1 accepted talk; 5:30 pm Dinner break; 7:30 pm Poster Session II. Saturday, 1 March: 7:30 am Breakfast; 8:30 am Session 6: Behavior I: Dissecting innate movement, invited speaker: Hopi Hoekstra, 3 accepted talks; 10:30 am Session 7: Behavior II: Motor learning, invited speaker: Rui Costa, 2 accepted talks; 11:45 am Lunch break; 2:00 pm Session 8: Behavior III: Motor performance, invited speaker: John Krakauer, 2 accepted talks; 3:45 pm Session 9: Reward: Learning and prediction, invited speaker: Yael

COSYNE 2014 Workshops

www.cosyne.org WORKSHOPS, Snowbird, UT, Mar 3 - 4. COSYNE 2014 Workshops, March 3 & 4, 2014, Snowbird, Utah. Organizers: Tatyana Sharpee, Robert Froemke. Monday, March 3, 2014 (workshop; organizers; location): 1. Computational psychiatry – Day 1; Q. Huys, T. Maia; Wasatch A. 2. Information sampling in behavioral optimization – Day 1; B. Averbeck, R.C. Wilson, M. R. Nassar; Wasatch B. 3. Rogue states: Altered dynamics of neural activity in brain disorders; C. O'Donnell, T. Sejnowski; Magpie A. 4. Scalable models for high dimensional neural data; I. M. Park, E. Archer, J. Pillow; Superior A. 5. Homeostasis and self-regulation of developing circuits: From single neurons to networks; J. Gjorgjieva, M. Hennig; White Pine. 6. Theories of mammalian perception: Open and closed loop modes of brain-world interactions; E. Ahissar, E. Assa; Magpie B. 7. Noise correlations in the cortex: Quantification, origins, and functional significance; J. Fiser, M. Lengyel, A. Pouget; Superior B. 8. Excitatory and inhibitory synaptic conductances: Functional roles and inference methods; M. Lankarany, T. Toyoizumi; Maybird. Workshop Co-Chairs (name; email; cell): Robert Froemke, NYU; [email protected]; 510-703-5702. Tatyana Sharpee, Salk; [email protected]; 858-610-7424. Maps of Snowbird are at the end of this booklet (page 38).

Predictive Coding in Balanced Neural Networks with Noise, Chaos and Delays

Predictive coding in balanced neural networks with noise, chaos and delays. Jonathan Kadmon (Department of Applied Physics, Stanford University, CA), Jonathan Timcheck (Department of Physics, Stanford University, CA), Surya Ganguli (Department of Applied Physics, Stanford University, CA). [email protected] Abstract Biological neural networks face a formidable task: performing reliable computations in the face of intrinsic stochasticity in individual neurons, imprecisely specified synaptic connectivity, and nonnegligible delays in synaptic transmission. A common approach to combatting such biological heterogeneity involves averaging over large redundant networks of N neurons resulting in coding errors that decrease classically as 1/√N. Recent work demonstrated a novel mechanism whereby recurrent spiking networks could efficiently encode dynamic stimuli, achieving a superclassical scaling in which coding errors decrease as 1/N. This specific mechanism involved two key ideas: predictive coding, and a tight balance, or cancellation between strong feedforward inputs and strong recurrent feedback. However, the theoretical principles governing the efficacy of balanced predictive coding and its robustness to noise, synaptic weight heterogeneity and communication delays remain poorly understood. To discover such principles, we introduce an analytically tractable model of balanced predictive coding, in which the degree of balance and the degree of weight disorder can be dissociated unlike in previous balanced network models, and we develop a mean field theory of coding accuracy. Overall, our work provides and solves a general theoretical framework for dissecting the differential contributions of neural noise, synaptic disorder, chaos, synaptic delays, and balance to the fidelity of predictive neural codes, reveals the fundamental role that balance plays in achieving superclassical scaling, and unifies previously disparate models in theoretical neuroscience.