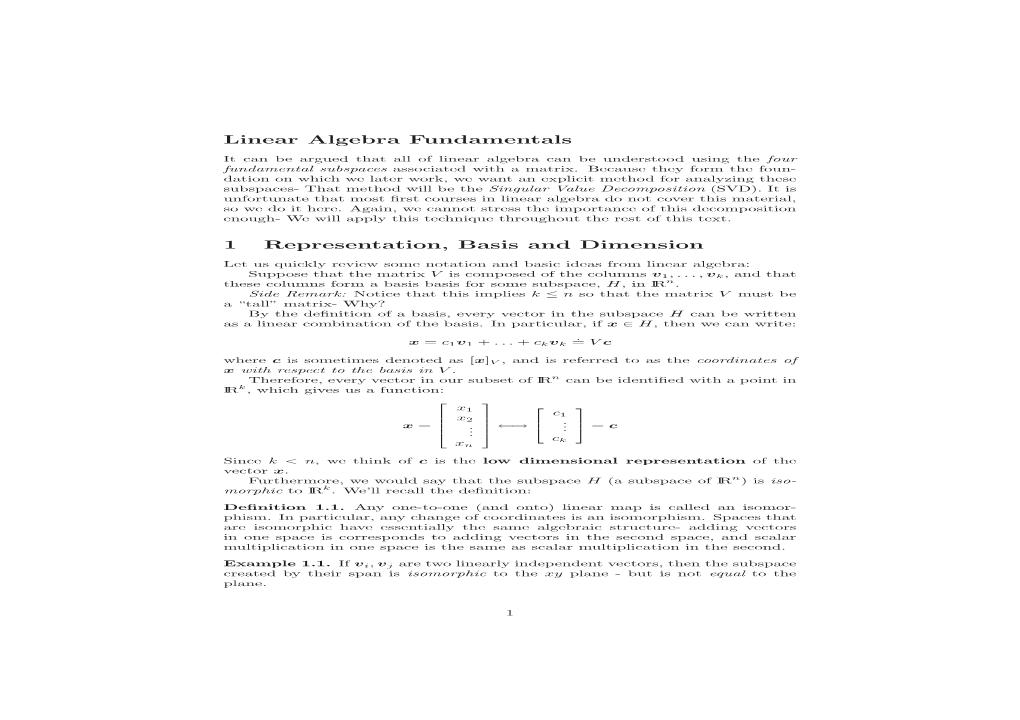

Linear Algebra Fundamentals 1 Representation, Basis and Dimension

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


21. Orthonormal Bases

21. Orthonormal Bases

The canonical/standard basis

$$e_1 = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}, \quad e_2 = \begin{pmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{pmatrix}, \quad \ldots, \quad e_n = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix}$$

has many useful properties.

• Each of the standard basis vectors has unit length: $\|e_i\| = \sqrt{e_i \cdot e_i} = \sqrt{e_i^T e_i} = 1$.

• The standard basis vectors are orthogonal (in other words, at right angles or perpendicular): $e_i \cdot e_j = e_i^T e_j = 0$ when $i \neq j$.

This is summarized by

$$e_i^T e_j = \delta_{ij} = \begin{cases} 1 & i = j \\ 0 & i \neq j \end{cases}$$

where $\delta_{ij}$ is the Kronecker delta. Notice that the Kronecker delta gives the entries of the identity matrix.

Given column vectors $v$ and $w$, we have seen that the dot product $v \cdot w$ is the same as the matrix multiplication $v^T w$. This is the inner product on $\mathbb{R}^n$. We can also form the outer product $v w^T$, which gives a square matrix.

The outer product on the standard basis vectors is interesting. Set

$$\Pi_1 = e_1 e_1^T = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix} \begin{pmatrix} 1 & 0 & \cdots & 0 \end{pmatrix} = \begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 0 \end{pmatrix}$$

$$\vdots$$

$$\Pi_n = e_n e_n^T = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix} \begin{pmatrix} 0 & 0 & \cdots & 1 \end{pmatrix} = \begin{pmatrix} 0 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 1 \end{pmatrix}$$

In short, $\Pi_i$ is the diagonal square matrix with a 1 in the $i$th diagonal position and zeros everywhere else.
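The two facts in this excerpt (inner products of standard basis vectors give the Kronecker delta, and the outer product of a basis vector with itself gives a matrix with a single 1 on the diagonal) can be checked numerically. The following is a minimal sketch in plain Python; the helper names `standard_basis`, `dot` and `outer` are illustrative and do not come from the excerpt.

```python
def standard_basis(n, i):
    """Return e_i in R^n as a list, with i counted from 1."""
    return [1 if k == i - 1 else 0 for k in range(n)]

def dot(v, w):
    """Inner product v^T w."""
    return sum(a * b for a, b in zip(v, w))

def outer(v, w):
    """Outer product v w^T as a list of rows."""
    return [[a * b for b in w] for a in v]

n = 3  # dimension chosen only for illustration
e = [standard_basis(n, i) for i in range(1, n + 1)]

# Table of all inner products e_i^T e_j: this is the Kronecker delta,
# i.e. the entries of the identity matrix.
gram = [[dot(e[i], e[j]) for j in range(n)] for i in range(n)]

# Outer product e_2 e_2^T: a single 1 in the second diagonal position.
pi_2 = outer(e[1], e[1])

print(gram)
print(pi_2)
```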

Partitioned (Or Block) Matrices This Version: 29 Nov 2018

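Partitioned (or Block) Matrices
This version: 29 Nov 2018
Intermediate Econometrics / Forecasting Class Notes
Instructor: Anthony Tay

It is frequently convenient to partition matrices into smaller sub-matrices, e.g.

$$\begin{bmatrix} 2 & 3 & 2 & 1 & 3 \\ 4 & 1 & 1 & 0 & 7 \\ 3 & 1 & 1 & 0 & 0 \\ 1 & 3 & 0 & 1 & 0 \\ 2 & 0 & 0 & 0 & 1 \end{bmatrix} = \left[\begin{array}{cc|ccc} 2 & 3 & 2 & 1 & 3 \\ 4 & 1 & 1 & 0 & 7 \\ \hline 3 & 1 & 1 & 0 & 0 \\ 1 & 3 & 0 & 1 & 0 \\ 2 & 0 & 0 & 0 & 1 \end{array}\right] = \begin{bmatrix} \underset{(2\times 2)}{A} & \underset{(2\times 3)}{B} \\ \underset{(3\times 2)}{C} & \underset{(3\times 3)}{I} \end{bmatrix}$$

The same matrix can be partitioned in several different ways. For instance, we can write the previous matrix as

$$\begin{bmatrix} 2 & 3 & 2 & 1 & 3 \\ 4 & 1 & 1 & 0 & 7 \\ 3 & 1 & 1 & 0 & 0 \\ 1 & 3 & 0 & 1 & 0 \\ 2 & 0 & 0 & 0 & 1 \end{bmatrix} = \left[\begin{array}{c|cccc} 2 & 3 & 2 & 1 & 3 \\ \hline 4 & 1 & 1 & 0 & 7 \\ 3 & 1 & 1 & 0 & 0 \\ 1 & 3 & 0 & 1 & 0 \\ 2 & 0 & 0 & 0 & 1 \end{array}\right] = \begin{bmatrix} \underset{(1\times 1)}{a} & \underset{(1\times 4)}{b'} \\ \underset{(4\times 1)}{c} & \underset{(4\times 4)}{D} \end{bmatrix}$$

One reason partitioning is useful is that we can do matrix addition and multiplication with blocks, as though the blocks are elements, as long as the blocks are conformable for the operations. For instance:

$$\begin{bmatrix} \underset{(2\times 2)}{A} & \underset{(2\times 3)}{B} \\ \underset{(3\times 2)}{C} & \underset{(3\times 3)}{I} \end{bmatrix} + \begin{bmatrix} \underset{(2\times 2)}{D} & \underset{(2\times 3)}{E} \\ \underset{(3\times 2)}{C} & \underset{(3\times 3)}{F} \end{bmatrix} = \begin{bmatrix} \underset{(2\times 2)}{A + D} & \underset{(2\times 3)}{B + E} \\ \underset{(3\times 2)}{2C} & \underset{(3\times 3)}{I + F} \end{bmatrix}$$

$$\underbrace{\begin{bmatrix} \underset{(2\times 2)}{A} & \underset{(2\times 3)}{B} \\ \underset{(3\times 2)}{C} & \underset{(3\times 3)}{I} \end{bmatrix}}_{(5\times 5)} \underbrace{\begin{bmatrix} \underset{(2\times 1)}{d} & \underset{(2\times 3)}{E} \\ \underset{(3\times 1)}{F} & \underset{(3\times 3)}{G} \end{bmatrix}}_{(5\times 4)} = \underbrace{\begin{bmatrix} \underset{(2\times 1)}{Ad + BF} & \underset{(2\times 3)}{AE + BG} \\ \underset{(3\times 1)}{Cd + F} & \underset{(3\times 3)}{CE + G} \end{bmatrix}}_{(5\times 4)}$$

Examples

(1) Let

$$A = \begin{bmatrix} 1 & 2 & 1 \\ 4 & 2 & 3 \\ 3 & 0 & 1 \\ 0 & 1 & 3 \end{bmatrix} = \begin{bmatrix} a_1 & a_2 & a_3 \end{bmatrix} \quad \text{and} \quad c = \begin{bmatrix} c_1 \\ c_2 \\ c_3 \end{bmatrix},$$

then

$$Ac = \begin{bmatrix} a_1 & a_2 & a_3 \end{bmatrix} \begin{bmatrix} c_1 \\ c_2 \\ c_3 \end{bmatrix} = c_1 a_1 + c_2 a_2 + c_3 a_3.$$

The product Ac produces a linear combination of the columns of A.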
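The closing claim of this excerpt, that Ac is a linear combination of the columns of A, can be verified by computing the product two ways. This is a minimal sketch in plain Python; the entries of `A` and `c` are values chosen for illustration, and the function names are hypothetical.

```python
def matvec(A, c):
    """Row-by-row product: entry i is the dot product of row i with c."""
    return [sum(a * x for a, x in zip(row, c)) for row in A]

def column(A, j):
    """Return column j of A as a list."""
    return [row[j] for row in A]

def linear_combination(A, c):
    """Compute c_1 a_1 + c_2 a_2 + ... where a_j is column j of A."""
    result = [0] * len(A)
    for j, cj in enumerate(c):
        result = [r + cj * x for r, x in zip(result, column(A, j))]
    return result

# Illustrative values, assumed for this sketch.
A = [[1, 2, 1],
     [4, 2, 3],
     [3, 0, 1],
     [0, 1, 3]]
c = [2, -1, 3]

print(matvec(A, c))              # [3, 15, 9, 8]
print(linear_combination(A, c))  # [3, 15, 9, 8]
```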

Linear Algebra for Dummies

Linear Algebra for Dummies
Jorge A. Menendez
October 6, 2017

Contents
1 Matrices and Vectors
2 Matrix Multiplication
3 Matrix Inverse, Pseudo-inverse
4 Outer products
5 Inner Products
6 Example: Linear Regression
7 Eigenstuff
8 Example: Covariance Matrices
9 Example: PCA
10 Useful resources

1 Matrices and Vectors

An m × n matrix is simply an array of numbers:

$$A = \begin{bmatrix} a_{11} & a_{12} & \ldots & a_{1n} \\ a_{21} & a_{22} & \ldots & a_{2n} \\ \vdots & & & \vdots \\ a_{m1} & a_{m2} & \ldots & a_{mn} \end{bmatrix}$$

where we define the indexing $A_{ij} = a_{ij}$ to designate the component in the ith row and jth column of A. The transpose of a matrix is obtained by flipping the rows with the columns:

$$A^T = \begin{bmatrix} a_{11} & a_{21} & \ldots & a_{m1} \\ a_{12} & a_{22} & \ldots & a_{m2} \\ \vdots & & & \vdots \\ a_{1n} & a_{2n} & \ldots & a_{mn} \end{bmatrix}$$

which evidently is now an n × m matrix, with components $A^T_{ij} = A_{ji} = a_{ji}$. In other words, the transpose is obtained by simply flipping the row and column indices.

One particularly important matrix is called the identity matrix, which is composed of 1's on the diagonal and 0's everywhere else:

$$I = \begin{bmatrix} 1 & 0 & \ldots & 0 \\ 0 & 1 & \ldots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \ldots & 1 \end{bmatrix}$$

It is called the identity matrix because the product of any matrix with the identity matrix is identical to itself:

$$AI = A$$

In other words, I is the equivalent of the number 1 for matrices. For our purposes, a vector can simply be thought of as a matrix with one column:

$$a = \begin{bmatrix} a_1 \\ a_2 \\ \vdots \end{bmatrix}$$
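To make the transpose and the identity property AI = A concrete, here is a minimal sketch in plain Python. The helpers `identity`, `matmul` and `transpose` and the sample matrix are assumptions made for illustration; the excerpt itself gives no code.

```python
def identity(n):
    """n x n identity matrix: 1 on the diagonal, 0 elsewhere."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def matmul(A, B):
    """Product of an m x k matrix A and a k x n matrix B."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    """Swap row and column indices: entry (i, j) of the result is A[j][i]."""
    return [list(col) for col in zip(*A)]

A = [[1, 2, 3],
     [4, 5, 6]]  # a 2 x 3 example

print(matmul(A, identity(3)))  # [[1, 2, 3], [4, 5, 6]], i.e. A itself
print(transpose(A))            # [[1, 4], [2, 5], [3, 6]], a 3 x 2 matrix
```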

Handout 9 More Matrix Properties; the Transpose

Handout 9 More matrix properties; the transpose

Square matrix properties

These properties only apply to a square matrix, i.e. n × n.

• The leading diagonal is the diagonal line consisting of the entries $a_{11}, a_{22}, a_{33}, \ldots, a_{nn}$.
• A diagonal matrix has zeros everywhere except the leading diagonal.
• The identity matrix I has zeros off the leading diagonal, and 1 for each entry on the diagonal. It is a special case of a diagonal matrix, and AI = IA = A for any n × n matrix A.
• An upper triangular matrix has all its non-zero entries on or above the leading diagonal.
• A lower triangular matrix has all its non-zero entries on or below the leading diagonal.
• A symmetric matrix has the same entries below and above the diagonal: $a_{ij} = a_{ji}$ for any values of i and j between 1 and n.
• An antisymmetric or skew-symmetric matrix has the opposite entries below and above the diagonal: $a_{ij} = -a_{ji}$ for any values of i and j between 1 and n. This automatically means the diagonal entries must all be zero.

Transpose

To transpose a matrix, we reflect it across the line given by the leading diagonal $a_{11}, a_{22}$ etc. In general the result is a different shape to the original matrix:

$$A = \begin{pmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \end{pmatrix}, \qquad A^\top = \begin{pmatrix} a_{11} & a_{21} \\ a_{12} & a_{22} \\ a_{13} & a_{23} \end{pmatrix}, \qquad [A^\top]_{ij} = A_{ji}.$$

• If A is m × n then $A^\top$ is n × m.
• The transpose of a symmetric matrix is itself: $A^\top = A$ (recalling that only square matrices can be symmetric).
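The symmetric and skew-symmetric definitions above translate directly into checks against the transpose. The sketch below is plain Python with hypothetical helper names and sample matrices chosen for illustration.

```python
def transpose(A):
    """Reflect A across its leading diagonal."""
    return [list(col) for col in zip(*A)]

def is_symmetric(A):
    """True when A equals its transpose (only possible for square A)."""
    return A == transpose(A)

def is_skew_symmetric(A):
    """True when a_ij = -a_ji for all i, j (forces a zero diagonal)."""
    n = len(A)
    if any(len(row) != n for row in A):
        return False  # not square
    return all(A[i][j] == -A[j][i] for i in range(n) for j in range(n))

S = [[1, 7],
     [7, 2]]    # symmetric example
K = [[0, 4],
     [-4, 0]]   # skew-symmetric example
R = [[1, 2, 3],
     [4, 5, 6]] # 2 x 3, so neither

print(is_symmetric(S), is_skew_symmetric(K), is_symmetric(R))  # True True False
```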

Equations of Lines and Planes

12.5 Equations of Lines and Planes
Copyright © Cengage Learning. All rights reserved.

Lines

A line in the xy-plane is determined when a point on the line and the direction of the line (its slope or angle of inclination) are given. The equation of the line can then be written using the point-slope form.

Likewise, a line L in three-dimensional space is determined when we know a point $P_0(x_0, y_0, z_0)$ on L and the direction of L. In three dimensions the direction of a line is conveniently described by a vector, so we let v be a vector parallel to L.

Let P(x, y, z) be an arbitrary point on L and let $\mathbf{r}_0$ and $\mathbf{r}$ be the position vectors of $P_0$ and P (that is, they have representations $\overrightarrow{OP_0}$ and $\overrightarrow{OP}$). If a is the vector with representation $\overrightarrow{P_0P}$, as in Figure 1, then the Triangle Law for vector addition gives $\mathbf{r} = \mathbf{r}_0 + \mathbf{a}$.

But, since a and v are parallel vectors, there is a scalar t such that $\mathbf{a} = t\mathbf{v}$. Thus

$$\mathbf{r} = \mathbf{r}_0 + t\mathbf{v}$$

which is a vector equation of L. Each value of the parameter t gives the position vector r of a point on L. In other words, as t varies, the line is traced out by the tip of the vector r.

As Figure 2 indicates, positive values of t correspond to points on L that lie on one side of $P_0$, whereas negative values of t correspond to points that lie on the other side of $P_0$.
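A vector equation of a line, r = r0 + t v, can be evaluated directly to see how the parameter t traces out points on either side of P0. The following plain-Python sketch uses a point and direction picked only for illustration; `point_on_line` is a hypothetical helper name.

```python
def point_on_line(r0, v, t):
    """Return r = r0 + t*v, the position vector of a point on the line."""
    return tuple(p + t * d for p, d in zip(r0, v))

r0 = (1, 2, 3)   # position vector of P0 (illustrative)
v = (2, -1, 4)   # direction vector parallel to the line (illustrative)

print(point_on_line(r0, v, 0))   # (1, 2, 3): t = 0 gives P0 itself
print(point_on_line(r0, v, 1))   # (3, 1, 7): positive t, one side of P0
print(point_on_line(r0, v, -1))  # (-1, 3, -1): negative t, the other side
```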

Week 8-9. Inner Product Spaces. (Revised Version) Section 3.1 Dot Product As an Inner Product

Math 2051 W2008 Margo Kondratieva
Week 8-9. Inner product spaces. (revised version)

Section 3.1 Dot product as an inner product.

Consider a linear (vector) space V. (Let us restrict ourselves to only real spaces, that is, we will not deal with complex numbers and vectors.)

Definition 1. An inner product on V is a function which assigns a real number, denoted by $\langle \vec{u}, \vec{v} \rangle$, to every pair of vectors $\vec{u}, \vec{v} \in V$ such that
(1) $\langle \vec{u}, \vec{v} \rangle = \langle \vec{v}, \vec{u} \rangle$ for all $\vec{u}, \vec{v} \in V$;
(2) $\langle \vec{u} + \vec{v}, \vec{w} \rangle = \langle \vec{u}, \vec{w} \rangle + \langle \vec{v}, \vec{w} \rangle$ for all $\vec{u}, \vec{v}, \vec{w} \in V$;
(3) $\langle k\vec{u}, \vec{v} \rangle = k \langle \vec{u}, \vec{v} \rangle$ for any $k \in \mathbb{R}$ and $\vec{u}, \vec{v} \in V$;
(4) $\langle \vec{v}, \vec{v} \rangle \geq 0$ for all $\vec{v} \in V$, and $\langle \vec{v}, \vec{v} \rangle = 0$ only for $\vec{v} = \vec{0}$.

Definition 2. An inner product space is a vector space equipped with an inner product.

It is straightforward to check that the dot product introduced by $\vec{u} \cdot \vec{v} = \sum_{j=1}^{n} u_j v_j$ is an inner product. You are advised to verify all the properties listed in the definition, as an exercise. The dot product is also called the Euclidean inner product.

Definition 3. A Euclidean vector space is $\mathbb{R}^n$ equipped with the Euclidean inner product $\langle \vec{u}, \vec{v} \rangle = \vec{u} \cdot \vec{v}$.

Definition 4. A square matrix A is called positive definite if $\vec{v}^T A \vec{v} > 0$ for any vector $\vec{v} \neq \vec{0}$.

Problem 1. Show that $\begin{bmatrix} 2 & 0 \\ 0 & 3 \end{bmatrix}$ is positive definite.

Solution: Take $\vec{v} = (x, y)^T$. Then $\vec{v}^T A \vec{v} = 2x^2 + 3y^2 > 0$ for $(x, y) \neq (0, 0)$.
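The quadratic form in Problem 1 can be evaluated numerically as a sanity check. The sketch below, in plain Python, computes v^T A v for a handful of sample vectors chosen for illustration; sampling like this only spot-checks the inequality and is not a substitute for the algebraic argument in the solution.

```python
def quadratic_form(A, v):
    """Compute v^T A v for a square matrix A and a vector v."""
    Av = [sum(a * x for a, x in zip(row, v)) for row in A]
    return sum(x * y for x, y in zip(v, Av))

A = [[2, 0],
     [0, 3]]  # the matrix from Problem 1

# Illustrative nonzero vectors (x, y); each value should equal 2x^2 + 3y^2.
samples = [(1, 0), (0, 1), (1, -1), (-2, 5)]
values = [quadratic_form(A, v) for v in samples]

print(values)  # [2, 3, 5, 83], all strictly positive
```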

Lecture 3.Pdf

ENGR-1100 Introduction to Engineering Analysis
Lecture 3

POSITION VECTORS & FORCE VECTORS

Today's Objectives: Students will be able to:
a) Represent a position vector in Cartesian coordinate form, from given geometry.
b) Represent a force vector directed along a line.

In-Class Activities:
• Applications / Relevance
• Write Position Vectors
• Write a Force Vector along a line

DOT PRODUCT

Today's Objective: Students will be able to use the vector dot product to:
a) determine an angle between two vectors, and,
b) determine the projection of a vector along a specified line.

In-Class Activities:
• Applications / Relevance
• Dot product - Definition
• Angle Determination
• Determining the Projection

APPLICATIONS

This ship's mooring line, connected to the bow, can be represented as a Cartesian vector. What are the forces in the mooring line and how do we find their directions? Why would we want to know these things?

APPLICATIONS (continued)

This awning is held up by three chains. What are the forces in the chains and how do we find their directions? Why would we want to know these things?

POSITION VECTOR

A position vector is defined as a fixed vector that locates a point in space relative to another point. Consider two points, A and B, in 3-D space. Let their coordinates be $(X_A, Y_A, Z_A)$ and $(X_B, Y_B, Z_B)$, respectively.

The position vector directed from A to B, $\mathbf{r}_{AB}$, is defined as

$$\mathbf{r}_{AB} = \{(X_B - X_A)\,\mathbf{i} + (Y_B - Y_A)\,\mathbf{j} + (Z_B - Z_A)\,\mathbf{k}\}\ \text{m}$$

Please note that B is the ending point and A is the starting point.
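The position-vector definition (end point minus start point) is easy to compute, and dividing by the length gives the unit vector along the line, which is what a force directed along that line would be scaled by. This is a minimal plain-Python sketch; the coordinates of A and B and the helper names are assumptions for illustration only.

```python
import math

def position_vector(a, b):
    """Components of r_AB: coordinates of end point B minus start point A."""
    return tuple(bk - ak for ak, bk in zip(a, b))

def magnitude(r):
    """Euclidean length of r."""
    return math.sqrt(sum(c * c for c in r))

def unit_vector(r):
    """Direction of r as a vector of length 1 (r must be nonzero)."""
    length = magnitude(r)
    return tuple(c / length for c in r)

A = (1, 2, 3)  # illustrative coordinates in metres
B = (4, 6, 3)

r_ab = position_vector(A, B)
print(r_ab)               # (3, 4, 0)
print(magnitude(r_ab))    # 5.0
print(unit_vector(r_ab))  # (0.6, 0.8, 0.0)
```

Swapping the arguments gives the vector from B to A, which is the negative of the one above.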

New Foundations for Geometric Algebra1

Text published in the electronic journal Clifford Analysis, Clifford Algebras and their Applications vol. 2, No. 3 (2013) pp. 193-211 New foundations for geometric algebra1 Ramon González Calvet Institut Pere Calders, Campus Universitat Autònoma de Barcelona, 08193 Cerdanyola del Vallès, Spain E-mail : [email protected] Abstract. New foundations for geometric algebra are proposed based upon the existing isomorphisms between geometric and matrix algebras. Each geometric algebra always has a faithful real matrix representation with a periodicity of 8. On the other hand, each matrix algebra is always embedded in a geometric algebra of a convenient dimension. The geometric product is also isomorphic to the matrix product, and many vector transformations such as rotations, axial symmetries and Lorentz transformations can be written in a form isomorphic to a similarity transformation of matrices. We collect the idea Dirac applied to develop the relativistic electron equation when he took a basis of matrices for the geometric algebra instead of a basis of geometric vectors. Of course, this way of understanding the geometric algebra requires new definitions: the geometric vector space is defined as the algebraic subspace that generates the rest of the matrix algebra by addition and multiplication; isometries are simply defined as the similarity transformations of matrices as shown above, and finally the norm of any element of the geometric algebra is defined as the nth root of the determinant of its representative matrix of order n. The main idea of this proposal is an arithmetic point of view consisting of reversing the roles of matrix and geometric algebras in the sense that geometric algebra is a way of accessing, working and understanding the most fundamental conception of matrix algebra as the algebra of transformations of multiple quantities. -

Matrix Determinants

MATRIX DETERMINANTS

Summary
Uses
1‐ Reminder ‐ Definition and components of a matrix
2‐ The matrix determinant
3‐ Calculation of the determinant for a matrix
4‐ Exercise
5‐ Definition of a minor
6‐ Definition of a cofactor
7‐ Cofactor expansion – a method to calculate the determinant
8‐ Calculate the determinant for a matrix
9‐ Alternative method to calculate determinants
10‐ Exercise
11‐ Determinants of square matrices of dimensions 4x4 and greater

Exercise 7.5

IN SUMMARY

Key Idea
• A projection of one vector onto another can be either a scalar or a vector. The difference is the vector projection has a direction.

[Figure: two diagrams of vectors a and b from a common origin O with angle θ between them, captioned "Scalar projection of a on b" and "Vector projection of a on b"]

Need to Know
• The scalar projection of $\vec{a}$ on $\vec{b}$ is $\dfrac{\vec{a} \cdot \vec{b}}{|\vec{b}|} = |\vec{a}| \cos\theta$, where θ is the angle between $\vec{a}$ and $\vec{b}$.
• The vector projection of $\vec{a}$ on $\vec{b}$ is $\left(\dfrac{\vec{a} \cdot \vec{b}}{|\vec{b}|^2}\right)\vec{b} = \left(\dfrac{\vec{a} \cdot \vec{b}}{\vec{b} \cdot \vec{b}}\right)\vec{b}$.
• The direction cosines for $\overrightarrow{OP} = (a, b, c)$ are
$$\cos\alpha = \frac{a}{\sqrt{a^2 + b^2 + c^2}}, \quad \cos\beta = \frac{b}{\sqrt{a^2 + b^2 + c^2}}, \quad \cos\gamma = \frac{c}{\sqrt{a^2 + b^2 + c^2}},$$
where α, β, and γ are the direction angles between the position vector $\overrightarrow{OP}$ and the positive x-axis, y-axis and z-axis, respectively.

Exercise 7.5

PART A

1. a. The vector $\vec{a} = (2, 3)$ is projected onto the x-axis. What is the scalar projection? What is the vector projection?
   b. What are the scalar and vector projections when $\vec{a}$ is projected onto the y-axis?
2. Explain why it is not possible to obtain either a scalar projection or a vector projection when a nonzero vector $\vec{x}$ is projected on $\vec{0}$.
3. Consider two nonzero vectors, $\vec{a}$ and $\vec{b}$, that are perpendicular to each other. Explain why the scalar and vector projections of $\vec{a}$ on $\vec{b}$ must be 0 and $\vec{0}$, respectively.
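The two projection formulas in the summary can be written as short functions. The sketch below is plain Python with illustrative vectors; the function names are hypothetical. It raises an error for a zero target vector, since both formulas would then divide by zero.

```python
import math

def dot(a, b):
    """Dot product of two vectors of equal length."""
    return sum(x * y for x, y in zip(a, b))

def scalar_projection(a, b):
    """(a . b) / |b|, the signed length of a along b."""
    norm_b = math.sqrt(dot(b, b))
    if norm_b == 0:
        raise ValueError("projection onto the zero vector is undefined")
    return dot(a, b) / norm_b

def vector_projection(a, b):
    """((a . b) / (b . b)) b, a vector parallel to b."""
    bb = dot(b, b)
    if bb == 0:
        raise ValueError("projection onto the zero vector is undefined")
    scale = dot(a, b) / bb
    return tuple(scale * x for x in b)

a = (3, 4)  # illustrative vector
b = (2, 0)  # points along the positive x-axis

print(scalar_projection(a, b))  # 3.0
print(vector_projection(a, b))  # (3.0, 0.0)
```

For perpendicular vectors the dot product is zero, so both functions return zero results.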

MATH 304 Linear Algebra Lecture 24: Scalar Product. Vectors: Geometric Approach

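MATH 304 Linear Algebra
Lecture 24: Scalar product.

Vectors: geometric approach

[Figure: directed segments from A to B and from A′ to B′]

• A vector is represented by a directed segment.
• Directed segment is drawn as an arrow.
• Different arrows represent the same vector if they are of the same length and direction.

$\overrightarrow{AB}$ denotes the vector represented by the arrow with tip at B and tail at A.
$\overrightarrow{AA}$ is called the zero vector and denoted $\mathbf{0}$.
If $\mathbf{v} = \overrightarrow{AB}$ then $\overrightarrow{BA}$ is called the negative vector of $\mathbf{v}$ and denoted $-\mathbf{v}$.

Vector addition

Given vectors a and b, their sum a + b is defined by the rule $\overrightarrow{AB} + \overrightarrow{BC} = \overrightarrow{AC}$. That is, choose points A, B, C so that $\overrightarrow{AB} = \mathbf{a}$ and $\overrightarrow{BC} = \mathbf{b}$. Then $\mathbf{a} + \mathbf{b} = \overrightarrow{AC}$.

The difference of the two vectors is defined as $\mathbf{a} - \mathbf{b} = \mathbf{a} + (-\mathbf{b})$.

Properties of vector addition:
$\mathbf{a} + \mathbf{b} = \mathbf{b} + \mathbf{a}$ (commutative law)
$(\mathbf{a} + \mathbf{b}) + \mathbf{c} = \mathbf{a} + (\mathbf{b} + \mathbf{c})$ (associative law)
$\mathbf{a} + \mathbf{0} = \mathbf{0} + \mathbf{a} = \mathbf{a}$
$\mathbf{a} + (-\mathbf{a}) = (-\mathbf{a}) + \mathbf{a} = \mathbf{0}$

Let $\overrightarrow{AB} = \mathbf{a}$. Then
$\mathbf{a} + \mathbf{0} = \overrightarrow{AB} + \overrightarrow{BB} = \overrightarrow{AB} = \mathbf{a}$,
$\mathbf{a} + (-\mathbf{a}) = \overrightarrow{AB} + \overrightarrow{BA} = \overrightarrow{AA} = \mathbf{0}$.

Let $\overrightarrow{AB} = \mathbf{a}$, $\overrightarrow{BC} = \mathbf{b}$, and $\overrightarrow{CD} = \mathbf{c}$. Then
$(\mathbf{a} + \mathbf{b}) + \mathbf{c} = (\overrightarrow{AB} + \overrightarrow{BC}) + \overrightarrow{CD} = \overrightarrow{AC} + \overrightarrow{CD} = \overrightarrow{AD}$,
$\mathbf{a} + (\mathbf{b} + \mathbf{c}) = \overrightarrow{AB} + (\overrightarrow{BC} + \overrightarrow{CD}) = \overrightarrow{AB} + \overrightarrow{BD} = \overrightarrow{AD}$.

Parallelogram rule

Let $\overrightarrow{AB} = \mathbf{a}$, $\overrightarrow{BC} = \mathbf{b}$, $\overrightarrow{AB'} = \mathbf{b}$, and $\overrightarrow{B'C'} = \mathbf{a}$. Then $\mathbf{a} + \mathbf{b} = \overrightarrow{AC}$, $\mathbf{b} + \mathbf{a} = \overrightarrow{AC'}$.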

Basics of Linear Algebra

Basics of Linear Algebra
Jos and Sophia

Vectors
● Linear Algebra Definition: A list of numbers with a magnitude and a direction.
  ○ Magnitude: a = [4,3], |a| = sqrt(4^2 + 3^2) = 5
  ○ Direction: angle vector points
● Computer Science Definition: A list of numbers.
  ○ Example: Heights = [60, 68, 72, 67]

Dot Product of Vectors
Formula: a · b = |a| × |b| × cos(θ)
● Definition: Multiplication of two vectors which results in a scalar value
● In the diagram:
  ○ |a| is the magnitude (length) of vector a
  ○ |b| is the magnitude of vector b
  ○ θ is the angle between a and b

Matrix
● Definition:
  a) Matrix is an arrangement of numbers into rows and columns.
  b) A matrix is an m × n array of scalars from a given field F. The individual values in the matrix are called entries.
● Matrix dimensions: the number of rows and columns of the matrix, in that order.

Multiplication of Matrices
● The multiplication of two matrices
  (1, 2, 3) • (7, 9, 11) = 1×7 + 2×9 + 3×11 = 58
● Result matrix dimensions
  ○ Notation: (Row, Column)
  ○ Columns of the 1st matrix must equal the rows of the 2nd matrix
  ○ Result matrix is equal to the number of rows in 1st matrix and the number of columns in the 2nd matrix
  ○ Ex. 3 x 4 · 5 x 3
    ■ Dot product does not work
  ○ Ex. 5 x 3 · 3 x 4
    ■ Dot product does work
    ■ Result: 5 x 4

Dot Product Application
● Application: Ray tracing program
  ○ Quickly create an image with lower quality
  ○ "Refinement rendering pass" occurs
    ■ Removes the jagged edges
  ○ Dot product used to calculate
    ■ Intersection between a ray and a sphere
    ■ Measure the length to the intersection points
● Application: Forward Propagation
  ○ Input matrix * weighted matrix = prediction matrix

http://immersivemath.com/ila/ch03_dotproduct/ch03.html#fig_dp_ray_tracer

Projections
One important use of dot products is in projections.
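The slide's dot-product example and its rule for matrix dimensions can both be expressed in a few lines. This is a minimal plain-Python sketch; `product_shape` is a hypothetical helper that returns None when the shapes are not conformable.

```python
def dot(a, b):
    """Sum of element-wise products; both vectors need the same length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))

def product_shape(first, second):
    """Shape (rows, columns) of first @ second, or None if not conformable.

    The columns of the 1st matrix must equal the rows of the 2nd matrix.
    """
    rows1, cols1 = first
    rows2, cols2 = second
    if cols1 != rows2:
        return None
    return (rows1, cols2)

print(dot((1, 2, 3), (7, 9, 11)))     # 58, as in the slide
print(product_shape((3, 4), (5, 3)))  # None: 4 columns vs 5 rows
print(product_shape((5, 3), (3, 4)))  # (5, 4)
```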