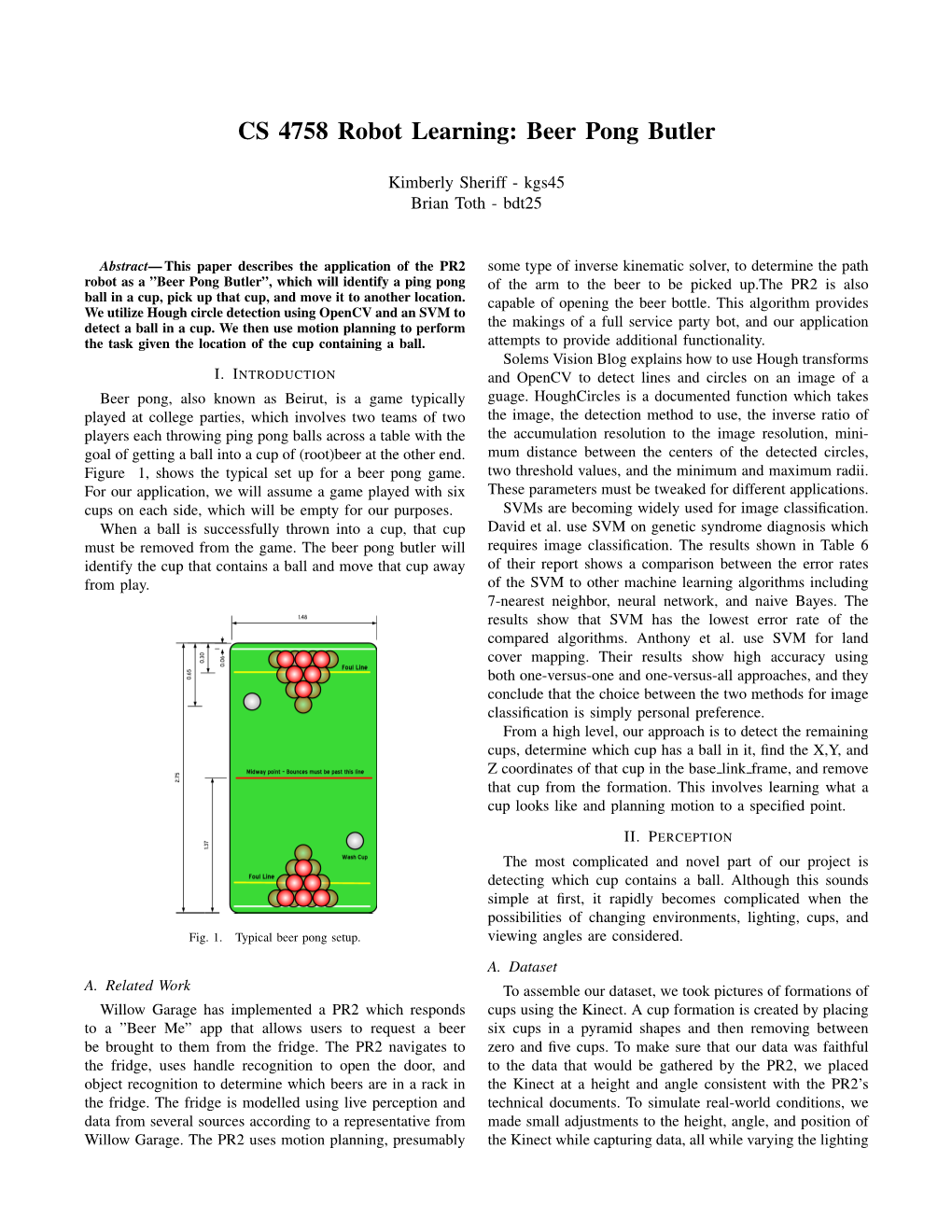

Beer Pong Butler

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

University Microiilms, a XERQ\Company, Ann Arbor, Michigan

71-18,075 RINEHART, John McLain, 1937- IVES' COMPOSITIONAL IDIOMS: AN INVESTIGATION OF SELECTED SHORT COMPOSITIONS AS MICROCOSMS' OF HIS MUSICAL LANGUAGE. The Ohio State University, Ph.D., 1970 Music University Microiilms, A XERQ\Company, Ann Arbor, Michigan © Copyright by John McLain Rinehart 1971 tutc nTccrSTATmil HAS fiEEM MICROFILMED EXACTLY AS RECEIVED IVES' COMPOSITIONAL IDIOMS: AM IMVESTIOAT10M OF SELECTED SHORT COMPOSITIONS AS MICROCOSMS OF HIS MUSICAL LANGUAGE DISSERTATION Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy 3n the Graduate School of The Ohio State University £ JohnfRinehart, A.B., M«M. # # * -k * * # The Ohio State University 1970 Approved by .s* ' ( y ^MrrXfOor School of Music ACm.WTji.D0F,:4ENTS Grateful acknov/ledgement is made to the library of the Yale School of Music for permission to make use of manuscript materials from the Ives Collection, I further vrish to express gratitude to Professor IJoman Phelps, whose wise counsel and keen awareness of music theory have guided me in thi3 project. Finally, I wish to acknowledge my wife, Jennifer, without whose patience and expertise this project would never have come to fruition. it VITA March 17, 1937 • ••••• Dorn - Pittsburgh, Pennsylvania 1959 • • • • • .......... A#B#, Kent State University, Kent, Ohio 1960-1963 . * ........... Instructor, Cleveland Institute of Music, Cleveland, Ohio 1 9 6 1 ................ • • • M.M., Cleveland Institute of ITu3ic, Cleveland, Ohio 1963-1970 .......... • • • Associate Professor of Music, Heidelberg College, Tiffin, Ohio PUBLICATIONS Credo, for unaccompanied chorus# New York: Plymouth Music Company, 1969. FIELDS OF STUDY Major Field: Theory and Composition Studies in Theory# Professor Norman Phelps Studies in Musicology# Professors Richard Hoppin and Lee Rigsby ill TAPLE OF CC NTEKTS A C KI JO WLE DGEME MT S ............................................... -

2018 Fall Olympics Events and Rules: Game 1: Flip Cup- 5 Games

2018 Fall Olympics Events and Rules: Game 1: Flip Cup- 5 games • Teams of 4 line up across the table from each other. • Each player has approximately half a cup of beer. The amount should be the same for each player. • The goal is for each player to chug their drink and then flip their empty solo cup using the edge of the table so that it lands upside down. • Once a cup is successfully flipped, the next player will than need to drink their beer and then flip the cup. • This is a relay race so the first team to have all their beer finished and their cups flipped wins. • Winning of team receives 1 point for each of the 5 games. Winning team remains at their table, losing team rotates to next table over. Game 2: Dizzy Bat – 2 Games • 4 players on each team • There will be 2 players on each side of a 20 yard “field” • Side A: Person 1 will do 5 spins with bat, then chug their beer, then run to side B with bat in hand • Person 1 from side A will hand bat to person 2 on Side B who then does the same…. 5 spins w/ bat, chug, then run to Side A to person 3…. Repeat until person 4 crosses line at sign A. • First team to get person 4 across side A wins. • Teams will be lined up 10 yds apart from each other (no tripping, adjusting the cones or interfering with the competing team or you will be given a 10 second penalty each time). -

Drinking Games at ISU Ethos Magazine

Volume 56 Issue 1 Article 7 November 2004 Drinking Games at ISU Ethos Magazine Follow this and additional works at: http://lib.dr.iastate.edu/ethos Recommended Citation Ethos Magazine (2004) "Drinking Games at ISU," Ethos: Vol. 2005 , Article 7. Available at: http://lib.dr.iastate.edu/ethos/vol2005/iss1/7 This Article is brought to you for free and open access by the Student Publications at Iowa State University Digital Repository. It has been accepted for inclusion in Ethos by an authorized editor of Iowa State University Digital Repository. For more information, please contact [email protected]. Flip Cup: Two teams. One beer per person. One person from each team starts by chugging his beer, setting the empty cup down on the edge of the table, and trying to flip it over so the open end lands down. Then the next person goes. Repeat until one team finishes. Losing team drinks a pitcher. This game sounds easy enough, but wait until you start seeing three cups. Beer Pong: It's like ping pong. With beer. Only different. more like a cross between basketball and bowling. Look for it in the 2008 Olympics. Quarters: Our favorite version: Sit in a circle and start two empty mugs in front o.f people sitting across from each other. You have to bounce a quarter in the mug when it's your turn, and then pass it to the left. Make it on your first try and you can pass it to your left or right. If you end up with both mugs in front of you, you have to chug a beer. -

53 Conversational La

Other Publications by the Author The New College Latin & English Dictionary, Second Edition, 1994. Amsco School Publications, Inc., 315 Hudson Street, New York, NY 10013-1085. ISBN 0-87720-561-2. Second Edition, 1995. Simultaneously published by Bantam Books, Inc., 1540 Broadway, New York, NY 10036. ISBN 0-553-57301-2. Latin is Fun: Book I: Lively Lessons for Beginners. Amsco, 1989. ISBN 0-87720-550-7. Teacher’s Manual and Key. Amsco, 1989. ISBN 0-87720-554-X. Latin is Fun: Book II: Lively Lessons for Beginners. Amsco, 1995. ISBN 0-87720-565-5. Teacher’s Manual with Answers. Amsco, 1995. ISBN 0-87720-567-1. The New College German & English Dictionary. Amsco, 1981. ISBN 0-87720-584-1. Bantam, 1981. ISBN 0-533-14155-4. German Fundamentals: Basic Grammar and Vocabulary. 1992. Barron’s Educational Series, Inc., Hauppauge, NY 11788. Lingua Latina: Book I Latin First Year. Amsco, 1999, ISBN 1-56765-426-6 (Hardbound); ISBN 1-56765-425-8 (Softbound). Teacher’s Manual and Key, 1999, ISBN 1-56765-428-2. Lingua Latina: Book II: Latin Second Year. Amsco, 2001, ISBN 1-56765-429-0 (Softbound); Teacher’s Manual and Key, Amsco, 2001, ISBN 1-56765-431-2 Contents Acknowledgements ...........................................................8 How to Use This Book......................................................9 Pronunciation...................................................................10 Abbreviations ..................................................................14 Chapter I: Greetings ........................................................15 Boy meets girl. Mario runs into his friend Julia. Tullia introduces her friend to Luke. Chapter II: Family ...........................................................20 The censor asks the father some questions. A son learns about his family tree from his father. Two friends discuss their family circumstances. -

Beer Pong Directions

Beer Pong Beer Pong is a drinking game, usually played in pairs, with teams standing at opposing ends of a table. Cups are formed in a pyramid at either end of the table. The player attempts to toss or bounce a pong ball into the cups. The cups are filled 1/3 with liquid. The player makes a shot into a cup of the opposing team, a player from the opposing team drinks the contents of the cup and removes it from the table. The game continues in this way, with both players from one team taking a shot, followed by both players from the other team. The team that is able to clear all of the opposing team's cups first is the winner, with the losing team splitting the contents of the winning team's remaining cups. Be safe, Be Healthy, Be Clean. Implement the " Health Cup " Rule. Each player identifies and marks their "Health Cup" usually by writing their name on the cup. This cup is to be used only by that individual as a container for which the liquid from the playing cups is poured into. The "Health Cup" is not part of the 20 playing cups. Rules & Regulations 1.) Each team consist of 1 or 2 players. 2.) One Official Party Pong Beer Pong Table. 3.) Two Official Party Pong balls per table. 4.) Cups will be set up in a 4, 3, 2, 1 formation. 5.) Distribute 24 oz. of liquid evenly among all 10 cups per side. 6.) Rock, Paper, Scissors will decide the team that shoots first ( Best of 3 ). -

81 Drinking Games – FREE E-Book

81 Drinking Games – FREE E-Book We thank you for your purchase of our Beer Bong. As a token of our appreciation, we would like to provide you this free E-Book loaded with 81 drinking game ideas. This E-Book was created by some of our best researchers who traveled the web from east to west and from north to south to find and compile some of the best drinking games that will keep your party alive and going. Enjoy!! Party Like Sophia 1 of 75 Game #1: Beer Pong What you need: Ping pong table Pack of ping-pong balls Pack of 16oz plastic cups How to play: You can play beer pong in teams of one or two players. You will need to set up two formations of 10 cups on either end of the ping-pong table. The cups should form a triangle, similar to how balls are racked at the beginning of a game of pool. Fill each cup with roughly three to four ounces of beer. Each team stands on either side of the table. The goal is to toss a ping- pong ball into one of the cups on the opposing team’s side. You can toss the ball directly into a cup, and the opposing team is not permitted to try to swat the ball away. Or, for an easier shot, you can bounce the ball when you toss it, but in this scenario, the opposing team is permitted to try to swat the ball away. When a ball lands in a cup, a member of the opposing team has to drink it. -

Professors & Drinking Games

Professors & Drinking Games Michael Steel, Kyle Carlos Prof. Cynthia Monroe (Writing Department) ● Game: Quarters ● Context: Dartmouth undergraduate 1984-86 Sigma Phi Epsilon ● Rules: Bounce a quarter off the table into a cup, if it misses, then one takes a drink of beer (water optional) ● Intent/interpretation: ○ “It’s like the undrinking game… “ ○ Or “a way for people who wanted to get intoxicated quickly, to get intoxicated quickly with a point” Jane Doe (Art Department) ● Game: Beer Pong ● Context: Dartmouth Undergrad 1996-2000 ● Rules: Played on 4x8 plywood, with cups lined up as a V, paddle handles optional ● Intent/interpretation: ○ Continuation of tradition ○ “It was harmless fun and a way not to talk in a loud basement and socialize” ○ “Looking back it creates problems such as sexual assault and alcohol abuse which is very concerning” Prof. Steven Swain (Music Department) ● Ritual: Booting & Rallying ● Context: Told to by undergraduate students 1999-2005 ● Rules: Drink quickly, forcefully vomit, keep drinking... ● Intent/interpretation: ○ “Why climb Mt. Everest? Because it’s there” ○ Encourage other people to drink ○ Competition ○ Social lubricant John Doe (Writing Department) ● Ritual: Shotgunning ● Context: Undergraduate years ● Rules: Use key to pierce beer can, open it so it shoots into the mouth, people around them respond with “Woop’s and Hooray” (variation; drinking from tap of a keg) ● Intent/interpretation: ○ “People have no respect for beer” because ○ “They want to get drunk quick so they buy cheap stuff” Prof. Nicolay Ostrau (German Department) ● Games: Boßeln, Fingerhakeln, Komasaufen ● Context: Northern Germany ● Rules: ○ Boßeln: Similar to mini golf, but played with a bowling ball, rolling it along the ground outside, towing a wagon full of liquor & taking shots after each throw. -

Tailgating Policies

Tailgating Policies Lawrence Technological University permits tailgating in an authorized location prior to home football games. Tailgating is defined as parking a motorized vehicle and consuming food and beverages, which may include alcoholic beverages, outside the sports event area. Parking Lots A and G are designated lots for tailgating. The safety of our students and visitors is of the utmost importance to the University; therefore the following guidelines are established for tailgating. 1. Tailgating must occur only in Parking Lots A and G, which will be clearly marked by signs. 2. Tailgating cannot start before four hours prior to the beginning of any home football game and must end no later than the scheduled kick-off. 3. Alcoholic beverages are limited to single-serving, non-glass containers. No common sources (e.g., kegs, beer balls or other large quantity containers) are permitted. 4. Serving alcohol to or consumption of alcohol by minors (those under 21 years of age) is a violation of state law and Lawrence Technological University’s Code of Conduct. Michigan law states that underage drinking is illegal everywhere—on public or private property, indoors or out—including tailgating. 5. Individuals consuming alcohol may be asked to provide legal identification and proof of age to a campus safety officer, student affair staff or local law enforcement officer. 6. Carrying an open container of alcohol on public streets or sidewalks is illegal, regardless of age. When guests leave the designated tailgate area, they must leave alcoholic beverages in the designated tailgate area. 7. Playing of games that involve consumption of alcohol or use of alcohol-related paraphernalia are prohibited, including but not limited to beer pong, flip cup, shot gunning, etc. -

Drinking Games the Complete Guide Contents

Drinking Games The Complete Guide Contents 1 Overview 1 1.1 Drinking game ............................................. 1 1.1.1 History ............................................ 1 1.1.2 Types ............................................. 2 1.1.3 See also ............................................ 3 1.1.4 References .......................................... 3 1.1.5 Bibliography ......................................... 4 1.1.6 External links ......................................... 4 2 Word games 5 2.1 21 ................................................... 5 2.1.1 Rules ............................................. 5 2.1.2 Additional rules ........................................ 5 2.1.3 Example ............................................ 6 2.1.4 Variations ........................................... 6 2.1.5 See also ............................................ 6 2.2 Fuzzy Duck .............................................. 6 2.2.1 References .......................................... 6 2.3 Ibble Dibble .............................................. 7 2.3.1 Ibble Dibble .......................................... 7 2.3.2 Commercialisation ...................................... 7 2.3.3 References .......................................... 7 2.4 Never have I ever ........................................... 7 2.4.1 Rules ............................................. 7 2.4.2 In popular culture ....................................... 8 2.4.3 See also ............................................ 8 2.4.4 References ......................................... -

Barnett (Doherty).Pdf

Canterbury Christ Church University’s repository of research outputs http://create.canterbury.ac.uk Copyright © and Moral Rights for this thesis are retained by the author and/or other copyright owners. A copy can be downloaded for personal non-commercial research or study, without prior permission or charge. This thesis cannot be reproduced or quoted extensively from without first obtaining permission in writing from the copyright holder/s. The content must not be changed in any way or sold commercially in any format or medium without the formal permission of the copyright holders. When referring to this work, full bibliographic details including the author, title, awarding institution and date of the thesis must be given e.g. Barnett, L. (2017) Pleasure, agency, space and place: an ethnography of youth drinking cultures in a South West London community. Ph.D. thesis, Canterbury Christ Church University. Contact: [email protected] PLEASURE, AGENCY, SPACE AND PLACE: AN ETHNOGRAPHY OF YOUTH DRINKING CULTURES IN A SOUTH WEST LONDON COMMUNITY By Laura Kelly Barnett Canterbury Christ Church University Thesis submitted for the Degree of Doctor of Philosophy 2017 ABSTRACT Media, government and public discourse in the UK associate young drinkers as mindless, hedonistic consumers of alcohol, resulting in young people epitomising ‘Binge Britain’. This preoccupation with ‘binge’ drinking amplifies moral panics surrounding youth alcohol consumption whereby consideration of the social and cultural nuances of pleasure that give meaning to young people’s excessive drinking practices and values has been given little priority. This sociological study explores how young drinkers regulate their drinking practices through levels of agency which is informed by values linked to the pursuit of pleasurable intoxication alongside friendship groups in a variety of drinking settings. -

1. Take Beer Pong Table out of Box and Place Upright with Handles Facing Up

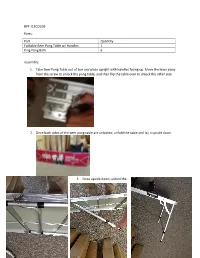

BPT-ICECOLDB Parts: Part Quantity Foldable Beer Pong Table w/ Handles 1 Ping Pong Balls 6 Assembly: 1. Take Beer Pong Table out of box and place upright with handles facing up. Move the lever away from the screw to unlock the pong table, and then flip the table over to unlock the other side 2. Once both sides of the beer pong table are unlocked, unfold the table and lay it upside down. 3. Once upside down, unfold the legs out from the table until they are 90⁰ straight into place. 4. Once all the legs are unfolded and in position, flip table over and upright, and begin playing beer pong. Ping Pong balls are located in the ping pong holder on the bottom of the table. How to play: 2-4 players 1. Fill up 12 cups with water or beer up to the lower fill line of the cup, or however much water/beer you prefer in the cup. 2. Place 6 cups on each side of the table and set them up in a triangle or bowling pen form. 3. Each team or player shoots 2 ping pong balls each from behind their side of the table. If a ball lands in the opposing teams cup, that team or player must drink the beer in the cup that was made, or take a sip of beer if playing with water cups, and take that cup off the playing surface. If the ball was bounced in, the opposing team must take and drink 2 cups off the table. -

Anarchism and the Evolution of Rave / P. 12

Honi Soit Week 9, Semester 1, 2020 / First printed 1929 Anarchism and the evolution of rave / p. 12 Reflections on student The beauty and history of On the urge to key a culture / p. 6 the piano / p. 9 luxury car / p. 17 LETTERS Acknowledgement of Country Letters Honi Soit is published on the stolen land of the Gadigal People of the Eora Nation. For over 230 years, First Nations people in this country have suffered from “Uh oh, WoCo” the destructive effects of invasion. The editors of this paper recognise that, as a team of settlers occupying the lands of the Bidjigal, Darug, Gadigal, Wangal and Wallumedegal people, we are beneficiaries of these reverberations that followed European settlement. As we strive throughout the year to offer a platform to the voices Dear Honi, religious paradigm then there is a flaw really matter what lens you’re looking at to the equivocation of Judaism and mainstream media ignores, we cannot meet this goal without providing a space for First Nations people to share their experiences and perspectives. A student paper which I’m a fan of the WoCo, but some within that logic because dwelling on Christianity through. It still stands that Israel, that among other things allows does not acknowledge historical and ongoing colonisation and the white supremacy embedded within Australian society can never adequately represent the students aspects of WoCo Honi have really biblical semantics is unlikely to lead it already dominates and informs public Israel to be legitimised as a state. But of the institution in which it operates.