Are Chess Discussions Racist? An Adversarial Hate Speech Data Set (Student Abstract)

Recommended publications

Lessons Learned: A Security Analysis of the Internet Chess Club

John Black, Martin Cochran, Ryan Gardner. University of Colorado, Department of Computer Science, UCB 430, Boulder, CO 80309 USA. [email protected], [email protected], [email protected]

Abstract

The Internet Chess Club (ICC) is a popular online chess server with more than 30,000 members worldwide including various celebrities and the best chess players in the world. Although the ICC website assures its users that the security protocol used between client and server provides sufficient security for sensitive information to be transmitted (such as credit card numbers), we show this is not true. In particular we show how a passive adversary can easily read all communications with a trivial amount of computation, and how an active adversary can gain virtually unlimited powers over an ICC user.

... between players. Each move a player made was transmitted (in the clear) to an ICS server, which would then relay that move to the opponent. The server enforced the rules of chess, recorded the position of the game after each move, adjusted the ratings of the players according to the outcome of the game, and so forth.

Serious chess players use a pair of clocks to enforce the requirement that players move in a reasonable amount of time: suppose Alice is playing Bob; at the beginning of a game, each player is allocated some number of minutes. When Alice is thinking, her time ticks down; after she moves, Bob begins thinking as his time ticks down. If ...

E-Magazine July 2020

#EOCC2020: European Online Corporate Championships announced for 3 & 4 October. #EOYCC2020: European Online Youth Chess Championship announced for 18-20 September. International Chess Day, recognised by the United Nations and UNESCO, celebrated all over the world.

#InternationalChessDay

On 20th July, the world celebrates #InternationalChessDay. The International Chess Federation (FIDE) was founded 96 years ago, on 20th July 1924. The idea to celebrate this day as International Chess Day was proposed by UNESCO, and it has been celebrated as such since 1966. On December 12, 2019, the UN General Assembly unanimously approved a resolution recognising World Chess Day. On the occasion of International Chess Day, a tele-meeting between the United Nations and FIDE was held on 20th July 2020. Top chess personalities and representatives of the U.N. gathered to exchange views and insights to strengthen the productive collaboration ...

ECU decided to make a special promotional #Chess video. Mr. Zurab Azmaiparashvili visited the most popular Georgian musical competition show, “Big Stage”. The ECU President taught the judges of the show, famous artists, how to play chess and then played a game with them! Federations, clubs, players and chess lovers took part in the celebration all over the world.

The European Chess Union has its seat in Switzerland. Address: Rainweidstrasse 2, CH-6333, Hunenberg See, Switzerland. The European Chess Union is an independent association founded in 1985 in Graz, Austria. It has 54 National Federation Members. Every year ECU organizes more than 20 prestigious events and championships. www.europechess.org, [email protected]

The Queen's Gambit

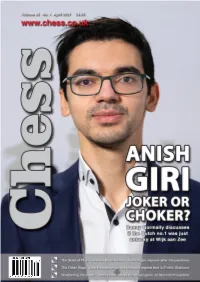

Chess, April 2021

Founding Editor: B.H. Wood, OBE, M.Sc †
Executive Editor: Malcolm Pein
Editors: Richard Palliser, Matt Read
Associate Editor: John Saunders
Subscriptions Manager: Paul Harrington

Chess Magazine (ISSN 0964-6221) is published by Chess & Bridge Ltd, 44 Baker St, London, W1U 7RT. Tel: 020 7486 7015. Email: [email protected]. Website: www.chess.co.uk. Twitter: @CHESS_Magazine, @TelegraphChess (Malcolm Pein), @chessandbridge.

Contents

Editorial: Malcolm Pein on the latest developments in the game
60 Seconds with... Geert van der Velde: We catch up with the Play Magnus Group’s VP of Content
A Tale of Two Players: Wesley So shone while Carlsen struggled at the Opera Euro Rapid
Anish Giri: Choker or Joker? Danny Gormally discusses if the Dutch no.1 was just unlucky at Wijk
How Good is Your Chess? Daniel King also takes a look at the play of Anish Giri
The Other Saga: John Henderson very much ...

Glossary of Chess

See also: Glossary of chess problems, Index of chess articles and Outline of chess

This page explains commonly used terms in chess in alphabetical order. Some of these have their own pages, like fork and pin. For a list of unorthodox chess pieces, see Fairy chess piece; for a list of terms specific to chess problems, see Glossary of chess problems; for a list of chess-related games, see Chess variants.

Contents: A • B • C • D • E • F • G • H • I • J • K • L • M • N • O • P • Q • R • S • T • U • V • W • X • Z • References

A

absolute pin: A pin against the king is called absolute since the pinned piece cannot legally move (as moving it would expose the king to check). Cf. relative pin.

active: 1. Describes a piece that controls a number of squares, or a piece that has a number of squares available for its next move. 2. An “active defense” is a defense employing threat(s) or counterattack(s). Antonym: passive.

adjournment: Suspension of a chess game with the intention to finish it later. It was once very common in high-level competition, often occurring soon after the first time control, but the practice has been abandoned due to the advent of computer analysis. See sealed move.

Image caption: Envelope used for the adjournment of a match game Efim Geller vs. Bent Larsen, Copenhagen 1966

adjudication: Decision by a strong chess player (the adjudicator) on the outcome of an unfinished game. This practice is now uncommon in over-the-board events, but does happen in online chess when one player refuses to continue after an adjournment.

... are often pawn moves; since pawns cannot move backwards to return to squares they have left, their ...

White Knight Review, September-October 2010

White Knight Review, Chess E-Magazine. Volume 1 • Issue 1, September-October 2010.

In this issue: Nobel Prize winners and Chess. The Fischer King: The illusive life of Bobby Fischer, Pt. 1. Sight Unseen: The Art of Blindfold Chess. CHESS: there's an app for that! Taking It to the Streets: Street Players and Hustlers.

Executive Editor/Writer: Bill Wall, [email protected]

Table of Contents

EDITORIAL: “My Move”
FEATURE: Taking it to the Streets
BOOK REVIEW: Diary of a Chess Queen
HISTORY: The History of Blindfold Chess
FEATURE: Chessman: Picking up the pieces

My Move (editorial)

Welcome to our inaugural issue of White Knight Review. This chess magazine was the natural outcome of the vision of 3 brothers. The unique collaboration and the diverse talent of the “Wall boys” set in motion the idea of putting together this online publication. The oldest of the three is my brother Bill. He is by far the chess expert of the group, being the author of over 30 chess books and several websites on the internet, and a highly respected player in the chess world. His books and articles have spanned the globe and have become a wellspring of knowledge for both beginners and masters alike. Our younger brother is the entrepreneur whose initial idea of a marketable website and promoting resource material for chess players became the beginning focus of this endeavor. His sales and promotion experience is an integral part of the project.

The Queen's Gambit

Master Class with Aagaard | Shankland on the Online Olympiad | Spiegel’s Three Questions

NOVEMBER 2020 | USCHESS.ORG

The Queen’s Gambit: A new Netflix limited series highlights the Royal Game

A seasonal gift from US CHESS: A free copy of Chess Life!

NOVEMBER 17, 2020

Dear Chess Friends:

When one of our members has a good idea, we take it seriously. Tweeting on October 31 (Halloween Day!), National Master Han Schut suggested we provide a “holiday present” to chess players around the world. What a swell idea.

Chess Life is the official magazine of US Chess. Each month we here at Chess Life work to publish the best of American chess in all of its facets. In recent issues we have brought you articles by GM Jesse Kraai on chess in the time of coronavirus; GM Jon Tisdall’s look at online chess; IM Eric Rosen on “the new chess boom,” featuring a cover that went viral on social media!; Michael Tisserand on Charlie Gabriel, the coolest octogenarian jazz player and chess fan in New Orleans; and GM Maurice Ashley on 11-year-old phenom IM Abhimanyu Mishra.

Our November issue has gained wide attention across the world for its cover story on the Netflix limited series The Queen’s Gambit by longtime Chess Life columnist Bruce Pandolfini. It also features articles by GM Jacob Aagaard, GM Sam Shankland, and WFM Elizabeth Spiegel, made famous in the 2012 documentary Brooklyn Castle.

Elizabeth Spiegel • GM Jesse Kraai • GM Jacob Aagaard • FM Carsten Hansen • IM John Watson • IM Eric Rosen • GM Maurice Ashley

Predicting Chess Player Strength from Game Annotations

Picking the Amateur’s Mind – Predicting Chess Player Strength from Game Annotations

Christian Scheible, Institute for Natural Language Processing, University of Stuttgart, Germany, [email protected]. Hinrich Schütze, Center for Information and Language Processing, University of Munich, Germany.

Abstract

Results from psychology show a connection between a speaker’s expertise in a task and the language he uses to talk about it. In this paper, we present an empirical study on using linguistic evidence to predict the expertise of a speaker in a task: playing chess. Instructional chess literature claims that the mindsets of amateur and expert players differ fundamentally (Silman, 1999); psychological science has empirically arrived at similar results (e.g., Pfau and Murphy (1988)). We conduct experiments on automatically predicting chess player skill based on their natural language game commentary. We make use of annotated chess games, in which players provide their own interpretation of game in prose. Based on a dataset collected from an online chess forum, we predict player strength through SVM classification and ranking. We show that using textual and chess-specific features achieves both high classification accuracy and significant correlation. Finally, we compare our findings to claims from the chess literature and results from psychology.

1 Introduction

It has been recognized that the language used when describing a certain topic or activity may differ strongly depending on the speaker’s level of expertise. As shown in empirical experiments in psychology (e.g., Solomon (1990), Pfau and Murphy (1988)), a speaker’s linguistic choices are influenced by the way he thinks about the topic.

The Impact of Artificial Intelligence on the Chess World

JMIR Serious Games. Viewpoint.

Delia Monica Duca Iliescu, BSc, MSc. Transilvania University of Brasov, Brasov, Romania.

Corresponding Author: Delia Monica Duca Iliescu, BSc, MSc, Transilvania University of Brasov, Bdul Eroilor 29, Brasov, Romania. Phone: 40 268413000. Email: [email protected]

Abstract

This paper focuses on key areas in which artificial intelligence has affected the chess world, including cheat detection methods, which are especially necessary recently, as there has been an unexpected rise in the popularity of online chess. Many major chess events that were to take place in 2020 have been canceled, but the global popularity of chess has in fact grown in recent months due to easier conversion of the game from offline to online formats compared with other games. Still, though a game of chess can be easily played online, there are some concerns about the increased chances of cheating. Artificial intelligence can address these concerns.

(JMIR Serious Games 2020;8(4):e24049) doi: 10.2196/24049

KEYWORDS: artificial intelligence; games; chess; AlphaZero; MuZero; cheat detection; coronavirus

Introduction

All major chess events that were to take place in the second half of 2020 have been canceled, including the 44th Chess Olympiad and the match for the title of World Chess Champion. However, ...

... strategic games, has affected many other areas of interest, as has already been seen in recent years.

One of the most famous human versus machine events was the 1997 victory of Deep Blue, an IBM chess software, against the famous chess champion Garry Kasparov [3].

Chess As a Commodity?

Debate. 10 Aug 2020.

Four and a half months have gone by with hardly any significant classical chess. Elite players have started to behave like hustlers. One of the most hyped chess competitions ever was staged between beginners. Raj Tischbierek asks if it is an irreversible development or just a nightmare that will pass.

A dream

One of those hot, sweaty June nights catapults me back to the year 1970. I am in the Yugoslavian town of Herceg Novi reporting for my magazine from the strongest blitz tournament in chess history until then. Bobby Fischer dominates the competition at will. The fight for second place is more exciting, between the four Soviets Mikhail Tal, Viktor Korchnoi, Tigran Petrosian and David Bronstein. In the last round Tal has gained substantial ground: in an endgame of rook versus rook he flags Petrosian! The Yugoslav commentators discuss with him the subtleties of this tricky endgame, which, I learn, is being played by the young Anatoly Karpov with masterful precision. Tal thus got ahead of Korchnoi, who tried his utmost against the American veteran Samuel Reshevsky. He didn’t simply resign what seemed to me a hopeless endgame, but let his clock run down almost to the last seconds. In vain: unlike in some earlier rounds, Sammy did not fall off the table from exhaustion and Korchnoi had to accept a bitter defeat. Among the kibitzers I spot FIDE President Folke Rogard: “How do you like the tournament?” – “Great! The response is overwhelming! In the next room, a television set is showing a football match between Yugoslavia and Austria.

Online Chess Masters Club

International Journal of Advanced Research in Computer Science and Software Engineering. Volume 5, Issue 2, February 2015. ISSN: 2277 128X. Research Paper. Available online at: www.ijarcsse.com

Rohini Chindhalore*, Radhika Joshi, Shital Selokar, Sharvari Paretkar, Anagha Khergade. Department of Computer Science and Engineering, Rajiv Gandhi College of Engineering and Research, Wanadongri, Nagpur, India.

Abstract: The paper presents an online chess game that supports human-versus-human play. The players are not required to be in the same region, i.e. they could be from any city, country or continent. In an offline chess game, players must be in the same place, in fact on the same PC. In this application, players only have to register, after which they receive a unique ID. Using that ID, a player logs in to the application, searches for registered users who are online, and sends them a request to play. Both players’ moves are recorded and shown in a panel placed near the chessboard.

Keywords: Chess game, registration, profile management, Rational Rose, activities management.

I. INTRODUCTION

Chess is an already developed game, but it is an offline game, i.e., the player can play against the computer or against a human, with the restriction that it must be played on a single computer. In an online chess game, a player can play with other players who are unknown to him. In an online chess game, you first have to register by filling in a simple form with basic information. The administrator then gives a unique ID and password to the registered user.

CHAPTER 10 ONLY Revised August 24, 2020

US CHESS FEDERATION’S OFFICIAL RULES OF CHESS, 7TH EDITION
FREE DOWNLOAD VERSION: CHAPTER 10 ONLY
Revised August 24, 2020

Tim Just, Chief Editor. US Chess National Tournament Director, FIDE National Arbiter.

Contents

Chapter 10: Rules for Online Tournaments and Matches

1. Introduction.
1A. Purview.
1B. Online Tournaments and Matches.

2. The US Chess Online Rating System.
2A. Online Ratings and Over-The-Board (OTB) Ratings.
2B. Ratable Time Controls for Online Play.
2C. US Chess Rating System and FIDE Rating System.
2D. Calculating Player Ratings.
2E. Assigning Ratings to Unrated Players.

ACN 2021.Indd

Atlantic Chess News

It is no secret that women have long been underrepresented in chess, especially at the highest levels. At last year’s Team, some of the top young female chess talents in the Tri-State Area teamed up and nearly took down the whole tournament. While SIG Calls edged them out by half a point to win clear first (more on page 3), the girls’ 5.5/6 tally is still a super impressive score in the biggest Team to date.

This elite group of titled teens is made up of WFM Martha Samadashvili, WIM Evelyn Zhu, WFM Yassi Ehsani, and WFM Ellen Wang (in board order). All numbering in the top 50 women in the US, they each have many personal accolades. They are all several-year veterans of the Team, though this is the first time they played together. The team was organized with help from Sophia Rhode, who is one of the top organizers in the state of New York (from which all four girls hail).

There was a consensus that, during the tournament, they weren’t particularly focused on the fact that they were playing as an all-girls team since there was winning to be done, but they all shared Yassi’s sentiment that “... it was nice to be on an all female team for once.” The team gained a lot of publicity for their performance, and they channeled that into a series of “Unruly Queens” events. These included tournaments and camps run in conjunction with the USCF which aimed to attract more young women to the game.