The Impact of Artificial Intelligence on the Chess World



Recommended publications


Game Changer

Matthew Sadler and Natasha Regan, Game Changer: AlphaZero’s Groundbreaking Chess Strategies and the Promise of AI. New In Chess, 2019.

Contents: Explanation of symbols; Foreword by Garry Kasparov; Introduction by Demis Hassabis; Preface; Introduction.

Part I, AlphaZero’s history: Chapter 1, A quick tour of computer chess competition; Chapter 2, ZeroZeroZero; Chapter 3, Demis Hassabis, DeepMind and AI.

Part II, Inside the box: Chapter 4, How AlphaZero thinks; Chapter 5, AlphaZero’s style – meeting in the middle.

Part III, Themes in AlphaZero’s play: Chapter 6, Introduction to our selected AlphaZero themes; Chapter 7, Piece mobility: outposts; Chapter 8, Piece mobility: activity; Chapter 9, Attacking the king: the march of the rook’s pawn; Chapter 10, Attacking the king: colour complexes; Chapter 11, Attacking the king: sacrifices for time, space and damage; Chapter 12, Attacking the king: opposite-side castling; Chapter 13, Attacking the king: defence.

Part IV, AlphaZero’s …

Improving Model-Based Reinforcement Learning with Internal State Representations Through Self-Supervision

Julien Scholz, Cornelius Weber, Muhammad Burhan Hafez and Stefan Wermter. Knowledge Technology, Department of Informatics, University of Hamburg, Germany.

Abstract: Using a model of the environment, reinforcement learning agents can plan their future moves and achieve superhuman performance in board games like Chess, Shogi, and Go, while remaining relatively sample-efficient. As demonstrated by the MuZero Algorithm, the environment model can even be learned dynamically, generalizing the agent to many more tasks while at the same time achieving state-of-the-art performance. Notably, MuZero uses internal state representations derived from real environment states for its predictions. In this paper, we bind the model’s predicted internal state representation to the environment state via two additional terms: a reconstruction model loss and a simpler consistency loss, both of which work independently and unsupervised, acting as constraints to stabilize the learning process. Our experiments show that this new integration of reconstruction model loss and simpler consistency loss provides a significant performance increase in OpenAI Gym environments.

… the design decisions made for MuZero, namely, how unconstrained its learning process is. After explaining all necessary fundamentals, we propose two individual augmentations to the algorithm that work fully unsupervised in an attempt to improve its performance and make it more reliable. Afterward, we evaluate our proposals by benchmarking the regular MuZero algorithm against the newly augmented creation.

II. BACKGROUND

A. MuZero Algorithm

The MuZero algorithm [1] is a model-based reinforcement learning algorithm that builds upon the success of its prede…

Survey on Reinforcement Learning for Language Processing

Víctor Uc-Cetina (Universidad Autónoma de Yucatán), Nicolás Navarro-Guerrero (Aarhus University), Anabel Martin-Gonzalez (Universidad Autónoma de Yucatán), Cornelius Weber (Universität Hamburg), Stefan Wermter (Universität Hamburg). February 2021. arXiv:2104.05565v1 [cs.CL], 12 Apr 2021.

Abstract: In recent years some researchers have explored the use of reinforcement learning (RL) algorithms as key components in the solution of various natural language processing tasks. For instance, some of these algorithms leveraging deep neural learning have found their way into conversational systems. This paper reviews the state of the art of RL methods for their possible use for different problems of natural language processing, focusing primarily on conversational systems, mainly due to their growing relevance. We provide detailed descriptions of the problems as well as discussions of why RL is well-suited to solve them. Also, we analyze the advantages and limitations of these methods. Finally, we elaborate on promising research directions in natural language processing that might benefit from reinforcement learning.

Keywords: reinforcement learning, natural language processing, conversational systems, parsing, translation, text generation

1 Introduction

Machine learning algorithms have been very successful to solve problems in the natural language processing (NLP) domain for many years, especially supervised and unsupervised methods. However, this is not the case with reinforcement learning (RL), which is somewhat surprising since in other domains, reinforcement learning methods have experienced an increased level of success with some impressive results, for instance in board games such as AlphaGo Zero [106].

Lessons Learned: a Security Analysis of the Internet Chess Club

John Black, Martin Cochran, Ryan Gardner. University of Colorado, Department of Computer Science, UCB 430, Boulder, CO 80309 USA.

Abstract: The Internet Chess Club (ICC) is a popular online chess server with more than 30,000 members worldwide including various celebrities and the best chess players in the world. Although the ICC website assures its users that the security protocol used between client and server provides sufficient security for sensitive information to be transmitted (such as credit card numbers), we show this is not true. In particular we show how a passive adversary can easily read all communications with a trivial amount of computation, and how an active adversary can gain virtually unlimited powers over an ICC user.

… between players. Each move a player made was transmitted (in the clear) to an ICS server, which would then relay that move to the opponent. The server enforced the rules of chess, recorded the position of the game after each move, adjusted the ratings of the players according to the outcome of the game, and so forth.

Serious chess players use a pair of clocks to enforce the requirement that players move in a reasonable amount of time: suppose Alice is playing Bob; at the beginning of a game, each player is allocated some number of minutes. When Alice is thinking, her time ticks down; after she moves, Bob begins thinking as his time ticks down. If …
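The two-clock behaviour in the excerpt above can be illustrated with a minimal Python sketch. The class name `ChessClock`, its `move` method, and the choice to pass thinking time in explicitly (rather than reading a real timer) are assumptions made for illustration, not anything taken from the paper. The excerpt is cut off before it says what happens when a clock reaches zero, so the "flagged" state below is also an assumption.

```python
class ChessClock:
    """Toy model of a pair of chess clocks (hypothetical, for illustration)."""

    def __init__(self, minutes, players=("Alice", "Bob")):
        # Each player starts with the same allocation, stored in seconds.
        self.players = players
        self.remaining = {p: minutes * 60.0 for p in players}
        self.turn = 0        # index of the player whose clock is running
        self.flagged = None  # assumption: name of a player who ran out of time

    @property
    def to_move(self):
        return self.players[self.turn]

    def move(self, thinking_seconds):
        """Charge thinking time to the player on move, then switch clocks."""
        if self.flagged is not None:
            raise RuntimeError(f"{self.flagged} has already run out of time")
        player = self.to_move
        self.remaining[player] -= thinking_seconds
        if self.remaining[player] <= 0:
            # Assumed rule: the clock stops at zero and the player is flagged.
            self.remaining[player] = 0.0
            self.flagged = player
            return
        self.turn = 1 - self.turn  # the opponent's clock starts ticking


clock = ChessClock(minutes=5)
clock.move(30)    # Alice thinks for 30 seconds, then Bob's clock runs
clock.move(300)   # Bob uses his whole allocation
print(clock.remaining, clock.flagged)
```

Passing elapsed time as an argument keeps the sketch deterministic; a real client or server would measure it, and which side does the measuring is exactly the kind of design decision a security analysis would examine.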

Monte-Carlo Tree Search As Regularized Policy Optimization

Jean-Bastien Grill, Florent Altché, Yunhao Tang, Thomas Hubert, Michal Valko, Ioannis Antonoglou, Rémi Munos.

Abstract: The combination of Monte-Carlo tree search (MCTS) with deep reinforcement learning has led to significant advances in artificial intelligence. However, AlphaZero, the current state-of-the-art MCTS algorithm, still relies on handcrafted heuristics that are only partially understood. In this paper, we show that AlphaZero’s search heuristics, along with other common ones such as UCT, are an approximation to the solution of a specific regularized policy optimization problem. With this insight, we propose a variant of AlphaZero which uses the exact solution to this policy optimization problem, and show experimentally that it reliably outperforms the original algorithm in multiple domains.

… AlphaZero employs an alternative handcrafted heuristic to achieve super-human performance on board games (Silver et al., 2016). Recent MCTS-based MuZero (Schrittwieser et al., 2019) has also led to state-of-the-art results in the Atari benchmarks (Bellemare et al., 2013).

Our main contribution is connecting MCTS algorithms, in particular the highly-successful AlphaZero, with MPO, a state-of-the-art model-free policy-optimization algorithm (Abdolmaleki et al., 2018). Specifically, we show that the empirical visit distribution of actions in AlphaZero’s search procedure approximates the solution of a regularized policy-optimization objective. With this insight, our second contribution a modified version of AlphaZero that comes significant performance gains over the original algorithm, especially in cases where AlphaZero has been observed to …

Multi-Agent Reinforcement Learning: a Review of Challenges and Applications

Applied Sciences, Review. Lorenzo Canese †, Gian Carlo Cardarilli, Luca Di Nunzio †, Rocco Fazzolari †, Daniele Giardino †, Marco Re † and Sergio Spanò *,†. Department of Electronic Engineering, University of Rome “Tor Vergata”, Via del Politecnico 1, 00133 Rome, Italy. * Correspondence: Tel.: +39-06-7259-7273. † These authors contributed equally to this work.

Abstract: In this review, we present an analysis of the most used multi-agent reinforcement learning algorithms. Starting with the single-agent reinforcement learning algorithms, we focus on the most critical issues that must be taken into account in their extension to multi-agent scenarios. The analyzed algorithms were grouped according to their features. We present a detailed taxonomy of the main multi-agent approaches proposed in the literature, focusing on their related mathematical models. For each algorithm, we describe the possible application fields, while pointing out its pros and cons. The described multi-agent algorithms are compared in terms of the most important characteristics for multi-agent reinforcement learning applications, namely, nonstationarity, scalability, and observability. We also describe the most common benchmark environments used to evaluate the performances of the considered methods.

Keywords: machine learning; reinforcement learning; multi-agent; swarm

Citation: Canese, L.; Cardarilli, G.C.; Di Nunzio, L.; Fazzolari, R.; Giardino, D.; Re, M.; Spanò, S. Multi-Agent Reinforcement Learning: A Review of Challenges and Applications.

Combining Off and On-Policy Training in Model-Based Reinforcement Learning

Alexandre Borges and Arlindo L. Oliveira. INESC-ID / Instituto Superior Técnico, University of Lisbon, Lisbon, Portugal.

ABSTRACT: The combination of deep learning and Monte Carlo Tree Search (MCTS) has shown to be effective in various domains, such as board and video games. AlphaGo [1] represented a significant step forward in our ability to learn complex board games, and it was rapidly followed by significant advances, such as AlphaGo Zero [2] and AlphaZero [3]. Recently, MuZero [4] demonstrated that it is possible to master both Atari games and board games by directly learning a model of the environment, which is then used with Monte Carlo Tree Search (MCTS) [5] to decide what move to play in each position. During tree search, the algorithm simulates games by exploring several possible moves and then picks the action that corresponds to the most promising trajectory.

… factor and game length. AlphaGo [1] was the first computer program to beat a professional human player in the game of Go by combining deep neural networks with tree search. In the first training phase, the system learned from expert games, but a second training phase enabled the system to improve its performance by self-play using reinforcement learning. AlphaGoZero [2] learned only through self-play and was able to beat AlphaGo soundly. AlphaZero [3] improved on AlphaGoZero by generalizing the model to any board game. However, these methods were designed only for board games, specifically for two-player zero-sum games. Recently, MuZero [4] improved on AlphaZero by generalizing it even more, so that it can learn to play single-agent games while, …
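The "simulate games, then pick the most promising action" idea in the excerpt above can be shown at toy scale. The Python sketch below is a flat Monte Carlo search: it scores each legal move by random playouts and picks the best average. It is deliberately not full MCTS (no tree, no exploration bonus, no neural network) and not the method of the paper. The game (a Nim variant where players take 1 to 3 stones and whoever takes the last stone wins) and all function names are assumptions chosen for illustration.

```python
import random


def legal_moves(stones):
    """In this toy Nim variant a player may take 1, 2 or 3 stones."""
    return list(range(1, min(3, stones) + 1))


def random_playout(stones, rng):
    """Play uniformly random moves until the pile is empty.

    Returns True if the player who moves first from `stones`
    ends up taking the last stone (and therefore wins).
    """
    first_player_to_move = True
    while stones > 0:
        stones -= rng.choice(legal_moves(stones))
        if stones == 0:
            return first_player_to_move
        first_player_to_move = not first_player_to_move
    return False  # empty pile: the player to move has already lost


def best_move(stones, playouts=2000, seed=0):
    """Score each legal move by random playouts and return the best one."""
    rng = random.Random(seed)
    scores = {}
    for move in legal_moves(stones):
        wins = 0
        for _ in range(playouts):
            # After our move the opponent is to move; we win when they lose.
            if not random_playout(stones - move, rng):
                wins += 1
        scores[move] = wins / playouts
    return max(scores, key=scores.get), scores


move, scores = best_move(5)
print(move, scores)
```

With five stones the search should favour taking one stone, which leaves the opponent a pile of four. Tree-based methods such as MCTS refine this by reusing statistics across positions and concentrating simulations on the moves that look best so far.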

E-Magazine July 2020

E-Magazine, July 2020

#EOCC2020: European Online Corporate Championships announced for 3 and 4 October.

#EOYCC2020: European Online Youth Chess Championship announced for 18-20 September.

International Chess Day, recognised by the United Nations and UNESCO, celebrated all over the world

#InternationalChessDay: On 20 July, the world celebrates International Chess Day. The International Chess Federation (FIDE) was founded 96 years ago, on 20 July 1924. The idea to celebrate this day as the International Chess Day was proposed by UNESCO, and it has been celebrated as such since 1966. On December 12, 2019, the UN General Assembly unanimously approved a resolution recognising the World Chess Day. On the occasion of the International Chess Day, a tele-meeting between the United Nations and FIDE was held on 20 July 2020. Top chess personalities and representatives of the U.N. gathered to exchange views and insights to strengthen the productive collaboration …

ECU decided to make a special promotional #Chess video. Mr. Zurab Azmaiparashvili visited Georgia's most popular musical competition show, “Big stage”. The ECU President taught the judges of the show, famous artists, how to play chess and then played a game with them! Federations, clubs, players and chess lovers took part in the celebration all over the world.

The European Chess Union has its seat in Switzerland. Address: Rainweidstrasse 2, CH-6333, Hunenberg See, Switzerland. The European Chess Union is an independent association founded in 1985 in Graz, Austria; it has 54 National Federation Members; every year the ECU organizes more than 20 prestigious events and championships. www.europechess.org

Efficiently Mastering the Game of NoGo with Deep Reinforcement

Electronics, Article. Efficiently Mastering the Game of NoGo with Deep Reinforcement Learning Supported by Domain Knowledge. Yifan Gao 1,*,† and Lezhou Wu 2,†. 1 College of Medicine and Biological Information Engineering, Northeastern University, Liaoning 110819, China. 2 College of Information Science and Engineering, Northeastern University, Liaoning 110819, China. † These authors contributed equally to this work.

Abstract: Computer games have been regarded as an important field of artificial intelligence (AI) for a long time. The AlphaZero structure has been successful in the game of Go, beating the top professional human players and becoming the baseline method in computer games. However, the AlphaZero training process requires tremendous computing resources, imposing additional difficulties for the AlphaZero-based AI. In this paper, we propose NoGoZero+ to improve the AlphaZero process and apply it to a game similar to Go, NoGo. NoGoZero+ employs several innovative features to improve training speed and performance, and most improvement strategies can be transferred to other nonspecific areas. This paper compares it with the original AlphaZero process, and results show that NoGoZero+ increases the training speed to about six times that of the original AlphaZero process. Moreover, in the experiment, our agent beat the original AlphaZero agent with a score of 81:19 after only being trained by 20,000 self-play games’ data (small in quantity compared with 120,000 self-play games’ data consumed by the original AlphaZero). The NoGo game program based on NoGoZero+ was the runner-up in the 2020 China Computer Game Championship (CCGC) with limited resources, defeating many AlphaZero-based programs.

Citation: Gao, Y.; Wu, L. Efficiently Mastering the Game of NoGo with Deep Reinforcement Learning …

ELF OpenGo: An Analysis and Open Reimplementation of AlphaZero

Yuandong Tian, Jerry Ma, Qucheng Gong, Shubho Sengupta, Zhuoyuan Chen, James Pinkerton, C. Lawrence Zitnick.

Abstract: The AlphaGo, AlphaGo Zero, and AlphaZero series of algorithms are remarkable demonstrations of deep reinforcement learning’s capabilities, achieving superhuman performance in the complex game of Go with progressively increasing autonomy. However, many obstacles remain in the understanding of and usability of these promising approaches by the research community. Toward elucidating unresolved mysteries and facilitating future research, we propose ELF OpenGo, an open-source reimplementation of the AlphaZero algorithm. ELF OpenGo is the first open-source Go AI to convincingly demonstrate superhuman performance with a perfect (20:0) record against global top professionals. We apply ELF OpenGo to conduct extensive ablation studies, and to identify and analyze numerous interesting phenomena in both the model training …

However, these advances in playing ability come at significant computational expense. A single training run requires millions of selfplay games and days of training on thousands of TPUs, which is an unattainable level of compute for the majority of the research community. When combined with the unavailability of code and models, the result is that the approach is very difficult, if not impossible, to reproduce, study, improve upon, and extend.

In this paper, we propose ELF OpenGo, an open-source reimplementation of the AlphaZero (Silver et al., 2018) algorithm for the game of Go. We then apply ELF OpenGo toward the following three additional contributions.

First, we train a superhuman model for ELF OpenGo. After running our AlphaZero-style training software on 2,000 GPUs for 9 days, our 20-block model has achieved superhuman performance that is arguably comparable to the 20-block models described in Silver et al. (2017) and Silver et al. (2018).

The Queen's Gambit

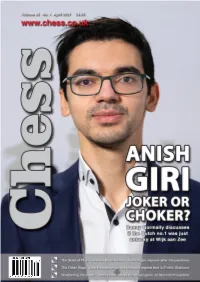

Chess, April 2021

Founding Editor: B.H. Wood, OBE. M.Sc †. Executive Editor: Malcolm Pein. Editors: Richard Palliser, Matt Read. Associate Editor: John Saunders. Subscriptions Manager: Paul Harrington.

Chess Magazine (ISSN 0964-6221) is published by: Chess & Bridge Ltd, 44 Baker St, London, W1U 7RT. Tel: 020 7486 7015. Website: www.chess.co.uk. Twitter: @CHESS_Magazine, @TelegraphChess (Malcolm Pein), @chessandbridge.

Contents

Editorial: Malcolm Pein on the latest developments in the game

60 Seconds with... Geert van der Velde: We catch up with the Play Magnus Group’s VP of Content

A Tale of Two Players: Wesley So shone while Carlsen struggled at the Opera Euro Rapid

Anish Giri: Choker or Joker?: Danny Gormally discusses if the Dutch no.1 was just unlucky at Wijk

How Good is Your Chess?: Daniel King also takes a look at the play of Anish Giri

The Other Saga: John Henderson very much …

Glossary of Chess

See also: Glossary of chess problems, Index of chess articles and Outline of chess

This page explains commonly used terms in chess in alphabetical order. Some of these have their own pages, like fork and pin. For a list of unorthodox chess pieces, see Fairy chess piece; for a list of terms specific to chess problems, see Glossary of chess problems; for a list of chess-related games, see Chess variants.

Contents: A, B, C, D, E, F, G, H, I, J, K, L, M, N, O, P, Q, R, S, T, U, V, W, X, Z, References

A

absolute pin: A pin against the king is called absolute since the pinned piece cannot legally move (as moving it would expose the king to check). Cf. relative pin.

active: 1. Describes a piece that controls a number of squares, or a piece that has a number of squares available for its next move. 2. An “active defense” is a defense employing threat(s) or counterattack(s). Antonym: passive.

adjournment: Suspension of a chess game with the intention to finish it later. It was once very common in high-level competition, often occurring soon after the first time control, but the practice has been abandoned due to the advent of computer analysis. See sealed move.

[Image caption: Envelope used for the adjournment of a match game Efim Geller vs. Bent Larsen, Copenhagen 1966]

adjudication: Decision by a strong chess player (the adjudicator) on the outcome of an unfinished game. This practice is now uncommon in over-the-board events, but does happen in online chess when one player refuses to continue after an adjournment.

B

… are often pawn moves; since pawns cannot move backwards to return to squares they have left, their …