Alpha Zero Paper

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Elements of DSAI: Game Tree Search, Learning Architectures

Introduction Games Game Search Evaluation Fns AlphaGo/Zero Summary Quiz References Elements of DSAI AlphaGo Part 2: Game Tree Search, Learning Architectures Search & Learn: A Recipe for AI Action Decisions J¨orgHoffmann Winter Term 2019/20 Hoffmann Elements of DSAI Game Tree Search, Learning Architectures 1/49 Introduction Games Game Search Evaluation Fns AlphaGo/Zero Summary Quiz References Competitive Agents? Quote AI Introduction: \Single agent vs. multi-agent: One agent or several? Competitive or collaborative?" ! Single agent! Several koalas, several gorillas trying to beat these up. BUT there is only a single acting entity { one player decides which moves to take (who gets into the boat). Hoffmann Elements of DSAI Game Tree Search, Learning Architectures 3/49 Introduction Games Game Search Evaluation Fns AlphaGo/Zero Summary Quiz References Competitive Agents! Quote AI Introduction: \Single agent vs. multi-agent: One agent or several? Competitive or collaborative?" ! Multi-agent competitive! TWO players deciding which moves to take. Conflicting interests. Hoffmann Elements of DSAI Game Tree Search, Learning Architectures 4/49 Introduction Games Game Search Evaluation Fns AlphaGo/Zero Summary Quiz References Agenda: Game Search, AlphaGo Architecture Games: What is that? ! Game categories, game solutions. Game Search: How to solve a game? ! Searching the game tree. Evaluation Functions: How to evaluate a game position? ! Heuristic functions for games. AlphaGo: How does it work? ! Overview of AlphaGo architecture, and changes in Alpha(Go) Zero. Hoffmann Elements of DSAI Game Tree Search, Learning Architectures 5/49 Introduction Games Game Search Evaluation Fns AlphaGo/Zero Summary Quiz References Positioning in the DSAI Phase Model Hoffmann Elements of DSAI Game Tree Search, Learning Architectures 6/49 Introduction Games Game Search Evaluation Fns AlphaGo/Zero Summary Quiz References Which Games? ! No chance element. -

Development of Games for Users with Visual Impairment Czech Technical University in Prague Faculty of Electrical Engineering

Development of games for users with visual impairment Czech Technical University in Prague Faculty of Electrical Engineering Dina Chernova January 2017 Acknowledgement I would first like to thank Bc. Honza Had´aˇcekfor his valuable advice. I am also very grateful to my supervisor Ing. Daniel Nov´ak,Ph.D. and to all participants that were involved in testing of my application for their precious time. I must express my profound gratitude to my loved ones for their support and continuous encouragement throughout my years of study. This accomplishment would not have been possible without them. Thank you. 5 Declaration I declare that I have developed this thesis on my own and that I have stated all the information sources in accordance with the methodological guideline of adhering to ethical principles during the preparation of university theses. In Prague 09.01.2017 Author 6 Abstract This bachelor thesis deals with analysis and implementation of mobile application that allows visually impaired people to play chess on their smart phones. The application con- trol is performed using special gestures and text{to{speech engine as a sound accompanier. For human against computer game mode I have used currently the best game engine called Stockfish. The application is developed under Android mobile platform. Keywords: chess; visually impaired; Android; Bakal´aˇrsk´apr´acese zab´yv´aanal´yzoua implementac´ımobiln´ıaplikace, kter´aumoˇzˇnuje zrakovˇepostiˇzen´ymlidem hr´atˇsachy na sv´emsmartphonu. Ovl´ad´an´ıaplikace se prov´ad´ı pomoc´ıspeci´aln´ıch gest a text{to{speech enginu pro zvukov´edoprov´azen´ı.V reˇzimu ˇclovˇek versus poˇc´ıtaˇcjsem pouˇzilasouˇcasnˇenejlepˇs´ıhern´ıengine Stockfish. -

Game Changer

Matthew Sadler and Natasha Regan Game Changer AlphaZero’s Groundbreaking Chess Strategies and the Promise of AI New In Chess 2019 Contents Explanation of symbols 6 Foreword by Garry Kasparov �������������������������������������������������������������������������������� 7 Introduction by Demis Hassabis 11 Preface 16 Introduction ������������������������������������������������������������������������������������������������������������ 19 Part I AlphaZero’s history . 23 Chapter 1 A quick tour of computer chess competition 24 Chapter 2 ZeroZeroZero ������������������������������������������������������������������������������ 33 Chapter 3 Demis Hassabis, DeepMind and AI 54 Part II Inside the box . 67 Chapter 4 How AlphaZero thinks 68 Chapter 5 AlphaZero’s style – meeting in the middle 87 Part III Themes in AlphaZero’s play . 131 Chapter 6 Introduction to our selected AlphaZero themes 132 Chapter 7 Piece mobility: outposts 137 Chapter 8 Piece mobility: activity 168 Chapter 9 Attacking the king: the march of the rook’s pawn 208 Chapter 10 Attacking the king: colour complexes 235 Chapter 11 Attacking the king: sacrifices for time, space and damage 276 Chapter 12 Attacking the king: opposite-side castling 299 Chapter 13 Attacking the king: defence 321 Part IV AlphaZero’s -

Reinforcement Learning (1) Key Concepts & Algorithms

Winter 2020 CSC 594 Topics in AI: Advanced Deep Learning 5. Deep Reinforcement Learning (1) Key Concepts & Algorithms (Most content adapted from OpenAI ‘Spinning Up’) 1 Noriko Tomuro Reinforcement Learning (RL) • Reinforcement Learning (RL) is a type of Machine Learning where an agent learns to achieve a goal by interacting with the environment -- trial and error. • RL is one of three basic machine learning paradigms, alongside supervised learning and unsupervised learning. • The purpose of RL is to learn an optimal policy that maximizes the return for the sequences of agent’s actions (i.e., optimal policy). https://en.wikipedia.org/wiki/Reinforcement_learning 2 • RL have recently enjoyed a wide variety of successes, e.g. – Robot controlling in simulation as well as in the real world Strategy games such as • AlphaGO (by Google DeepMind) • Atari games https://en.wikipedia.org/wiki/Reinforcement_learning 3 Deep Reinforcement Learning (DRL) • A policy is essentially a function, which maps the agent’s each action to the expected return or reward. Deep Reinforcement Learning (DRL) uses deep neural networks for the function (and other components). https://spinningup.openai.com/en/latest/spinningup/rl_intro.html 4 5 Some Key Concepts and Terminology 1. States and Observations – A state s is a complete description of the state of the world. For now, we can think of states belonging in the environment. – An observation o is a partial description of a state, which may omit information. – A state could be fully or partially observable to the agent. If partial, the agent forms an internal state (or state estimation). https://spinningup.openai.com/en/latest/spinningup/rl_intro.html 6 • States and Observations (cont.) – In deep RL, we almost always represent states and observations by a real-valued vector, matrix, or higher-order tensor. -

Discrete Sequential Prediction of Continu

Under review as a conference paper at ICLR 2018 DISCRETE SEQUENTIAL PREDICTION OF CONTINU- OUS ACTIONS FOR DEEP RL Anonymous authors Paper under double-blind review ABSTRACT It has long been assumed that high dimensional continuous control problems cannot be solved effectively by discretizing individual dimensions of the action space due to the exponentially large number of bins over which policies would have to be learned. In this paper, we draw inspiration from the recent success of sequence-to-sequence models for structured prediction problems to develop policies over discretized spaces. Central to this method is the realization that com- plex functions over high dimensional spaces can be modeled by neural networks that predict one dimension at a time. Specifically, we show how Q-values and policies over continuous spaces can be modeled using a next step prediction model over discretized dimensions. With this parameterization, it is possible to both leverage the compositional structure of action spaces during learning, as well as compute maxima over action spaces (approximately). On a simple example task we demonstrate empirically that our method can perform global search, which effectively gets around the local optimization issues that plague DDPG. We apply the technique to off-policy (Q-learning) methods and show that our method can achieve the state-of-the-art for off-policy methods on several continuous control tasks. 1 INTRODUCTION Reinforcement learning has long been considered as a general framework applicable to a broad range of problems. However, the approaches used to tackle discrete and continuous action spaces have been fundamentally different. In discrete domains, algorithms such as Q-learning leverage backups through Bellman equations and dynamic programming to solve problems effectively. -

Bridging Imagination and Reality for Model-Based Deep Reinforcement Learning

Bridging Imagination and Reality for Model-Based Deep Reinforcement Learning Guangxiang Zhu⇤ Minghao Zhang⇤ IIIS School of Software Tsinghua University Tsinghua University [email protected] [email protected] Honglak Lee Chongjie Zhang EECS IIIS University of Michigan Tsinghua University [email protected] [email protected] Abstract Sample efficiency has been one of the major challenges for deep reinforcement learning. Recently, model-based reinforcement learning has been proposed to address this challenge by performing planning on imaginary trajectories with a learned world model. However, world model learning may suffer from overfitting to training trajectories, and thus model-based value estimation and policy search will be prone to be sucked in an inferior local policy. In this paper, we propose a novel model-based reinforcement learning algorithm, called BrIdging Reality and Dream (BIRD). It maximizes the mutual information between imaginary and real trajectories so that the policy improvement learned from imaginary trajectories can be easily generalized to real trajectories. We demonstrate that our approach improves sample efficiency of model-based planning, and achieves state-of-the-art performance on challenging visual control benchmarks. 1 Introduction Reinforcement learning (RL) is proposed as a general-purpose learning framework for artificial intelligence problems, and has led to tremendous progress in a variety of domains [1, 2, 3, 4]. Model- free RL adopts a trail-and-error paradigm, which directly learns a mapping function from observations to values or actions through interactions with environments. It has achieved remarkable performance in certain video games and continuous control tasks because of its simplicity and minimal assumptions about environments. -

OLIVAW: Mastering Othello with Neither Humans Nor a Penny Antonio Norelli, Alessandro Panconesi Dept

1 OLIVAW: Mastering Othello with neither Humans nor a Penny Antonio Norelli, Alessandro Panconesi Dept. of Computer Science, Universita` La Sapienza, Rome, Italy Abstract—We introduce OLIVAW, an AI Othello player adopt- of companies. Another aspect of the same problem is the ing the design principles of the famous AlphaGo series. The main amount of training needed. AlphaGo Zero required 4.9 million motivation behind OLIVAW was to attain exceptional competence games played during self-play. While to attain the level of in a non-trivial board game at a tiny fraction of the cost of its illustrious predecessors. In this paper, we show how the grandmaster for games like Starcraft II and Dota 2 the training AlphaGo Zero’s paradigm can be successfully applied to the required 200 years and more than 10,000 years of gameplay, popular game of Othello using only commodity hardware and respectively [7], [8]. free cloud services. While being simpler than Chess or Go, Thus one of the major problems to emerge in the wake Othello maintains a considerable search space and difficulty of these breakthroughs is whether comparable results can be in evaluating board positions. To achieve this result, OLIVAW implements some improvements inspired by recent works to attained at a much lower cost– computational and financial– accelerate the standard AlphaGo Zero learning process. The and with just commodity hardware. In this paper we take main modification implies doubling the positions collected per a small step in this direction, by showing that AlphaGo game during the training phase, by including also positions not Zero’s successful paradigm can be replicated for the game played but largely explored by the agent. -

(2021), 2814-2819 Research Article Can Chess Ever Be Solved Na

Turkish Journal of Computer and Mathematics Education Vol.12 No.2 (2021), 2814-2819 Research Article Can Chess Ever Be Solved Naveen Kumar1, Bhayaa Sharma2 1,2Department of Mathematics, University Institute of Sciences, Chandigarh University, Gharuan, Mohali, Punjab-140413, India [email protected], [email protected] Article History: Received: 11 January 2021; Accepted: 27 February 2021; Published online: 5 April 2021 Abstract: Data Science and Artificial Intelligence have been all over the world lately,in almost every possible field be it finance,education,entertainment,healthcare,astronomy, astrology, and many more sports is no exception. With so much data, statistics, and analysis available in this particular field, when everything is being recorded it has become easier for team selectors, broadcasters, audience, sponsors, most importantly for players themselves to prepare against various opponents. Even the analysis has improved over the period of time with the evolvement of AI, not only analysis one can even predict the things with the insights available. This is not even restricted to this,nowadays players are trained in such a manner that they are capable of taking the most feasible and rational decisions in any given situation. Chess is one of those sports that depend on calculations, algorithms, analysis, decisions etc. Being said that whenever the analysis is involved, we have always improvised on the techniques. Algorithms are somethingwhich can be solved with the help of various software, does that imply that chess can be fully solved,in simple words does that mean that if both the players play the best moves respectively then the game must end in a draw or does that mean that white wins having the first move advantage. -

Download Source Engine for Pc Free Download Source Engine for Pc Free

download source engine for pc free Download source engine for pc free. Completing the CAPTCHA proves you are a human and gives you temporary access to the web property. What can I do to prevent this in the future? If you are on a personal connection, like at home, you can run an anti-virus scan on your device to make sure it is not infected with malware. If you are at an office or shared network, you can ask the network administrator to run a scan across the network looking for misconfigured or infected devices. Another way to prevent getting this page in the future is to use Privacy Pass. You may need to download version 2.0 now from the Chrome Web Store. Cloudflare Ray ID: 67a0b2f3bed7f14e • Your IP : 188.246.226.140 • Performance & security by Cloudflare. Download source engine for pc free. Completing the CAPTCHA proves you are a human and gives you temporary access to the web property. What can I do to prevent this in the future? If you are on a personal connection, like at home, you can run an anti-virus scan on your device to make sure it is not infected with malware. If you are at an office or shared network, you can ask the network administrator to run a scan across the network looking for misconfigured or infected devices. Another way to prevent getting this page in the future is to use Privacy Pass. You may need to download version 2.0 now from the Chrome Web Store. Cloudflare Ray ID: 67a0b2f3c99315dc • Your IP : 188.246.226.140 • Performance & security by Cloudflare. -

Improving the Gating Mechanism of Recurrent

Under review as a conference paper at ICLR 2020 IMPROVING THE GATING MECHANISM OF RECURRENT NEURAL NETWORKS Anonymous authors Paper under double-blind review ABSTRACT Gating mechanisms are widely used in neural network models, where they allow gradients to backpropagate more easily through depth or time. However, their satura- tion property introduces problems of its own. For example, in recurrent models these gates need to have outputs near 1 to propagate information over long time-delays, which requires them to operate in their saturation regime and hinders gradient-based learning of the gate mechanism. We address this problem by deriving two synergistic modifications to the standard gating mechanism that are easy to implement, introduce no additional hyperparameters, and improve learnability of the gates when they are close to saturation. We show how these changes are related to and improve on alternative recently proposed gating mechanisms such as chrono-initialization and Ordered Neurons. Empirically, our simple gating mechanisms robustly improve the performance of recurrent models on a range of applications, including synthetic memorization tasks, sequential image classification, language modeling, and reinforcement learning, particularly when long-term dependencies are involved. 1 INTRODUCTION Recurrent neural networks (RNNs) have become a standard machine learning tool for learning from sequential data. However, RNNs are prone to the vanishing gradient problem, which occurs when the gradients of the recurrent weights become vanishingly small as they get backpropagated through time (Hochreiter et al., 2001). A common approach to alleviate the vanishing gradient problem is to use gating mechanisms, leading to models such as the long short term memory (Hochreiter & Schmidhuber, 1997, LSTM) and gated recurrent units (Chung et al., 2014, GRUs). -

Monte-Carlo Tree Search As Regularized Policy Optimization

Monte-Carlo tree search as regularized policy optimization Jean-Bastien Grill * 1 Florent Altche´ * 1 Yunhao Tang * 1 2 Thomas Hubert 3 Michal Valko 1 Ioannis Antonoglou 3 Remi´ Munos 1 Abstract AlphaZero employs an alternative handcrafted heuristic to achieve super-human performance on board games (Silver The combination of Monte-Carlo tree search et al., 2016). Recent MCTS-based MuZero (Schrittwieser (MCTS) with deep reinforcement learning has et al., 2019) has also led to state-of-the-art results in the led to significant advances in artificial intelli- Atari benchmarks (Bellemare et al., 2013). gence. However, AlphaZero, the current state- of-the-art MCTS algorithm, still relies on hand- Our main contribution is connecting MCTS algorithms, crafted heuristics that are only partially under- in particular the highly-successful AlphaZero, with MPO, stood. In this paper, we show that AlphaZero’s a state-of-the-art model-free policy-optimization algo- search heuristics, along with other common ones rithm (Abdolmaleki et al., 2018). Specifically, we show that such as UCT, are an approximation to the solu- the empirical visit distribution of actions in AlphaZero’s tion of a specific regularized policy optimization search procedure approximates the solution of a regularized problem. With this insight, we propose a variant policy-optimization objective. With this insight, our second of AlphaZero which uses the exact solution to contribution a modified version of AlphaZero that comes this policy optimization problem, and show exper- significant performance gains over the original algorithm, imentally that it reliably outperforms the original especially in cases where AlphaZero has been observed to algorithm in multiple domains. -

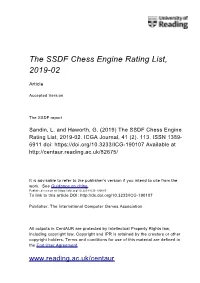

The SSDF Chess Engine Rating List, 2019-02

The SSDF Chess Engine Rating List, 2019-02 Article Accepted Version The SSDF report Sandin, L. and Haworth, G. (2019) The SSDF Chess Engine Rating List, 2019-02. ICGA Journal, 41 (2). 113. ISSN 1389- 6911 doi: https://doi.org/10.3233/ICG-190107 Available at http://centaur.reading.ac.uk/82675/ It is advisable to refer to the publisher’s version if you intend to cite from the work. See Guidance on citing . Published version at: https://doi.org/10.3233/ICG-190085 To link to this article DOI: http://dx.doi.org/10.3233/ICG-190107 Publisher: The International Computer Games Association All outputs in CentAUR are protected by Intellectual Property Rights law, including copyright law. Copyright and IPR is retained by the creators or other copyright holders. Terms and conditions for use of this material are defined in the End User Agreement . www.reading.ac.uk/centaur CentAUR Central Archive at the University of Reading Reading’s research outputs online THE SSDF RATING LIST 2019-02-28 148673 games played by 377 computers Rating + - Games Won Oppo ------ --- --- ----- --- ---- 1 Stockfish 9 x64 1800X 3.6 GHz 3494 32 -30 642 74% 3308 2 Komodo 12.3 x64 1800X 3.6 GHz 3456 30 -28 640 68% 3321 3 Stockfish 9 x64 Q6600 2.4 GHz 3446 50 -48 200 57% 3396 4 Stockfish 8 x64 1800X 3.6 GHz 3432 26 -24 1059 77% 3217 5 Stockfish 8 x64 Q6600 2.4 GHz 3418 38 -35 440 72% 3251 6 Komodo 11.01 x64 1800X 3.6 GHz 3397 23 -22 1134 72% 3229 7 Deep Shredder 13 x64 1800X 3.6 GHz 3360 25 -24 830 66% 3246 8 Booot 6.3.1 x64 1800X 3.6 GHz 3352 29 -29 560 54% 3319 9 Komodo 9.1