Centaur Adds Aito Server Processor

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

MICROPROCESSOR REPORT the INSIDERS’ GUIDE to MICROPROCESSOR HARDWARE Slot Vs

VOLUME 12, NUMBER 1 JANUARY 26, 1998 MICROPROCESSOR REPORT THE INSIDERS’ GUIDE TO MICROPROCESSOR HARDWARE Slot vs. Socket Battle Heats Up Intel Prepares for Transition as Competitors Boost Socket 7 A A look Look by Michael Slater ship as many parts as they hoped, especially at the highest backBack clock speeds where profits are much greater. The past year has brought a great deal The shift to 0.25-micron technology will be central to of change to the x86 microprocessor 1998’s CPU developments. Intel began shipping 0.25-micron A market, with Intel, AMD, and Cyrix processors in 3Q97; AMD followed late in 1997, IDT plans to LookA look replacing virtually their entire product join in by mid-98, and Cyrix expects to catch up in 3Q98. Ahead ahead lines with new devices. But despite high The more advanced process technology will cut power con- hopes, AMD and Cyrix struggled in vain for profits. The sumption, allowing sixth-generation CPUs to be used in financial contrast is stark: in 1997, Intel earned a record notebook systems. The smaller die sizes will enable higher $6.9 billion in net profit, while AMD lost $21 million for the production volumes and make it possible to integrate an L2 year and Cyrix lost $6 million in the six months before it was cache on the CPU chip. acquired by National. New entrant IDT added another com- The processors from Intel’s challengers have lagged in petitor to the mix but hasn’t shipped enough products to floating-point and MMX performance, which the vendors become a significant force. -

World's First High- Performance X86 With

World’s First High- Performance x86 with Integrated AI Coprocessor Linley Spring Processor Conference 2020 April 8, 2020 G Glenn Henry Dr. Parviz Palangpour Chief AI Architect AI Software Deep Dive into Centaur’s New x86 AI Coprocessor (Ncore) • Background • Motivations • Constraints • Architecture • Software • Benchmarks • Conclusion Demonstrated Working Silicon For Video-Analytics Edge Server Nov, 2019 ©2020 Centaur Technology. All Rights Reserved Centaur Technology Background • 25-year-old startup in Austin, owned by Via Technologies • We design, from scratch, low-cost x86 processors • Everything to produce a custom x86 SoC with ~100 people • Architecture, logic design and microcode • Design, verification, and layout • Physical build, fab interface, and tape-out • Shipped by IBM, HP, Dell, Samsung, Lenovo… ©2020 Centaur Technology. All Rights Reserved Genesis of the AI Coprocessor (Ncore) • Centaur was developing SoC (CHA) with new x86 cores • Targeted at edge/cloud server market (high-end x86 features) • Huge inference markets beyond hyperscale cloud, IOT and mobile • Video analytics, edge computing, on-premise servers • However, x86 isn’t efficient at inference • High-performance inference requires external accelerator • CHA has 44x PCIe to support GPUs, etc. • But adds cost, power, another point of failure, etc. ©2020 Centaur Technology. All Rights Reserved Why not integrate a coprocessor? • Very low cost • Many components already on SoC (“free” to Ncore) Caches, memory, clock, power, package, pins, busses, etc. • There often is “free” space on complex SOCs due to I/O & pins • Having many high-performance x86 cores allows flexibility • The x86 cores can do some of the work, in parallel • Didn’t have to implement all strange/new functions • Allows fast prototyping of new things • For customer: nothing extra to buy ©2020 Centaur Technology. -

MPR Article Template

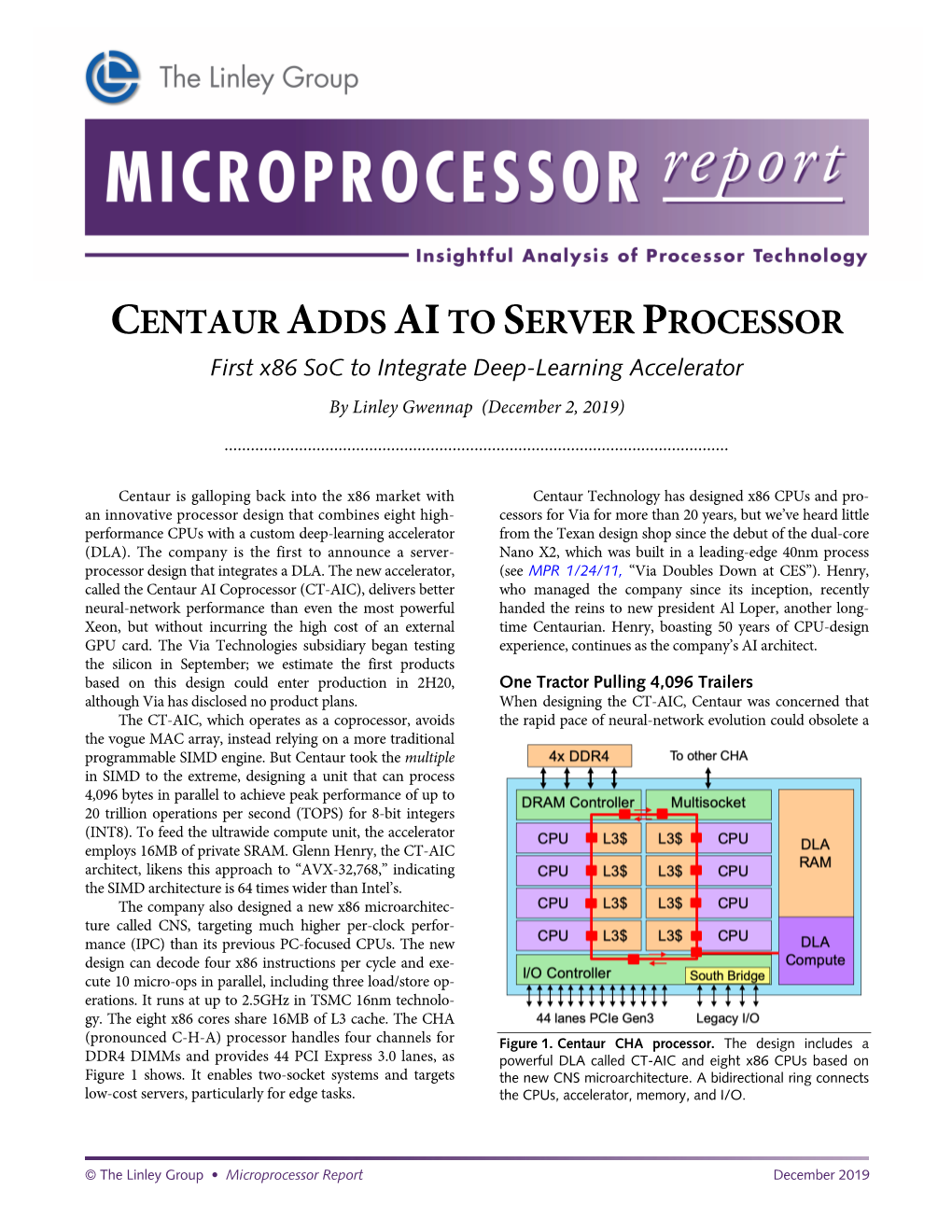

CENTAUR ADDS AI TO SERVER PROCESSOR First x86 SoC to Integrate Deep-Learning Accelerator By Linley Gwennap (December 2, 2019) ................................................................................................................... Centaur is galloping back into the x86 market with Centaur Technology has designed x86 CPUs and pro- an innovative processor design that combines eight high- cessors for Via for more than 20 years, but we’ve heard little performance CPUs with a custom deep-learning accelerator from the Texan design shop since the debut of the dual-core (DLA). The company is the first to announce a server- Nano X2, which was built in a leading-edge 40nm process processor design that integrates a DLA. The new accelerator, (see MPR 1/24/11, “Via Doubles Down at CES”). Henry, called Ncore, delivers better neural-network performance who managed the company since its inception, recently than even the most powerful Xeon, but without incurring handed the reins to new president Al Loper, another long- the high cost of an external GPU card. The Via Technologies time Centaurian. Henry, boasting 50 years of CPU-design subsidiary began testing the silicon in September; we esti- experience, continues as the company’s AI architect. mate the first products based on this design could enter pro- duction in 2H20, although Via has disclosed no product One Tractor Pulling 4,096 Trailers plans. When designing the Ncore accelerator, Centaur was con- Ncore, which operates as a coprocessor, avoids the cerned that the rapid pace of neural-network evolution vogue MAC array, instead relying on a more traditional pro- grammable SIMD engine. But Centaur took the multiple in 4x DDR4 To other CHA SIMD to the extreme, designing a unit that processes 4,096 bytes in parallel to achieve peak performance of 20 trillion operations per second (TOPS) for 8-bit integers (INT8). -

Architecture of VIA Isaiah (NANO)

Architecture of VIA Isaiah (NANO) Jan Davidek dav053 2008/2009 1. Introduction to the VIA Nano™ Processor The last few years have seen significant changes within the microprocessor industry, and indeed the entire IT landscape. Much of this change has been driven by three factors: the increasing focus of both business and consumer on energy efficiency, the rise of mobile computing, and the growing performance requirements of computing devices in a fast expanding multimedia environment. In the microprocessor space, the traditional race for ever faster processing speeds has given way to one that factors in the energy used to achieve those speeds. Performance per watt is the new metric by which quality is measured, with all the major players endeavoring to increase the performance capabilities of their products, while reducing the amount of energy that they require. Based on the recently announced VIA Isaiah Architecture, the new VIA Nano™ processor is a next-generation x86 processor that sets the standard in power efficiency for tomorrow’s immersive internet experience. With advanced power and thermal management features helping to make it the world’s most energy efficient x86 processor architecture, the VIA Nano processor also boasts ultra modern functionality, high-performance computation and media processing, and enhanced VIA PadLock™ hardware security features. Augmenting the VIA C7® family of processors, the VIA Nano processor’s pin compatibility extends the VIA processor platform portfolio, enabling OEMs to offer a wider range of products for different market segments, and furnishing them with the ability to upgrade device performance without incurring the time and cost expense associated with system redesign. -

VIA Technologies Inc. Confidential N D a R Equired

Embedded System Platform VIA Eden-N VIAProcessor Technologies Inc. Confidential Datasheet N D A R equired VIA TECHNOLOGIES, INC. Revision 0.92 October 22, 2004 VIA Eden-N Processor Datasheet October 22, 2004 This is Version 0.92 of the VIA Eden-N Processor Datasheet. © 2003-2004 VIA Technologies, Inc All Rights Reserved. VIA reserves the right to make changes in its products without notice in order to improve design or performance characteristics. This publication neither states nor implies any representations or warranties of any kind, including but not limited to any implied warranty of merchantability or fitness for a particular purpose. No license, express or implied, to any intellectual property rights is granted by this document. VIA makes no representations or warranties with respect to the accuracy or completeness of the con- tents of this publication or the information contained herein, and reserves the right to make. changes at any time, without notice. VIA disclaims responsibility for any consequences resulting from the use of the information included herein. Inc Cyrix and VIA C3 are trademarks of VIA Technologies, Inc. s CentaurHauls is a trademark of Centaur Technology Corporation. e AMD, AMD K6, and Athlon are trademarks of Advanced Micro Devices, Inc. Microsoft and Windows are registered trademarks of Microsoftgi Corporation. l o a Intel, Pentium, Celeron, and MMX are registered trademarks of Intel Corporation.i d Other product names used in this publication are for identification purposes only and maye be trade- marks of their respective companies. nt r hnol i c ide qu e e LIFE SUPPORT POLICY nf T VIA processor products are not authorized for use as componentso in life Rsupport or other medical devices or systems (hereinafter life support devices)A unless a specificC written agreement pertaining to such intended use is executed be- tween the manufacturer and anI officer of VIA. -

The Microarchitecture of Intel and AMD Cpus

3. The microarchitecture of Intel, AMD and VIA CPUs An optimization guide for assembly programmers and compiler makers By Agner Fog. Copenhagen University College of Engineering. Copyright © 1996 - 2012. Last updated 2012-02-29. Contents 1 Introduction ....................................................................................................................... 4 1.1 About this manual ....................................................................................................... 4 1.2 Microprocessor versions covered by this manual........................................................ 6 2 Out-of-order execution (All processors except P1, PMMX)................................................ 8 2.1 Instructions are split into µops..................................................................................... 8 2.2 Register renaming ...................................................................................................... 9 3 Branch prediction (all processors) ................................................................................... 11 3.1 Prediction methods for conditional jumps.................................................................. 11 3.2 Branch prediction in P1............................................................................................. 16 3.3 Branch prediction in PMMX, PPro, P2, and P3 ......................................................... 20 3.4 Branch prediction in P4 and P4E .............................................................................. 21 -

Die VIA Nano-Prozessor Architektur

Fakultät Informatik Institut für Technische Informatik Professur für VLSI-Entwurfssysteme, Diagnostik und Architektur Die VIA Nano-Prozessor Architektur Dresden, 20.05.2009 Gliederung • Einführung • Der Nano • Modelle • Architektur Highlights • Sicherheitsfunktionen • Energiemanagement • Cache subsystem • Struktur • Intel Atom • Performance TU Dresden, 27.05.2009 Folie 2 01 Einführung • Nano ist komplette Neuentwicklung • von Centaur Technology entwickelt • Ziel: • Leistungssteigerung zum C7 • Aber: Strombedarf so gering wie möglich • Warum?: • Wachsender Bedarf an Energie-Effizienz • Mobile computing • Steigende perfomance Anforderungen (Multimedia) TU Dresden, 27.05.2009 Folie 3 02 Der NANO in Fakten • 64 Bit Architektur • Basiert auf VIA Isaiah Architektur • 65 nm Technologie • Out -of -order Issue und Execution • Pin-Kompatible zu C7 • 64 Kbyte L1-Instruction Cache • 1 MB L2 Cache • Spezieller Prefetch-Cache • 95 Millionen Transistoren • TDP von 5-25 W TU Dresden, 27.05.2009 Folie 4 03 Modelle L2100, L2200 (low voltage); TU Dresden, 27.05.2009 Folie 5 04 Architektur Highlights • Superskalar und spekulative in-order fetching • kann 3 x86 Befehle pro Takt dekodieren • Out-of-order execution • Macro- und Micro-fusion • Performancesteigerung durch Befehlskombination • Branch-prediction • 8 verschiedene Vorhersagen in 2 Pipelinestufen • Sicherheitsfunktionen • Energiemanagement • Cache-Subsystem TU Dresden, 27.05.2009 Folie 6 04 Sicherheitsfunktionen Überblick • Verfügt über VSM • Secure execution mode TU Dresden, 27.05.2009 Folie 7 04 -

Software Optimization of High-Performance X86 with Integrated AI Coprocessor

Software Optimization of High-Performance x86 with Integrated AI Coprocessor Mike Thomson Linley Fall Processor Conference CPU, Neural and Performance Engineer October 20 – 29, 2020 [email protected] Centaur Technology Background • 25-year-old startup in Austin, owned by VIA Technologies • We design, from scratch, low-cost x86 processors • Everything to produce a custom x86 SoC with ~100 people • Architecture, logic design, and microcode • Design, verification, and layout • Physical build, fab interface, and tape-out • Designs shipped by Tier 1 OEM’s 2 ©2020 Centaur Technology. All Rights Reserved CHA Platform Overview 194 mm2 in TSMC 16 nm FFC technology I/O & PCIe (44 lanes) CHA: Server-class x86-64 SoC • 8 cores (x86-64) CT-AIC Interconnect rings • 16MB L3 cache RAM • 4 DDR4-3200 controllers • 44 PCIe lanes CT-AIC 4 16MB 4 • AVX512 Compute cores L3 cores bus & debug socket dual • Multi-socket support • Haswell-level IPC CT-AIC • CT-AIC: Centaur AI Coprocessor RAM World’s first high-performance x86 4x DDR controllers processor with integrated AI coprocessor! 3 ©2020 Centaur Technology. All Rights Reserved Centaur AIC Overview CT-AIC: Centaur AI Coprocessor • 20 TOPS CT-AIC • 4096-byte wide SIMD RAM • 16MB SRAM (20 TB/s) CT-AIC • 2 DMA ports Compute • INT8, UINT8, INT16, BFloat16 • 2.5 GHz CT-AIC RAM Real, working silicon with MLPerf1 benchmark suite results! 1 MLPerf name and logo are trademarks. See www.mlperf.org for more information. 4 ©2020 Centaur Technology. All Rights Reserved x86 + AIC = Performance, Flexibility Misc I/O 44 lanes PCIe • Low latency Southbridge PCIe I/O functions • High throughput • Integrated solution CT-AIC x86 core L3 L3 x86 core • Ubiquitous x86-64 platform x86 core L3 L3 x86 core • Expandable ML performance x86 core L3 L3 x86 core • Multi-socket x86 core L3 L3 x86 core • Multi-system Multisocket rd • PCIe: support for 3 -party expansion cards 4x DDR4-3200 DRAM controllers 5 ©2020 Centaur Technology. -

For Hardware Verification

Industrial Use (of ACL2) for Hardware Verification Warren A. Hunt, Jr. Joint work with: Bob Boyer, Marijn Heule, Matt Kaufmann, J Moore, and many others (identified later in talk) June, 2017 Computer Sciences Department Centaur Technology, Inc. University of Texas 7600-C N. Capital of Texas Hwy 2317 Speedway, M/S D9500 Suite 300 Austin, TX 78712-0233 Austin, Texas 78731 [email protected] [email protected] TEL: +1 512 471 9748 TEL: +1 512 418 5797 FAX: +1 512 471 8885 FAX: +1 512 794 0717 September 1, 2017 1 / 25 Ecosystem We collaborate with government and industry. Our own research includes: Development of core technologies Application of these technologies in different domains Commercial Use: Validation of processor design (∼10 FV personnel, 20-30 additional users) at and September 1, 2017 2 / 25 What is Hardware Verification? What is Formal Hardware Verification? Demonstrating that design models have desired functional properties: Combinational circuit implements some function (e.g., addition) Sequential circuit satisfies some property (e.g., no deadlock) A design implements a specification (e.g., cooperating FMs implement an ISA) User-facing physical realization provides predictable and secure operation To assure desired functionality, one must also account for: Circuit delays { depends on implementation technology Power consumption { concerns the size, speed, and work Transient errors { from energy events We present a simple example, common contemporary features, validation procedures and mechanisms, and future needs. September 1, 2017 3 / 25 Is Machine-Checked Formal Verification Even Possible? Is Complete Netlist Verification Possible? When a design is simplified to a netlist, it is possible to mechanically verify that a transistor- or gate-level netlist of a processor design meets its abstract functional specification. -

Centaur Verification Approach

Centaur Verification Approach Jared Davis, Warren Hunt, Jr., Anna Slobodova, Sol Swords August, 2011 Computer Sciences Department Centaur Technology, Inc. University of Texas 7600-C N. Capital of Texas Hwy 1 University Way, M/S C0500 Suite 300 Austin, TX 78712-0233 Austin, Texas 78731 [email protected] [email protected] TEL: +1 512 471 9748 TEL: +1 512 418 5797 FAX: +1 512 471 8885 FAX: +1 512 794 0717 Page 1 (Centaur Technology, UT Austin) Centaur Verification Approach August, 2011 1 / 36 Outline 1 Core Technology: ACL2 2 The ACL2 Theorem Prover 3 ACL2 Clause Processors 4 The GL clause processor 5 Symbolic Simulation in ACL2 6 Symbolic Simulation Proof Examples 7 A Simple Embedded Language 8 The Centaur Verification Tool Relationships 9 The Verilog-to-E Translator 10 ECC Example 11 Centaur Formal Verification Toolflow 12 The Centaur Nano Multiplier Units 13 Conclusion Page 2 (Centaur Technology, UT Austin) Centaur Verification Approach August, 2011 2 / 36 Introduction We have verified add, sub, multiply, divide (microcode), compare, convert, logical, shuffle, blend, insert, extract, min-max instructions from Centaur's 64-bit, X86-compatible, NanoTM microprocessor. Media unit implements over 100 X86 SSE and X87 instructions. Multiplier implements scalar & packed X86, X87, and FMA. For our verifications, we use a combination of AIG- and BDD-based symbolic simulation, case splitting, and theorem proving. We create a theorem for each instruction to be verified. We use ACL2 to mechanically verify each proposed theorem. We discuss our verification approach for formally verifying execution-unit instructions for the Centaur NanoTM { the NanoTM is used by Dell, HP, Lenovo, OLPC, and Samsung. -

Via C3 Nehemiah

EVALUATION OF VIA C3 NEHEMIAH RANDOM NUMBER GENERATOR PREPARED BY Cryptography Research, Inc. 607 Market St., 5th Floor San Francisco, CA 94105 (415) 397-0123 Last Revision: February 27, 2003 Information in this white paper is provided without guarantee or warranty of any kind. This review was performed for VIA Technologies, Inc. but represents the findings and opinions of Cryptography Research, Inc. and may or may not reflect opinions of VIA Technologies, Inc. or Centaur Technology. Characteristics of the C3 Nehemiah may vary with design or implementation changes. © 2003 by Cryptography Research, Inc. VIA C3 NEHEMIAH RNG EVALUATION Contents 1. Introduction _________________________________________________ 4 2. Background__________________________________________________ 4 2.1. Applications Requiring Randomness ______________________________________ 4 2.2. Sources of Randomness__________________________________________________ 5 3. Design Overview______________________________________________ 7 3.1. Entropy Source ________________________________________________________ 7 3.2. Digital Hardware and Firmware _________________________________________ 10 4. Entropy Analysis ____________________________________________ 11 4.1. Background on Entropy ________________________________________________ 11 4.2. Tools and Approaches__________________________________________________ 12 4.3. Aspects Studied _______________________________________________________ 13 4.4. Results ______________________________________________________________ 14 4.4.1. -

VIA C3 Nehemiah Processor Datasheet

VIA C3 Nehemiah Processor Datasheet Revision 1.13 September 29, 2004 VIA TECHNOLOGIES, INC. VIA C3 Nehemiah Processor Datasheet September 29, 2004 This is Version 1.13 of the VIA C3 Nehemiah Processor Datasheet. © 2003-2004 VIA Technologies, Inc All Rights Reserved. VIA reserves the right to make changes in its products without notice in order to improve design or performance characteristics. This publication neither states nor implies any representations or warranties of any kind, including but not limited to any implied warranty of merchantability or fitness for a particular purpose. No license, express or implied, to any intellectual property rights is granted by this document. VIA makes no representations or warranties with respect to the accuracy or completeness of the con- tents of this publication or the information contained herein, and reserves the right to make changes at any time, without notice. VIA disclaims responsibility for any consequences resulting from the use of the information included herein. Cyrix and VIA C3 are trademarks of VIA Technologies, Inc. CentaurHauls is a trademark of Centaur Technology Corporation. AMD, AMD K6, and Athlon are trademarks of Advanced Micro Devices, Inc. Microsoft and Windows are registered trademarks of Microsoft Corporation. Intel, Pentium, Celeron, and MMX are registered trademarks of Intel Corporation. Other product names used in this publication are for identification purposes only and may be trade- marks of their respective companies. LIFE SUPPORT POLICY VIA processor products are not authorized for use as components in life support or other medical devices or systems (hereinafter life support devices) unless a specific written agreement pertaining to such intended use is executed be- tween the manufacturer and an officer of VIA.