A Comparative Study of File-Type Identification Techniques

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Integrated Voice Evacuation System Vx-3000 Series

SETTING SOFTWARE INSTRUCTIONS For Authorized Advanced End User INTEGRATED VOICE EVACUATION SYSTEM VX-3000 SERIES Tip In this manual, the VX-3004F/3008F/3016F Voice Evacuation Frames are collectively referred to as "VX-3000F." Thank you for purchasing TOA’s Integrated Voice Evacuation System. Please carefully follow the instructions in this manual to ensure long, trouble-free use of your equipment. TABLE OF CONTENTS 1. SOFTWARE OUTLINE ................................................................................... 3 2. NOTES ON PERFORMING SETTINGS .............................................. 3 2.1. System Requirements .......................................................................................... 3 2.2. Notes .................................................................................................................... 3 3. SOFTWARE SETUP ........................................................................................ 4 3.1. Setting Software Installation ................................................................................. 4 3.2. Uninstallation ....................................................................................................... 6 4. STARTING THE VX-3000 SETTING SOFTWARE ....................... 7 5. SETTING ITEMS ................................................................................................ 8 5.1. Setting Item Button Configuration ........................................................................ 8 5.2. Menu Bar ............................................................................................................. -

Secrets of Powershell Remoting

Secrets of PowerShell Remoting The DevOps Collective, Inc. This book is for sale at http://leanpub.com/secretsofpowershellremoting This version was published on 2018-10-28 This is a Leanpub book. Leanpub empowers authors and publishers with the Lean Publishing process. Lean Publishing is the act of publishing an in-progress ebook using lightweight tools and many iterations to get reader feedback, pivot until you have the right book and build traction once you do. © 2016 - 2018 The DevOps Collective, Inc. Also By The DevOps Collective, Inc. Creating HTML Reports in Windows PowerShell A Unix Person’s Guide to PowerShell The Big Book of PowerShell Error Handling DevOps: The Ops Perspective Ditch Excel: Making Historical and Trend Reports in PowerShell The Big Book of PowerShell Gotchas The Monad Manifesto, Annotated Why PowerShell? Windows PowerShell Networking Guide The PowerShell + DevOps Global Summit Manual for Summiteers Why PowerShell? (Spanish) Secrets of PowerShell Remoting (Spanish) DevOps: The Ops Perspective (Spanish) The Monad Manifesto: Annotated (Spanish) Creating HTML Reports in PowerShell (Spanish) The Big Book of PowerShell Gotchas (Spanish) The Big Book of PowerShell Error Handling (Spanish) DevOps: WTF? PowerShell.org: History of a Community Contents Secrets of PowerShell Remoting ..................................... 1 Remoting Basics ................................................ 3 What is Remoting? ............................................ 3 Examining Remoting Architecture .................................. 3 Enabling -

Luno Tools Run Command Documentation Page 1/6

Luno Tools Run Command documentation Page 1/6 Luno Tools Run Command Description Run Command aims to be a user-friendly way to run command line applications, command line system calls and single line shell scripts. Setup 1. Build a working command outside Switch It is important to start with a command which achieves your task and which needs to work outside Switch. On Mac, this means you build and test the command in the Terminal App. On Windows, you build the command in “Command Prompt” (Cmd.exe) or “Windows Powershell”. By example, we can build a command which retrieves the permissions of one file and stores it in another file. On mac we can achieve this using the following command: ls -l /path/to/inputfile.txt > /path/to/outputfolder/filename.txt And on Windows Command Prompt using this command : icacls -l C:\path\to\inputfile.txt > C:\path\to\outputfolder\filename.txt If you have issues with your command at this stage (you don’t achieve the output you want, you have errors, …), then the issue is not related to the “Run Command” app itself. The people with the most knowledge about your issue are the people who are expert at the command line application, command line system call or shell script you are trying to use. Usually, there are help pages, support forums, support people specific for these applications who can help you. Alternatively, you can also ask help on the Enfocus forums. 2. Handle spaces correctly When working with these kinds of commands a space is used to separate different parts of the command, by example a space is placed between the command name and the path to the inputfile. -

Chronosync Manual for Macos

ChronoSync Manual for macOS Econ Technologies, Inc. P.O. Box 195780 Winter Springs, FL 32719 www.econtechnologies.com copyright 2002-2018 If you can’t find the information you’re looking for in this manual, you should also refer to ChronoSync’s Help, located within the application, and our website in the Guides, Tech Notes, and Documentation sections. TABLE OF CONTENTS CHAPTER ONE.................................................................................................................. 8 Introduction .................................................................................................................................................. 8 Welcome to ChronoSync ........................................................................................................................... 9 CHAPTER TWO ............................................................................................................... 10 Basic Overview .......................................................................................................................................... 10 ChronoSync Documents .......................................................................................................................... 11 Synchronizer Tasks ............................................................................................................................. 11 Task Container .................................................................................................................................... 11 ChronoSync Organizer -

Macintosh Library Modules Release 2.4.1

Macintosh Library Modules Release 2.4.1 Guido van Rossum Fred L. Drake, Jr., editor 30 March 2005 Python Software Foundation Email: [email protected] Copyright c 2001-2004 Python Software Foundation. All rights reserved. Copyright c 2000 BeOpen.com. All rights reserved. Copyright c 1995-2000 Corporation for National Research Initiatives. All rights reserved. Copyright c 1991-1995 Stichting Mathematisch Centrum. All rights reserved. See the end of this document for complete license and permissions information. Abstract This library reference manual documents Python’s extensions for the Macintosh. It should be used in conjunction with the Python Library Reference, which documents the standard library and built-in types. This manual assumes basic knowledge about the Python language. For an informal introduction to Python, see the Python Tutorial; the Python Reference Manual remains the highest authority on syntactic and semantic questions. Finally, the manual entitled Extending and Embedding the Python Interpreter describes how to add new extensions to Python and how to embed it in other applications. CONTENTS 1 Using Python on a Macintosh 1 1.1 Getting and Installing MacPython .................................. 1 1.2 The IDE ............................................... 2 1.3 The Package Manager ........................................ 3 2 MacPython Modules 5 2.1 macpath — MacOS path manipulation functions ......................... 5 2.2 macfs — Various file system services ............................... 5 2.3 ic — Access to Internet Config ................................... 8 2.4 MacOS — Access to Mac OS interpreter features .......................... 9 2.5 macostools — Convenience routines for file manipulation ................... 10 2.6 findertools — The finder’s Apple Events interface ...................... 10 2.7 EasyDialogs — Basic Macintosh dialogs ............................ 11 2.8 FrameWork — Interactive application framework ........................ -

A Hunting Story: What’S Hiding in Powershell Scripts and Pastebin Code? Saudi Actors

RECORDED FUTURE THREAT INTELLIGENCE REPORT A Hunting Story: What’s Hiding in PowerShell Scripts and Pastebin Code? Saudi Actors By Levi Gundert Vice President of Intelligence and Strategy Summary › U.S. law enforcement recently released a flash bulletin about nation-state adversaries attacking public/private entities using specific TTPs (spearphishing, PowerShell scripts, base64 encoding, etc.). › A hunt for similar TTPs in Recorded Future produces a wealth of recent intelligence, specifically around PowerShell use and base64 string encoding found in PowerShell scripts and code hosted on Pastebin. › Pastebin is routinely used to stage code containing encoded strings that convert to malware, and mainstream business resources like Amazon’s AWS and Microsoft’s Office 365 are equally likely future destinations for staging malicious strings used in targeted attacks › The Arabic speaking actor operating the njRAT instance connecting to osaam2014.no-ip[.]biz may be the same actor operating the njRAT instance that previously connected to htomshi.zapto[.]org. Recorded Future proprietary intelligence indicates with a high degree of confidence that both actors are located in Saudi Arabia. › Hunting in Farsight Security’s passive DNS data produces useful DNS TXT record examples, specifically base64 encoded text records, which may be used in PowerShell Empire scripts. › Enterprise employees fetch favicon.ico files (web browser address bar tab icons) from mainstream websites thousands to millions of times daily making detection of rogue .ico files particularly tricky. › Since 2014 there have been over 550 PowerShell command references in code repositories, over 2,800 references in paste sites, and over 3,000 social media references collected and analyzed by Recorded Future. -

![[MS-FSCC-Diff]: File System Control Codes](https://docslib.b-cdn.net/cover/2780/ms-fscc-diff-file-system-control-codes-2372780.webp)

[MS-FSCC-Diff]: File System Control Codes

[MS-FSCC-Diff]: File System Control Codes Intellectual Property Rights Notice for Open Specifications Documentation ▪ Technical Documentation. Microsoft publishes Open Specifications documentation (“this documentation”) for protocols, file formats, data portability, computer languages, and standards support. Additionally, overview documents cover inter-protocol relationships and interactions. ▪ Copyrights. This documentation is covered by Microsoft copyrights. Regardless of any other terms that are contained in the terms of use for the Microsoft website that hosts this documentation, you can make copies of it in order to develop implementations of the technologies that are described in this documentation and can distribute portions of it in your implementations that use these technologies or in your documentation as necessary to properly document the implementation. You can also distribute in your implementation, with or without modification, any schemas, IDLs, or code samples that are included in the documentation. This permission also applies to any documents that are referenced in the Open Specifications documentation. ▪ No Trade Secrets. Microsoft does not claim any trade secret rights in this documentation. ▪ Patents. Microsoft has patents that might cover your implementations of the technologies described in the Open Specifications documentation. Neither this notice nor Microsoft's delivery of this documentation grants any licenses under those patents or any other Microsoft patents. However, a given Open Specifications document might be covered by the Microsoft Open Specifications Promise or the Microsoft Community Promise. If you would prefer a written license, or if the technologies described in this documentation are not covered by the Open Specifications Promise or Community Promise, as applicable, patent licenses are available by contacting [email protected]. -

Spoofing Downloaded Filename's Extension in Chromium

SAFE SECURITY | 2021 Spoofing Downloaded Filename’s Extension in Chromium CVE 2021-21123 Archie Midha | Vallari Sharma www.safe.security V.15.06.21.01 Table of Contents 1 2 3 Introduction About the Exploitation ● What is Spoofing Vulnerability ● Prerequisites ● The Payload (HTML ● How does Spoofing work ● Impact of the Webpage) ● What is a Filename Vulnerability Extension ● Proof of Concept ● Spoofing the Downloaded Filename’s Extension PAGE - 05 PAGE - 07 PAGE - 03 5 4 References Prevention and Mitigation PAGE - 13 PAGE - 13 Safe Security 2021 2 CVE 2021-21123 Spoofing Downloaded Filename’s Extension in Chromium 1. Introduction 1.1 What is Spoofing Spoofing is an umbrella term for the type of behaviour that involves a cybercriminal impersonating a trusted entity or a device to access, destroy or misuse the victim’s information. Think of it as an online scammer disguising his identity as someone trustable. Spoofing can be done on various communication channels and can involve different levels of technical complexity. Spoofing attacks usually utilise an element of social engineering, i.e psychologically manipulating the attacker’s targets through their emotions like fear, greed or sometimes even lack of a technical knowledge. Safe Security 2021 3 CVE 2021-21123 Spoofing Downloaded Filename’s Extension in Chromium 1.2 Spoofing typically uses two elements – How does ● The spoof itself; like a fake email or website made to look legitimate Spoofing work ● The social engineering aspect, i.e nudging victims to take action Spoofing attacks are generally accompanied by phishing attacks. For example, you receive a mail that you have won a lottery. -

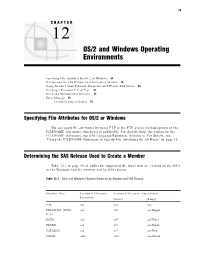

OS/2 and Windows Operating Environments

89 CHAPTER 12 OS/2 and Windows Operating Environments Specifying File Attributes for OS/2 or Windows 89 Determining the SAS Release Used to Create a Member 89 Using Version 8 Short Filename Extensions on FAT-style Disk Drives 90 Creating a Transport File on Tape 91 OS/2 and Windows Idiosyncrasies 91 Error Message 91 Encrypted data is invalid 91 Specifying File Attributes for OS/2 or Windows You can apply file attributes by using FTP or the FTP access method options in the FILENAME statement, whichever is applicable. For details about the syntax for the FILENAME statement, see SAS Language Reference: Dictionary. For details, see “Using the FILENAME Statement to Specify File Attributes for All Hosts” on page 32. Determining the SAS Release Used to Create a Member Table 12.1 on page 89 identifies the supported file types that are created on the OS/2 or the Windows host by member and by SAS release: Table 12.1 OS/2 and Windows Filename Extension by Member and SAS Release Member Type Version 6 Filename Version 8 Filename Extensions1 Extension (Short) (Long) SAS .sas .sas .sas PROGRAM (DATA .ss2 .ss7 .sas7bpgm step) DATA .sd2 .sd7 .sas7bdat INDEX .si2 .si7 .sas7bndx CATALOG .sc2 .sc7 .sas7bcat MDDB .sm2 .sm7 .sas7bmdb 90 Using Version 8 Short Filename Extensions on FAT-style Disk Drives R Chapter 12 Member Type Version 6 Filename Version 8 Filename Extensions1 Extension (Short) (Long) DMDB .sb2 .s7m .sas7bdmd PROC SQL view .sv2 .sv7 .sas7bvew 1 For details about OS/2 and Windows Version 8 short and long filename extensions, see “Using Version 8 Short Filename Extensions on FAT-style Disk Drives” on page 90. -

Tcpware for Openvms User's Guide

TCPware® for OpenVMS User's Guide Part Number: N-6004-60-NN-A January 2014 This manual describes how to use the network services provided by the TCPware for OpenVMS product. Revision/Update: This is a revised manual. Operating System/Version: VAX/VMS V5.5-2 or later, OpenVMS VAX V6.0 or later, OpenVMS Alpha V6.1 or later, or OpenVMS I64 V8.2 or later Software Version: 6.0 Process Software Framingham, Massachusetts USA i The material in this document is for informational purposes only and is subject to change without notice. It should not be construed as a commitment by Process Software. Process Software assumes no responsibility for any errors that may appear in this document. Use, duplication, or disclosure by the U.S. Government is subject to restrictions as set forth in subparagraph (c)(1)(ii) of the Rights in Technical Data and Computer Software clause at DFARS 252.227-7013. The following third-party software may be included with your product and will be subject to the software license agreement. Network Time Protocol (NTP). Copyright © 1992 by David L. Mills. The University of Delaware makes no representations about the suitability of this software for any purpose. Point-to-Point Protocol. Copyright © 1989 by Carnegie-Mellon University. All rights reserved. The name of the University may not be used to endorse or promote products derived from this software without specific prior written permission. Redistribution and use in source and binary forms are permitted provided that the above copyright notice and this paragraph are duplicated in all such forms and that any documentation, advertising materials, and other materials related to such distribution and use acknowledge that the software was developed by Carnegie Mellon University. -

X-Ways Forensics & Winhex Manual

X-Ways Software Technology AG X-Ways Forensics/ WinHex Integrated Computer Forensics Environment. Data Recovery & IT Security Tool. Hexadecimal Editor for Files, Disks & RAM. Manual Copyright © 1995-2021 Stefan Fleischmann, X-Ways Software Technology AG. All rights reserved. Contents 1 Preface ..................................................................................................................................................1 1.1 About WinHex and X-Ways Forensics.........................................................................................1 1.2 Legalities.......................................................................................................................................2 1.3 License Types ...............................................................................................................................4 1.4 More differences between WinHex & X-Ways Forensics............................................................5 1.5 Getting Started with X-Ways Forensics........................................................................................6 2 Technical Background ........................................................................................................................7 2.1 Using a Hex Editor........................................................................................................................7 2.2 Endian-ness...................................................................................................................................8 2.3 Integer -

Module 8 File Management

Module 8 File Management 1 Module Objectives Develop file management strategies Explore files and folders Create, name, copy, move, and delete folders Name, copy, move, and delete files Cyber Safety tips for managing files and folders Key to Symbols = Advanced Topic = Cyber Safety = Hints and Tips 2 What are files and folders? How do I manage them? Managing Files and Folders What are files? A file is a collection of information “data” that we give a name and store somewhere in the computer. Files can be stored in USB drives, Compact discs (CDs), Digital video discs (DVDs) and Hard disks. Files are organized and managed inside folders. So what are folders? Folders are objects “cabinet drawers” that can house many files inside them. Think of folders, like the rooms you have in your house, every room is dedicated for a very specific purpose. 3 One of the main programs to manage and show files in windows is Windows Explorer. There are many ways to start Windows Explorer. Press start, accessories and then Windows Explorer. 4 Exploring Files and Folders Viewing folder content in Windows Explorer. The name of the selected folder Click to expand Contents of the selected folder 5 File Names and Extensions Files will have a name and an extension. The file name describes what the file is about. A file extension (usually 3 or 4 letters after the . ) tells ouy what kind of file it is (or what program uses it. This file (.jpg) is a picture This file (.docx) works Common File Extensions with Microsoft Word Documents .doc or .docx (Microsoft Word files) .txt (plain text (Notepad) documents) Pictures .jpg or .jpeg .gif .bmp .png Music .mp3 (works with most music playing devices, such as iPods or MP3 players) Movies and Videos .avi .wmv .mp4 Program Files .exe (An “executable” file.