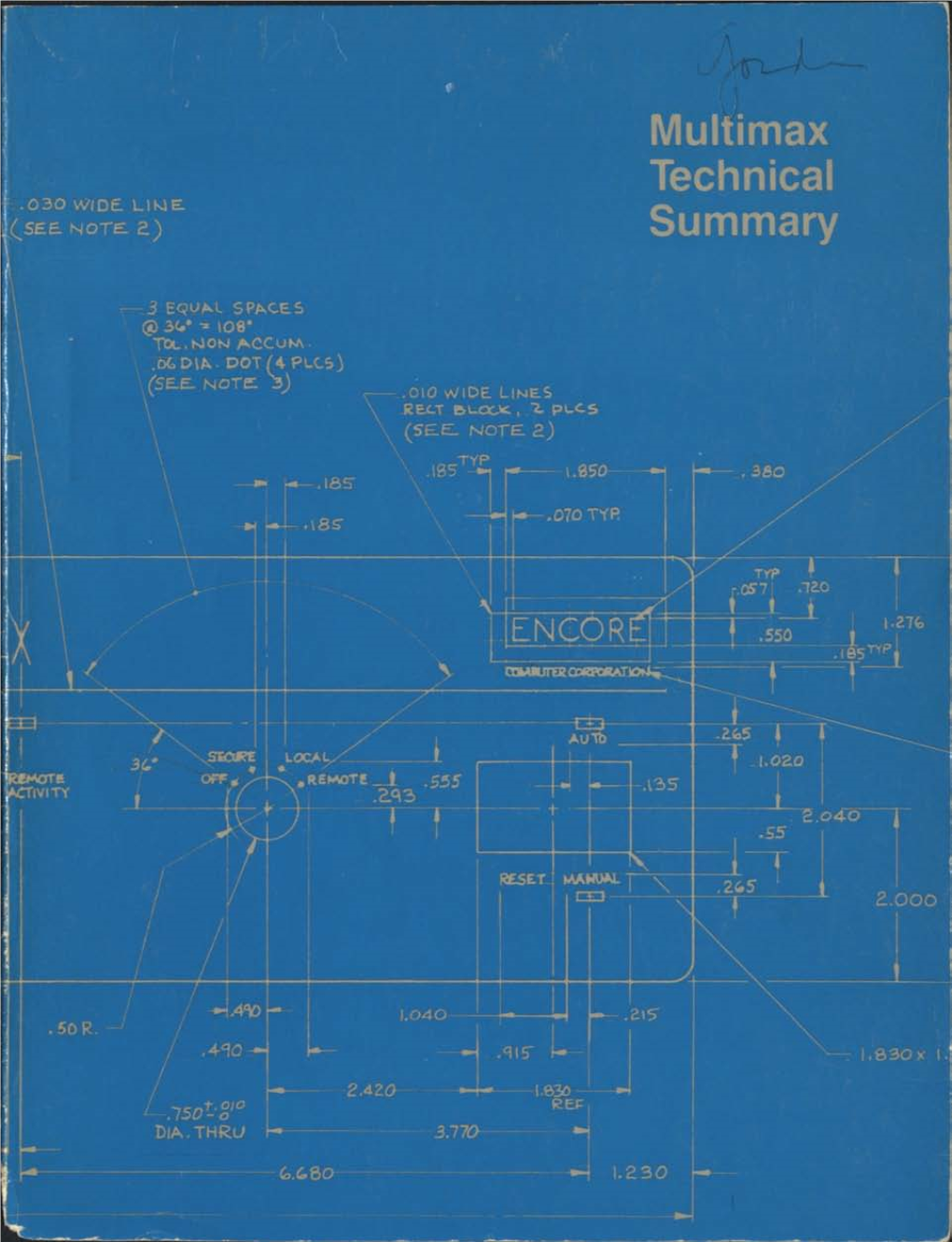

Multimax Technical Summary Contents

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Using the GNU Compiler Collection (GCC)

Using the GNU Compiler Collection (GCC) Using the GNU Compiler Collection by Richard M. Stallman and the GCC Developer Community Last updated 23 May 2004 for GCC 3.4.6 For GCC Version 3.4.6 Published by: GNU Press, a division of the Free Software Foundation, 59 Temple Place Suite 330, Boston, MA 02111-1307 USA. Website: www.gnupress.org General: [email protected] Orders: [email protected] Tel 617-542-5942 Fax 617-542-2652 Last printed October 2003 for GCC 3.3.1. Printed copies are available for $45 each. Copyright © 1988, 1989, 1992, 1993, 1994, 1995, 1996, 1997, 1998, 1999, 2000, 2001, 2002, 2003, 2004 Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with the Invariant Sections being "GNU General Public License" and "Funding Free Software", the Front-Cover texts being (a) (see below), and with the Back-Cover Texts being (b) (see below). A copy of the license is included in the section entitled "GNU Free Documentation License". (a) The FSF's Front-Cover Text is: A GNU Manual (b) The FSF's Back-Cover Text is: You have freedom to copy and modify this GNU Manual, like GNU software. Copies published by the Free Software Foundation raise funds for GNU development. Short Contents: Introduction; 1 Programming Languages Supported by GCC; 2 Language Standards Supported by GCC; 3 GCC Command Options -

Emerging Technologies Multi/Parallel Processing

Emerging Technologies Multi/Parallel Processing Mary C. Kulas New Computing Structures Strategic Relations Group December 1987 For Internal Use Only Copyright © 1987 by Digital Equipment Corporation. Printed in U.S.A. The information contained herein is confidential and proprietary. It is the property of Digital Equipment Corporation and shall not be reproduced or copied in whole or in part without written permission. This is an unpublished work protected under the Federal copyright laws. The following are trademarks of Digital Equipment Corporation, Maynard, MA 01754. DECpage LN03 This report was produced by Educational Services with DECpage and the LN03 laser printer. Contents: Acknowledgments. Abstract. Executive Summary. I. Analysis. A. The Players: 1. Number and Status; 2. Funding; 3. Strategic Alliances; 4. Sales: a. Revenue/Units Installed, b. European Sales. B. The Product: 1. CPUs; 2. Chip; 3. Bus; 4. Vector Processing; 5. Operating System; 6. Languages; 7. Third-Party Applications; 8. Pricing. C. IBM and Other Major Computer Companies. D. Why Success? Why Failure? E. Future Directions. II. Company/Product Profiles. A. Multi/Parallel Processors: 1. Alliant; 2. Astronautics; 3. Concurrent; 4. Cydrome; 5. Eastman Kodak; 6. Elxsi; 7. Encore; 8. Flexible; 9. Floating Point Systems - M64 line; 10. International Parallel; 11. Loral; 12. Masscomp; 13. Meiko; 14. Multiflow; 15. Sequent. B. Massively Parallel: 1. Ametek; 2. Bolt Beranek & Newman Advanced Computers -

An Implementation of Multiprocessor Path Pascal

AN IMPLEMENTATION OF MULTIPROCESSOR PATH PASCAL BY BRIAN JOHN HAFNER B.S., University of Illinois, 1986 THESIS Submitted in partial fulfillment of the requirements for the degree of Master of Science in Computer Science in the Graduate College of the University of Illinois at Urbana-Champaign, 1991 Urbana, Illinois CHAPTER 1. INTRODUCTION Path Pascal [Kols84] is a non-preemptive concurrent computer language. It is a superset of Berkeley Pascal [Joy84] with additional constructs for specifying processes, data-encapsulating objects, and path expressions [Camp76] which synchronize processes with respect to objects. Objects are similar in concept to monitors [Deit84], except that the number of processes allowed in an object is not limited to one, but rather is controlled by the path expressions. This thesis describes an implementation of a multiprocessor Path Pascal compiler. Multiprocessor Path Pascal differs from the previous single processor implementation [Grun85] because it allows Path Pascal processes to truly execute in parallel. The single processor implementation simulates parallelism by context switching among the Path Pascal processes; however, it never executes more than one Path Pascal process at a time. Throughout the thesis, the terms "multiprocessor" and "single processor" refer to the executables produced by the compilers; both compilers use a single processor during compilation. There are three primary goals for the multiprocessor implementation. First, it should demonstrate scalable performance as the number of processors is increased. Second, it should preserve the semantics used by the single processor version. Finally, the multiprocessor implementation should share as much code as possible with the single processor implementation. -

Accessionindex: TCD-SCSS-T.20141120.007 Accession Date: 20-Nov-2014 Accession By: Dr.Brian Coghlan Object Name: NS32000 NSU-3203

AccessionIndex: TCD-SCSS-T.20141120.007 Accession Date: 20-Nov-2014 Accession By: Dr. Brian Coghlan Object name: NS32000 NSU-3203256T-10 Development Boards Vintage: c.1984 Synopsis: Three NS32000 development boards in original packing boxes, including documentation and ancillaries. Board 1 S/N: H280036025, Board 2 S/N: H280036034, Board 3 S/N: H420016026. Description: The NS16000 series (later renamed NS32000 series) was the first microprocessor to include demand-paged virtual memory. It was based on a very attractive 32-bit architecture and program model with 8 general-purpose registers, some special 24-bit registers like the PC, two stack, a frame and an interrupt base pointer, and a complex (CISC) instruction set, including coprocessor instructions, with 2-operand instructions, memory-to-memory operations, flexible addressing modes, and variable-length byte-aligned instruction encoding. Addressing modes could involve up to two displacements and two memory indirections per operand as well as scaled indexing. Perhaps because of this, there were fewer instructions than many RISC machines. The chipset included a CPU, FPU, MMU, ICU (for interrupts) and TCU (for timing), with a multiplexed address/data bus. The principal chips were simply wired together on this bus. The CPU suffered from persistent bugs that greatly delayed full production, and was bypassed in the market. In the early 1980s a significant amount of research work in the Dept. of Computer Science centred on the NS32000 series (see elsewhere in this collection), and these boards supported related testing. Two NS32000 development boards are in one original packing box marked NSU-3203256T-10, S/N: H280036034 (and so came with development board 2), including documentation and ancillaries. -

Acorn ABC 210/Cambridge Workstation

ACORN COMPUTERS LTD. ACW 443 SERVICE MANUAL 0420,001 Issue 1 January 1987 ACW SERVICE MANUAL Title: ACW SERVICE MANUAL Reference: 0420,001 Issue: 1 Replaces: 0.56 Applicability: Product Support Distribution: Authorised Service Agents Status: for publication Author: C.Watters, J.Wilkins and Others Date: 7 January 1987 Published by: Acorn Computers Ltd, Fulbourn Road, Cherry Hinton, Cambridge, CB1 4JN, England Within this publication the term 'BBC' is used as an abbreviation for 'British Broadcasting Corporation'. Copyright ACORN Computers Limited 1985 Neither the whole or any part of the information contained in, or the product described in, this manual may be adapted or reproduced in any material form except with the prior written approval of ACORN Computers Limited ( ACORN Computers). The product described in this manual and products for use with it, are subject to continuous development and improvement. All information of a technical nature and particulars of the product and its use (including the information and particulars in this manual) are given by ACORN Computers in good faith. However, it is acknowledged that there may be errors or omissions in this manual. A list of details of any amendments or revisions to this manual can be obtained upon request from ACORN Computers Technical Enquiries. ACORN Computers welcome comments and suggestions relating to the product and this manual. All correspondence should be addressed to:- Technical Enquiries ACORN Computers Limited Newmarket Road Cambridge CB5 8PD All maintenance and service on the product must be carried out by ACORN Computers' authorised service agents. ACORN Computers can accept no liability whatsoever for any loss or damage caused by service or maintenance by unauthorised personnel. -

Retrobrew Computers Forum Development Seems a Big Hurdle

Subject: National Semi NS32000 series -- Any interest? Posted by jcoffman on Fri, 13 Mar 2020 18:45:37 GMT For some time now I've been considering playing with an updated version of the 32000 series. SRAM memory chips are much bigger now, and the ECB bus looks like a good place to update to a 16-bit system. Here is a link to an S-100 system I built many years ago: http://www.cpu-ns32k.net/index.html The old chips (NS32016 &c.) are VERY expensive, if you can find them. The 32CG16 chips are cheaper, and do not require a separate TCU. I would be curious to know if there is anyone interested in playing with this old National Semiconductor line. --John Subject: Re: National Semi NS32000 series -- Any interest? Posted by jcoffman on Fri, 13 Mar 2020 18:46:50 GMT This is the direct link to my S-100 system: http://www.cpu-ns32k.net/John.html Subject: Re: National Semi NS32000 series -- Any interest? Posted by Jonas on Fri, 13 Mar 2020 20:59:57 GMT Hi John, I have never done anything with the NS32000 series, but I followed the discussion at this forum about NS32532, NS32CG160 et cetera a few years ago. I actually bought ten NS32CG160 from Utsource and five NS32081 from someone in Poland. Your board (yes, I have read most of the stuff at www.cpu-ns32k.net) is far, far beyond my capabilities. -

Jargon File, Version 4.0.0, 24 Jul 1996

JARGON FILE, VERSION 4.0.0, 24 JUL 1996 This is the Jargon File, a comprehensive compendium of hacker slang illuminating many aspects of hackish tradition, folklore, and humor. This document (the Jargon File) is in the public domain, to be freely used, shared, and modified. There are (by intention) no legal restraints on what you can do with it, but there are traditions about its proper use to which many hackers are quite strongly attached. Please extend the courtesy of proper citation when you quote the File, ideally with a version number, as it will change and grow over time. (Examples of appropriate citation form: "Jargon File 4.0.0" or "The on-line hacker Jargon File, version 4.0.0, 24 JUL 1996".) The Jargon File is a common heritage of the hacker culture. Over the years a number of individuals have volunteered considerable time to maintaining the File and been recognized by the net at large as editors of it. Editorial responsibilities include: to collate contributions and suggestions from others; to seek out corroborating information; to cross-reference related entries; to keep the file in a consistent format; and to announce and distribute updated versions periodically. Current volunteer editors include: Eric Raymond [email protected] Although there is no requirement that you do so, it is considered good form to check with an editor before quoting the File in a published work or commercial product. We may have additional information that would be helpful to you and can assist you in framing your quote to reflect not only the letter of the File but its spirit as well. -

Retrobrew Computers Forum

Subject: What new retrobrew projects are people interested in? Posted by lynchaj on Sat, 03 Jun 2017 21:41:45 GMT What new retrobrew projects are people interested in? Subject: Re: What new retrobrew projects are people interested in? Posted by gkaufman on Sun, 04 Jun 2017 16:41:17 GMT I'd love to see some of the early single board systems re-created like: Intel 4004 based SIM-4 Prolog PLS-401A 4004 based or later 4040 based version Original 8060 SC/MP board - Gary Subject: Re: What new retrobrew projects are people interested in? Posted by dgf1966 on Sun, 04 Jun 2017 17:20:48 GMT Some other CPUs worth consideration for an SBC project are: TMS9900 / TMS9995 or NS32016 / NS32CG16V regards David Subject: Re: What new retrobrew projects are people interested in? Posted by jcoffman on Mon, 05 Jun 2017 00:19:51 GMT David, I would love to do an NS32016 system -- but ... The prices people want to get for some of those old chips are outrageous. What about s/w? --John Subject: Re: What new retrobrew projects are people interested in? Posted by dgf1966 on Mon, 05 Jun 2017 06:02:10 GMT Hi John, Agreed, some sellers are charging silly money for this CPU, but here and there some sellers are selling at a more reasonable price. I think I paid about UK £20 for my 10 MHz plastic version from the following eBay seller in the UK. -

Retrobrew Computers Forum a Z80

Subject: 8086 maximum mode SBC Posted by lynchaj on Sun, 30 Apr 2017 18:03:00 GMT Hi, I've been mulling over an 8086 maximum mode SBC based on the Intel datasheet. It is a simple computer with just CPU, RAM, ROM, & DUART. No PIC, PIT, DMA, and no wait state generator. Take a look at the schematic and tell me what you think. The PCB is fairly small, 2 layer, 5.525" x 4.700", which I am guessing would be fairly inexpensive to build. All the parts are PTH, so construction is easy. Note 1: I noticed there was a problem with the memory decoder so I fixed it and updated the schematic. Also included the memory decode truth table and the PCB layout file. Note 2: Argh! I thought about the IO decoder some more and realized if I made some minor changes I could use left over gates and eliminate the second 74LS688, so I updated the schematic and PCB layout (again). Subject: Re: 8086 maximum mode SBC Posted by lynchaj on Mon, 01 May 2017 11:42:56 GMT Hi, The 8086 maximum mode SBC is in no way intended to be IBM PC compatible. In fact, just the opposite. I'd like it to be a clean sheet design without any of the limitations of the IBM PC heritage design. So far, it is an 8086 CPU with 1MB SRAM, 256KB Flash ROM, a DUART which provides two UARTs and a parallel printer port. Also added a general purpose output latch (used to swap the 256KB Flash ROM in/out of memory after booting) and an IDE port (which is practically free on 16 bit x86 computers). -

Windowing Scheme in Hardware Allows Stripping of Memory Management Unit's Page Table Entries. High Ahead/Write Behind Operat

IEEE Transactions on Nuclear Science, Vol. NS-32, No. 4, August 1985 A 32-BIT COMPUTER FOR LARGE MEMORY APPLICATIONS ON THE FASTBUS R. Kelliner, J.P. Hong, and J.M. Blossom Los Alamos National Laboratory E-10 Data Systems, MS K488 Los Alamos, NM 87545 Abstract: A FASTBUS based 32-bit computer is being built at Los Alamos National Laboratory for use in systems requiring large fast memory in the FASTBUS environment. A separate local execution bus allows data reduction to proceed concurrently with other FASTBUS operations. The computer, which can operate in either master or slave mode, includes the National Semiconductor NS32032 chip set with demand paged memory management, floating point slave processor, interrupt control unit, timers, and time-of-day clock. The 16.0 megabytes of random access memory are interleaved to allow windowed direct memory access on and off the FASTBUS at 80 megabytes per second. Detailed Description: National Semiconductor's NS32000 chip set includes a powerful combination of functions supporting fast calculation and data reduction. The NS32032 CPU executes 1.0 million instructions per second when running on a 10 MHz clock, and addresses 16 megabytes of physical memory. Eight 32-bit general purpose registers and full 32-bit internal address and data paths allow efficient handling of 32-bit quantities. The 24-bit external address accesses 16 megabytes of physical address space. High level language support was designed into the very orthogonal instruction set replete with many addressing modes, and several data types. The memory management function is performed by the NS32082 MMU. -

Embedded Systems- an Overview

Embedded Systems - An Overview Prof. S.S.S.P. Rao Dept. of Computer Science & Engg. I.I.T. Bombay [email protected] http://www.cse.iitb.ac.in/~ssspr TOPICS • Introduction • Microcontrollers • Embedded Systems design issues • Conclusions A doctor is configuring a cardiac pacemaker inside his patient's chest while sitting 2 km away. Another person is travelling in a driverless car that takes him from Mumbai to New Delhi using its inbuilt navigation programme. It looks impossible and sounds like a fairy tale! Not really. Advances in technology have taken place at such a speed that these fictitious scenarios are likely to be translated into reality within a couple of years. Real-time operating systems (RTOS) and embedded systems are the major technologies that will play a major role in making the above fairy tales come true. Introduction to Embedded Systems What are embedded systems? • A computer (the programmable part) surrounded by other subsystems, sensors and actuators • The computer is a (small) part of a larger system. • The computer is called a microcontroller • Embedded systems are electronic systems that include an Application Specific Integrated Circuit or a microcontroller to perform a specific dedicated application. • An embedded system is pre-programmed to do a specific function, while a general purpose system could be used to run any program of your choice. Further, the embedded processor is only one component of the electronic system of which it is a part. It cooperates with the rest of the components to achieve the overall function. Why the sudden interest in embedded systems? Possible reasons: • Processors have shrunk in size with increased performance • Power consumption has drastically reduced. -

Open Source Used in Staros 21.19

Open Source Used In StarOS 21.19 Cisco Systems, Inc. www.cisco.com Cisco has more than 200 offices worldwide. Addresses, phone numbers, and fax numbers are listed on the Cisco website at www.cisco.com/go/offices. Text Part Number: 78EE117C99-1023749304 This document contains licenses and notices for open source software used in this product. With respect to the free/open source software listed in this document, if you have any questions or wish to receive a copy of any source code to which you may be entitled under the applicable free/open source license(s) (such as the GNU Lesser/General Public License), please contact us at [email protected]. In your requests please include the following reference number 78EE117C99-1023749304 Contents 1.1 libxml 2.9.2 1.1.1 Available under license 1.2 acpid 2.0.22 1.2.1 Available under license 1.3 net-snmp 5.1.1 1.3.1 Available under license 1.4 libnuma 2.0.11 1.4.1 Available under license 1.5 popt 1.5 1.5.1 Available under license 1.6 procps 3.2.6 1.6.1 Available under license 1.7 python 2.7.6 1.7.1 Available under license 1.8 openssh 7.6 1.8.1 Available under license 1.9 ftpd-bsd 0.3.2 1.9.1 Available under license 1.10 iconv 2.17 1.10.1 Available under license 1.11 procps 3.2.6 1.11.1 Available under license 1.12 dozer 6.4.1 1.12.1 Available under license 1.13 xmlrpc-c 1.06.38 1.13.1 Available under license 1.14 antlr-runtime 4.2 1.15 kexec-tools 2.0.14 1.15.1 Available under license 1.16 pcre 8.41 1.16.1 Available