ELO REGRESSION EXTENDING the ELO RATING SYSTEM a Thesis

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Here Should That It Was Not a Cheap Check Or Spite Check Be Better Collaboration Amongst the Federations to Gain Seconds

Chess Contents Founding Editor: B.H. Wood, OBE. M.Sc † Executive Editor: Malcolm Pein Editorial....................................................................................................................4 Editors: Richard Palliser, Matt Read Malcolm Pein on the latest developments in the game Associate Editor: John Saunders Subscriptions Manager: Paul Harrington 60 Seconds with...Chris Ross.........................................................................7 Twitter: @CHESS_Magazine We catch up with Britain’s leading visually-impaired player Twitter: @TelegraphChess - Malcolm Pein The King of Wijk...................................................................................................8 Website: www.chess.co.uk Yochanan Afek watched Magnus Carlsen win Wijk for a sixth time Subscription Rates: United Kingdom A Good Start......................................................................................................18 1 year (12 issues) £49.95 Gawain Jones was pleased to begin well at Wijk aan Zee 2 year (24 issues) £89.95 3 year (36 issues) £125 How Good is Your Chess?..............................................................................20 Europe Daniel King pays tribute to the legendary Viswanathan Anand 1 year (12 issues) £60 All Tied Up on the Rock..................................................................................24 2 year (24 issues) £112.50 3 year (36 issues) £165 John Saunders had to work hard, but once again enjoyed Gibraltar USA & Canada Rocking the Rock ..............................................................................................26 -

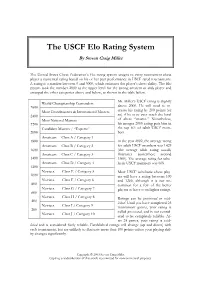

The USCF Elo Rating System by Steven Craig Miller

The USCF Elo Rating System By Steven Craig Miller The United States Chess Federation’s Elo rating system assigns to every tournament chess player a numerical rating based on his or her past performance in USCF rated tournaments. A rating is a number between 0 and 3000, which estimates the player’s chess ability. The Elo system took the number 2000 as the upper level for the strong amateur or club player and arranged the other categories above and below, as shown in the table below. Mr. Miller’s USCF rating is slightly World Championship Contenders 2600 above 2000. He will need to in- Most Grandmasters & International Masters crease his rating by 200 points (or 2400 so) if he is to ever reach the level Most National Masters of chess “master.” Nonetheless, 2200 his meager 2000 rating puts him in Candidate Masters / “Experts” the top 6% of adult USCF mem- 2000 bers. Amateurs Class A / Category 1 1800 In the year 2002, the average rating Amateurs Class B / Category 2 for adult USCF members was 1429 1600 (the average adult rating usually Amateurs Class C / Category 3 fluctuates somewhere around 1400 1500). The average rating for scho- Amateurs Class D / Category 4 lastic USCF members was 608. 1200 Novices Class E / Category 5 Most USCF scholastic chess play- 1000 ers will have a rating between 100 Novices Class F / Category 6 and 1200, although it is not un- 800 common for a few of the better Novices Class G / Category 7 players to have even higher ratings. 600 Novices Class H / Category 8 Ratings can be provisional or estab- 400 lished. -

Elo World, a Framework for Benchmarking Weak Chess Engines

Elo World, a framework for with a rating of 2000). If the true outcome (of e.g. a benchmarking weak chess tournament) doesn’t match the expected outcome, then both player’s scores are adjusted towards values engines that would have produced the expected result. Over time, scores thus become a more accurate reflection DR. TOM MURPHY VII PH.D. of players’ skill, while also allowing for players to change skill level. This system is carefully described CCS Concepts: • Evaluation methodologies → Tour- elsewhere, so we can just leave it at that. naments; • Chess → Being bad at it; The players need not be human, and in fact this can facilitate running many games and thereby getting Additional Key Words and Phrases: pawn, horse, bishop, arbitrarily accurate ratings. castle, queen, king The problem this paper addresses is that basically ACH Reference Format: all chess tournaments (whether with humans or com- Dr. Tom Murphy VII Ph.D.. 2019. Elo World, a framework puters or both) are between players who know how for benchmarking weak chess engines. 1, 1 (March 2019), to play chess, are interested in winning their games, 13 pages. https://doi.org/10.1145/nnnnnnn.nnnnnnn and have some reasonable level of skill. This makes 1 INTRODUCTION it hard to give a rating to weak players: They just lose every single game and so tend towards a rating Fiddly bits aside, it is a solved problem to maintain of −∞.1 Even if other comparatively weak players a numeric skill rating of players for some game (for existed to participate in the tournament and occasion- example chess, but also sports, e-sports, probably ally lose to the player under study, it may still be dif- also z-sports if that’s a thing). -

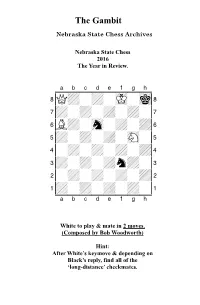

2016 Year in Review

The Gambit Nebraska State Chess Archives Nebraska State Chess 2016 The Year in Review. XABCDEFGHY 8Q+-+-mK-mk( 7+-+-+-+-' 6L+-sn-+-+& 5+-+-+-sN-% 4-+-+-+-+$ 3+-+-+n+-# 2-+-+-+-+" 1+-+-+-+-! xabcdefghy White to play & mate in 2 moves. (Composed by Bob Woodworth) Hint: After White’s keymove & depending on Black’s reply, find all of the ‘long-distance’ checkmates. Gambit Editor- Kent Nelson The Gambit serves as the official publication of the Nebraska State Chess Association and is published by the Lincoln Chess Foundation. Send all games, articles, and editorial materials to: Kent Nelson 4014 “N” St Lincoln, NE 68510 [email protected] NSCA Officers President John Hartmann Treasurer Lucy Ruf Historical Archivist Bob Woodworth Secretary Gnanasekar Arputhaswamy Webmaster Kent Smotherman Regional VPs NSCA Committee Members Vice President-Lincoln- John Linscott Vice President-Omaha- Michael Gooch Vice President (Western) Letter from NSCA President John Hartmann January 2017 Hello friends! Our beloved game finds itself at something of a crossroads here in Nebraska. On the one hand, there is much to look forward to. We have a full calendar of scholastic events coming up this spring and a slew of promising juniors to steal our rating points. We have more and better adult players playing rated chess. If you’re reading this, we probably (finally) have a functional website. And after a precarious few weeks, the Spence Chess Club here in Omaha seems to have found a new home. And yet, there is also cause for concern. It’s not clear that we will be able to have tournaments at UNO in the future. -

YEARBOOK the Information in This Yearbook Is Substantially Correct and Current As of December 31, 2020

OUR HERITAGE 2020 US CHESS YEARBOOK The information in this yearbook is substantially correct and current as of December 31, 2020. For further information check the US Chess website www.uschess.org. To notify US Chess of corrections or updates, please e-mail [email protected]. U.S. CHAMPIONS 2002 Larry Christiansen • 2003 Alexander Shabalov • 2005 Hakaru WESTERN OPEN BECAME THE U.S. OPEN Nakamura • 2006 Alexander Onischuk • 2007 Alexander Shabalov • 1845-57 Charles Stanley • 1857-71 Paul Morphy • 1871-90 George H. 1939 Reuben Fine • 1940 Reuben Fine • 1941 Reuben Fine • 1942 2008 Yury Shulman • 2009 Hikaru Nakamura • 2010 Gata Kamsky • Mackenzie • 1890-91 Jackson Showalter • 1891-94 Samuel Lipchutz • Herman Steiner, Dan Yanofsky • 1943 I.A. Horowitz • 1944 Samuel 2011 Gata Kamsky • 2012 Hikaru Nakamura • 2013 Gata Kamsky • 2014 1894 Jackson Showalter • 1894-95 Albert Hodges • 1895-97 Jackson Reshevsky • 1945 Anthony Santasiere • 1946 Herman Steiner • 1947 Gata Kamsky • 2015 Hikaru Nakamura • 2016 Fabiano Caruana • 2017 Showalter • 1897-06 Harry Nelson Pillsbury • 1906-09 Jackson Isaac Kashdan • 1948 Weaver W. Adams • 1949 Albert Sandrin Jr. • 1950 Wesley So • 2018 Samuel Shankland • 2019 Hikaru Nakamura Showalter • 1909-36 Frank J. Marshall • 1936 Samuel Reshevsky • Arthur Bisguier • 1951 Larry Evans • 1952 Larry Evans • 1953 Donald 1938 Samuel Reshevsky • 1940 Samuel Reshevsky • 1942 Samuel 2020 Wesley So Byrne • 1954 Larry Evans, Arturo Pomar • 1955 Nicolas Rossolimo • Reshevsky • 1944 Arnold Denker • 1946 Samuel Reshevsky • 1948 ONLINE: COVID-19 • OCTOBER 2020 1956 Arthur Bisguier, James Sherwin • 1957 • Robert Fischer, Arthur Herman Steiner • 1951 Larry Evans • 1952 Larry Evans • 1954 Arthur Bisguier • 1958 E. -

An Elo-Based Approach to Model Team Players and Predict the Outcome of Games

AN ELO-BASED APPROACH TO MODEL TEAM PLAYERS AND PREDICT THE OUTCOME OF GAMES A Thesis by RICHARD EINSTEIN DOSS MARVELDOSS Submitted to the Office of Graduate and Professional Studies of Texas A&M University in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE Chair of Committee, Jean Francios Chamberland Committee Members, Gregory H. Huff Thomas R. Ioerger Head of Department, Scott Miller August 2018 Major Subject: Computer Engineering Copyright 2018 Richard Einstein Doss Marveldoss ABSTRACT Sports data analytics has become a popular research area in recent years, with the advent of dif- ferent ways to capture information about a game or a player. Different statistical metrics have been created to quantify the performance of a player/team. A popular application of sport data anaytics is to generate a rating system for all the team/players involved in a tournament. The resulting rating system can be used to predict the outcome of future games, assess player performances, or come up with a tournament brackets. A popular rating system is the Elo rating system. It started as a rating system for chess tour- naments. It’s known for its simple yet elegant way to assign a rating to a particular individual. Over the last decade, several variations of the original Elo rating system have come into existence, collectively know as Elo-based rating systems. This has been applied in a variety of sports like baseball, basketball, football, etc. In this thesis, an Elo-based approach is employed to model an individual basketball player strength based on the plus-minus score of the player. -

Elo and Glicko Standardised Rating Systems by Nicolás Wiedersheim Sendagorta Introduction “Manchester United Is a Great Team” Argues My Friend

Elo and Glicko Standardised Rating Systems by Nicolás Wiedersheim Sendagorta Introduction “Manchester United is a great team” argues my friend. “But it will never be better than Manchester City”. People rate everything, from films and fashion to parks and politicians. It’s usually an effortless task. A pool of judges quantify somethings value, and an average is determined. However, what if it’s value is unknown. In this scenario, economists turn to auction theory or statistical rating systems. “Skill” is one of these cases. The Elo and Glicko rating systems tend to be the go-to when quantifying skill in Boolean games. Elo Rating System Figure 1: Arpad Elo As I started playing competitive chess, I came across the Figure 2: Top Elo Ratings for 2014 FIFA World Cup Elo rating system. It is a method of calculating a player’s relative skill in zero-sum games. Quantifying ability is helpful to determine how much better some players are, as well as for skill-based matchmaking. It was devised in 1960 by Arpad Elo, a professor of Physics and chess master. The International Chess Federation would soon adopt this rating system to divide the skill of its players. The algorithm’s success in categorising people on ability would start to gain traction in other win-lose games; Association football, NBA, and “esports” being just some of the many examples. Esports are highly competitive videogames. The Elo rating system consists of a formula that can determine your quantified skill based on the outcome of your games. The “skill” trend follows a gaussian bell curve. -

Extensions of the Elo Rating System for Margin of Victory

Extensions of the Elo Rating System for Margin of Victory MathSport International 2019 - Athens, Greece Stephanie Kovalchik 1 / 46 2 / 46 3 / 46 4 / 46 5 / 46 6 / 46 7 / 46 How Do We Make Match Forecasts? 8 / 46 It Starts with Player Ratings Assume the the ith player has some true ability θi. Models of player abilities assume game outcomes are a function of the difference in abilities Prob(Wij = 1) = F(θi − θj) 9 / 46 Paired Comparison Models Bradley-Terry models are a general class of paired comparison models of latent abilities with a logistic function for win probabilities. 1 F(θi − θj) = 1 + α−(θi−θj) With BT, player abilities are treated as Hxed in time which is unrealistic in most cases. 10 / 46 Bobby Fischer 11 / 46 Fischer's Meteoric Rise 12 / 46 Arpad Elo 13 / 46 In His Own Words 14 / 46 Ability is a Moving Target 15 / 46 Standard Elo Can be broken down into two steps: 1. Estimate (E-Step) 2. Update (U-Step) 16 / 46 Standard Elo E-Step For tth match of player i against player j, the chance that player i wins is estimated as, 1 W^ ijt = 1 + 10−(Rit−Rjt)/σ 17 / 46 Elo Derivation Elo supposed that the ratings of any two competitors were independent and normal with shared standard deviation δ. Given this, he likened the chance of a win to the chance of observing a difference in ratings for ratings drawn from the same distribution, 2 Rit − Rjt ∼ N(0, 2δ ) which leads to, Rit − Rjt P(Rit − Rjt > 0) = Φ( ) √2δ and ≈ 1/(1 + 10−(Rit−Rjt)/2δ) Elo's formula was just a hack for the cumulative normal density function. -

Package 'Playerratings'

Package ‘PlayerRatings’ March 1, 2020 Version 1.1-0 Date 2020-02-28 Title Dynamic Updating Methods for Player Ratings Estimation Author Alec Stephenson and Jeff Sonas. Maintainer Alec Stephenson <[email protected]> Depends R (>= 3.5.0) Description Implements schemes for estimating player or team skill based on dynamic updating. Implemented methods include Elo, Glicko, Glicko-2 and Stephenson. Contains pdf documentation of a reproducible analysis using approximately two million chess matches. Also contains an Elo based method for multi-player games where the result is a placing or a score. This includes zero-sum games such as poker and mahjong. LazyData yes License GPL-3 NeedsCompilation yes Repository CRAN Date/Publication 2020-03-01 15:50:06 UTC R topics documented: aflodds . .2 elo..............................................3 elom.............................................5 fide .............................................7 glicko . .9 glicko2 . 11 hist.rating . 13 kfide............................................. 14 kgames . 15 krating . 16 kriichi . 17 1 2 aflodds metrics . 17 plot.rating . 19 predict.rating . 20 riichi . 22 steph . 23 Index 26 aflodds Australian Football Game Results and Odds Description The aflodds data frame has 675 rows and 9 variables. It shows the results and betting odds for 675 Australian football games played by 18 teams from 26th March 2009 until 24th June 2012. Usage aflodds Format This data frame contains the following columns: Date A date object showing the date of the game. Week The number of weeks since 25th March 2009. HomeTeam The home team name. AwayTeam The away team name. HomeScore The home team score. AwayScore The home team score. Score A numeric vector giving the value one, zero or one half for a home win, an away win or a draw respectively. -

Intrinsic Chess Ratings 1 Introduction

CORE Metadata, citation and similar papers at core.ac.uk Provided by Central Archive at the University of Reading Intrinsic Chess Ratings Kenneth W. Regan ∗ Guy McC. Haworth University at Buffalo University of Reading, UK February 12, 2011 Abstract This paper develops and tests formulas for representing playing strength at chess by the quality of moves played, rather than the results of games. Intrinsic quality is estimated via evaluations given by computer chess programs run to high depth, ideally whose playing strength is sufficiently far ahead of the best human players as to be a “relatively omniscient” guide. Several formulas, each having intrinsic skill parameters s for “sensitivity” and c for “competence,” are argued theoretically and tested by regression on large sets of tournament games played by humans of varying strength as measured by the internationally standard Elo rating system. This establishes a correspondence between Elo rating and the parame- ters. A smooth correspondence is shown between statistical results and the century points on the Elo scale, and ratings are shown to have stayed quite constant over time (i.e., little or no “inflation”). By modeling human players of various strengths, the model also enables distributional prediction to detect cheating by getting com- puter advice during games. The theory and empirical results are in principle trans- ferable to other rational-choice settings in which the alternatives have well-defined utilities, but bounded information and complexity constrain the perception of the utilitiy values. 1 Introduction Player strength ratings in chess and other games of strategy are based on the results of games, and are subject to both luck when opponents blunder and drift in the player pool. -

Alberto Santini Monte Dei Paschi Di Siena Outline

Chess betting odds Alberto Santini Monte dei Paschi di Siena Outline ● What is chess-bet? ● Chess rating, expected score, team events, odds. ● Anatomy of chess-bet app. ● How to build a Shiny app. ● A few hours (!?) later... What is chess-bet? chess-bet is a web app, implementing a model to calculate the odds for the players and the teams in chess events. With R and Shiny. Credits to Peter Zhdanov Team events: beating the bookmakers?! Chess rating and expected score The Elo rating system is a method for calculating the relative skill levels of players in two-player games such as chess. It is named after its creator Arpad Elo, a Hungarian-born American physics professor. Expected score: source: http://en.wikipedia.org/wiki/Elo_rating_system Team events and odds Usually there are two teams composed of four chess players each. This is typical for the Chess Olympiad, World Team Chess Championship and other important events. How high is the probability that team A will win? How probable is a draw? Team B’s victory? Anatomy of chess-bet app 1. Calculate the rating differences on each board and find out the expected score of the player. 2. Find out the expected probability of a win, draw and loss in each game. 3. Repeat steps 1 and 2 for all the four boards in the team. 4. Then calculate the probabilities of all the outcomes of Team 1’s victories (and draws) and add them up. Model details - I Score EloExpectedScore <- function(rating1, rating2) { 1 / (1 + 10 ^ ((rating2 - rating1) / 400)) } Game probability getProb <- function(score, winPerc, drawPerc) { x <- score / (winPerc + 0.5 * drawPerc) win <- winPerc * x draw <- (score - win) * 2 loss <- 1 - win - draw c(win, draw, loss) } Model details - II Player profile getPlayerProfile <- function(player) { fideRatingsUrl <- "http://ratings.fide.com/search.phtml?search=" playerUrl <- paste(fideRatingsUrl, "'", player, "'", sep="") tables <- readHTMLTable(playerUrl) table <- tables[[1]] card <- getIntegerfromFactor(table[6, 1]) player <- as.character(table[6, 2]) rating <- getIntegerfromFactor(table[6, 7]) .. -

System Requirements Recommended Hardware Supported Hardware

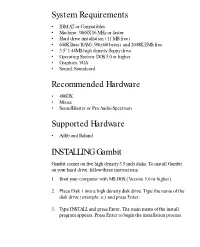

System Requirements • IBM AT or Compatibles • Machine: 386SX 16 MHz or faster • Hard drive installation (11 MB free) • 640K Base RAM (590,000 bytes) and 2048K EMS free • 3.5” 1.44MB high density floppy drive • Operating System: DOS 5.0 or higher • Graphics: VGA • Sound: Soundcard Recommended Hardware • 486DX • Mouse • SoundBlaster or Pro Audio Spectrum Supported Hardware • Adlib and Roland INSTALLING Gambit Gambit comes on five high density 3.5 inch disks. To install Gambit on your hard drive, follow these instructions: 1. Boot your computer with MS-DOS (Version 5.0 or higher). 2. Place Disk 1 into a high density disk drive. Type the name of the disk drive (example: a:) and press Enter. 3. Type INSTALL and press Enter. The main menu of the install program appears. Press Enter to begin the installation process. 4. When Disk 1 has been installed, the program requests Disk 2. Remove Disk 1 from the floppy drive, insert Disk 2, and press Enter. 5. Follow the above process until you have installed all five disks. The Main Menu appears. Select Configure Digital Sound Device from the Main Menu and press Enter. Now choose your sound card by scrolling to it with the highlight bar and pressing Enter. Note: You may have to set the Base Address, IRQ and DMA channel manually by pressing “C” in the Sound Driver Selection menu. After you’ve chosen your card, press Enter. 6. Select Configure Music Sound Device from the Main Menu and press Enter. Now choose your music card by scrolling to it with the highlight bar and pressing Enter.