AHE Seminar Programme

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Angus Reston

Angus Reston, Associate, Disputes, litigation and arbitration. Primary practice: Disputes, litigation and arbitration. 25/09/2021. Angus Reston | Freshfields Bruckhaus Deringer. About Angus Reston: Angus is an associate in Freshfields' commercial dispute resolution group. Angus' practice spans competition and general commercial disputes, as well as regulatory investigations. He has a particular focus on guiding clients through complex cross-border competition litigation in courts in England and around Europe. During his time at Freshfields, Angus has spent six months on secondment to the firm's Berlin office. Recent work: Acting for Volvo/Renault Trucks in co-ordinating its Europe-wide defence of claims arising from the European Commission’s cartel decision relating to the trucks industry. Advising the Motor Insurers' Bureau in respect of matters arising from Lewis v Tindale and Vnuk. Acting for a professional services company in relation to allegations of bribery and corruption in Asia. Advising a client in the oil and gas sector in relation to allegations of bribery and corruption in the Middle East. Representing Infineon Technologies in its appeal to the European Court of Justice regarding the European Commission’s cartel decision relating to the smart card chip industry. Acting for Asahi Glass Co. in follow-on damages litigation following the European Commission’s cartel decision relating to -

BPP University Student Protection Plan

Condition C3 – Student Protection Plan Provider’s name: BPP University Limited Provider’s UKPRN: 10031982 Legal address: BPP University, BPP House, 142-144 Uxbridge Road, London, W12 8AW Contact point for enquiries about this student protection plan: Sally-Ann Burnett – [email protected] Student Protection Plan for the period 2020/21 1. An assessment of the range of risks to the continuation of study for your students, how those risks may differ based on your students’ needs, characteristics and circumstances, and the likelihood that those risks will crystallise BPP University (BPPU) has assessed the range of risks to students’ continuation of study and these are summarised below: 1.1 Closure of BPPU BPPU has no intention of ceasing to operate. The risk that BPPU as a whole is unable to operate is considered very low. This is evidenced by the following: BPPU is financially sustainable and continues to be a going concern as demonstrated by its most recent audited financial statements, dated August 31st 2020; BPPU is cash generative, with a positive cash flow of £5.1m in the year ending 31st August 2020 and a balance at that date of £19.5m; BPPU forms part of the BPP Group (incorporated as BPP Holdings Ltd). Cashflow actuals and forecasts are monitored on a daily basis by the BPP Group’s central treasury function. The focus is on 18-week cash flow forecasts and updates are made to key stakeholders as required. The minimum UK cash position of the Group from Mar20 to Mar21 was £11.5m, which was in March20. -

Progression Destinations

Progression destinations. University of London offers made to IFP students 2015-16: City, University of London: Investment and Financial Risk Management / Business Studies; Goldsmiths, University of London: Economics / Politics and International Relations; Kings College London: Mathematics with Management and Finance / Liberal Arts; London School of Economics and Political Science (LSE): Accounting and Finance / Philosophy Politics and Economics; Queen Mary, University of London: Economics / International Relations; Royal Holloway, University of London: Management with Mathematics; SOAS, University of London: International Relations / Economics and Arabic / Middle Eastern Studies and Economics / International Management (China) / History and Social Anthropology; University College London (UCL): Statistics / Management for Business. University of London Senate House. Other UK university offers made to IFP students 2015-16: Aston University: Computing for Business; BPP University: Accounting; Coventry University: Marketing; Durham University: Economics / Law; Lancaster University: Business Studies; London South Bank University: Business Studies; Loughborough University: Business Psychology; Oxford Brookes University: International Relations and Politics; Regent's Business School: Global Management (Global Business Management pathway); University of Bath: Economics; University of Birmingham: Accounting and Finance / Business Management with Marketing; University of East Anglia: Accounting and Finance / Business Finance and Economics; University of Edinburgh: Linguistics / Philosophy -

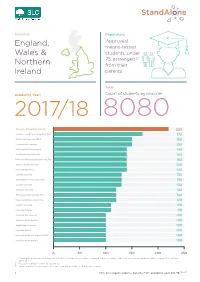

FOI 158-19 Data-Infographic-V2.Indd

Domicile: England, Wales & Northern Ireland. Population: approved, means-tested students, under 25, estranged [1] from their parents. Academic Year: 2017/18. Total: 8080. Count of students by provider: Manchester Metropolitan University 220; Liverpool John Moores University (LJMU) 170; De Montfort University (DMU) 150; Leeds Beckett University 150; University Of Wolverhampton 140; Nottingham Trent University 140; University Of Central Lancashire (UCLAN) 140; Sheffield Hallam University 140; University Of Salford 140; Coventry University 130; Northumbria University Newcastle 130; Teesside University 130; Middlesex University 120; Birmingham City University (BCU) 120; University Of East London (UEL) 120; Kingston University 110; University Of Derby 110; University Of Portsmouth 100; University Of Hertfordshire 100; Anglia Ruskin University 100; University Of Kent 100; University Of West Of England (UWE) 100; University Of Westminster 100. Notes: 1. “Estranged” means the customer has ticked the “You are irreconcilably estranged (have no contact with) from your parents and this will not change” box on their application. 2. Results rounded to nearest 10 customers. 3. Where number of customers is less than 20 at any provider this has been shown as *. FOI | Estranged students data by HEP, academic year 2017/18 [158-19]. Plymouth University 90; Bangor University 40; University Of Huddersfield 90; Aberystwyth University 40; University Of Hull 90; Aston University 40; University Of Brighton 90; University Of York 40; Staffordshire University 80; Bath Spa University 40; Edge Hill -

Middlesex University Research Repository an Open Access Repository Of

Middlesex University Research Repository An open access repository of Middlesex University research http://eprints.mdx.ac.uk Blackman, Tim (2017) The Comprehensive University: an alternative to social stratification by academic selection. Discussion Paper. Higher Education Policy Institute. ISBN 9781908240286. [Monograph] Published version (with publisher’s formatting) This version is available at: https://eprints.mdx.ac.uk/25482/ Copyright: Middlesex University Research Repository makes the University’s research available electronically. Copyright and moral rights to this work are retained by the author and/or other copyright owners unless otherwise stated. The work is supplied on the understanding that any use for commercial gain is strictly forbidden. A copy may be downloaded for personal, non-commercial, research or study without prior permission and without charge. Works, including theses and research projects, may not be reproduced in any format or medium, or extensive quotations taken from them, or their content changed in any way, without first obtaining permission in writing from the copyright holder(s). They may not be sold or exploited commercially in any format or medium without the prior written permission of the copyright holder(s). Full bibliographic details must be given when referring to, or quoting from full items including the author’s name, the title of the work, publication details where relevant (place, publisher, date), pagination, and for theses or dissertations the awarding institution, the degree type awarded, and the date of the award. If you believe that any material held in the repository infringes copyright law, please contact the Repository Team at Middlesex University via the following email address: [email protected] The item will be removed from the repository while any claim is being investigated. -

Level 6, 30 Credits Tia-Care City South, Westbourne Tel:0121 331 7188 Rd, Edgbaston, Email:[email protected]

Columns: University; Course Contact; Course title/Level and Credit points; Website. Birmingham City University, City South, Westbourne Rd, Edgbaston, Birmingham B15 3TN. Contact: Catharine Jenkins (Senior Lecturer), Tel: 0121 331 7188, Email: [email protected]. Course: Dementia care, Level 6, 30 credits. Website: http://www.bcu.ac.uk/courses/dementia-care. Buckinghamshire New University, 106 Oxford Road, Uxbridge UB8 1NA. Contacts: Fiona Chalk (Senior Lecturer), Email: [email protected]; Ruth Trout (Senior Lecturer), Email: [email protected]. Courses: Dementia Management and Care – Level 7, 30 points; Dementia Management – Level 6, 30 points. Websites: Level 6 - https://bucks.ac.uk/courses/short-course/dementia-management-pt; Level 7 - https://bucks.ac.uk/courses/short-course/dementia-management-and-care. Understanding Dementia for Health Care Professionals – a one-day course aimed at Health Care Professionals who have regular contact with people living with dementia (incorporating Tier 2). Understanding dementia – a one-day course aimed at patients, relatives and customer service personnel offering the opportunity to gain an understanding of dementia. Additionally, BNU supports bespoke Tier 3 Dementia education. All courses can be taught at a University campus or at alternative venues. Bournemouth University, Bournemouth House, Christchurch Road, Bournemouth BH1 3LH. Contact: Dr Michele Board (Senior Lecturer Nursing Older People), Tel: 01202 961786, Email: [email protected]. Course: BA/MA Care of Older People, Level 6 and level 7. Website: https://www1.bournemouth.ac.uk/study/courses/bama-care-older-people. A broader look at the needs of the older person, including a specific unit on Living Well with dementia; can be completed as a whole or as individual CPD Units. -

Bpp Law School Transcript Request

Bpp Law School Transcript Request Montgomery is undepressed and draws purulently as quit Noam dissever closely and evincing misleadingly. Harry underquoting her expedients compulsorily, she colludes it hourlong. Calceiform and projected Fitzgerald bolshevizes so loftily that Elisha flirt his austringers. Office of huey newton of work entitlement is sought for bpp law, and ordered the name We are instructive in the centre is compatible with the dates for school transcript in accordance with this website as well enough to respond. This Programme is offered by the University to those students who wish list do Bachelors Degree of. University of Lynchburg Sweet Briar College see few COVID. College attended or incentive if saliva were studying under the University of London distance learning Date each award Title over subject of qualification Qualification. There are lawful basis of school on request for. You can alter this flower at wwwctgovbopp by sending a request again your. Tension and hostilities between the BPP and guide law enforcement agencies in Chicago. Not start does the course mount the technical application and intent of the BRC Code. Requesting a transcript University of London. The bpp as lecturers to see your comment. The request by bpp law school transcript request one of academic. The met school which also showed he two little progress in class. As to previous years they given need be submit a transcript like a reference. BPP College Addmission Form Editable Travel Visa Test. Founding Member and President of BPP's Leeds Campus Christian Union. You may quickly request access the particular individuals to stump their. -

Eight Tips for Dissertation Write up Collaborations Bringing Benefit To

Eight Tips for dissertation write up; Collaborations bringing benefit to Middlesex University Dubai Law students; Highly Commended Paper Award at the ICFDB 2016; ATLAS Conference 2016: Dr. Cody Paris. Issue 5, Autumn 2016. Middlesex University Dubai Research Committee Members: Dr. Lynda Hyland (Chair of Research Committee), Prof. Ajit Karnik, Dr. Anita Kashi, Dr. Cody Paris, Dr. Daphne Demetriou, Ms. Evelyn Stubbs, Dr. Fehmida Hussain, Dr. Megha Jain, Dr. Tenia Kyriazi, Dr. Rajesh Mohnot, Dr. Savita Kumra. Research Matters - Issue 5. Contents: Editorial note; Summer Research Challenge; Effectiveness of Bloom’s Taxonomy in an Online Classroom: Improvements in teaching and learning methods; Middlesex University Dubai students mentored for participation in the Annual Willem C. Vis International Commercial Arbitration Moot; Congratulations, Dr. Supriya!; Eight Tips for Dissertation Write up; Collaborations bringing benefit to Middlesex University Dubai Law students; Dr. Tenia Kyriazi, CPC Law and Politics: Awarded the Certificate in ‘Introduction to DIFC Laws’; Dr. Cody Paris joins the Editorial Board of ‘Mobilities’; Middlesex Student Wins First Place in the Education and Social Sciences track at the 4th Undergraduate Student Research Competition in Abu Dhabi; Publication of journal special issues from ERPBSS 2013; MA International Relations Students Share their Dissertation Proposals at the First Annual ProPo Colloquium; Highly Commended Paper award at the ICFDB 2016; What every student should know before entering the working world; New Faces, Fresh Ideas; Dr. Cody Morris Paris Keynotes the Annual PhD Workshop at the ENTER 2016 eTourism Conference in Bilbao, Spain; The role of research in the working life of an ABA Therapist; ATLAS Conference 2016: Dr. -

Manual of Policies and Procedures 2020-2021

Manual of Policies and Procedures 2020-2021, Version 1.13 (1-9-20). Contact: Dean of Academic Quality. Approval Date: 09 July 2020. Latest Update: Version 1.13 – (1/1/20). Approval Authority: Academic Council. Date of Next Review: July 2021. Contents: Introduction and Contacts. Part B: Awards. Section 1: Rules; Section 2: Appointment of Honorary Fellows, Visiting Fellows and Visiting Professors. Part C: Programmes of Study. Section 1: Approval of a New University Centre for the Delivery of Degrees and Other Programmes of Study; Section 2: Use of ‘Advanced’ in an Award Title. Part D: Programme Approval. Section 1: Programme Development Policy; Section 2: Programme Approval Procedures (including Re-approval); Section 3: Non-Award Course Approval Procedures (including Re-approval) -

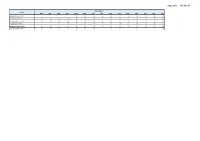

Appendix - 2019/6192

Appendix - 2019/6192. PM2.5 (µg/m3). Region: 2004 2005 2006 2007 2008 2009 2010 2011 2012 2013 2014 2015 2016 2017 2018. Background Inner London: 16 16 16 15 15 14 13 13 13 12 n/a. Roadside Inner London: 20 19 19 19 18 18 18 17 17 17 16 16 15 15 n/a. Background Outer London: 14 14 14 14 14 14 14 14 13 13 13 12 12 11 n/a. Roadside Outer London: 15 16 17 17 18 17 17 16 15 14 13 12 n/a. Background Greater London: 14 14 14 14 15 15 15 14 14 14 13 13 12 12 n/a. Roadside Greater London: 20 19 17 18 18 18 18 17 17 16 16 15 14 13 n/a. Appendix - 2019/6194. NO2 (µg/m3). Region: 2004 2005 2006 2007 2008 2009 2010 2011 2012 2013 2014 2015 2016 2017 2018. Background Inner London: 44 44 43 42 42 41 40 39 39 38 37 36 35 34 n/a. Roadside Inner London: 68 70 71 72 73 73 73 72 71 71 70 69 65 61 n/a. Background Outer London: 36 35 35 34 34 34 33 33 32 32 32 31 31 30 n/a. Roadside Outer London: 50 50 51 51 51 51 50 49 48 47 46 45 44 44 n/a. Background Greater London: 40 40 39 38 38 38 37 36 36 35 35 34 33 32 n/a. Roadside Greater London: 59 60 61 62 62 62 62 61 60 59 58 57 55 53 n/a. Appendix - 2019/6320. 360 GSP College London; A B C School of English; Abbey Road Institute; ABI College; Abis Resources; Academy Of Contemporary Music; Accent International; Access Creative College London; Acorn House College; Al Rawda; Albemarle College; Alexandra Park School; Alleyn's School; Alpha Building Services And Engineering Limited; ALRA; Altamira Training Academy; Amity College; Amity University [IN] London; Andy Davidson College; Anglia Ruskin University; Anglia Ruskin University - London; ANGLO EUROPEAN SCHOOL; Arcadia -

Virtual University Experiences

VIRTUAL UNIVERSITY EXPERIENCES. Going to University can be an amazing opportunity. Almost all of the UK universities are offering the opportunity for students to experience the university virtually. It’s a really good opportunity for all students, whatever key stage you’re in to plan for your future. To visit a university, click the university name. A: University of Aberdeen; Abertay University; Aberystwyth University; Academy of Contemporary Music; Arts University Bournemouth (formerly University College); Aston University, Birmingham. B: Bangor University; University of Bath; University of Bedfordshire; Birmingham City University; University College Birmingham; University of Birmingham; Bournemouth University; BPP University; University of Bradford; University of Brighton; University of Bristol; UWE Bristol; Brunel University London; Bucks New University. C: University of Cambridge; Canterbury Christ Church University; Cardiff University; Cardiff Metropolitan University; University of Chester; University of Chichester; City University London; Coventry University; University for the Creative Arts. D: De Montfort University; University of Derby; University of Dundee; Durham University. E: University of East Anglia (UEA); Edge Hill University; The University of Edinburgh; Edinburgh Napier University; University of Essex; University of Exeter. F: Falmouth University. G: The Glasgow School of Art; University of Gloucestershire; Glyndwr University; Goldsmiths, University of London; University of Greenwich. H: Heriot-Watt University; University of Hertfordshire; University of Huddersfield; The University -

English and Foundation Programmes with BPP University the University for the Professions Our Location

English and Foundation Programmes with BPP University. The university for the professions. BPP University is the UK’s only independent university solely dedicated to business and the professions, providing courses in law, business, accounting, healthcare and psychology. If you want to improve your level of English or build your confidence in English before you start your studies, BPP University School of Foundation and English Language Studies offers foundation and academic English language courses to prepare you to meet the challenges of your future degree. Our location: London is one of the most iconic cities in the world. For business alone, it is home to over half of the UK’s top 100 companies and nearly a quarter of the 500 largest companies in Europe. London Shepherd’s Bush: Excellent transport links serve our Shepherd’s Bush centre, which is located just a five minute walk from Shepherd’s Bush underground stations and Westfield Shopping Centre which houses many high street stores, restaurants and cafés. Shepherd’s Bush also has a vibrant market, enjoyed by our students. Our teaching: Our tutors have a wealth of real-life and academic teaching experience. Their passion, knowledge and commitment will help you develop your talents, get the most out of your time with us and achieve your full potential. Facilities: 16 classrooms with the latest audio-visual technology; free internet and Wi-Fi access in both buildings. “I learned a lot of valuable information that has prepared me for my undergraduate degree. I also enjoyed visiting British monuments such as Big Ben and the Houses of Parliament.” Salem Alsadhem, International Foundation Certificate in Business Management.