Supporting the Learning of Rapid Application Development in a Database Environment

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Software Development Career Pathway

Career Exploration Guide: Software Development Career Pathway, Information Technology Career Cluster. For more information about NYC Career and Technical Education, visit: www.cte.nyc. Summer 2018. Getting Started. What is software? Computers and other smart devices are made up of hardware and software. Hardware includes all of the physical parts of a device, like the power supply, data storage, and microprocessors. Software contains instructions that are stored and run by the hardware. Other names for software are programs or applications. What Types of Software Can You Develop? Software includes operating systems (like Windows, Apple, and Google Android) and the applications that run on them (like word processors and games). Software applications can be run directly from a device or through a connection to the Internet. Desktop applications are programs that are stored on and accessed from a computer or laptop, like word processors and spreadsheets. Web applications are websites that allow users to check email, share documents, and shop online, among other things. Users access them with a connection to the Internet through a web browser like Firefox, Chrome, or Safari. Web browsers are the platforms people use to find, retrieve, and display information online. Web browsers are applications too. Enterprise software consists of off-the-shelf applications that are customized to the needs of businesses. Popular examples include Salesforce, a customer contact management system, and PeopleSoft, a human resources information system. Mobile applications are programs that can be accessed directly through mobile devices like smartphones and tablets. Many mobile applications have web-based counterparts. What is Software Development? Software development is the design and creation of software and is usually done by a team of people. Quality Testers test the application to make sure …

Chapter 1 Introduction to Computers, Programs, and Java

1.1 Introduction
• The central theme of this book is to learn how to solve problems by writing a program.
• This book teaches you how to create programs by using the Java programming language.
• Java is often called the Internet programming language.
• Why Java? The answer is that Java enables users to deploy applications on the Internet for servers, desktop computers, and small hand-held devices.
1.2 What is a Computer?
• A computer is an electronic device that stores and processes data.
• A computer includes both hardware and software.
  o Hardware is the physical aspect of the computer that can be seen.
  o Software is the invisible instructions that control the hardware and make it work.
• Computer programming consists of writing instructions for computers to perform.
• A computer consists of the following hardware components:
  o CPU (Central Processing Unit)
  o Memory (main memory)
  o Storage devices (hard disk, floppy disk, CDs)
  o Input/output devices (monitor, printer, keyboard, mouse)
  o Communication devices (modem, NIC (Network Interface Card))
FIGURE 1.1 A computer consists of a CPU, memory, storage devices (e.g., disk, CD, and tape), input devices (e.g., keyboard, mouse), output devices (e.g., monitor, printer), and communication devices (e.g., modem and NIC), connected by a bus.
CMPS161 Class Notes (Chap 01), Kuo-pao Yang
1.2.1 Central Processing Unit (CPU)
• The central processing unit (CPU) is the brain of a computer.
• It retrieves instructions from memory and executes them.
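The notes describe the CPU in one line: "it retrieves instructions from memory and executes them." This is the fetch-decode-execute cycle. The following is a minimal sketch of that cycle in Python. It is not taken from the notes. The four-instruction set (`LOAD`, `ADD`, `PRINT`, `HALT`), the single accumulator register, and the function name `run` are all hypothetical, chosen only to make the loop visible.

```python
# A toy fetch-decode-execute loop. The instruction set (LOAD, ADD, PRINT, HALT)
# is hypothetical and only illustrates the idea; real CPUs decode binary
# machine code, not Python tuples.

def run(memory):
    """Execute the program stored in `memory`; return the values it printed."""
    accumulator = 0  # a single working register
    pc = 0           # program counter: address of the next instruction
    output = []
    while True:
        opcode, operand = memory[pc]  # fetch the instruction at address pc
        pc += 1                       # advance to the next instruction
        if opcode == "LOAD":          # decode the opcode, then execute it
            accumulator = operand
        elif opcode == "ADD":
            accumulator += operand
        elif opcode == "PRINT":
            output.append(accumulator)
        elif opcode == "HALT":
            return output
        else:
            raise ValueError(f"unknown opcode {opcode!r} at address {pc - 1}")


program = [("LOAD", 2), ("ADD", 3), ("PRINT", None), ("HALT", None)]
print(run(program))  # [5]
```

Each pass through the loop fetches the instruction at the program counter, advances the counter, and then decodes and executes the opcode. Real hardware follows the same pattern, but with binary machine code and many more registers.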

FUNDAMENTALS OF COMPUTING (2019-20) COURSE CODE: 5023 502800CH (Grade 7 for ½ High School Credit) 502900CH (Grade 8 for ½ High School Credit)

EXPLORING COMPUTER SCIENCE (NEW NAME: FUNDAMENTALS OF COMPUTING, 2019-20)
COURSE CODE: 5023; 502800CH (grade 7 for ½ high school credit); 502900CH (grade 8 for ½ high school credit)
COURSE DESCRIPTION: Fundamentals of Computing is designed to introduce students to the field of computer science through an exploration of engaging and accessible topics. Through creativity and innovation, students will use critical thinking and problem solving skills to implement projects that are relevant to students’ lives. They will create a variety of computing artifacts while collaborating in teams. Students will gain a fundamental understanding of the history and operation of computers, programming, and web design. Students will also be introduced to computing careers and will examine societal and ethical issues of computing.
OBJECTIVE: Given the necessary equipment, software, supplies, and facilities, the student will be able to successfully complete the following core standards for courses that grant one unit of credit.
RECOMMENDED GRADE LEVELS: 9-12 (Preference 9-10)
COURSE CREDIT: 1 unit (120 hours)
COMPUTER REQUIREMENTS: One computer per student with Internet access
RESOURCES: See attached Resource List
A. SAFETY
Effective professionals know the academic subject matter, including safety as required for proficiency within their area. They will use this knowledge as needed in their role. The following accountability criteria are considered essential for students in any program of study.
1. Review school safety policies and procedures.
2. Review classroom safety rules and procedures.
3. Review safety procedures for using equipment in the classroom.
4. Identify major causes of work-related accidents in office environments.
5. Demonstrate safety skills in an office/work environment.

Florida Course Code Directory Computer Science Course Information 2019-2020

Section 1007.2616, Florida Statutes, was amended by the Florida Legislature to include the definition of computer science and a requirement that computer science courses be identified in the Course Code Directory published on the Department of Education’s website.
DEFINITION: The study of computers and algorithmic processes, including their principles, hardware and software designs, applications, and their impact on society, and includes computer coding and computer programming.
SECONDARY COMPUTER SCIENCE COURSES
Middle and high schools in each district, including combination schools in which any of grades 6-12 are taught, must provide an opportunity for students to enroll in a computer science course. If a school district does not offer an identified computer science course, the district must provide students access to the course through the Florida Virtual School (FLVS) or through other means.
COURSE NUMBER – COURSE TITLE
0200000 M/J Computer Science Discoveries
0200010 M/J Computer Science Discoveries 1
0200020 M/J Computer Science Discoveries 2
0200305 Computer Science Discoveries
0200315 Computer Science Principles
0200320 AP Computer Science A
0200325 AP Computer Science A Innovation
0200335 AP Computer Science Principles
0200435 PRE-AICE Computer Studies IGCSE Level
0200480 AICE Computer Science 1 AS Level
0200485 AICE Computer Science 2 A Level
0200490 AICE Information Technology 1 AS Level
0200495 AICE Information Technology 2 A Level
0200800 IB Computer …

Introduction to High Performance Computing

Shaohao Chen, Research Computing Services (RCS), Boston University
Outline
• What is HPC? Why computer clusters?
• Basic structure of a computer cluster
• Computer performance and the TOP500 list
• HPC for scientific research and parallel computing
• Nationwide HPC resources: XSEDE
• BU SCC and RCS tutorials
What is HPC?
• High Performance Computing (HPC) refers to the practice of aggregating computing power in order to solve large problems in science, engineering, or business.
• Purpose of HPC: to accelerate computer programs, and thus accelerate the work process.
• Computer cluster: a set of connected computers that work together and can be viewed as a single system.
• Similar terminology: supercomputing, parallel computing.
• Parallel computing: many computations are carried out simultaneously, typically on a computer cluster.
• Related terminology: grid computing, cloud computing.
Computing power of a single CPU chip
• Moore's law is the observation that the number of transistors on a CPU chip, and roughly its computing power, doubles approximately every two years.
• Nowadays, multi-core designs are the key to keeping up with Moore's law.
Why computer clusters?
• Drawbacks of increasing the CPU clock frequency:
  --- Electric power consumption is proportional to the cube of the CPU clock frequency (ν³).
  --- It generates more heat.
• A drawback of increasing the number of cores within one CPU chip:
  --- Heat dissipation becomes difficult.
• Computer cluster: connect many computers with high-speed networks.
• Currently, computer clusters are the best solution for scaling up computing power.
• Consequently, software needs to be designed for parallel computing.
Basic structure of a computer cluster
• Cluster – a collection of many computers/nodes.
• Rack – a cabinet that holds a number of nodes.
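Some back-of-the-envelope arithmetic makes the "Why computer clusters?" argument concrete. This sketch takes the slide's premise that power scales as ν³. It adds two simplifications of our own that the slides do not state: power grows linearly with the number of identical cores at a fixed frequency, and the workload parallelizes perfectly. The function names are hypothetical.

```python
# Rough arithmetic behind "Why computer clusters?".
# Premise (from the slide): power P is proportional to the cube of clock frequency, P ∝ ν³.
# Assumption (ours): at fixed frequency, power scales linearly with the core count,
# and the workload parallelizes perfectly, so doubling cores doubles throughput.

def relative_power_from_frequency(freq_factor: float) -> float:
    """Power multiplier when the clock frequency is scaled by freq_factor."""
    return freq_factor ** 3


def relative_power_from_cores(core_factor: float) -> float:
    """Power multiplier when the number of identical cores is scaled by core_factor."""
    return core_factor


def moore_growth(years: float, doubling_period: float = 2.0) -> float:
    """Growth factor after `years` if capacity doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)


# Doubling throughput via the clock versus via the core count:
print(relative_power_from_frequency(2))  # 8
print(relative_power_from_cores(2))      # 2
# Ten years of doubling every two years:
print(moore_growth(10))                  # 32.0
```

Under these assumptions, doubling throughput through clock speed costs roughly 8× the power, while doubling it through twice as many cores costs roughly 2×. That gap is what pushes designs toward multi-core chips and, once heat within a single chip becomes the limit, toward clusters of many nodes.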

Safety and Security Challenge

SAFETY AND SECURITY CHALLENGE: TOP SUPERCOMPUTERS IN THE WORLD, FEATURING TWO OF DOE’S!!
Summary: The U.S. Department of Energy (DOE) plays a very special role in keeping you safe. DOE has two supercomputers in the top ten supercomputers in the whole world. Titan is the name of the supercomputer at the Oak Ridge National Laboratory (ORNL) in Oak Ridge, Tennessee. Sequoia is the name of the supercomputer at Lawrence Livermore National Laboratory (LLNL) in Livermore, California. How do supercomputers keep us safe, and what puts them in the Top Ten in the world?
In fields where scientists deal with issues from disaster relief to the electric grid, simulations provide real-time situational awareness to inform decisions. DOE supercomputers have helped the Federal Bureau of Investigation find criminals and the Department of Defense assess terrorist threats. Currently, ORNL is building a computing infrastructure to help the Centers for Medicare and Medicaid Services combat fraud. An important focus lab-wide is managing the tsunamis of data generated by supercomputers and facilities like ORNL’s Spallation Neutron Source. In terms of national security, ORNL plays an important role in national and global security due to its expertise in advanced materials, nuclear science, supercomputing and other scientific specialties. Discovery and innovation in these areas are essential for protecting US citizens and advancing national and global security priorities.
Titan Supercomputer at Oak Ridge National Laboratory
Background: ORNL is using computing to tackle national challenges such as safe nuclear energy systems and running simulations for lower costs for vehicle … Lawrence Livermore's Sequoia ranked No. …

On the Cognitive Prerequisites of Learning Computer Programming

Roy D. Pea and D. Midian Kurland. Technical Report No. 18.
Introduction: Training in computer literacy of some form, much of which will consist of training in computer programming, is likely to involve $3 billion of the $14 billion to be spent on personal computers by 1986 (Harmon, 1983). Who will do the training? "hardware and software manufacturers, management consultants, retailers, independent computer instruction centers, corporations' in-house training programs, public and private schools and universities, and a variety of consultants" (ibid., p. 27). To date, very little is known about what one needs to know in order to learn to program, and the ways in which educators might provide optimal learning conditions. The ultimate success of these vast training programs in programming, especially toward the goal of providing a basic computer programming competency for all individuals, will depend to a great degree on an adequate understanding of the developmental psychology of programming skills, a field currently in its infancy. In the absence of such a theory, training will continue, guided (or, to express it more aptly, misguided) by the tacit "folk theories" of programming development that until now have served as the underpinnings of programming instruction. Our paper begins to explore the complex agenda of issues, promise, and problems that building a developmental science of programming entails.
Microcomputer Use in Schools: The National Center for Education Statistics has recently released figures revealing that the use of micros in schools tripled from Fall 1980 to Spring 1983.

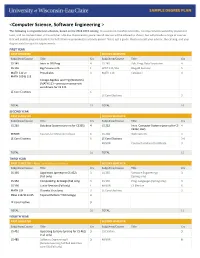

<Computer Science, Software Engineering>

The following is a hypothetical schedule, based on the 2018-2019 catalog. It assumes no transferred credits, no requirements waived by placement tests, and no courses taken in the summer. UW-Eau Claire cannot guarantee all courses will be offered as shown, but will provide a range of courses that will enable prepared students to fulfill their requirements in a timely period. This is just a guide. Please consult your advisor, the catalog, and your degree audit for specific requirements.
FIRST YEAR
First semester (total: 15 credits)
• CS 145 Intro to OO Prog: 4
• CS 146 Big Picture in CS: 1
• MATH 112 Precalculus, or MATH 109 & 113 College Algebra and Trig (Winterim): 4 (MATH 113 – prereq or concurrent enrollment for CS 245)
• LE Core Electives: 6
Second semester (total: 16 credits)
• CS 245 Adv. Prog. Data Structures: 4
• WRIT 114/116 Blugold Seminar: 5
• MATH 114 Calculus I: 4
• LE Core Electives: 3
SECOND YEAR
First semester (total: 16 credits)
• CS 260 Database Systems (prereq for CS 355): 4
• MINOR Courses for Minor/Certificate: 6
• LE Core Electives: 6
Second semester (total: 15 credits)
• CS 252 Intro. Computer Systems (prereq for CS 352, 452): 4
• CS 268 Web Systems: 3
• LE Core Electives: 3-6
• MINOR Courses for Minor/Certificate: 3
THIRD YEAR
First semester – Apply for Admission to Major
• CS 335 Algorithms (prereq for CS 452; Fall only): 3
• CS 352 Computer Org. …
Second semester
• CS 355 Software Engineering I (Spring only): 3

Artificial Intelligence Program

Reid Simmons, Director of the BSAI program (NSH 3213)
Jean Harpley, Program Coordinator (NSH 1517)
www.cs.cmu.edu/bs-in-artificial-intelligence (http://www.cs.cmu.edu/bs-in-artificial-intelligence/)
Overview
Carnegie Mellon University has led the world in artificial intelligence education and innovation since the field was created. It's only natural, then, that the School of Computer Science would offer the nation's first bachelor's degree in Artificial Intelligence, which started in Fall 2018. The new BSAI program gives students the in-depth knowledge needed to transform large amounts of data into actionable decisions. The program and its curriculum focus on how complex inputs, such as vision, language and huge databases, can be used to make decisions or enhance human capabilities. The curriculum includes coursework in computer science, math, statistics, computational modeling, machine learning and symbolic computation. Because Carnegie Mellon is devoted to AI for social good, students will also take courses in ethics and social responsibility, with the option to participate in independent study projects that change the world for the better, in areas like healthcare, transportation and education.
• 15-122 Principles of Imperative Computation: 10 units (students without credit or a waiver for 15-112, Fundamentals of Programming and Computer Science, must take 15-112 before 15-122)
• 15-150 Principles of Functional Programming: 10 units
• 15-210 Parallel and Sequential Data Structures and Algorithms: 12 units
• 15-213 Introduction to Computer Systems: 12 units
• 15-251 Great Ideas in Theoretical Computer Science: 12 units
Artificial Intelligence
All of the following three AI core courses:
• 07-180 Concepts in Artificial Intelligence: 5 units
• 15-281 Artificial Intelligence: Representation and Problem Solving: 12 units
• 10-315 Introduction to Machine Learning (SCS Majors): 12 units
plus one of the following AI core courses:
• 16-385 Computer Vision: 12 units

Software Development: A Practical Approach!

Hans-Petter Halvorsen, https://www.halvorsen.blog. Copyright © 2020. ISBN: 978-82-691106-0-9. Publisher Identifier: 978-82-691106.
Preface
The main goal of this document:
• To give you an overview of what software engineering is
• To take you beyond programming to engineering software
What is Software Development?
Developing modern, professional software today is a complex process. This document gives a brief overview of Software Development, with a focus on a practical approach.
So why do we need System Engineering? Here are some key factors:
• Understand Customer Requirements
  o What does the customer need? (They may not know it themselves!)
  o Transform customer requirements into working software
• Planning
  o How do we reach our goals?
  o Will we finish within the deadline?
  o Resources
  o What can go wrong?
• Implementation
  o What kind of platforms and architecture should be used?
  o Split your work into manageable pieces
• Quality and Performance
  o Make sure the software fulfills the customers’ needs
We will learn how to build good (i.e., high-quality) software, which includes:
• Requirements Specification
• Technical Design
• Good User Experience (UX)
• Improved Code Quality and Implementation
• Testing
• System Documentation
• User Documentation
• etc.
You will find additional resources on this web page: http://www.halvorsen.blog/documents/programming/software_engineering/
Information about the author: Hans-Petter Halvorsen currently works at the University of South-Eastern Norway.

Computer Programming and Database Management - Software Engineering Technology Major (SET)

Program Chair: Robert (Bob) Nields, MBA • Phone: (513) 569-1653
Co-Op Coordinator Chair: Noelle Grome, ME, MA • Phone: (513) 569-4693
The Computer Programming and Database Management - Software Engineering Technology Major (SET) focuses on the design, development, implementation, and maintenance of software solutions used in a variety of industries and organizations. Students gain practical knowledge and experience in the software development process and methods using relevant, current programming languages, databases, and database query languages. Students also gain knowledge of core math and science concepts and skills. Graduates earn an Associate of Applied Science degree and are prepared to enter the workforce as skilled software developers/computer programmers. Graduates may continue their education in a bachelor's degree program in computer science, engineering technology, or mathematics. Although some required courses are available through evening and/or online classes, most of the required courses for the Software Engineering Technology Major are scheduled as in-person classes offered Monday through Friday between 8 a.m. and 5 p.m.
GRADUATES ARE PREPARED TO:
• Design and write computer programs using the programming languages .NET, Python, Java, C, and C++
• Develop applications using the Object-Oriented Programming Methodology
Employment Options
GRADUATE STARTING SALARY PROJECTIONS: $40,000 to $60,000 annual salary
EMPLOYMENT OUTLOOK: Graduates of the Software Engineering Technology program are in demand by companies locally and nationally. According to the U.S. …
Education Options
STRONG TRANSFER HISTORY: Northern Kentucky University, University of Cincinnati, Western Governors University, Wilmington College, Wright State University

A Debate on Teaching Computing Science

At the ACM Computer Science Conference last February, Edsger Dijkstra gave an invited talk called "On the Cruelty of Really Teaching Computing Science." He challenged some of the basic assumptions on which our curricula are based and provoked a lot of discussion. The editors of Communications received several recommendations to publish his talk in these pages. His comments brought into the foreground some of the background of controversy that surrounds the issue of what belongs in the core of a computer science curriculum. To give full airing to the controversy, we invited Dijkstra to engage in a debate with selected colleagues, each of whom would contribute a short critique of his position, with Dijkstra himself making a closing statement. He graciously accepted this offer. We invited people from a variety of specialties, backgrounds, and interpretations to provide their … Strategic Defense Initiative. William Scherlis is known for his articulate advocacy of formal methods in computer science. M. H. van Emden is known for his contributions in programming languages and philosophical insights into science. Jacques Cohen is known for his work with programming languages and logic programming and is a member of the Editorial Panel of this magazine. Richard Hamming received the Turing Award in 1968 and is well known for his work in communications and coding theory. Richard M. Karp received the Turing Award in 1985 and is known for his contributions in the design of algorithms. Terry Winograd is well known for his early work in artificial intelligence and recent work in the principles of design. I am grateful to these people for participating in this debate and to Professor Dijkstra for creating the opening.