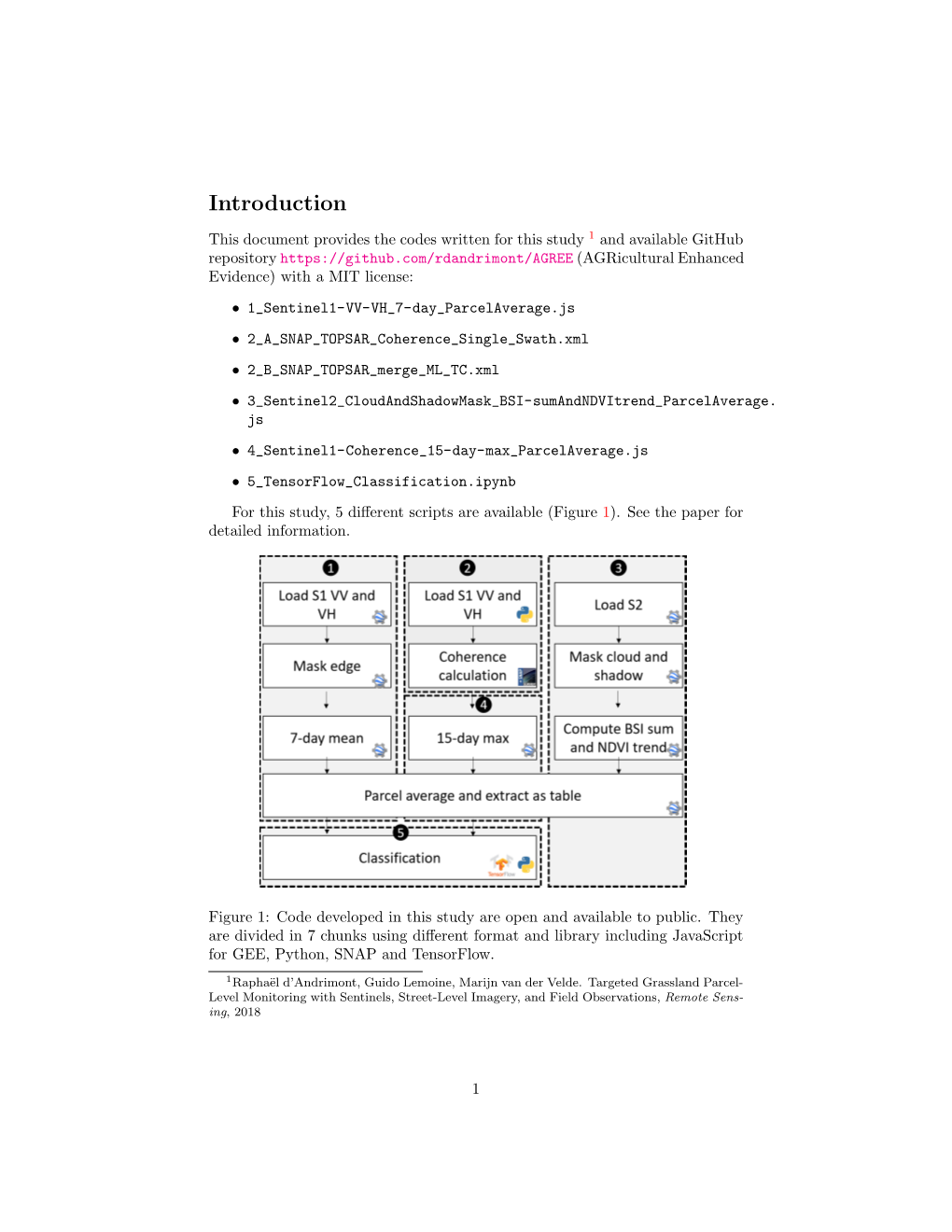

Introduction

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Hoogtebeelden Rond Nijkerk En Putten Een Nieuwe Kijk Op Het Landschap

Hoogtebeelden rond Nijkerk en Putten Een nieuwe kijk op het landschap Peter Bijvank In maart van 2014 kwam het Actueel Hoogtebestand Nederland 2 (AHN2) beschikbaar via internet. Een breed publiek heeft daarmee toegang gekregen tot een zeer gedetailleerd hoogtebestand van Nederland dat voor allerlei doeleinden gebruikt kan worden, waaronder landschapsonderzoek. Het AHN2 bestaat uit opnamen van het aardoppervlak die vanuit een vliegtuig met behulp van lasertechniek zijn gemaakt. Door deze techniek is het mogelijk tot op centimeters nauwkeurig de hoogte van het maaiveld in beeld te brengen. De hoogtekaarten geven een geheel nieuwe kijk op onze omgeving en laten details van het reliëf zien die van grote waarde zijn voor de bestudering van het landschap. In dit artikel een greep uit enkele beelden rond Nijkerk en Putten. Figuur 1: Overzicht van de Gelderse Vallei met links de Utrechtse heuvelrug en rechts het Veluwemassief. oogtekaarten zijn al veel langer beschikbaar als instrument voor o.a. (historisch)geograven. Het voorkomen van natuurlijke hoogteverschillen als H dekzandruggen, smeltwatergeulen, stuwwallen, etc. zijn van groot belang geweest voor de ontginning en bewoning van gebieden. Een gedetailleerd, inzichtelijk beeld van het reliëf van het landschap is daarom van groot belang bij landschapsonderzoek. Zeker wanneer dit beeld details laat zien van enkele centimeters in hoogteverschil waardoor ook antropogene invloeden als greppels, houtwallen, raatakkers, verhoogde akkers, etc. zichtbaar worden. Hoogtepuntenkaarten, geomorfologische kaarten of kaarten met isohypsen (hoogtelijnen) Peter Bijvank mei 2014 1 waren tot voor kort de enige bronnen om inzicht te krijgen in het reliëf van het landschap. Het detailniveau van deze kaarten is veelal te gering om een volledige vertaling naar de omgeving te kunnen maken. -

50 Bus Dienstrooster & Lijnroutekaart

50 bus dienstrooster & lijnkaart 50 Utrecht - Wageningen / Veenendaal Bekijken In Websitemodus De 50 buslijn (Utrecht - Wageningen / Veenendaal) heeft 14 routes. Op werkdagen zijn de diensturen: (1) Amerongen Via Zeist/Doorn: 23:02 (2) Doorn Via Zeist: 00:02 (3) Driebergen Via Doorn: 18:41 - 18:55 (4) Driebergen- Zeist Station: 00:32 - 18:49 (5) Leersum: 19:54 - 23:54 (6) Rhenen Via Zeist/Doorn: 23:02 (7) Utrecht Cs: 05:25 - 06:25 (8) Utrecht Via Doorn/Zeist: 05:38 - 23:33 (9) Utrecht Via Zeist: 05:40 - 06:39 (10) Veenendaal Stat. De Klomp: 20:25 - 23:25 (11) Veenendaal Via Doorn: 06:07 (12) Veenendaal Via Zeist/Doorn: 06:09 - 18:04 (13) Wageningen Via Zeist/Doorn: 05:54 - 23:32 Gebruik de Moovit-app om de dichtstbijzijnde 50 bushalte te vinden en na te gaan wanneer de volgende 50 bus aankomt. Richting: Amerongen Via Zeist/Doorn 50 bus Dienstrooster 39 haltes Amerongen Via Zeist/Doorn Dienstrooster Route: BEKIJK LIJNDIENSTROOSTER maandag Niet Operationeel dinsdag Niet Operationeel Utrecht, Cs Jaarbeurszijde (Perron C4) Stationshal, Utrecht woensdag Niet Operationeel Neude donderdag Niet Operationeel Potterstraat, Utrecht vrijdag Niet Operationeel Janskerkhof zaterdag 06:34 - 07:04 12 Janskerkhof, Utrecht zondag 23:02 Utrecht, Stadsschouwburg 24 Lucasbolwerk, Utrecht Utrecht, Wittevrouwen 107 Biltstraat, Utrecht 50 bus Info Route: Amerongen Via Zeist/Doorn Utrecht, Oorsprongpark (Perron B) Haltes: 39 176 Biltstraat, Utrecht Ritduur: 53 min Samenvatting Lijn: Utrecht, Cs Jaarbeurszijde De Bilt, Knmi (Perron C4), Neude, Janskerkhof, Utrecht, -

Utrecht CRFS Boundaries Options

City Region Food System Toolkit Assessing and planning sustainable city region food systems CITY REGION FOOD SYSTEM TOOLKIT TOOL/EXAMPLE Published by the Food and Agriculture Organization of the United Nations and RUAF Foundation and Wilfrid Laurier University, Centre for Sustainable Food Systems May 2018 City Region Food System Toolkit Assessing and planning sustainable city region food systems Tool/Example: Utrecht CRFS Boundaries Options Author(s): Henk Renting, RUAF Foundation Project: RUAF CityFoodTools project Introduction to the joint programme This tool is part of the City Region Food Systems (CRFS) toolkit to assess and plan sustainable city region food systems. The toolkit has been developed by FAO, RUAF Foundation and Wilfrid Laurier University with the financial support of the German Federal Ministry of Food and Agriculture and the Daniel and Nina Carasso Foundation. Link to programme website and toolbox http://www.fao.org/in-action/food-for-cities-programme/overview/what-we-do/en/ http://www.fao.org/in-action/food-for-cities-programme/toolkit/introduction/en/ http://www.ruaf.org/projects/developing-tools-mapping-and-assessing-sustainable-city- region-food-systems-cityfoodtools Tool summary: Brief description This tool compares the various options and considerations that define the boundaries for the City Region Food System of Utrecht. Expected outcome Definition of the CRFS boundaries for a specific city region Expected Output Comparison of different CRFS boundary options Scale of application City region Expertise required for Understanding of the local context, existing data availability and administrative application boundaries and mandates Examples of Utrecht (The Netherlands) application Year of development 2016 References - Tool description: This document compares the various options and considerations that define the boundaries for the Utrecht City Region. -

Schoolgids 18-19.Pdf

Schoolgids 2018-2019 Inhoud Inleiding ........................................................................................................................... 4 1. Algemeen ..................................................................................................................... 5 Passend onderwijs ................................................................................................................................... 5 Uitgangspunten ....................................................................................................................................... 5 Identiteit en levensbeschouwing ............................................................................................................. 6 2. Aanmelding en toelating ............................................................................................... 7 Aanmeldingsprocedure ........................................................................................................................... 7 Toelatingscriteria ..................................................................................................................................... 8 Commissie van begeleiding ..................................................................................................................... 8 Uitstroom ................................................................................................................................................. 8 Ondersteuning in het veld ...................................................................................................................... -

Orientalism and the Rhetoric of the Family: Javanese Servants in European Household Manuals and Children's Fiction1 Elsbeth L

O rientalism and the Rhetoric of the Family: Javanese Servants in European H ousehold Manuals and C hildren' s F iction1 Elsbeth Locher-Scholten Of all dominated groups in the former colonies, domestic servants were the most "sub altern." Silenced by the subservient nature of their work and the subordinated social class they came from, Indonesian or Javanese servants in the former Dutch East-Indies were neither expected nor allowed to speak for themselves. Neither did they acquire a voice through pressures in the labor market, as was the case with domestic servants in twentieth- century Europe.2 Because of the large numbers of Indonesian servants, the principle of supply and demand functioned to their disadvantage. For all these reasons, it is impossible to present these servants' historical voices and experiences directly from original source material. What we can do is reconstruct fragments of their social history from circumstantial evi dence (censuses). Moreover, in view of the quantity of fictional sources and the growing interest in the history of colonial mentalities, we can analyze the Dutch narratives which chronicle the colonizer-colonized relationship. It is possible to reconstruct pictures of Indo nesian servants by decoding these representations, although we should keep in mind that * A first draft of this article was presented to the Ninth Berkshire Conference of the History of Women, Vassar College, June 1993.1 want to thank the audience as well as Sylvia Vatuk, Rosemarie Buikema, Frances Gouda, Berteke Waaldijk and the anonymous reviewers of this journal for their useful comments on earlier drafts. 2 Since the Vassar historian Lucy Maynard Salmon published the first study of domestic service in the United States in 1890, the history of American and European domestic service has become a well-documented field of historical analysis. -

Indeling Van Nederland in 40 COROP-Gebieden Gemeentelijke Indeling Van Nederland Op 1 Januari 2019

Indeling van Nederland in 40 COROP-gebieden Gemeentelijke indeling van Nederland op 1 januari 2019 Legenda COROP-grens Het Hogeland Schiermonnikoog Gemeentegrens Ameland Woonkern Terschelling Het Hogeland 02 Noardeast-Fryslân Loppersum Appingedam Delfzijl Dantumadiel 03 Achtkarspelen Vlieland Waadhoeke 04 Westerkwartier GRONINGEN Midden-Groningen Oldambt Tytsjerksteradiel Harlingen LEEUWARDEN Smallingerland Veendam Westerwolde Noordenveld Tynaarlo Pekela Texel Opsterland Súdwest-Fryslân 01 06 Assen Aa en Hunze Stadskanaal Ooststellingwerf 05 07 Heerenveen Den Helder Borger-Odoorn De Fryske Marren Weststellingwerf Midden-Drenthe Hollands Westerveld Kroon Schagen 08 18 Steenwijkerland EMMEN 09 Coevorden Hoogeveen Medemblik Enkhuizen Opmeer Noordoostpolder Langedijk Stede Broec Meppel Heerhugowaard Bergen Drechterland Urk De Wolden Hoorn Koggenland 19 Staphorst Heiloo ALKMAAR Zwartewaterland Hardenberg Castricum Beemster Kampen 10 Edam- Volendam Uitgeest 40 ZWOLLE Ommen Heemskerk Dalfsen Wormerland Purmerend Dronten Beverwijk Lelystad 22 Hattem ZAANSTAD Twenterand 20 Oostzaan Waterland Oldebroek Velsen Landsmeer Tubbergen Bloemendaal Elburg Heerde Dinkelland Raalte 21 HAARLEM AMSTERDAM Zandvoort ALMERE Hellendoorn Almelo Heemstede Zeewolde Wierden 23 Diemen Harderwijk Nunspeet Olst- Wijhe 11 Losser Epe Borne HAARLEMMERMEER Gooise Oldenzaal Weesp Hillegom Meren Rijssen-Holten Ouder- Amstel Huizen Ermelo Amstelveen Blaricum Noordwijk Deventer 12 Hengelo Lisse Aalsmeer 24 Eemnes Laren Putten 25 Uithoorn Wijdemeren Bunschoten Hof van Voorst Teylingen -

Foodvalley: Climate for Innovation

FoodValley: climate for innovation A small region with a big ambition, FoodValley is located in the geographic centre of the Netherlands, with Wageningen UR serving as its figurehead. Partners from government, business and education have joined forces with a clear goal: to boost innovation in the agri-food cluster. The region has an advantageous position owing to its high concentration of academic and research institutions. By making connections between various players, the region’s momentum has increased, leading to new projects and thriving businesses. The region consists of eight municipalities: Wageningen, Ede, Barneveld, Nijkerk, Renswoude, Scherpenzeel, Veenendaal and Rhenen, with a population of 330,000 people living in a green area adjacent to the Randstad. Amsterdam, Rotterdam and Eindhoven are all located within one hour travel time. The direct intercity rail link with Schiphol airport offers high-speed travel for international businesspeople and visitors. Entrepreneurs The region has an excellent climate for entrepreneurs, with a strong work ethic. Many companies located here have an excellent international reputation. The NIZO food research institute in Ede has clients from all over the world. MARIN and MeteoGroup have greatly contributed to Wageningen’s climate of expertise. Located in Veenendaal, the food companies HatchTech, SanoRice, Jan Zandbergen and Docomar have a strong international portfolio. Veenendaal has also been chosen as a location by many ICT firms. Barneveld is the centre of a large industrialised poultry sector. Companies such as Moba, Jansen Poultry, BBS Food and Impex are part of an international network in this sector. Nijkerk distinguishes itself with a number of food production companies such as Struik, Bieze, Arla, Vreugdenhil, Denkavit and Festivaldi. -

Directions to Vintura from Amsterdam from Utrecht

Vintura Julianalaan 8 3743 JG Baarn [email protected] T: 035 54 33 540 Directions to Vintura From Amsterdam On the A1 motorway, take exit 10 (Soest/Baarn-Noord, N221) At the traffic lights, turn left (Baarn/Soest, N221) At the roundabout, continue straight. At the second roundabout, continue straight again (taking the second exit) and follow the signing Baarn / Soest After 3,000 meters, at the traffic lights, turn left onto the Luitenant Generaal van Heutszlaan After 370 meters, turn left onto the Julianalaan The office is directly on the corner on your left side From Utrecht Follow the A27 Hilversum/Almere Take exit A1 (Amsterdam/Amersfoort) In this exit, follow A1 direction Amersfoort Take exit 10 (Soest/Baarn-Noord, N221) At the traffic lights, turn left (Baarn/Soest, N221) At the roundabout, continue straight. At the second roundabout, continue straight again (taking the second exit) and follow the signing Baarn / Soest After 3,000 meters, at the traffic lights, turn left onto the Luitenant Generaal van Heutszlaan After 370 meters, turn left onto the Julianalaan The office is directly on the corner on your left side From Schiphol Take the A4/A10 (towards Amsterdam) and stay on the A4 until it joins the A10 Follow the A10 (Amsterdam ring road) in the direction of Amersfoort On the A1 motorway, take exit 10 (Soest/Baarn-Noord, N221) At the traffic lights, turn left (Baarn/Soest, N221) At the roundabout, continue straight. At the second roundabout, continue straight again (taking the second exit) and follow the -

101 Bus Dienstrooster & Lijnroutekaart

101 bus dienstrooster & lijnkaart 101 Harderwijk - Putten - Amersfoort Vathorst Bekijken In Websitemodus De 101 buslijn (Harderwijk - Putten - Amersfoort Vathorst) heeft 2 routes. Op werkdagen zijn de diensturen: (1) Amersfoort Vathorst Via Putten: 06:21 - 20:21 (2) Harderwijk Via Putten: 06:40 - 21:23 Gebruik de Moovit-app om de dichtstbijzijnde 101 bushalte te vinden en na te gaan wanneer de volgende 101 bus aankomt. Richting: Amersfoort Vathorst Via Putten 101 bus Dienstrooster 34 haltes Amersfoort Vathorst Via Putten Dienstrooster Route: BEKIJK LIJNDIENSTROOSTER maandag 06:21 - 20:21 dinsdag 06:21 - 20:21 Harderwijk, Station Stationsplein, Harderwijk woensdag 06:21 - 20:21 Harderwijk, Ziekenh. St. Jansdal donderdag 06:21 - 20:21 Westeinde, Harderwijk vrijdag 06:21 - 20:21 Harderwijk, Stadswei zaterdag Niet Operationeel 2 Kuilpad, Harderwijk zondag Niet Operationeel Harderwijk, De Roef Zuiderzeepad, Harderwijk Harderwijk, Ijsselmeerpad Stadswei, Harderwijk 101 bus Info Route: Amersfoort Vathorst Via Putten Harderwijk, Ragtimedreef Haltes: 34 Ragtimedreef, Harderwijk Ritduur: 60 min Samenvatting Lijn: Harderwijk, Station, Harderwijk, Harderwijk, Triasplein Ziekenh. St. Jansdal, Harderwijk, Stadswei, Waterplassteeg, Harderwijk Harderwijk, De Roef, Harderwijk, Ijsselmeerpad, Harderwijk, Ragtimedreef, Harderwijk, Triasplein, Harderwijk, Salentijnerhout Harderwijk, Salentijnerhout, Ermelo, Kolbaanweg, 2 Salentijnerhout, Harderwijk Ermelo, Lange Haeg, Ermelo, Veldwijkerweg, Ermelo, Dr. Van Dalelaan, Ermelo, Dirk Staalweg, Ermelo, Ermelo, -

Bijlage: Overzicht Gesubsidieerde Instellingen 2012 Invaliden

Bijlage: Overzicht gesubsidieerde instellingen 2012 1. Verenigingen voor amateursport Invaliden Sportvereniging Arnhem e.o. Atletiekvereniging Nijkerk (ISVA) Badmintonclub Hoevelaken Nederlandse Vereniging van Blinden en Badmintonclub Nijkerk Slechtzienden Basketballvereniging Sparta Speel-o-theek Eemland Bridgeclub Hoevelaken Bridgeclub Klaverslag Hoevelaken 3. Culturele amateurverenigingen Jeu de Boules vereniging De Nijeboulers Blue Basement Big Band Gymnastiekvereniging DOTO Chr. Gemengd Koor Con Amore Gymnastiekvereniging Hellas Chr. Streekmannenkoor Noordwest Veluwe Handbalvereniging Voice Chr. Gemengde Zangvereniging Excelsior Hengelsportvereniging Hoop op Geluk Jongerenkoor Testify Hoevelakens Schaakgenootschap Majoretten Showteam Nijkerk Hockeyclub Nijkerk Muziekverenging Concordia Korfbalvereniging Sparta Nijkerks Stedelijk Fanfare Corps (NSCF) Korfbalvereniging Telstar Nijkerkse Klokkenspelvereniging Rijvereniging De Laak Smartlappenkoor uit het leven gegrepen Mixed Hockeyclub Hoevelaken Stichting Voices of Liberty Nijkerkse Bridgeclub Toneelvereniging Het Gebeuren Nijkerkse Crossclub Volksdansgroep Rav Brachot Nijkerkse Gymnastiekvereniging Excelsior Nijkerkse Sport Club 4. Nationale feest- en gedenkdagen Nijkerkse Tennis Club Oranjeverenging Nijkerk Nijkerkse Volleybal Club Oranjevereniging Wilhelmina (Hoevelaken) Nijkerkse Watersportvereniging Flevo Ponyclub De Waaghalsjes 5. Stedenbanden Postduivenvereniging Nijkerk Stichting Stedenband Nijkerk-Scenectady Schaats en Skeelervereniging Nijkerk Stichting Stedenband Hoevelaken -

Journeying Towards Multiculturalism? the Relationship Between

Journal of Religion in Europe Journal of Religion in Europe 3 (2010) 125–154 brill.nl/jre Journeying Towards Multiculturalism? Th e Relationship between Immigrant Christians and Dutch Indigenous Churches Martha Th . Frederiks Centre IIMO/Department of Th eology and Religious Studies Faculty of Humanities, Utrecht University Heidelberglaan 2, 3584 CS Utrecht, Th e Netherlands [email protected] Nienke Pruiksma Centre IIMO/Department of Th eology and Religious Studies Faculty of Humanities, Utrecht University Heidelberglaan 2, 3584 CS Utrecht, Th e Netherlands [email protected] Abstract Due to globalisation and migration western Europe has become home to adher- ents of many diff erent religions. Th is article focuses on one aspect of the changes on the religious scene; it investigates in what way immigration—and Christian immigrant religiosity particularly—has aff ected the structure and identity of the Dutch Roman Catholic Church and the Protestant Church in the Netherlands. We argue that the Roman Catholic Church in the Netherlands has been able to accommodate a substantial group of immigrants whilst the PCN seems to encoun- ter more problems responding to the increasingly multicultural society. We con- clude that both churches, however, in structure and theology, remain largely unaff ected by the infl ux of immigrant Christians. Keywords immigration , Christianity , western Europe , multiculturalism , churches , identity , Netherlands , Protestantism © Koninklijke Brill NV, Leiden, 2010 DOI 10.1163/187489209X478328 126 M. Th . Frederiks, N. Pruiksma / Journal of Religion in Europe 3 (2010) 125–154 1. Introduction In the present age of increased migration the famous words by Heraclitus, panta rhei (everything is moving), could be rephrased as pantes rheousin , everybody is moving. -

Laag Sociaal-Economisch Niveau

Zuid Schets van het gezondheids-, geluks- en welvaartsniveau en de rol van de Eerstelijn Erik Asbreuk, Voorzitter EMC Nieuwegein, Huisarts Gezondheidscentrum Mondriaanlaan 'Nieuwegein 2020: gezond, gelukkig en welvarend?' Rapport Rabobank 2010: Nieuwegein, de werkplaats van Midden Nederland: Nieuwegein heeft een laag sociaal-economisch niveau Zuid % lopende WW uitkeringen op 1 januari (tov potentiële beroepsbevolking) Amersfoort Baarn Bunnik Bunschoten De Bilt De Ronde Venen Eemnes Gemiddelde Houten IJsselstein Leusden Lopik Montfoort Nieuwegein Oudewater Renswoude Rhenen Soest Stichtse Vecht Utrechtse Heuvelrug Veenendaal Vianen Wijk bij Duurstede Woerden Woudenberg Zeist 0 0,5 1 1,5 2 2,5 % lopende WW uitkeringen op 1 januari (tov potentiële beroepsbevolking) Amersfoort Baarn Bunnik Bunschoten De Bilt De Ronde Venen Eemnes Gemiddelde Houten IJsselstein Leusden Lopik Montfoort Nieuwegein Oudewater Renswoude Rhenen Soest Stichtse Vecht Utrechtse Heuvelrug Veenendaal Vianen Wijk bij Duurstede Woerden Woudenberg Zeist 0 0,5 1 1,5 2 2,5 % WAO ontvangers (tov potentiële beroepsbevolking) Amersfoort Baarn Bunnik Bunschoten De Bilt De Ronde Venen Eemnes Gemiddelde Houten IJsselstein Leusden Lopik Montfoort Nieuwegein Oudewater Renswoude Rhenen Soest Stichtse Vecht Utrechtse Heuvelrug Veenendaal Vianen Wijk bij Duurstede Woerden Woudenberg Zeist 0 0,5 1 1,5 2 2,5 3 3,5 4 4,5 5 % WAO ontvangers (tov potentiële beroepsbevolking) Amersfoort Baarn Bunnik Bunschoten De Bilt De Ronde Venen Eemnes Gemiddelde Houten IJsselstein Leusden Lopik Montfoort Nieuwegein Oudewater Renswoude Rhenen Soest Stichtse Vecht Utrechtse Heuvelrug Veenendaal Vianen Wijk bij Duurstede Woerden Woudenberg Zeist 0 0,5 1 1,5 2 2,5 3 3,5 4 4,5 5 Laag sociaal-economisch niveau • In vergelijking met de regio is het sociaal economisch niveau van de bevolking van Nieuwegein laag.