K2/Kleisli and GUS: Experiments in Integrated Access to Genomic Data Sources

Total pages: 16

File type: PDF, size: 1020 Kb


Recommended publications

-

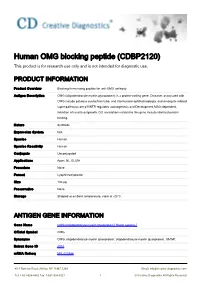

Human OMG Blocking Peptide (CDBP2120) This Product Is for Research Use Only and Is Not Intended for Diagnostic Use

Human OMG blocking peptide (CDBP2120). This product is for research use only and is not intended for diagnostic use.

PRODUCT INFORMATION
Product Overview: Blocking/Immunizing peptide for anti-OMG antibody
Antigen Description: OMG (oligodendrocyte myelin glycoprotein) is a protein-coding gene. Diseases associated with OMG include patulous eustachian tube and internuclear ophthalmoplegia, and among its related super-pathways are "p75NTR regulates axonogenesis" and "Development MAG-dependent inhibition of neurite outgrowth". GO annotations related to this gene include identical protein binding.
Nature: Synthetic
Expression System: N/A
Species: Human
Species Reactivity: Human
Conjugate: Unconjugated
Applications: Apuri, BL, ELISA
Procedure: None
Format: Lyophilized powder
Size: 100 μg
Preservative: None
Storage: Shipped at ambient temperature, store at -20°C.

ANTIGEN GENE INFORMATION
Gene Name: OMG oligodendrocyte myelin glycoprotein [Homo sapiens]
Official Symbol: OMG
Synonyms: OMG; oligodendrocyte myelin glycoprotein; oligodendrocyte-myelin glycoprotein; OMGP
Entrez Gene ID: 4974
mRNA Refseq: NM_002544
Protein Refseq: NP_002535
UniProt ID: P23515
Chromosome Location: 17q11-q12
Pathway: Axonal growth inhibition (RHOA activation), organism-specific biosystem; Signal Transduction, organism-specific biosystem; Signalling by NGF, organism-specific biosystem; p75 NTR receptor-mediated signalling, organism-specific biosystem; p75(NTR)-mediated signaling, organism-specific biosystem; p75NTR regulates axonogenesis, organism-specific biosystem
Function: identical protein binding

45-1 Ramsey Road, Shirley, NY 11967, USA. Email: [email protected] Tel: 1-631-624-4882 Fax: 1-631-938-8221. © Creative Diagnostics. All Rights Reserved.

CALIFORNIA STATE UNIVERSITY, NORTHRIDGE Bioinformatic

CALIFORNIA STATE UNIVERSITY, NORTHRIDGE. Bioinformatic Comparison of the EVI2A Promoter and Coding Regions. A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Biology by Max Weinstein, May 2020.

The thesis of Max Weinstein is approved: Professor Rheem D. Medh; Professor Virginia Oberholzer Vandergon; Professor Cindy Malone, Chair. California State University Northridge.

Table of Contents: Signature Page; List of Figures; Abstract; Introduction; Ecotropic Viral Integration Site 2A, a Gene within a Gene; Materials and Methods; PCR and Cloning of Recombinant Plasmid; Transformation and Cell Culture; Generation of Deletion Constructs; Transfection and Luciferase Assay; Identification of Transcription Factor Binding Sites; Determination of Region for Analysis; Multiple Sequence Alignment (MSA); Model Testing; Tree Construction; Promoter and CDS Conserved Motif Search; Results and Discussion; Choice of Species for Analysis; Mapping of Potential Transcription Factor Binding Sites; Confirmation of Plasmid Generation through Gel Electrophoresis; Analysis of Deletion Constructs by Transient Transfection; EVI2A Coding DNA Sequence Phylogenetics; EVI2A Promoter Phylogenetics; EVI2A Conserved Leucine Zipper; EVI2A Conserved Casein kinase II phosphorylation site; EVI2A Conserved Sox-5 Binding Site; EVI2A Conserved HLF Binding Site; EVI2A Conserved cREL Binding Site; EVI2A Conserved CREB Binding Site; Summary; Appendix: Figures; Literature Cited.

List of Figures: Figure 1. EVI2A is nested within the gene NF1; Figure 2. Putative Transcriptions Start Site; Figure 3. Gel Electrophoresis of pGC Blue cloned, Restriction Digested Plasmid; Figure 4.

DiscoverySpace: an Interactive Data Analysis Application

Open Access. Software. Robertson et al., 2007, Volume 8, Issue 1, Article R6.

DiscoverySpace: an interactive data analysis application. Neil Robertson, Mehrdad Oveisi-Fordorei, Scott D Zuyderduyn, Richard J Varhol, Christopher Fjell, Marco Marra, Steven Jones and Asim Siddiqui. Address: Canada's Michael Smith Genome Sciences Centre, British Columbia Cancer Research Centre (BCCRC), British Columbia Cancer Agency (BCCA), Vancouver, BC, Canada. Correspondence: Neil Robertson. Email: [email protected]. Mehrdad Oveisi-Fordorei. Email: [email protected]. Asim Siddiqui. Email: [email protected]

Published: 08 January 2007. Received: 24 March 2006. Revised: 4 July 2006. Accepted: 8 January 2007. Genome Biology 2007, 8:R6 (doi:10.1186/gb-2007-8-1-r6). The electronic version of this article is the complete one and can be found online at http://genomebiology.com/2007/8/1/R6

© 2007 Robertson et al.; licensee BioMed Central Ltd. This is an open access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

DiscoverySpace, a graphical application for bioinformatics data analysis, in particular analysis of SAGE data, is described.

Abstract: DiscoverySpace is a graphical application for bioinformatics data analysis. Users can seamlessly traverse references between biological databases and draw together annotations in an intuitive tabular interface. Datasets can be compared using a suite of novel tools to aid in the identification of significant patterns. DiscoverySpace is of broad utility and its particular strength is in the analysis of serial analysis of gene expression (SAGE) data.

In This Table Protein Name, Uniprot Code, Gene Name P-Value

Supplementary Table S1: In this table, protein name, UniProt code, gene name, p-value and fold change (FC) for each comparison are shown for 299 of the 301 significantly regulated proteins found in both comparisons (p-value < 0.01, fold change (FC) > +/-0.37), ALS versus control and FTLD-U versus control. Two uncharacterized proteins have been excluded from this list.

| Protein name | Uniprot | Gene name | p value FTLD-U | FC FTLD-U | p value ALS | FC ALS |
|---|---|---|---|---|---|---|
| Cytochrome b-c1 complex subunit 7 | P14927 | UQCRB | 1.534E-03 | -1.591E+00 | 6.005E-04 | -1.639E+00 |
| NADH dehydrogenase [ubiquinone] 1 alpha subcomplex subunit 7 | O95182 | NDUFA7 | 4.127E-04 | -9.471E-01 | 3.467E-05 | -1.643E+00 |
| NADH dehydrogenase [ubiquinone] 1 alpha subcomplex subunit 2 | O43678 | NDUFA2 | 3.230E-04 | -9.145E-01 | 2.113E-04 | -1.450E+00 |
| NADH dehydrogenase [ubiquinone] iron-sulfur protein 5 | O43920 | NDUFS5 | 1.769E-04 | -8.829E-01 | 3.235E-05 | -1.007E+00 |
| ARF GTPase-activating protein GIT1 | A0A0C4DGN6 | GIT1 | 1.306E-03 | -8.810E-01 | 1.115E-03 | -7.228E-01 |
| Methylglutaconyl-CoA hydratase, mitochondrial | Q13825 | AUH | 6.097E-04 | -7.666E-01 | 5.619E-06 | -1.178E+00 |
| ADP/ATP translocase 1 | P12235 | SLC25A4 | 6.068E-03 | -6.095E-01 | 3.595E-04 | -1.011E+00 |
| MIC | J3QTA6 | CHCHD6 | 1.090E-04 | -5.913E-01 | 2.124E-03 | -5.948E-01 |
| MIC | J3QTA6 | CHCHD6 | 1.090E-04 | -5.913E-01 | 2.124E-03 | -5.948E-01 |
| Protein kinase C and casein kinase substrate in neurons protein 1 | Q9BY11 | PACSIN1 | 3.837E-03 | -5.863E-01 | 3.680E-06 | -1.824E+00 |
| Tubulin polymerization-promoting protein | O94811 | TPPP | 6.466E-03 | -5.755E-01 | 6.943E-06 | -1.169E+00 |
| MIC | C9JRZ6 | CHCHD3 | 2.912E-02 | -6.187E-01 | 2.195E-03 | -9.781E-01 |
| Mitochondrial 2- | | | | | | |

Genomics and Risk Assessment | Mini-Monograph

Genomics and Risk Assessment | Mini-Monograph

Database Development in Toxicogenomics: Issues and Efforts. William B. Mattes,1 Syril D. Pettit,2 Susanna-Assunta Sansone,3 Pierre R. Bushel,4 and Michael D. Waters4. 1Pfizer Inc, Groton, Connecticut, USA; 2ILSI Health and Environmental Sciences Institute, Washington, DC, USA; 3European Molecular Biology Laboratory–European Bioinformatics Institute, Hinxton, United Kingdom; 4National Center for Toxicogenomics, National Institute of Environmental Health Sciences, National Institutes of Health, Department of Health and Human Services, Research Triangle Park, North Carolina, USA

The marriage of toxicology and genomics has created not only opportunities but also novel informatics challenges. As with the larger field of gene expression analysis, toxicogenomics faces the problems of probe annotation and data comparison across different array platforms. Toxicogenomics studies are generally built on standard toxicology studies generating biological end point data, and as such, one goal of toxicogenomics is to detect relationships between changes in gene expression and in those biological parameters. These challenges are best addressed through data collection into a well-designed toxicogenomics database. A successful publicly accessible toxicogenomics database will serve as a repository for data sharing and as a resource for analysis, data mining, and discussion.

thousands of defined probes, each of which is intended to detect a single mRNA molecule. The mRNA sample is labeled and hybridized to the microarray such that the signal at a given probe is related to the amount of that particular mRNA in the sample. This readout characteristic makes microarray-based transcript profiling particularly appealing because the identities of the

Gene Expression Specification

Gene Expression Specification October 2003 Version 1.1 formal/03-10-01 An Adopted Specification of the Object Management Group, Inc. Copyright © 2002, EMBL-EBI (European Bioinformatics Institute) Copyright © 2003 Object Management Group Copyright © 2002, Rosetta Inpharmatics USE OF SPECIFICATION - TERMS, CONDITIONS & NOTICES The material in this document details an Object Management Group specification in accordance with the terms, conditions and notices set forth below. This document does not represent a commitment to implement any portion of this specification in any company's products. The information contained in this document is subject to change without notice. LICENSES The companies listed above have granted to the Object Management Group, Inc. (OMG) a nonexclusive, royalty-free, paid up, worldwide license to copy and distribute this document and to modify this document and distribute copies of the modified version. Each of the copyright holders listed above has agreed that no person shall be deemed to have infringed the copyright in the included material of any such copyright holder by reason of having used the specification set forth herein or having conformed any computer software to the specification. 
Subject to all of the terms and conditions below, the owners of the copyright in this specification hereby grant you a fully-paid up, non-exclusive, nontransferable, perpetual, worldwide license (without the right to sublicense), to use this specification to create and distribute software and special purpose specifications that are based upon this specification, and to use, copy, and distribute this specification as provided under the Copyright Act; provided that: (1) both the copyright notice identified above and this permission notice appear on any copies of this specification; (2) the use of the specifications is for informational purposes and will not be copied or posted on any network computer or broadcast in any media and will not be otherwise resold or transferred for commercial purposes; and (3) no modifications are made to this specification.

UC Irvine UC Irvine Electronic Theses and Dissertations

UC Irvine Electronic Theses and Dissertations. Title: Generation and application of a chimeric model to examine human microglia responses to amyloid pathology. Permalink: https://escholarship.org/uc/item/38g5s0sj Author: Hasselmann, Jonathan. Publication Date: 2021. Supplemental Material: https://escholarship.org/uc/item/38g5s0sj#supplemental Peer reviewed | Thesis/dissertation. eScholarship.org, powered by the California Digital Library, University of California.

UNIVERSITY OF CALIFORNIA, IRVINE. Generation and application of a chimeric model to examine human microglia responses to amyloid pathology. DISSERTATION submitted in partial satisfaction of the requirements for the degree of DOCTOR OF PHILOSOPHY in Biological Sciences by Jonathan Hasselmann. Dissertation Committee: Associate Professor Mathew Blurton-Jones, Chair; Associate Professor Kim Green; Professor Brian Cummings. 2021.

Portions of the Introduction and Chapter 2 © 2020 Wiley Periodicals, Inc. Chapter 1 and a portion of Chapter 2 © 2019 Elsevier Inc. All other materials © 2021 Jonathan Hasselmann.

DEDICATION. To Nikki and Isla: During this journey, I have been fortunate enough to have the love and support of two of the strongest ladies I have ever met. I can say without a doubt that I would not have made it here without the two of you standing behind me. I love you both with all my heart and this achievement belongs to the two of you just as much as it belongs to me. Thank you for always having my back.

TABLE OF CONTENTS: LIST OF FIGURES

Neurofibromatosis I and Multiple Sclerosis Christina Bergqvist1,2, François Hemery3, Salah Ferkal2,4 and Pierre Wolkenstein1,2,4*

Bergqvist et al. Orphanet Journal of Rare Diseases (2020) 15:186 https://doi.org/10.1186/s13023-020-01463-z LETTER TO THE EDITOR. Open Access. Neurofibromatosis I and multiple sclerosis. Christina Bergqvist, François Hemery, Salah Ferkal and Pierre Wolkenstein.

Abstract: Neurofibromatosis 1 (NF1) is one of the most common autosomal dominant genetic disorders with a birth incidence as high as 1:2000. It is caused by mutations in the NF1 gene on chromosome 17 which encodes neurofibromin, a regulator of neuronal differentiation. While NF1 individuals are predisposed to develop benign and malignant nervous system tumors, various non-tumoral neurological conditions including multiple sclerosis (MS) have also been reported to occur more frequently in NF1. The number of epidemiologic studies on MS in NF1 individuals is very limited. The aim of this study was to determine the estimated population proportion of MS in NF1 patients followed in our Referral Centre for Neurofibromatosis using the Informatics for Integrated Biology and the Bedside (i2b2) platform to extract information from the hospital’s electronic health records. We found a total of 1507 patients with confirmed NF1, aged 18 years (y) and above (mean age 39.2 y, range 18–88 y; 57% women). Five NF1 individuals were found to have MS, yielding an estimated population proportion of 3.3 per 1000 (0.0033, 95% Confidence Interval 0.0014–0.0077). The median age at diagnosis was 45 y (range 28–49 y). Three patients had relapsing-remitting MS and two patients had secondary progressive MS. Patients with NF1 were found to be twice as likely to develop MS as the general population in France (odds ratio 2.2); however, this result was not statistically significant (95% Confidence Interval 0.91–5.29).
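The proportion quoted in this abstract can be checked with a few lines of arithmetic. The sketch below recomputes 5 cases out of 1507 patients and a 95% interval. The choice of the Wilson score interval is an assumption: the excerpt does not say which method the authors used, and Wilson is shown only because it happens to agree with the reported bounds. The function name `wilson_interval` and the fixed `z = 1.96` are illustrative.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 is roughly 95%)."""
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


# Counts taken from the abstract: 5 MS cases among 1507 NF1 patients.
cases, cohort = 5, 1507
proportion = cases / cohort
low, high = wilson_interval(cases, cohort)

# Assumed method (Wilson); prints: 3.3 per 1000 (95% CI 0.0014-0.0077)
print(f"{proportion * 1000:.1f} per 1000 (95% CI {low:.4f}-{high:.4f})")
```

The odds ratio against the general French population cannot be rechecked the same way, because the excerpt does not give the comparison counts.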

PB #ISAG2017 1 @Isagofficial #ISAG2017 #ISAG2017

ABSTRACT MINI PROGRAMME BOOK. O’Brien Centre for Science and O’Reilly Hall, University College Dublin, Dublin, Ireland. www.isag.us/2017 @isagofficial #ISAG2017

Topics: Bioinformatics · Comparative Genomics · Computational Biology · Epigenetics · Functional Genomics · Genome Diversity · Genome Sequencing · Immunogenetics · Integrative Genomics · Microbiomics · Population Genomics · Systems Biology · Genetic Markers and Selection · Genetics and Disease · Gene Editing

Contents: ORAL PRESENTATIONS; Animal Forensic Genetics Workshop; Applied Genetics and Genomics in Other Species of Economic Importance; Domestic Animal Sequencing and Annotation; Genome Edited Animals; Horse Genetics and Genomics; Avian Genetics and Genomics; Comparative MHC Genetics: Populations and Polymorphism; Equine Genetics and Thoroughbred Parentage Testing Workshop; Genetics of Immune Response and Disease Resistance; ISAG-FAO Genetic Diversity; Ruminant Genetics and Genomics; Animal Epigenetics; Cattle Molecular Markers and Parentage Testing; Companion Animal Genetics and Genomics; Microbiomes; Pig Genetics and Genomics; Novel, Groundbreaking Research/Methodology Presentation; Applied Genetics of Companion Animals; Applied Sheep and Goat Genetics; Comparative and Functional Genomics; Genetics

(NF1) As a Breast Cancer Driver

INVESTIGATION

Comparative Oncogenomics Implicates the Neurofibromin 1 Gene (NF1) as a Breast Cancer Driver

Marsha D. Wallace,*,† Adam D. Pfefferle,‡,§,1 Lishuang Shen,*,1 Adrian J. McNairn,* Ethan G. Cerami,** Barbara L. Fallon,* Vera D. Rinaldi,* Teresa L. Southard,*,†† Charles M. Perou,‡,§,‡‡ and John C. Schimenti*,†,§§,2

*Department of Biomedical Sciences, †Department of Molecular Biology and Genetics, ††Section of Anatomic Pathology, and §§Center for Vertebrate Genomics, Cornell University, Ithaca, New York 14853, ‡Department of Pathology and Laboratory Medicine, §Lineberger Comprehensive Cancer Center, and ‡‡Department of Genetics, University of North Carolina, Chapel Hill, North Carolina 27514, and **Memorial Sloan-Kettering Cancer Center, New York, New York 10065

ABSTRACT Identifying genomic alterations driving breast cancer is complicated by tumor diversity and genetic heterogeneity. Relevant mouse models are powerful for untangling this problem because such heterogeneity can be controlled. Inbred Chaos3 mice exhibit high levels of genomic instability leading to mammary tumors that have tumor gene expression profiles closely resembling mature human mammary luminal cell signatures. We genomically characterized mammary adenocarcinomas from these mice to identify cancer-causing genomic events that overlap common alterations in human breast cancer. Chaos3 tumors underwent recurrent copy number alterations (CNAs), particularly deletion of the RAS inhibitor Neurofibromin 1 (Nf1) in nearly all cases. These overlap with human CNAs including NF1, which is deleted or mutated in 27.7% of all breast carcinomas. Chaos3 mammary tumor cells exhibit RAS hyperactivation and increased sensitivity to RAS pathway inhibitors. These results indicate that spontaneous NF1 loss can drive breast cancer. This should be informative for treatment of the significant fraction of patients whose tumors bear NF1 mutations.

Loci for Primary Ciliary Dyskinesia Map to Chromosome 16p12.1-12.2 and

LETTER TO JMG. J Med Genet: first published as 10.1136/jmg.2003.014084 on 1 March 2004.

Loci for primary ciliary dyskinesia map to chromosome 16p12.1-12.2 and 15q13.1-15.1 in Faroe Islands and Israeli Druze genetic isolates. D Jeganathan*, R Chodhari*, M Meeks, O Færoe, D Smyth, K Nielsen, I Amirav, A S Luder, H Bisgaard, R M Gardiner, E M K Chung, H M Mitchison. J Med Genet 2004;41:233–240. doi: 10.1136/jmg.2003.014084

Primary ciliary dyskinesia (PCD; Immotile cilia syndrome; OMIM 242650) is an autosomal recessive disorder resulting from dysmotility of cilia and sperm flagella.1 Cilia and flagella function either to create circulation of fluid over a stationary cell surface or to propel a cell through fluid.2,3 These related structures are highly complex organelles composed of over 200 different polypeptides.4,5 The core or axoneme of cilia and flagella comprises a bundle of microtubules and many associated proteins. The microtubules are formed from α and β tubulin protofilaments and are arranged in a well recognised ‘9+2’ pattern: nine peripheral microtubule doublets in a ring connected around a central pair of microtubules by radial spoke proteins.

Key points: Primary ciliary dyskinesia is an autosomal recessive disorder characterised by recurrent sinopulmonary infections, bronchiectasis, and subfertility, due to dysfunction of the cilia. Fifty percent of patients with primary ciliary dyskinesia have defects of laterality (situs inversus) and this association is known as Kartagener syndrome. Primary ciliary dyskinesia has an estimated incidence of 1 in 20 000 live births.

The Role of Co-Deleted Genes in Neurofibromatosis Type 1

Genes. Article.

The Role of Co-Deleted Genes in Neurofibromatosis Type 1 Microdeletions: An Evolutive Approach. Larissa Brussa Reis 1,2, Andreia Carina Turchetto-Zolet 2, Maievi Fonini 1, Patricia Ashton-Prolla 1,2,3 and Clévia Rosset 1,*

1 Laboratório de Medicina Genômica, Centro de Pesquisa Experimental, Hospital de Clínicas de Porto Alegre, Porto Alegre, Rio Grande do Sul 90035-903, Brazil; [email protected] (L.B.R.); [email protected] (M.F.); [email protected] (P.A.-P.) 2 Programa de Pós-Graduação em Genética e Biologia Molecular, Departamento de Genética, UFRGS, Porto Alegre, Rio Grande do Sul 91501-970, Brazil; [email protected] 3 Serviço de Genética Médica do Hospital de Clínicas de Porto Alegre (HCPA), Porto Alegre, Rio Grande do Sul 90035-903, Brazil * Correspondence: [email protected]; Tel.: +55-51-3359-7661

Received: 28 June 2019; Accepted: 16 September 2019; Published: 24 October 2019

Abstract: Neurofibromatosis type 1 (NF1) is a cancer predisposition syndrome that results from dominant loss-of-function mutations mainly in the NF1 gene. Large rearrangements are present in 5–10% of affected patients, generally encompass NF1 neighboring genes, and are correlated with a more severe NF1 phenotype. Evident genotype–phenotype correlations and the importance of the co-deleted genes are difficult to establish. In our study we employed an evolutionary approach to provide further insights into the understanding of the fundamental function of genes that are co-deleted in subjects with NF1 microdeletions. Our goal was to assess the ortholog and paralog relationships of these genes in primates and verify whether purifying or positive selection is acting on these genes.