Accelerating Convolutional Neural Network by Exploiting Sparsity on GPUs

Weizhi Xu, Shengyu Fan, Hui Yu and Xin Fu, Member, IEEE

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Does Your Hardware Support OpenCL? - 12-29-2011 by Vincent - StreamComputing

Does your hardware support OpenCL? by vincent – Thursday, December 29, 2011

http://streamcomputing.eu/blog/2011-12-29/opencl-hardware-support/

Does your computer have OpenCL-capable hardware? Read on and find out…

For people who only want to run OpenCL software and have recent hardware, just read this paragraph. If you have recent drivers for your GPU, you can be sure OpenCL is already supported and you can run OpenCL-capable software. NVIDIA has supported OpenCL 1.1 since driver version 280.13, so if you need OpenCL 1.1, make sure you have this version or later. If you want to use Intel processors and you don’t have an AMD GPU installed, you need to download the Intel OpenCL runtime. If you want to know whether your x86 device is supported, you’ll find answers in this article.

It is often unclear how OpenCL works on CPUs. If you have an 8-core processor with two hardware threads per core, it is usually understood that 16 instruction pipelines are possible. OpenCL takes care of this threading, but it also uses the parallelism provided by the SSE and AVX extensions. I talked more about this here and here. This means that an 8-core processor with AVX can compute 8 times 32 bytes (8*8 floats or 8*4 doubles) in parallel. You could see it as parallelism of parallelism. SSE was designed with multimedia operations in mind, but has enough to be used with OpenCL.
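The AVX arithmetic quoted in this snippet (8 cores, each working on one 32-byte register) can be checked with a few lines of Python. This is only a minimal sketch: the function names are hypothetical, and it assumes 256-bit AVX registers, 32-bit floats and 64-bit doubles, and counts SIMD lanes across cores without modelling hyper-threading.

```python
# Assumed widths (not taken from the article beyond "32 bytes" per AVX register).
AVX_REGISTER_BITS = 256   # 32 bytes
FLOAT_BITS = 32
DOUBLE_BITS = 64


def lanes_per_register(register_bits: int, element_bits: int) -> int:
    """Number of elements that fit in one SIMD register."""
    if element_bits <= 0 or register_bits % element_bits != 0:
        raise ValueError("element size must evenly divide the register size")
    return register_bits // element_bits


def elements_in_parallel(cores: int, register_bits: int, element_bits: int) -> int:
    """Elements processed at once if every core works on one full register."""
    return cores * lanes_per_register(register_bits, element_bits)


floats = elements_in_parallel(8, AVX_REGISTER_BITS, FLOAT_BITS)
doubles = elements_in_parallel(8, AVX_REGISTER_BITS, DOUBLE_BITS)
print(f"8 cores x AVX: {floats} floats or {doubles} doubles in parallel")
```

With the assumed widths this reproduces the article's figures of 8*8 floats or 8*4 doubles.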

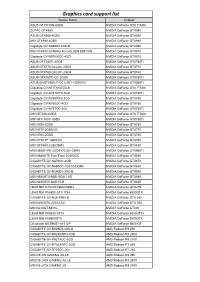

Graphics Card Support List

Graphics card support list

| Device Name | Chipset |
| --- | --- |
| ASUS GTXTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| ZOTAC GTX980 | NVIDIA GeForce GTX980 |
| ASUS GTX980-4GD5 | NVIDIA GeForce GTX980 |
| MSI GTX980-4GD5 | NVIDIA GeForce GTX980 |
| Gigabyte GV-N980D5-4GD-B | NVIDIA GeForce GTX980 |
| MSI GTX970 GAMING 4G GOLDEN EDITION | NVIDIA GeForce GTX970 |
| Gigabyte GV-N970IXOC-4GD | NVIDIA GeForce GTX970 |
| ASUS GTX780TI-3GD5 | NVIDIA GeForce GTX780Ti |
| ASUS GTX770-DC2OC-2GD5 | NVIDIA GeForce GTX770 |
| ASUS GTX760-DC2OC-2GD5 | NVIDIA GeForce GTX760 |
| ASUS GTX750TI-OC-2GD5 | NVIDIA GeForce GTX750Ti |
| ASUS ENGTX560-Ti-DCII/2D1-1GD5/1G | NVIDIA GeForce GTX560Ti |
| Gigabyte GV-NTITAN-6GD-B | NVIDIA GeForce GTX TITAN |
| Gigabyte GV-N78TWF3-3GD | NVIDIA GeForce GTX780Ti |
| Gigabyte GV-N780WF3-3GD | NVIDIA GeForce GTX780 |
| Gigabyte GV-N760OC-4GD | NVIDIA GeForce GTX760 |
| Gigabyte GV-N75TOC-2GI | NVIDIA GeForce GTX750Ti |
| MSI NTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| MSI GTX 780Ti 3GD5 | NVIDIA GeForce GTX780Ti |
| MSI N780-3GD5 | NVIDIA GeForce GTX780 |
| MSI N770-2GD5/OC | NVIDIA GeForce GTX770 |
| MSI N760-2GD5 | NVIDIA GeForce GTX760 |
| MSI N750 TF 1GD5/OC | NVIDIA GeForce GTX750 |
| MSI GTX680-2GB/DDR5 | NVIDIA GeForce GTX680 |
| MSI N660Ti-PE-2GD5-OC/2G-DDR5 | NVIDIA GeForce GTX660Ti |
| MSI N680GTX Twin Frozr 2GD5/OC | NVIDIA GeForce GTX680 |
| GIGABYTE GV-N670OC-2GD | NVIDIA GeForce GTX670 |
| GIGABYTE GV-N650OC-1GI/1G-DDR5 | NVIDIA GeForce GTX650 |
| GIGABYTE GV-N590D5-3GD-B | NVIDIA GeForce GTX590 |
| MSI N580GTX-M2D15D5/1.5G | NVIDIA GeForce GTX580 |
| MSI N465GTX-M2D1G-B | NVIDIA GeForce GTX465 |
| LEADTEK GTX275/896M-DDR3 | NVIDIA GeForce GTX275 |
| LEADTEK PX8800 GTX TDH | NVIDIA GeForce 8800GTX |
| GIGABYTE GV-N26-896H-B | |

NVIDIA Quadro RTX for V-Ray Next

Image courtesy of © Dabarti Studio, rendered with V-Ray GPU

Quadro RTX Accelerates V-Ray Next GPU Rendering

V-Ray Next GPU taps into the power of NVIDIA® Quadro® RTX™ to speed up production rendering with dedicated RT Cores for ray tracing and Tensor Cores for AI-accelerated denoising.¹ With up to 18X faster rendering than CPU-based solutions and enhanced performance with NVIDIA NVLink™, V-Ray Next GPU with RTX support provides incredible performance improvements for your rendering workloads.

Solutions for V-Ray Next GPU

NVIDIA Quadro® provides a wide range of RTX-enabled solutions for desktop, mobile, server-based rendering, and virtual workstations with NVIDIA Quadro Virtual Data Center Workstation (Quadro vDWS) software.² With up to 96 gigabytes (GB) of GPU memory available,³ Quadro RTX provides the power you need for the largest professional graphics and rendering workloads.

“Accelerating artist productivity is always our top priority, so we’re quick to take advantage of the latest ray-tracing hardware breakthroughs. By supporting NVIDIA RTX™ in V-Ray GPU, we’re bringing our customers an exciting new boost in their GPU production rendering speeds.” – Phillip Miller, Vice President, Product Management, Chaos Group

[Figure: Benchmark: V-Ray Next GPU Rendering Performance Increase on Quadro RTX GPUs. Bars for Quadro RTX 6000 x2, Quadro RTX 6000, Quadro RTX 4000, and CPU; horizontal axis: Relative Performance, 0 to 20.]

Desktop performance: tests run on 1x Xeon Gold 6154 3 GHz (3.7 GHz Turbo), 64 GB DDR4 RAM, Win10 x64, driver version 441.28. Performance results may vary depending on the scene.

NVIDIA Quadro professional graphics solutions are verified and recommended for the most demanding projects by Chaos Group.

NVIDIA Tesla P40 GPU Accelerator

HIGH-PERFORMANCE VIRTUAL GRAPHICS AND COMPUTE

NVIDIA redefined visual computing by giving designers, engineers, scientists, and graphic artists the power to take on the biggest visualization challenges with immersive, interactive, photorealistic environments. NVIDIA® Quadro® Virtual Data Center Workstation (Quadro vDWS) takes advantage of NVIDIA® Tesla® GPUs to deliver virtual workstations from the data center. Architects, engineers, and designers are now liberated from their desks and can access applications and data anywhere.

The NVIDIA® Tesla® P40 GPU accelerator works with NVIDIA Quadro vDWS software and is the first system to combine an enterprise-grade visual computing platform for simulation, HPC rendering, and design with virtual applications, desktops, and workstations. This gives organizations the freedom to virtualize both complex visualization and compute (CUDA and OpenCL) workloads.

The NVIDIA® Tesla® P40 taps into the industry-leading NVIDIA Pascal™ architecture to deliver up to twice the professional graphics performance of the NVIDIA® Tesla® M60 (Refer to Performance Graph). With 24 GB of framebuffer and 24 NVENC encoder sessions, it supports 24 virtual desktops (1 GB profile) or 12 virtual workstations (2 GB profile), providing the best end-user scalability per GPU. This powerful GPU also supports eight different user profiles, so virtual GPU resources can be efficiently provisioned to meet the needs of the user.

| Specification | Tesla P40 |
| --- | --- |
| GPU | 1 NVIDIA Pascal GPU |
| CUDA Cores | 3,840 |
| Memory Size | 24 GB GDDR5 |
| H.264 1080p30 streams | 24 |
| Max vGPU instances | 24 (1 GB Profile) |
| vGPU Profiles | 1 GB, 2 GB, 3 GB, 4 GB, 6 GB, 8 GB, 12 GB, 24 GB |
| Form Factor | PCIe 3.0 Dual Slot (rack servers) |
| Power | 250 W |
| Thermal | Passive |
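The relationship between the 24 GB framebuffer and the number of virtual desktops per profile can be illustrated with a short Python sketch. The function name is hypothetical, and the sketch assumes that the instance count is limited only by framebuffer size and that all instances on the card use the same profile; real deployments may be subject to other limits not described in this datasheet excerpt.

```python
# Figures taken from the datasheet text: 24 GB framebuffer, eight vGPU profiles.
P40_FRAMEBUFFER_GB = 24
P40_PROFILES_GB = (1, 2, 3, 4, 6, 8, 12, 24)


def max_vgpu_instances(framebuffer_gb: int, profile_gb: int) -> int:
    """Instances that fit if framebuffer is the only limit (an assumption)."""
    if profile_gb <= 0 or profile_gb > framebuffer_gb:
        raise ValueError("profile must be between 1 GB and the framebuffer size")
    return framebuffer_gb // profile_gb


for profile in P40_PROFILES_GB:
    count = max_vgpu_instances(P40_FRAMEBUFFER_GB, profile)
    print(f"{profile:>2} GB profile -> {count} instance(s)")
```

Under these assumptions the 1 GB and 2 GB profiles give 24 and 12 instances, matching the counts quoted in the datasheet.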

NVIDIA Launches Tegra X1 Mobile Super Chip

Maxwell GPU Architecture Delivers First Teraflops Mobile Processor, Powering Deep Learning and Computer Vision Applications

NVIDIA today unveiled Tegra® X1, its next-generation mobile super chip with over one teraflops of processing power – delivering capabilities that open the door to unprecedented graphics and sophisticated deep learning and computer vision applications.

Tegra X1 is built on the same NVIDIA Maxwell™ GPU architecture rolled out only months ago for the world's top-performing gaming graphics card, the GeForce® GTX 980. The 256-core Tegra X1 provides twice the performance of its predecessor, the Tegra K1, which is based on the previous-generation Kepler™ architecture and debuted at last year's Consumer Electronics Show.

Tegra processors are built for embedded products, mobile devices, autonomous machines and automotive applications. Tegra X1 will begin appearing in the first half of the year. It will be featured in the newly announced NVIDIA DRIVE™ car computers. DRIVE PX is an auto-pilot computing platform that can process video from up to 12 onboard cameras to run capabilities providing Surround-Vision, for a seamless 360-degree view around the car, and Auto-Valet, for true self-parking. DRIVE CX is a complete cockpit platform designed to power the advanced graphics required across the increasing number of screens used for digital clusters, infotainment, head-up displays, virtual mirrors and rear-seat entertainment.

"We see a future of autonomous cars, robots and drones that see and learn, with seeming intelligence that is hard to imagine," said Jen-Hsun Huang, CEO and co-founder, NVIDIA.

GPU-Based Deep Learning Inference

Whitepaper

GPU-Based Deep Learning Inference: A Performance and Power Analysis

November 2015

Contents

- Abstract
- Introduction
- Inference versus Training
- GPUs Excel at Neural Network Inference
- Inference Optimizations in Caffe and cuDNN 4
- Experimental Setup and Testing Methodology
- Inference on Small and Large GPUs
- Conclusion
- References

Abstract

Deep learning methods are revolutionizing various areas of machine perception. On a

Fast Calculation of the Lomb-Scargle Periodogram Using Graphics Processing Units

Draft version August 7, 2018. Preprint typeset using LaTeX style emulateapj v. 11/10/09

FAST CALCULATION OF THE LOMB-SCARGLE PERIODOGRAM USING GRAPHICS PROCESSING UNITS

R. H. D. Townsend, Department of Astronomy, University of Wisconsin-Madison, Sterling Hall, 475 N. Charter Street, Madison, WI 53706, USA

ABSTRACT

I introduce a new code for fast calculation of the Lomb-Scargle periodogram, that leverages the computing power of graphics processing units (GPUs). After establishing a background to the newly emergent field of GPU computing, I discuss the code design and narrate key parts of its source. Benchmarking calculations indicate no significant differences in accuracy compared to an equivalent CPU-based code. However, the differences in performance are pronounced; running on a low-end GPU, the code can match 8 CPU cores, and on a high-end GPU it is faster by a factor approaching thirty. Applications of the code include analysis of long photometric time series obtained by ongoing satellite missions and upcoming ground-based monitoring facilities; and Monte-Carlo simulation of periodogram statistical properties.

Subject headings: methods: data analysis — methods: numerical — techniques: photometric — stars: oscillations

1. INTRODUCTION

Astronomical time-series observations are often characterized by uneven temporal sampling (e.g., due to transformation to the heliocentric frame) and/or non-uniform coverage (e.g., from day/night cycles, or radiation belt passages).

[...] of the newly emergent field of GPU computing; then, Section 3 reviews the formalism defining the L-S periodogram, and Section 4 presents a GPU-based code implementing this formalism. Benchmarking calculations to evaluate the accuracy and performance of the code [...]

MSI NVIDIA Drivers Download: Update MSI Graphics Card Driver on Windows 10 & 7

Update MSI Graphics Card Driver on Windows 10 & 7. Easily!

Updating your MSI graphics card driver helps you get high gaming performance, so it’s recommended that you keep the driver up to date. In this post, you’ll learn two ways to download and install the latest MSI graphics card driver:

1. Download the MSI graphics card driver manually
2. Download and install the MSI graphics card driver automatically

Way 1: Download the MSI graphics card driver manually

MSI provides graphics drivers on their website, for instance the NVIDIA graphics card driver and the AMD graphics card driver, so you can check for and download the latest driver for your graphics card there. Drivers can always be downloaded from the SUPPORT section. Go to the MSI website, enter the name of your graphics card, and perform a quick search. Then follow the on-screen instructions to download the driver you need. MSI regularly uploads new drivers to their website, so it’s recommended that you check for driver releases often in order to get the latest driver in time. If you don’t have the time and patience to download the driver manually, Way 2 may be a better option for you.

Way 2: Download and install the MSI graphics card driver automatically

If you don’t have the time, patience or computer skills to update the MSI graphics driver manually, you can do it automatically with Driver Easy. Driver Easy will automatically recognize your system and find the correct drivers for it.

True Random Number Generation Using Genetic Algorithms on High Performance Architectures

True Random Number Generation using Genetic Algorithms on High Performance Architectures

by Jose Juan Mijares Chan

A thesis submitted to The Faculty of Graduate Studies of The University of Manitoba in partial fulfillment of the requirements of the degree of Doctor of Philosophy

Department of Electrical and Computer Engineering, The University of Manitoba, Winnipeg, Manitoba, Canada. October 2016

© Copyright 2016 by Jose Juan Mijares Chan

Thesis advisors: Gabriel Thomas, Parimala Thulasiraman. Author: Jose Juan Mijares Chan

Abstract

Many real-world applications use random numbers generated by pseudo-random number generators and true random number generators (TRNG). Unlike pseudo-random number generators, which rely on an input seed to generate random numbers, a TRNG relies on a non-deterministic source to generate aperiodic random numbers. In this research, we develop a novel and generic software-based TRNG using a random source extracted from the compute architectures of today. We show that non-deterministic events such as race conditions between compute threads follow a near-Gamma distribution, independent of the architecture, multi-cores or co-processors. Our design improves the distribution towards a uniform distribution, ensuring the stationarity of the sequence of random variables. We improve the random numbers' statistical deficiencies by using a post-processing stage based on a heuristic evolutionary algorithm. Our post-processing algorithm is composed of two phases: (i) Histogram Specification and (ii) Stationarity Enforcement. We propose two techniques for histogram equalization, Exact Histogram Equalization (EHE) and Adaptive EHE (AEHE), that map the random numbers' distribution to a user-specified distribution.

Numerical Behavior of NVIDIA Tensor Cores

Numerical behavior of NVIDIA tensor cores

Massimiliano Fasi (1), Nicholas J. Higham (2), Mantas Mikaitis (2) and Srikara Pranesh (2)

(1) School of Science and Technology, Örebro University, Örebro, Sweden
(2) Department of Mathematics, University of Manchester, Manchester, UK

ABSTRACT

We explore the floating-point arithmetic implemented in the NVIDIA tensor cores, which are hardware accelerators for mixed-precision matrix multiplication available on the Volta, Turing, and Ampere microarchitectures. Using Volta V100, Turing T4, and Ampere A100 graphics cards, we determine what precision is used for the intermediate results, whether subnormal numbers are supported, what rounding mode is used, in which order the operations underlying the matrix multiplication are performed, and whether partial sums are normalized. These aspects are not documented by NVIDIA, and we gain insight by running carefully designed numerical experiments on these hardware units. Knowing the answers to these questions is important if one wishes to: (1) accurately simulate NVIDIA tensor cores on conventional hardware; (2) understand the differences between results produced by code that utilizes tensor cores and code that uses only IEEE 754-compliant arithmetic operations; and (3) build custom hardware whose behavior matches that of NVIDIA tensor cores. As part of this work we provide a test suite that can be easily adapted to test newer versions of the NVIDIA tensor cores as well as similar accelerators from other vendors, as they become available. Moreover, we identify a non-monotonicity issue

NVIDIA Ampere GA102 GPU Architecture Whitepaper

NVIDIA AMPERE GA102 GPU ARCHITECTURE

Second-Generation RTX

Updated with NVIDIA RTX A6000 and NVIDIA A40 Information. V2.0

Table of Contents

- Introduction
- GA102 Key Features
- 2x FP32 Processing
- Second-Generation RT Core
- Third-Generation Tensor Cores
- GDDR6X and GDDR6 Memory
- Third-Generation NVLink®
- PCIe Gen 4
- Ampere GPU Architecture In-Depth
- GPC, TPC, and SM High-Level Architecture
- ROP Optimizations
- GA10x SM Architecture
- 2x FP32 Throughput
- Larger and Faster Unified Shared Memory and L1 Data Cache
- Performance Per Watt
- Second-Generation Ray Tracing Engine in GA10x GPUs
- Ampere Architecture RTX Processors in Action
- GA10x GPU Hardware Acceleration for Ray-Traced Motion Blur
- Third-Generation Tensor Cores in GA10x GPUs
- Comparison of Turing vs GA10x GPU Tensor Cores
- NVIDIA Ampere Architecture Tensor Cores Support New DL Data Types
- Fine-Grained Structured Sparsity
- NVIDIA DLSS 8K
- GDDR6X Memory
- RTX IO
- Introducing NVIDIA RTX IO
- How NVIDIA RTX IO Works
- Display and Video Engine
- DisplayPort 1.4a with DSC 1.2a
- HDMI 2.1 with DSC 1.2a
- Fifth Generation NVDEC - Hardware-Accelerated Video Decoding
- AV1 Hardware Decode
- Seventh Generation NVENC - Hardware-Accelerated Video Encoding
- Conclusion
- Appendix A - Additional GeForce GA10x GPU Specifications
- GeForce RTX 3090
- GeForce RTX 3070
- Appendix B - New Memory Error Detection and Replay (EDR) Technology
- Appendix C - RTX A6000 GPU Performance

List of Figures

Figure 1.

PACKET 22 BOOKSTORE, TEXTBOOK CHAPTER Reading Graphics

A.11 GRAPHICS CARDS, Historical Perspective (edited by J Wunderlich PhD in 2020)

Graphics Pipeline Evolution

3D graphics pipeline hardware evolved from the large expensive systems of the early 1980s to small workstations and then to PC accelerators in the 1990s, to $X,000 graphics cards of the 2020s. During this period, three major transitions occurred:

1. The PRICE of performance-leading graphics subsystems changed from $50,000 in the 1980s down to $200 in the 1990s, then up to $X,000 in the 2020s.
2. PERFORMANCE increased from 50 million PIXELS PER SECOND in the 1980s to 1 billion pixels per second in the 1990s, and from 100,000 VERTICES PER SECOND to 10 million vertices per second in the 1990s. In the 2020s, performance is measured more in FRAMES PER SECOND (FPS).
3. Hardware RENDERING evolved from WIREFRAME to FILLED POLYGONS, to FULL-SCENE TEXTURE MAPPING.

Fixed-Function Graphics Pipelines

Throughout the early evolution, graphics hardware was configurable, but not programmable by the application developer. With each generation, incremental improvements were offered. But developers were growing more sophisticated and asking for more new features than could be reasonably offered as built-in fixed functions. The NVIDIA GeForce 3, described by Lindholm, et al. [2001], took the first step toward true general shader programmability. It exposed to the application developer what had been the private internal instruction set of the floating-point vertex engine. This coincided with the release of Microsoft’s DirectX 8 and OpenGL’s vertex shader extensions. Later GPUs, at the time of DirectX 9, extended general programmability and floating-point capability to the pixel fragment stage, and made texture available at the vertex stage.